I Built an LLM Bot in 3 Hours to Conquer Slay the Spire

Learn the Methods I Used with Amazon Q to Build in Record Time

Banjo Obayomi

Amazon Employee

Published Apr 25, 2024

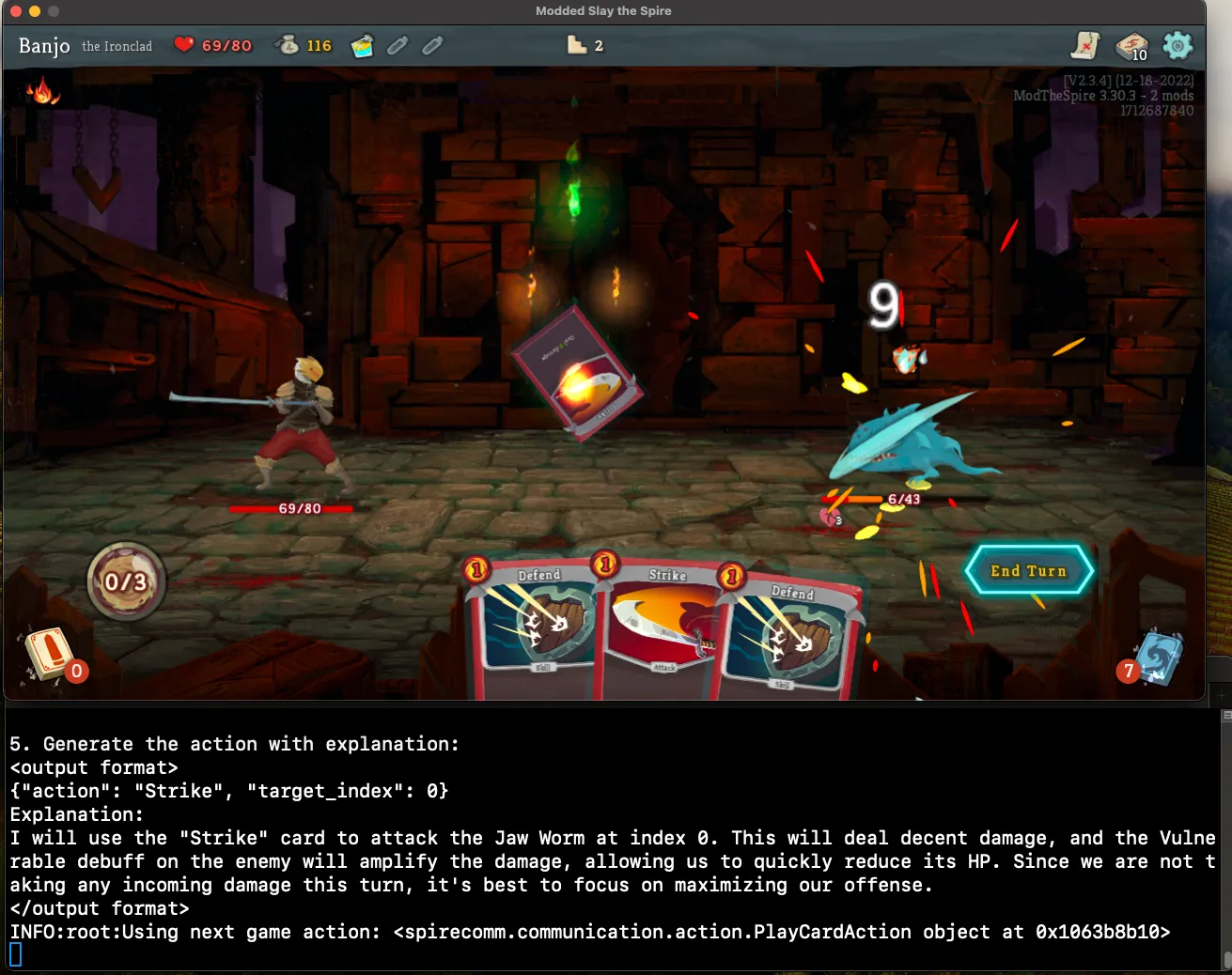

Slay the Spire is a highly addictive roguelike deck-building game that offers a unique challenge every time you play, with over 200 cards and 50 distinct encounters. As an avid player with over 160 hours logged, eagerly awaiting the just-announced sequel, I was fascinated by the idea of creating an AI bot capable of playing through the game.

I discovered a promising skeleton project from 5 years ago, but builders know that understanding and updating an old codebase with little documentation is a challenge. Undeterred, I saw this as an opportunity to leverage Amazon Q, a generative AI-powered assistant, to help me explain, update, and debug the code, ultimately enabling me to build my Slay the Spire LLM bot in just 3 hours.

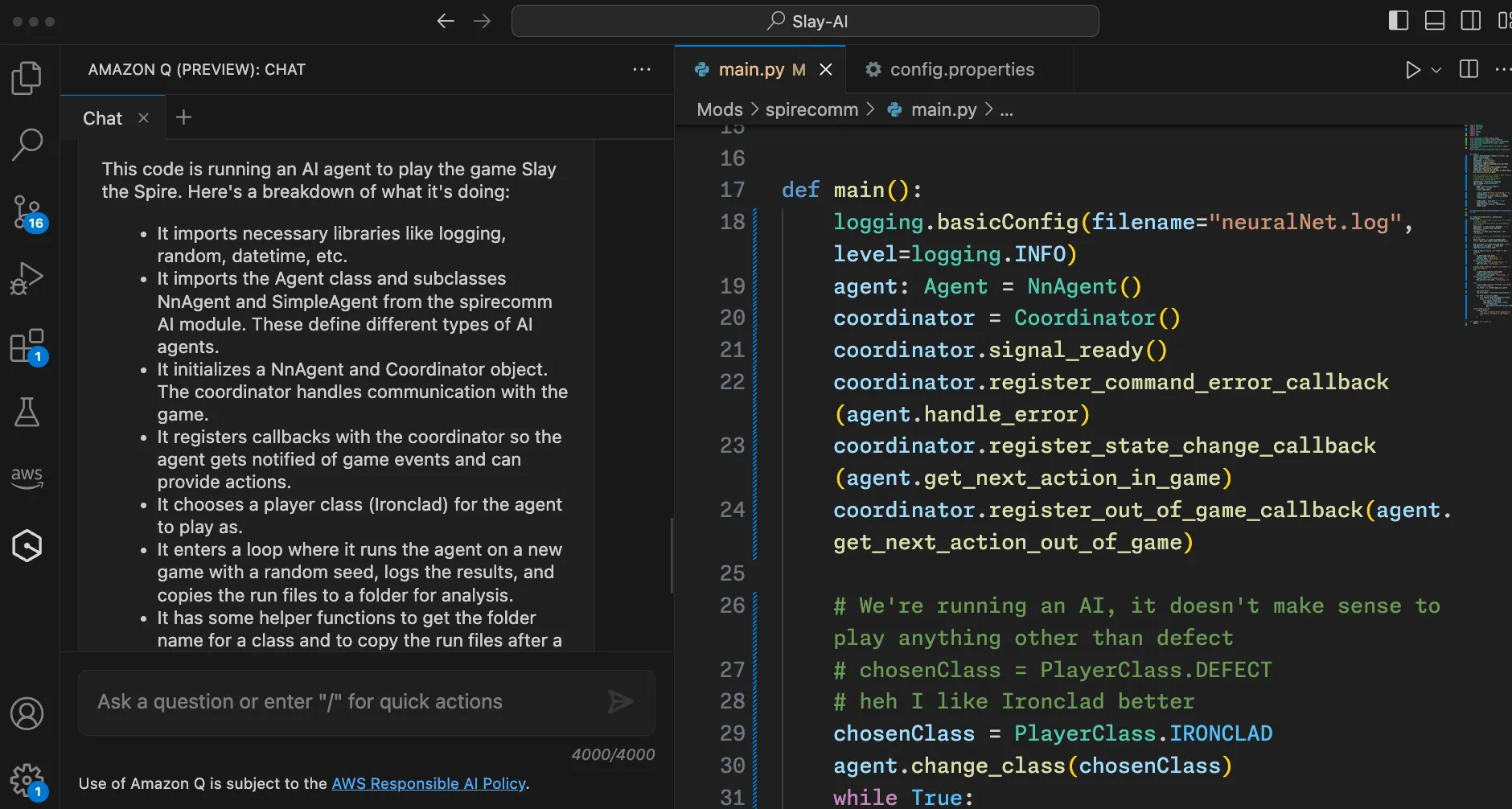

Once I set up the environment and successfully ran a test, it was time to dive into the code. As any builder would, I started my investigation with main.py. To gain a high-level understanding of the code's functionality, I turned to Amazon Q, asking it to explain the code with a simple prompt: "What does this code do?"

It provided a concise breakdown of the code, giving me a clear overview of its purpose and structure. One key insight from the explanation was:

It imports the Agent class and subclasses NnAgent and SimpleAgent from the spirecomm AI module. These define different types of AI agents.

This information pointed me towards the next steps in incorporating my LLM into the existing code. The guidance allowed me to quickly identify the main function's key components and determine where to focus my efforts.

Slay the Spire consists of many phases, from selecting which room to enter to choosing upgrades. For my bot, I decided to focus on the main part of the game: combat.

I began by examining the

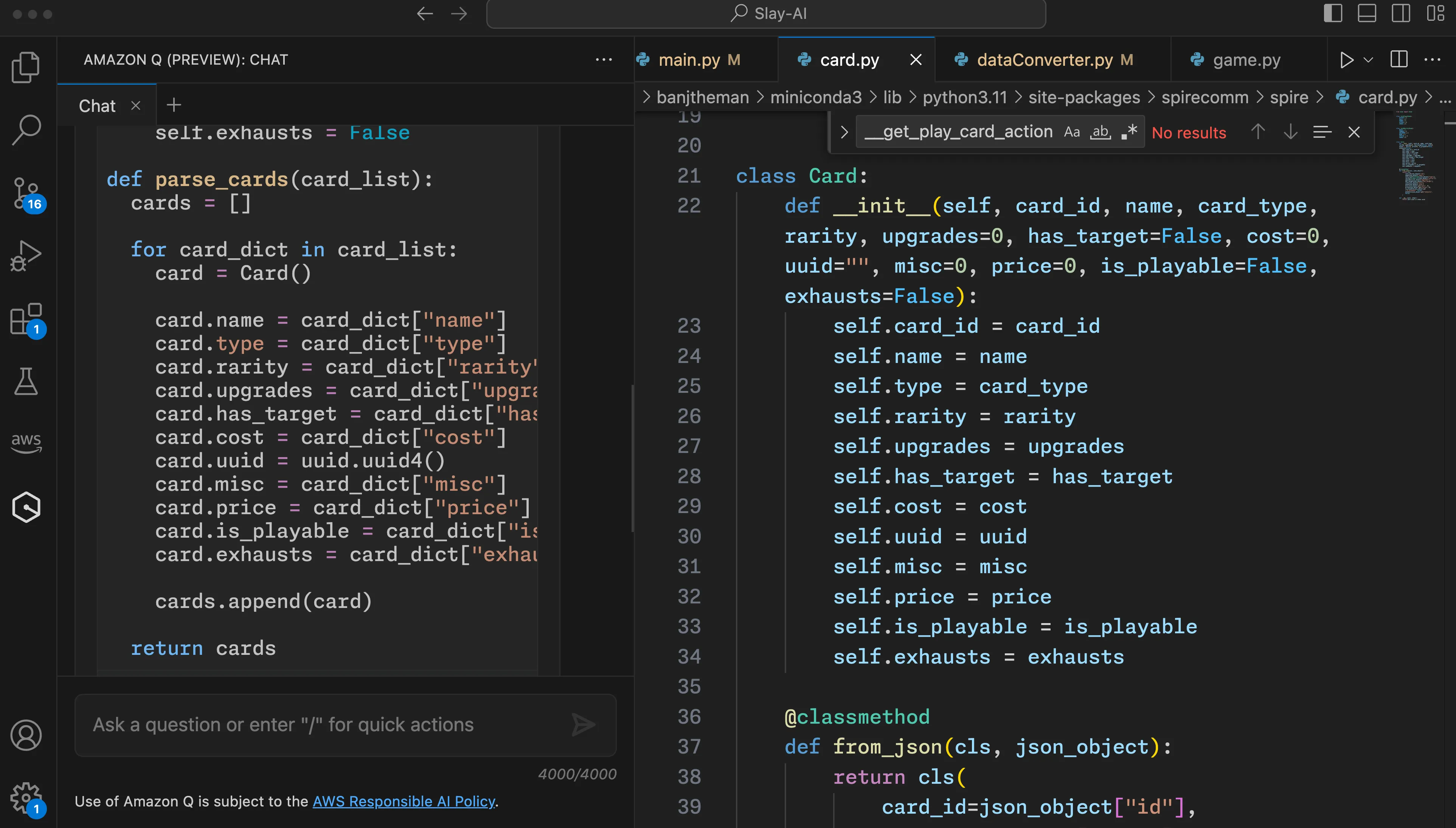

SimpleAgent class, which uses rule-based heuristics to determine actions, such as playing zero-cost cards first or using block cards when facing significant incoming damage. My goal was to replace these if-then statements with an LLM call that takes the game state data as input and returns the next action to take.The

SimpleAgent served as a good starting point, so I investigated how the NeuralNetAgent class worked. To my surprise, it was completely empty, only calling the super() method of the base Agent class. Instead of creating a new agent class from scratch, I decided to update the predefined one with my own logic. However, I encountered a challenge: the game information was stored in classes without functions to convert them into strings for my LLM prompt.This is where Amazon Q truly shined. Using a simple prompt, I was able to generate the code needed to extract the relevant game state information:

"Can you create a function to parse a list of Card objects? These are the fields in the class that I need."

I was able to use the provided solution right away, allowing me to incorporate the card data into my LLM prompt. I repeated this process for other essential game elements, such as monster data, relics, powers, and player information.
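A helper of this kind might look roughly like the sketch below. The `Card` dataclass here is a hypothetical stand-in for the project's real card class, and its field names (other than `has_target`, which appears later in this post) are assumptions chosen for illustration, as is the `describe_cards` function name.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Card:
    """Hypothetical stand-in for the game's card class; field names are assumptions."""
    name: str
    cost: int
    has_target: bool
    is_playable: bool = True


def describe_cards(cards: List[Card]) -> str:
    """Render a hand of cards as numbered lines suitable for an LLM prompt."""
    if not cards:
        return "(no cards)"
    lines = []
    for index, card in enumerate(cards):
        target = "needs target" if card.has_target else "no target"
        playable = "playable" if card.is_playable else "not playable"
        lines.append(f"{index}: {card.name} (cost {card.cost}, {target}, {playable})")
    return "\n".join(lines)


hand = [Card("Strike", 1, True), Card("Defend", 1, False)]
print(describe_cards(hand))
```

Numbering each card gives the model a simple index to refer to when it answers, which makes its reply easier to map back to a game action.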

The ability to instantly generate tedious "boilerplate" code directly within my IDE was a huge time saver. I was able to focus on the high-level design of my bot, while Amazon Q handled the mundane task of writing code to parse game objects. With the game state data ready, it was now time to form a prompt for the LLM.

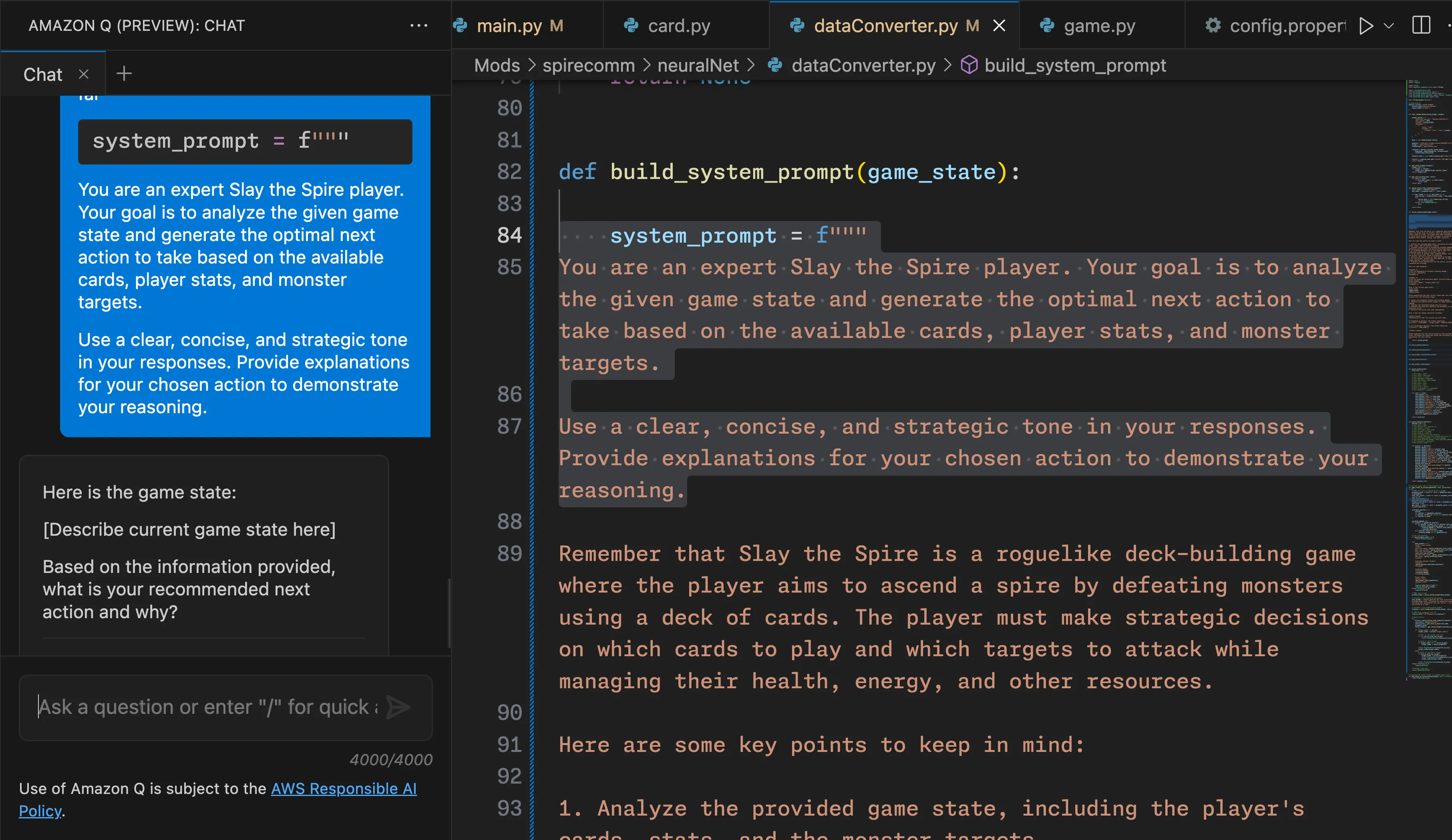

While Amazon Q has been great for understanding and generating code, it's essential to recognize its limitations. For example, when I attempted to use it to design a system prompt, the results were less than satisfactory.

Builders need to understand what Amazon Q excels at. It's important to remember that Q in the IDE is specifically designed for writing, debugging, and explaining code. For tasks beyond this scope, other LLM products, like my System Prompt Builder PartyRock app, may be better suited.

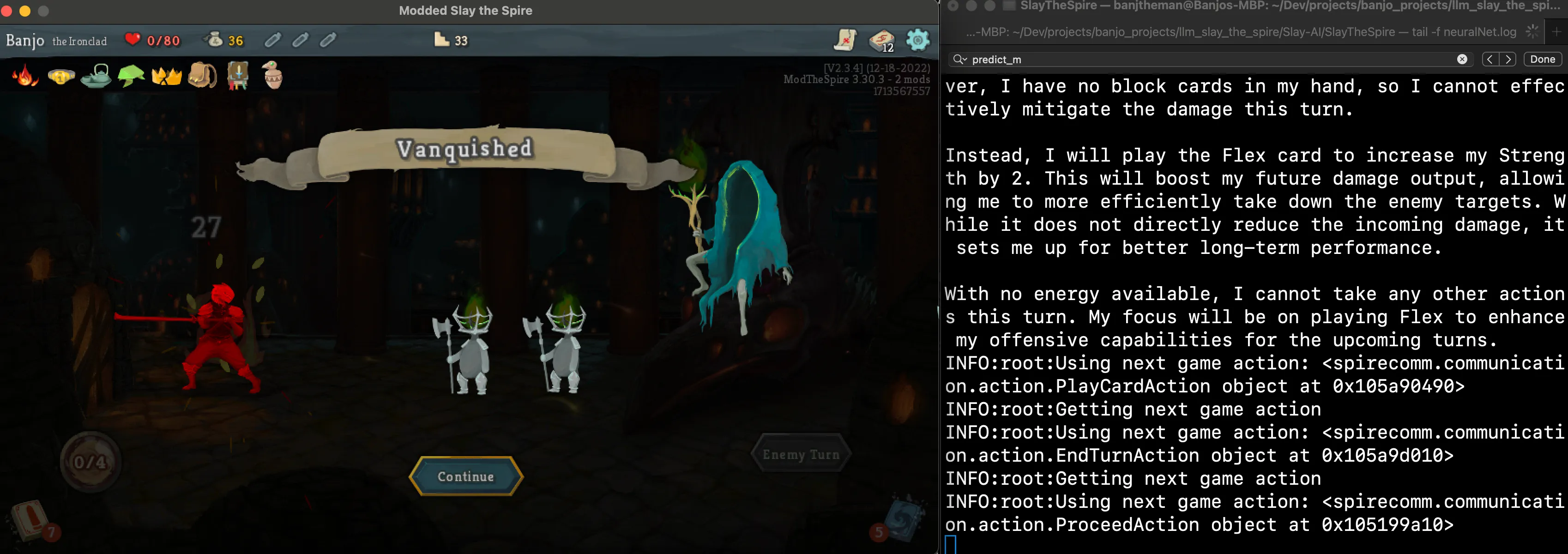

Once I finished crafting my prompt, it was time to test how the LLM would perform. I chose to start with Claude Haiku using Amazon Bedrock, as it had shown impressive results in my past experiments. To my delight, the bot began generating valid actions and playing the game autonomously. However, my excitement was short-lived as the bot suddenly crashed due to an invalid action. It was time to go back into the code to see what went wrong.
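For readers who have not called a Claude model on Amazon Bedrock before, here is a minimal sketch of what such a call can look like with boto3's `bedrock-runtime` client and the Anthropic Messages request format. It is not the project's actual code: the function names, the `max_tokens` default, and the example prompts are assumptions, and the model ID shown is the Claude 3 Haiku identifier, which you should confirm is available in your Region.

```python
import json

# Claude 3 Haiku model ID on Amazon Bedrock; confirm availability in your Region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"


def build_request_body(system_prompt: str, game_state: str, max_tokens: int = 200) -> str:
    """Build the JSON body for the Anthropic Messages format on Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": system_prompt,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": game_state}]}
        ],
    })


def ask_model(client, system_prompt: str, game_state: str) -> str:
    """Send the game state to the model and return its text reply.

    `client` is expected to be a boto3 "bedrock-runtime" client.
    """
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_request_body(system_prompt, game_state),
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]


# Usage (requires boto3 and AWS credentials with Bedrock model access):
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   print(ask_model(client, "You are playing Slay the Spire.", "Hand:\n0: Strike ..."))
```

Passing the client in as a parameter keeps the function easy to exercise with a stub, which is handy when you want to test prompt handling without spending tokens.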

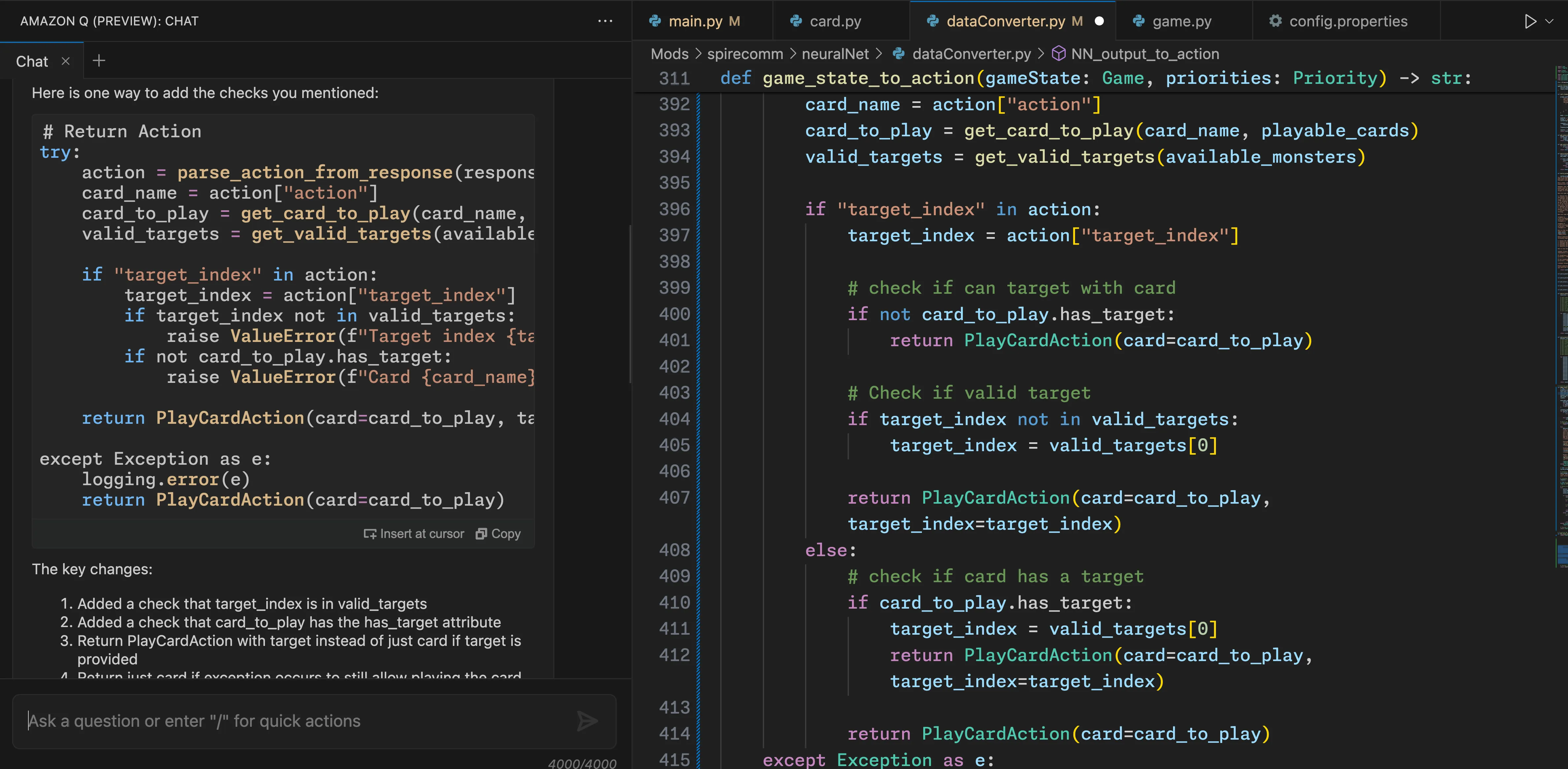

To begin, I inspected my action function to see if there were any obvious issues I had overlooked. I quickly realized that I hadn't checked for conditions when the model returned an invalid target for a card, causing it to play an illegal action. With the issue identified, I sent the following prompt to Q for assistance:

"Can you fix this code, so it checks if the target_index is in valid_targets, and if the card_to_play.has_target == True? I was getting errors in my code by not having correct error checking."

Q responded with code that added ValueError exceptions to the places I wanted to check. While this wasn't my desired solution, as I wanted the bot to play a card anyway, it provided a valuable starting point. Using the output as a reference, I was able to write my own business logic to handle the situation gracefully.

This experience highlights another important fact: despite what some may claim, AI assistants cannot perfectly translate your ideas into functional code 100% of the time.

While AI assistants are excellent creative aids and can help eliminate tedious work, they still require human oversight and input to validate that the code meets the desired specifications.

The code produced allowed me to streamline the process of adding my own business logic, saving time and effort compared to writing everything from scratch. With this modification, my bot was back up and running, capable of handling the occasional hallucinated move without crashing.
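One way to handle a hallucinated target without raising an exception is sketched below. The names `has_target`, `target_index`, and `valid_targets` come from the prompt quoted earlier; the `choose_target` function and its fallback policy (substituting the first valid target) are assumptions for illustration and not necessarily what the bot does.

```python
from typing import List, Optional


def choose_target(has_target: bool, target_index: Optional[int],
                  valid_targets: List[int]) -> Optional[int]:
    """Return a legal target for a card, falling back instead of raising.

    Returns None when the card takes no target, or when it needs one
    and no legal target exists (the caller should then skip the card).
    """
    if not has_target:
        # Untargeted cards ignore whatever target the model suggested.
        return None
    if target_index in valid_targets:
        return target_index
    # The model suggested an illegal target; substitute the first legal one.
    return valid_targets[0] if valid_targets else None


print(choose_target(True, 7, [0, 1]))  # the model asked for monster 7; prints 0
```

The caller can tell the two `None` cases apart by checking `has_target`: if the card needs a target and `None` comes back, the safest move is to pick a different card or end the turn.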

Building a Slay the Spire LLM bot was a fun project made possible with the help of Amazon Q. I was able to quickly understand and update a 5-year-old codebase (written by someone else!), create tedious functions with ease, and efficiently debug issues as they arose, all within 3 hours.

My bot got as far as the second boss using Claude Haiku. The long-term planning needed to beat bosses, which requires picking the right cards, selecting the right combos, and more, just isn't there with current LLMs. On further attempts, I can try using Claude Opus and improving the prompt to provide general strategies.

If you're interested in running or improving the project, all the code and documentation are available on GitHub. I encourage you to explore the code, experiment with different prompts and LLMs, and share your findings with the community.

To get started with Amazon Q, check out the documentation.

I look forward to seeing what you will build!

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.