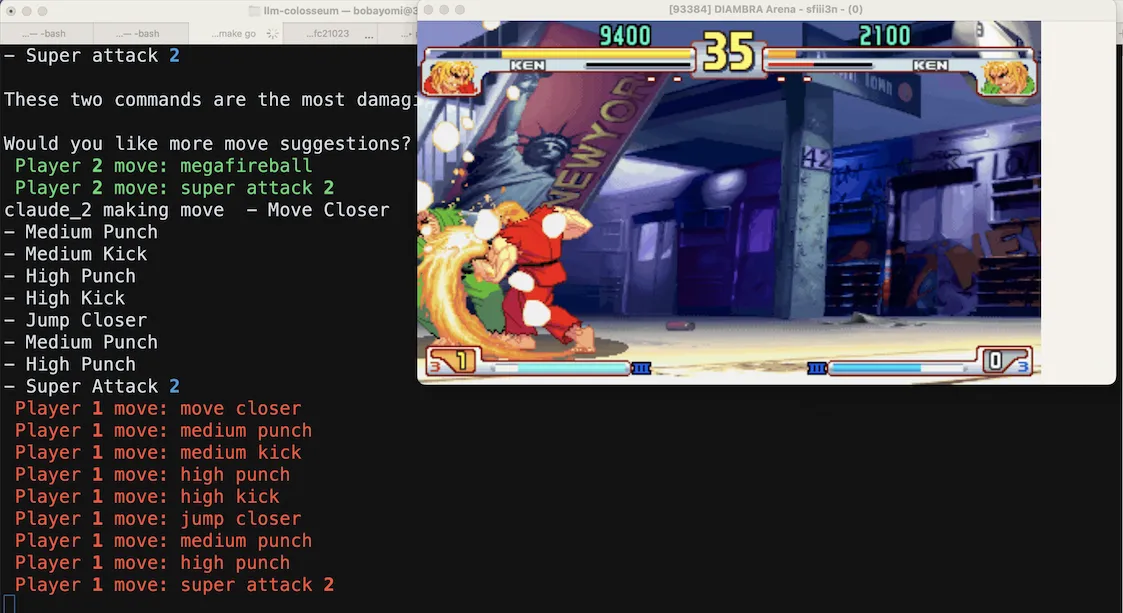

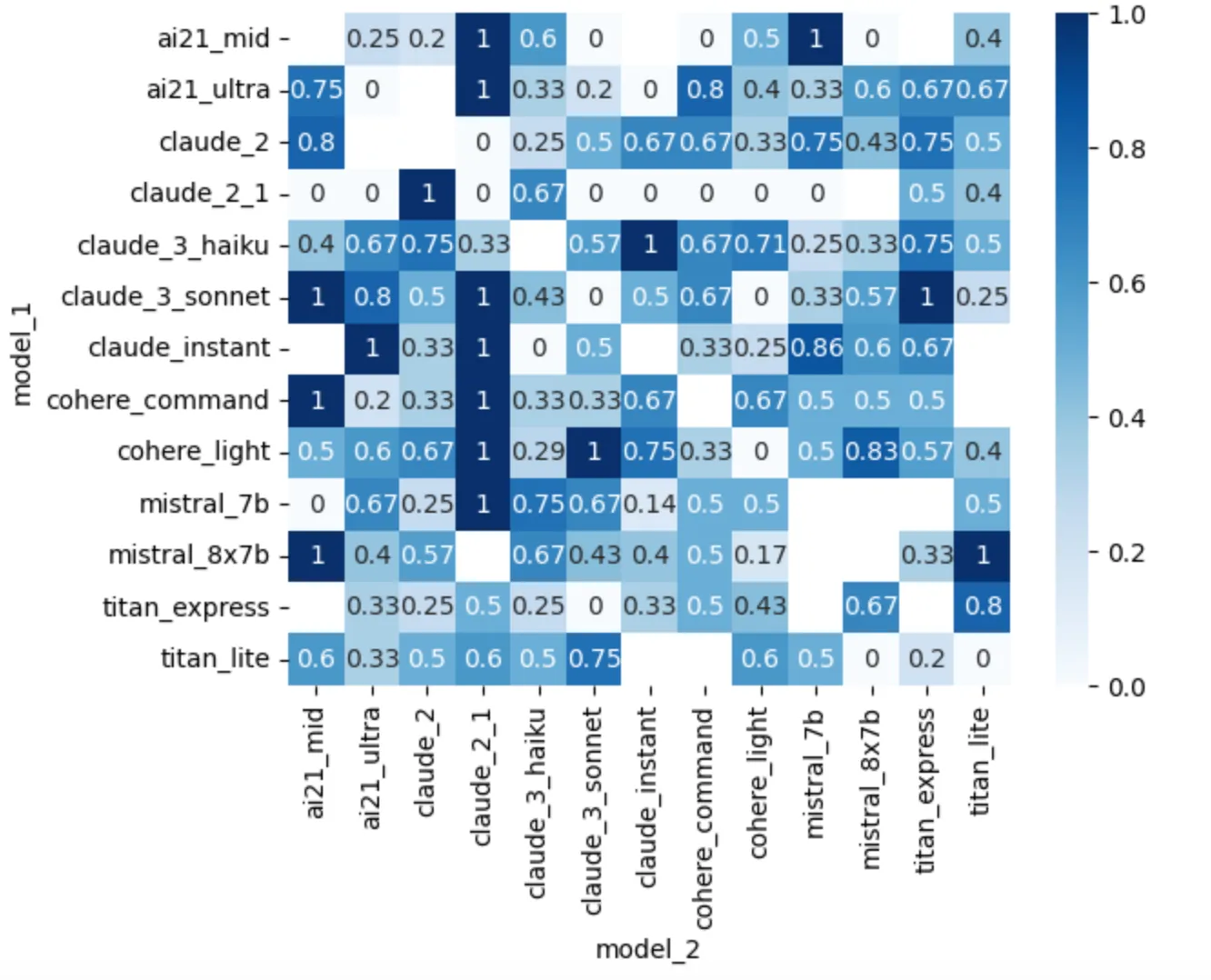

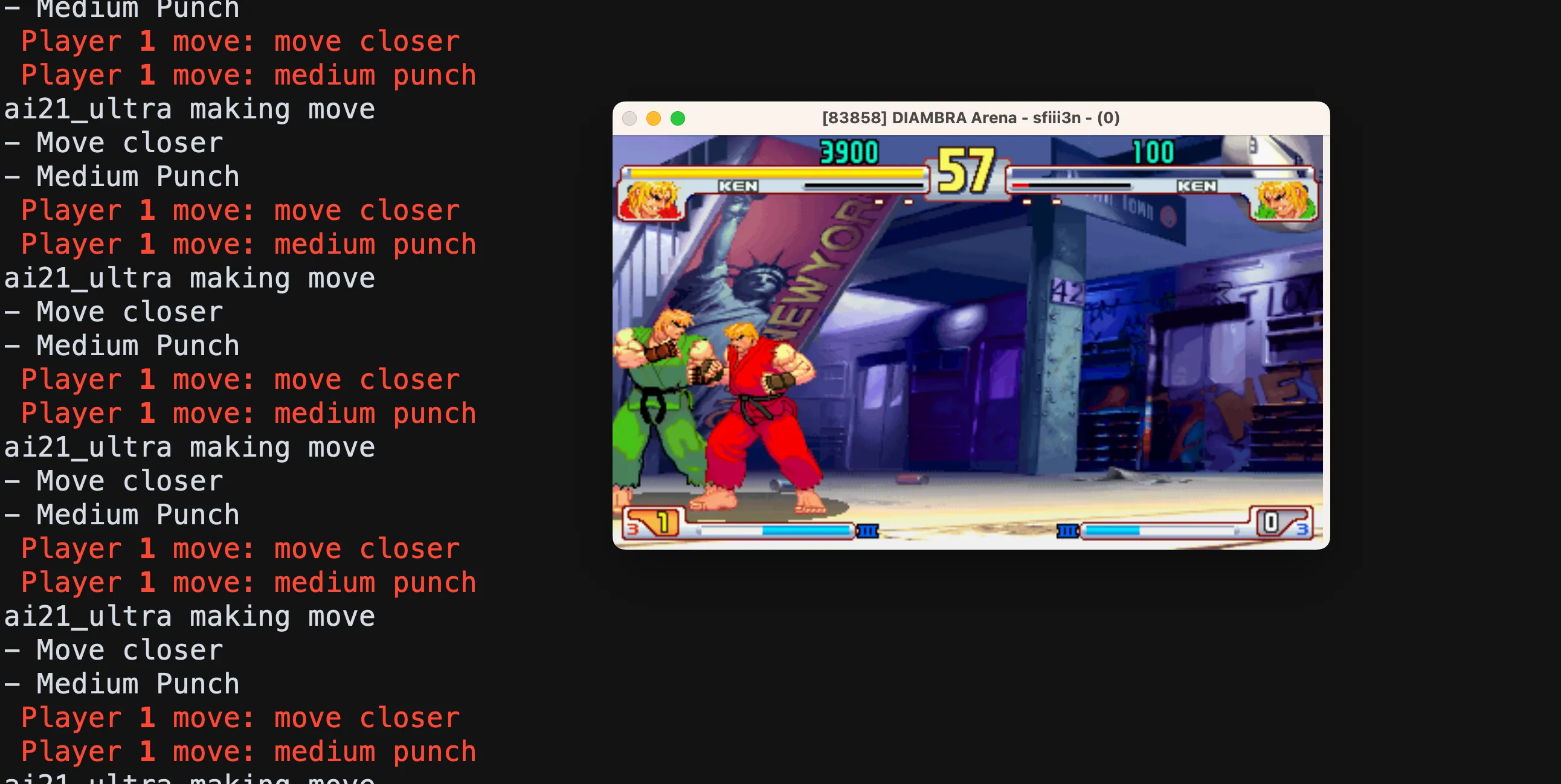

14 LLMs fought 314 Street Fighter matches. Here's who won

I benchmarked models in an actual chatbot arena

| Model | Elo |
|---|---|
| 🥇 claude_3_haiku | 1613.45 |
| 🥈 claude_3_sonnet | 1557.25 |
| 🥉 claude_2 | 1554.98 |
| claude_instant | 1548.16 |
| cohere_light | 1527.07 |
| cohere_command | 1511.45 |
| titan_express | 1502.56 |
| mistral_7b | 1490.06 |
| ai21_ultra | 1477.17 |
| mistral_8x7b | 1450.07 |
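The table reports Elo ratings, but the post does not say exactly how they were computed. The sketch below shows the standard Elo update for a single match. It assumes a K-factor of 32 and a starting rating of 1500 for every model. Neither value is stated in the post. The model names and match results are hypothetical placeholders, not data from the benchmark.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard logistic Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(
    rating_a: float, rating_b: float, score_a: float, k: float = 32.0
) -> tuple[float, float]:
    """Return the updated (rating_a, rating_b) after one match.

    score_a is 1.0 if A wins, 0.0 if A loses, and 0.5 for a draw.
    k = 32 is an ASSUMED K-factor; the post does not state which value was used.
    """
    expected_a = expected_score(rating_a, rating_b)
    delta = k * (score_a - expected_a)
    # Zero-sum: whatever A gains, B loses.
    return rating_a + delta, rating_b - delta


# Hypothetical example: two models, both starting at an ASSUMED rating of 1500.
ratings = {"model_a": 1500.0, "model_b": 1500.0}
# Hypothetical match results as (winner, loser) pairs, not real benchmark data.
matches = [("model_a", "model_b"), ("model_a", "model_b"), ("model_b", "model_a")]
for winner, loser in matches:
    ratings[winner], ratings[loser] = update_elo(ratings[winner], ratings[loser], 1.0)

print(ratings)
```

Two properties follow from this formulation. A win against a higher-rated opponent moves the ratings more than a win against a lower-rated one. The total rating across all models also stays constant, so the leaderboard values spread out around the shared starting point as matches accumulate.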

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.