Optimize DB Recovery:Multi-Thread PostgreSQL Restores on Amazon Aurora Serverless

Unlocking Restore Speed and Efficiency in Cloud Database Management and minimizing the RTO and RPO by restoring a single database.

- Postgres tools were installed directly on the EC2 instance or a workstation with access to the Amazon RDS instance for this demonstration. An Amazon Linux instance was utilized to install Postgres and execute the subsequent commands.

1

sudo dnf install postgresql15- The instance profile has access to the Secret Manager, which stores the credentials and connection details for the Amazon Aurora Serverless cluster.

- Copy the script below to the instance with access to the Amazon Aurora Serverless cluster and the Secret.

- Currently, the script doesn't automatically create the target database. However, this feature can be conveniently incorporated into the script if required. For the time being, manually connect to the RDS and initiate the creation of a target database with the command:

CREATE DATABASE Demo;, this feature can be conveniently incorporated into the script if required - Modify the

secret_nameon line 4 to match your secret's name. Additionally, the script logs the restoration duration by writing to the output file named.pg_restore_log.txt.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

# Name of the secret in AWS Secrets Manager

secret_name="postgres/credentials"

# Path to the log file where start and finish times will be written

LOG_FILE="./pg_restore_log.txt"

# Capture and write the start time to the log file

echo "Start time: $(date)" > $LOG_FILE

# Retrieve the password from AWS Secrets Manager

secret_value=$(aws secretsmanager get-secret-value --secret-id $secret_name --query 'SecretString' --output text)

echo $secret_value

# Check if the secret was successfully retrieved

if [ -z "$secret_value" ]; then

echo "Failed to retrieve secret from AWS Secrets Manager."

echo "Failed to retrieve secret from AWS Secrets Manager." >> $LOG_FILE

exit 1

fi

PASSWORD=$(echo $secret_value | jq -r '.password')

HOST=$(echo $secret_value | jq -r '.host')

USERNAME=$(echo $secret_value | jq -r '.username')

ENGINE=$(echo $secret_value | jq -r '.engine')

DUMP_FILE_PATH="./latest.dump"

JOB_NUMBER=8

DB_NAME_TO_RESTORE="Demo"

echo "PASSWORD: $PASSWORD"

echo "HOST: $HOST"

echo "USERNAME: $USERNAME"

echo "ENGINE: $ENGINE"

echo "DUMP_FILE_PATH: $DUMP_FILE_PATH"

echo "JOBS_NUMBER: $JOBS_NUMBER"

echo "DB_NAME_TO_RESTORE: $DB_NAME_TO_RESTORE"

# Check if the values were successfully extracted

if [ -z "$PASSWORD" ] || [ -z "$HOST" ] || [ -z "$USERNAME" ] || [ -z "$ENGINE" ]; then

echo "Failed to extract database credentials from JSON file."

exit 1

fi

# Export PGPASSWORD for PostgreSQL authentication

export PGPASSWORD=$PASSWORD

# Build the pg_restore command with all options and arguments

PG_COMMAND="pg_restore --verbose --clean --no-acl --no-owner -h $HOST -U $USERNAME -d $DB_NAME_TO_RESTORE -j $JOB_NUMBER $DUMP_FILE_PATH"

echo "PG_COMMAND:$PG_COMMAND"

# Execute the pg_restore command using eval to interpret the string as a command

$eval $PG_COMMAND

# Unset the PGPASSWORD to avoid leaving sensitive information in the environment

unset PGPASSWORD

# Capture and write the finish time to the log file

echo "Finish time: $(date)" >> $LOG_FILE1

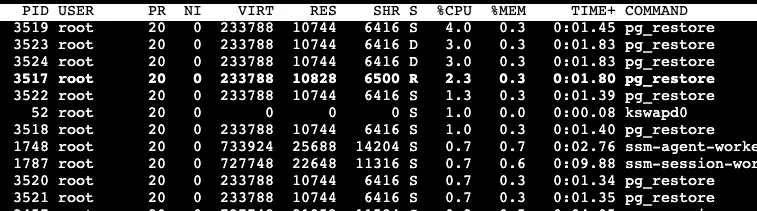

watch "ps aux | grep pg_restore | grep -v grep"