Format and Parse Amazon S3 URLs

Find the right Amazon S3 URL

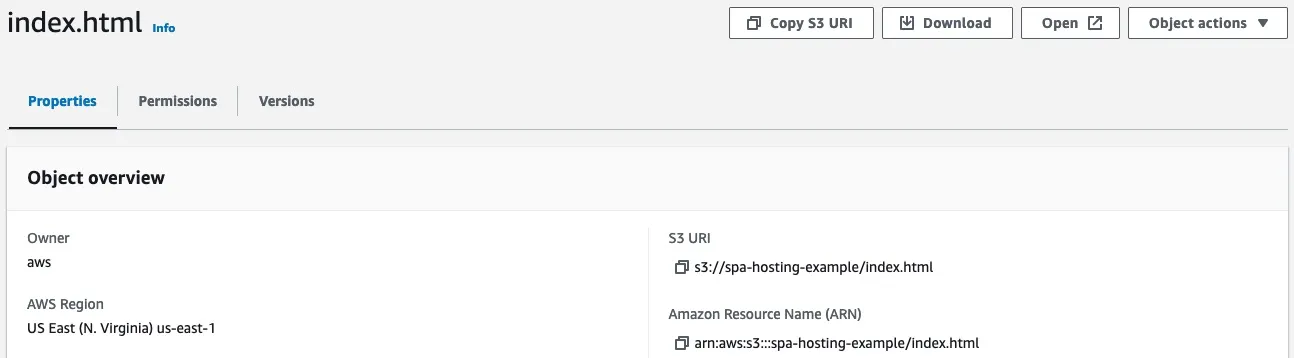

Amazon S3 URLs come in many shapes. Some start with `s3:`, `http:`, or `https:`. Then there are the ones with `s3.amazonaws.com`, `s3.us-east-1.amazonaws.com`, or even `s3-us-west-2.amazonaws.com` (note the dash instead of the dot between `s3` and the region code). And where do you put the bucket: is it `<bucket>.s3.us-east-1.amazonaws.com/<key>` or `s3.us-east-1.amazonaws.com/<bucket>/<key>`? And when it comes to static website hosting, of course, there are also `<bucket>.s3-website-us-east-1.amazonaws.com` and `<bucket>.s3-website.eu-central-1.amazonaws.com` (again, note the dash and the dot).

The simplest format is the S3 URI: `s3://<bucket>/<key>`. The AWS Management Console displays this format, too.
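Because the S3 URI is just the bucket followed by the key, it is easy to build and take apart by hand. Here is a minimal sketch; the names `toS3Uri`, `fromS3Uri`, and `S3Location` are hypothetical (not from `amazon-s3-url` or any AWS SDK), and it assumes keys appear literally, without percent-encoding.

```typescript
// Hypothetical helpers, not part of amazon-s3-url or any AWS SDK.
// Assumption: keys in s3:// URIs are used literally (no percent-encoding).
type S3Location = { bucket: string; key: string };

function toS3Uri({ bucket, key }: S3Location): string {
  return `s3://${bucket}/${key}`;
}

function fromS3Uri(uri: string): S3Location {
  // The bucket runs up to the first "/"; the key is the (non-empty) rest
  // and may itself contain more slashes.
  const match = /^s3:\/\/([^/]+)\/(.+)$/.exec(uri);
  if (!match) throw new Error(`Not an S3 URI: ${uri}`);
  return { bucket: match[1], key: match[2] };
}

const uri = toS3Uri({ bucket: "my-bucket", key: "photos/2024/cat.jpg" });
console.log(uri); // s3://my-bucket/photos/2024/cat.jpg
console.log(fromS3Uri(uri).key); // photos/2024/cat.jpg
```

Note that "folders" in the key are only part of the key string; the sketch treats everything after the bucket as one opaque key.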

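For HTTPS, the bucket name goes either into the path (path-style):

```
https://s3.<region>.amazonaws.com/<bucket>/<key>
```

or into the hostname (virtual-hosted-style):

```
https://<bucket>.s3.<region>.amazonaws.com/<key>
```

Some regions, like `us-east-1`, also have a legacy global endpoint that doesn't need a region code in the hostname: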

```
# Legacy hostname with path-style
https://s3.amazonaws.com/<bucket>/<key>

# Legacy hostname with virtual-hosted-style
https://<bucket>.s3.amazonaws.com/<key>
```

The regional hostnames support the same two styles:

```
# Regional hostname with path-style
https://s3.<region>.amazonaws.com/<bucket>/<key>

# Regional hostname with virtual-hosted-style
https://<bucket>.s3.<region>.amazonaws.com/<key>
```

Some regions use a dash `-` instead of a dot `.` between `s3` and `<region>`:

```
# Dot-style
https://s3.<region>.amazonaws.com/<bucket>/<key>

# Dash-style
https://s3-<region>.amazonaws.com/<bucket>/<key>
```

For example, the `us-west-2` region supports the legacy dash-style URL `https://s3-us-west-2.amazonaws.com/<bucket>/<key>`. Nevertheless, the standard format `https://s3.us-west-2.amazonaws.com/<bucket>/<key>` is also available for these outliers.

All these REST endpoints use an `s3.amazonaws.com` or `s3.<region>.amazonaws.com` style hostname, but, more importantly, they support secure HTTPS connections. That means all these URLs work with `https://` as the protocol.
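To make the combinations above concrete, here is a small sketch that builds the HTTPS REST URL from a bucket, a key, and an optional region. The names `formatRestUrl`, `s3Host`, and the option values are hypothetical and not part of `amazon-s3-url`. The code assumes you already know whether your region offers a dash-style hostname; it does not check that.

```typescript
// Hypothetical sketch, not the amazon-s3-url implementation.
type Addressing = "path" | "virtual-host";
type HostStyle = "legacy" | "regional-dot" | "regional-dash";

type RestUrlOptions = {
  bucket: string;
  key: string;
  region?: string; // required for the regional hostnames, ignored for "legacy"
  addressing: Addressing;
  hostStyle: HostStyle;
};

// Picks the hostname. Does NOT verify that a region actually offers dash-style.
function s3Host(hostStyle: HostStyle, region?: string): string {
  if (hostStyle === "legacy") return "s3.amazonaws.com";
  if (!region) throw new Error(`"${hostStyle}" hostnames need a region`);
  return hostStyle === "regional-dot"
    ? `s3.${region}.amazonaws.com`
    : `s3-${region}.amazonaws.com`;
}

function formatRestUrl(o: RestUrlOptions): string {
  const host = s3Host(o.hostStyle, o.region);
  // Percent-encode each key segment but keep "/" as the separator.
  const path = o.key.split("/").map(encodeURIComponent).join("/");
  return o.addressing === "path"
    ? `https://${host}/${o.bucket}/${path}`
    : `https://${o.bucket}.${host}/${path}`;
}

const opts = { bucket: "my-bucket", key: "photos/cat.jpg", region: "us-west-2" };
console.log(formatRestUrl({ ...opts, addressing: "path", hostStyle: "regional-dot" }));
// https://s3.us-west-2.amazonaws.com/my-bucket/photos/cat.jpg
console.log(formatRestUrl({ ...opts, addressing: "virtual-host", hostStyle: "regional-dot" }));
// https://my-bucket.s3.us-west-2.amazonaws.com/photos/cat.jpg
console.log(formatRestUrl({ ...opts, addressing: "path", hostStyle: "regional-dash" }));
// https://s3-us-west-2.amazonaws.com/my-bucket/photos/cat.jpg
console.log(formatRestUrl({ ...opts, addressing: "virtual-host", hostStyle: "legacy" }));
// https://my-bucket.s3.amazonaws.com/photos/cat.jpg
```

The hostname and the addressing style are independent choices, which is why two small functions cover all the REST variants listed above.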

Static website hosting endpoints are different: they don't support HTTPS, so their URLs start with `http://`:

```
# Website hostname with dot-style
http://<bucket>.s3-website.<region>.amazonaws.com/<key>

# Website hostname with dash-style
http://<bucket>.s3-website-<region>.amazonaws.com/<key>
```

Depending on the region, either a dash `-` or a dot `.` separates `s3-website` and `<region>`. To see which one is right for your region, check the list of Amazon S3 website endpoints.

Which format you need depends on the client. The AWS CLI, for example, uses `s3://<bucket>/<key>`. Other clients and SDKs probably use the regional REST endpoint with the bucket name either in the hostname or in the path.
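Turning an S3 URI into the HTTPS URL such a client would request needs one extra piece of information: the bucket's region, because `s3://` URIs don't carry it. A minimal sketch, using a hypothetical `s3UriToHttps` helper (not part of any AWS SDK or `amazon-s3-url`) that always uses the dot-style regional hostname:

```typescript
// Hypothetical helper, not part of any AWS SDK or of amazon-s3-url.
type Addressing = "path" | "virtual-host";

function s3UriToHttps(uri: string, region: string, addressing: Addressing = "virtual-host"): string {
  // s3://<bucket>/<key>: the key is everything after the first "/" following the bucket
  const match = /^s3:\/\/([^/]+)\/(.+)$/.exec(uri);
  if (!match) throw new Error(`Not an S3 URI: ${uri}`);
  const [, bucket, key] = match;
  // Percent-encode each key segment but keep "/" as the separator.
  const path = key.split("/").map(encodeURIComponent).join("/");
  return addressing === "path"
    ? `https://s3.${region}.amazonaws.com/${bucket}/${path}`
    : `https://${bucket}.s3.${region}.amazonaws.com/${path}`;
}

console.log(s3UriToHttps("s3://my-bucket/reports/2024 q1.csv", "eu-west-1"));
// https://my-bucket.s3.eu-west-1.amazonaws.com/reports/2024%20q1.csv
console.log(s3UriToHttps("s3://my-bucket/reports/2024 q1.csv", "eu-west-1", "path"));
// https://s3.eu-west-1.amazonaws.com/my-bucket/reports/2024%20q1.csv
```

One design caveat: with virtual-hosted-style over HTTPS, bucket names that contain dots don't match the wildcard TLS certificate for `*.s3.<region>.amazonaws.com`, so path-style is the safer choice for such buckets.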

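If a client sends the request to the wrong region, Amazon S3 rejects it with a redirect error like this one:

```
com.amazonaws.services.s3.model.AmazonS3Exception:
The bucket is in this region: eu-west-1.
Please use this region to retry the request (Service: Amazon S3; Status Code: 301; Error Code: PermanentRedirect;)
```

To deal with all these formats, the `amazon-s3-url` package provides three functions: `formatS3Url`, `parseS3Url`, and `isS3Url`.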


```typescript
import { formatS3Url, parseS3Url, isS3Url, S3Object } from 'amazon-s3-url';

/*
 * Types and signatures provided by the package (shown for reference;
 * redeclaring them here would conflict with the import above).
 *
 * type S3UrlFormat =
 *   | "s3-global-path"
 *   | "s3-legacy-path"
 *   | "s3-legacy-virtual-host"
 *   | "https-legacy-path"
 *   | "https-legacy-virtual-host"
 *   | "s3-region-path"
 *   | "s3-region-virtual-host"
 *   | "https-region-path"
 *   | "https-region-virtual-host";
 *
 * type S3Object = {
 *   bucket: string;
 *   key: string;
 *   region?: string;
 * };
 *
 * function formatS3Url(s3Object: S3Object, format?: S3UrlFormat): string;
 * function parseS3Url(s3Url: string, format?: S3UrlFormat): S3Object;
 * function isS3Url(s3Url: string, format?: S3UrlFormat): boolean;
 */

/* Examples */

// Global path
// Without format param (defaults to s3-global-path)
formatS3Url({ bucket: 'bucket', key: 'key' });
parseS3Url('s3://bucket/key');
isS3Url('s3://bucket/key');

// Legacy path-style
// With format param for explicit formatting and parsing
formatS3Url({ bucket: 'bucket', key: 'key' }, 'https-legacy-path');
parseS3Url('https://s3.amazonaws.com/bucket/key', 'https-legacy-path');
isS3Url('https://s3.amazonaws.com/bucket/key', 'https-legacy-path');

// Regional virtual-hosted-style
// With region property for regional endpoints
formatS3Url({ region: 'us-west-1', bucket: 'bucket', key: 'key' }, 'https-region-virtual-host');
parseS3Url('https://bucket.s3.us-west-1.amazonaws.com/key', 'https-region-virtual-host');
isS3Url('https://bucket.s3.us-west-1.amazonaws.com/key', 'https-region-virtual-host');
```
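The package's source isn't shown here. As a rough idea of how URLs in these formats could be told apart, here is a regex-based sketch. `parseAnyS3Url` and its style labels are hypothetical and don't correspond to the package's `S3UrlFormat` values or behavior. The website pattern is tried before the virtual-hosted one because `<bucket>.s3-website-<region>` would otherwise also match as a dash-style virtual host.

```typescript
// Hypothetical sketch, not the amazon-s3-url implementation.
type S3UrlStyle = "s3-uri" | "website" | "path" | "virtual-host";

type ParsedS3Url = {
  style: S3UrlStyle;
  bucket: string;
  key: string;
  region?: string; // undefined for s3:// URIs and the legacy global endpoint
};

// Capture-group positions differ per pattern, so each rule records them.
type Rule = { style: S3UrlStyle; re: RegExp; bucket: number; key: number; region?: number };

// Order matters: "website" must be tried before "virtual-host".
const RULES: Rule[] = [
  { style: "s3-uri", re: /^s3:\/\/([^/]+)\/(.+)$/, bucket: 1, key: 2 },
  {
    style: "website",
    re: /^https?:\/\/([^/]+)\.s3-website[.-]([a-z0-9-]+)\.amazonaws\.com\/(.*)$/,
    bucket: 1, region: 2, key: 3,
  },
  {
    style: "path",
    re: /^https?:\/\/s3(?:[.-]([a-z0-9-]+))?\.amazonaws\.com\/([^/]+)\/(.+)$/,
    region: 1, bucket: 2, key: 3,
  },
  {
    style: "virtual-host",
    re: /^https?:\/\/([^/]+)\.s3(?:[.-]([a-z0-9-]+))?\.amazonaws\.com\/(.+)$/,
    bucket: 1, region: 2, key: 3,
  },
];

function parseAnyS3Url(input: string): ParsedS3Url | undefined {
  for (const rule of RULES) {
    const m = rule.re.exec(input);
    if (!m) continue;
    const rawKey = m[rule.key];
    return {
      style: rule.style,
      bucket: m[rule.bucket],
      // HTTP(S) keys are percent-encoded; s3:// keys are taken literally.
      key: rule.style === "s3-uri" ? rawKey : decodeURIComponent(rawKey),
      // An optional region group that didn't match yields undefined.
      region: rule.region === undefined ? undefined : m[rule.region],
    };
  }
  return undefined;
}

console.log(parseAnyS3Url("https://bucket.s3.us-west-1.amazonaws.com/key"));
// { style: 'virtual-host', bucket: 'bucket', key: 'key', region: 'us-west-1' }
console.log(parseAnyS3Url("http://bucket.s3-website-us-east-1.amazonaws.com/index.html"));
// { style: 'website', bucket: 'bucket', key: 'index.html', region: 'us-east-1' }
```

A table of ordered rules keeps each format in one place, so supporting another hostname variant means adding one entry rather than another branch.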