Co-ordinating Robot Fleets with AWS Lambda

See how to build two simple functions using AWS Lambda to manage a database of robot statuses. This serves as a base for building a fleet management system.

- Customers should be able to place orders online through a digital portal or webapp.

- Each order should be dispatched to an available robot at the given location, and the user should be alerted when it is complete.

Each function takes a robot name and a status as arguments, attached to the event object passed to the handler; checks that the status is valid; and updates a DynamoDB table for the given robot name with the given robot status. For example, the Python handler reads both arguments from the event:

```python
def lambda_handler(event, context):
    # ...
    name = str(event["name"])
    status = str(event["status"])
```

It then checks the values of `name` and `status`. If they are valid, the DynamoDB table is updated:


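```python
import boto3

ddb = boto3.resource("dynamodb")
table = ddb.Table(table_name)  # table_name is defined elsewhere in the function
table.update_item(
    Key={"name": name},
    # Overwrite the robot's "status" attribute with the new value
    AttributeUpdates={
        "status": {
            "Value": status
        }
    },
    ReturnValues="UPDATED_NEW",
)
```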
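The snippet above uses the legacy `AttributeUpdates` parameter, which DynamoDB still accepts. The same update can also be written with an `UpdateExpression`. Below is a minimal sketch, assuming the same `name` key and `status` attribute; `build_update_kwargs` is a hypothetical helper, not part of the sample repository. Because `status` is a DynamoDB reserved word, it is referenced through an `ExpressionAttributeNames` placeholder.

```python
def build_update_kwargs(name: str, status: str) -> dict:
    """Hypothetical helper: keyword arguments for Table.update_item.

    "status" is a DynamoDB reserved word, so the expression refers to it via
    the #status placeholder. Key is a plain attribute map, so the (also
    reserved) word "name" needs no placeholder there.
    """
    return {
        "Key": {"name": name},
        "UpdateExpression": "SET #status = :status",
        "ExpressionAttributeNames": {"#status": "status"},
        "ExpressionAttributeValues": {":status": status},
        "ReturnValues": "UPDATED_NEW",
    }


# Usage inside the handler (needs boto3 and AWS credentials):
#   table = boto3.resource("dynamodb").Table(table_name)
#   table.update_item(**build_update_kwargs(name, status))
```

Keeping the arguments in a plain dictionary makes the request easy to unit-test without touching AWS.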


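In the Rust function, the valid statuses are declared as an enum, and the expected request as a struct:

```rust
use serde::Deserialize;

#[derive(Deserialize)]
enum Status {
    Online,
}

// ...

#[derive(Deserialize)]
struct Request {
    name: String,
    status: Status,
}
```

The request is deserialized directly into the `Status` enum, so an unknown status is rejected during deserialization and no extra code is required for checking that arguments are valid. The arguments are obtained by accessing `event.payload` fields: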


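```rust
// Turn the validated enum back into a string (requires a Display impl for Status)
let status_str = format!("{}", &event.payload.status);
// Wrap the string in an attribute update for the "status" attribute
let status = AttributeValueUpdate::builder().value(AttributeValue::S(status_str)).build();
// Value for the table's "name" key attribute
let name = AttributeValue::S(event.payload.name.clone());
```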
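For comparison, the enum-based validation used in the Rust function can be mirrored in Python with the standard `enum` module. This is a sketch, not the sample repository's Python code: `parse_request` is a hypothetical helper, and `ONLINE` is the only status value this walkthrough actually uses.

```python
from enum import Enum
from typing import Tuple


class Status(str, Enum):
    """Allowed robot statuses. Only ONLINE is used in this walkthrough;
    values such as OFFLINE or CHARGING could be added as new members."""

    ONLINE = "ONLINE"


def parse_request(event: dict) -> Tuple[str, Status]:
    """Hypothetical helper: return (name, status) or raise ValueError."""
    try:
        name = event["name"]
        raw_status = event["status"]
    except KeyError as missing:
        raise ValueError(f"missing field: {missing}") from None
    if not isinstance(name, str) or not name:
        raise ValueError("name must be a non-empty string")
    # Status(value) raises ValueError for anything that is not a member,
    # much as serde rejects an unknown variant of the Rust enum.
    return name, Status(raw_status)
```

With this approach, the set of valid statuses lives in one type, and `status.value` is the string to store in DynamoDB.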


let request = ddb_client

.update_item()

.table_name(table_name)

.key("name", name)

.attribute_updates("status", status);

tracing::info!("Executing request [{request:?}]...");

let response = request

.send()

.await;

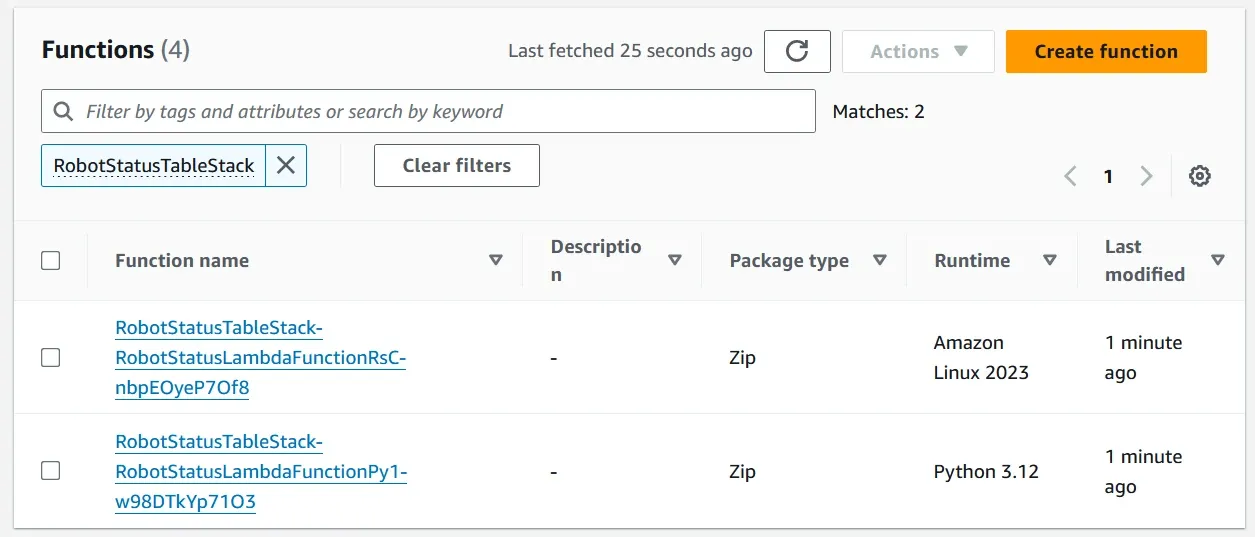

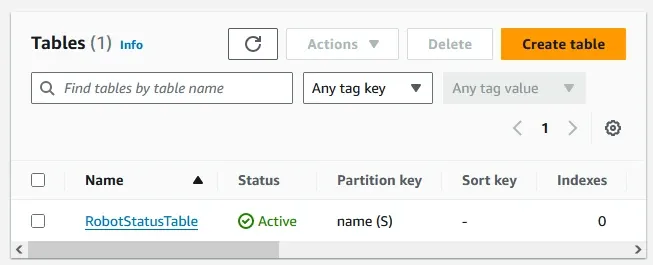

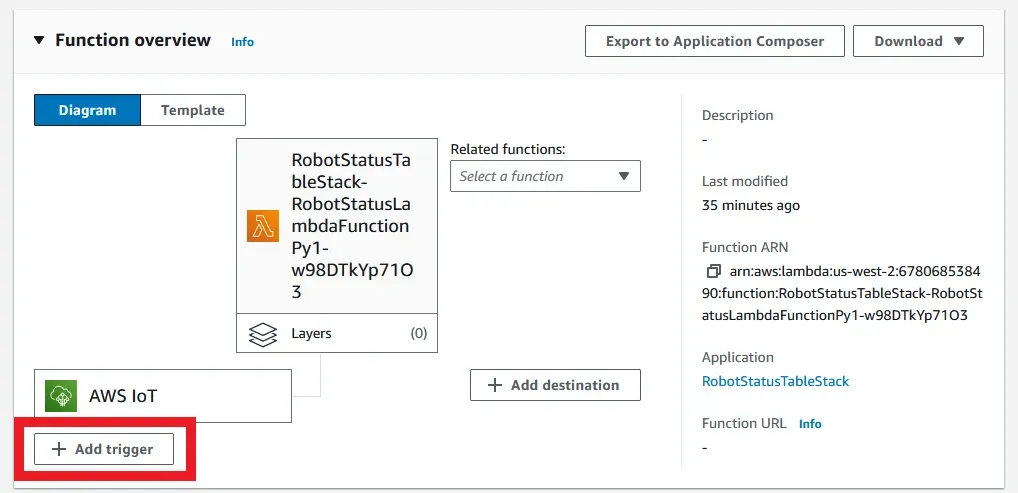

tracing::info!("Got response: {:#?}", response);- The two Lambda functions

- The DynamoDB table used to store the robot statuses

- An IoT Rule per Lambda function that will listen for MQTT messages and call the corresponding Lambda function

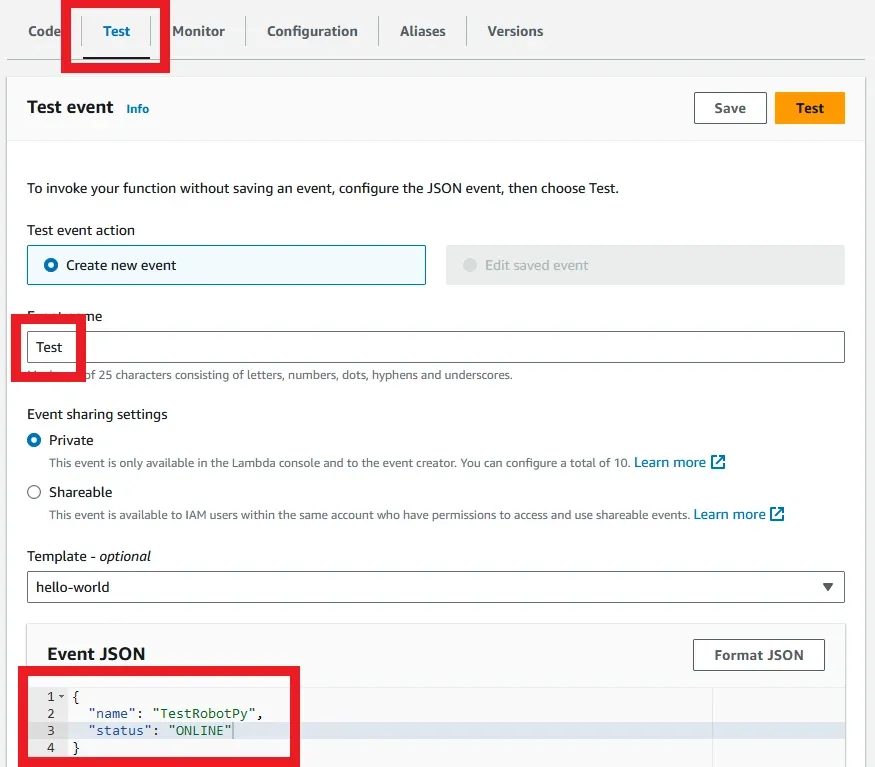

Great! Let's open one up and try it out. Open the function whose name contains "Py" and scroll down to the Test section (top red box). Enter a test name (center red box) and a valid input JSON document (bottom red box), then save the test.

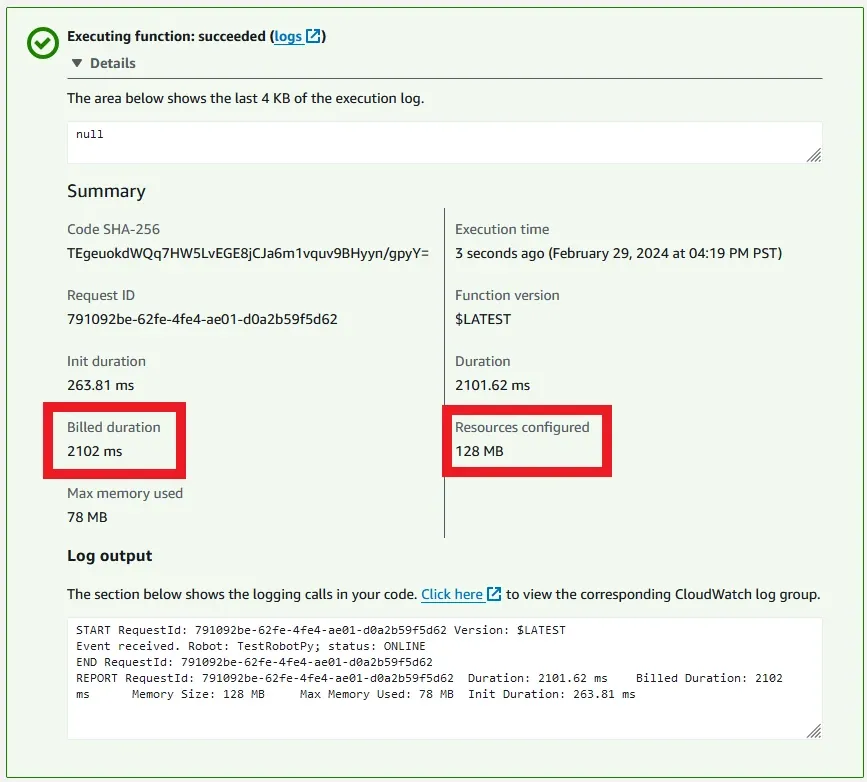

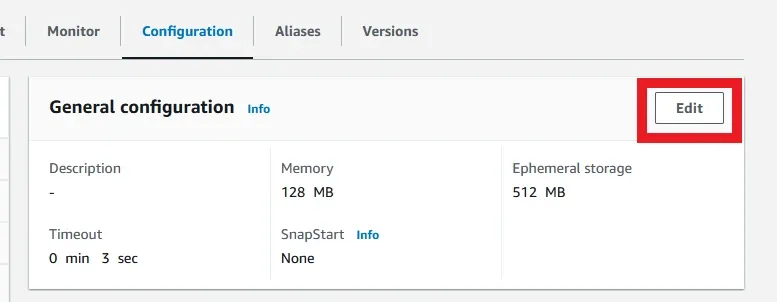

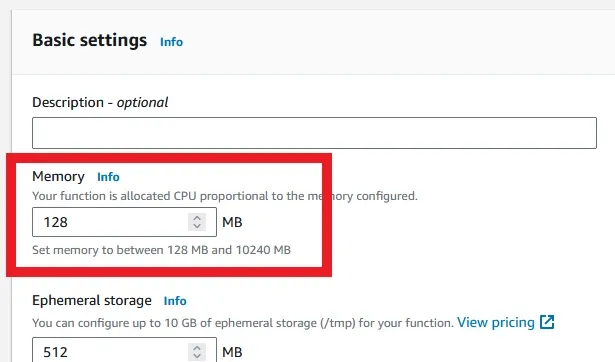

Now run the test event. A box should appear confirming that the test succeeded. Note the memory assigned and the billed duration: these are the main factors that determine the cost of running the function. The memory actually used does not affect cost, but it can help you choose a memory setting that balances cost against execution speed.

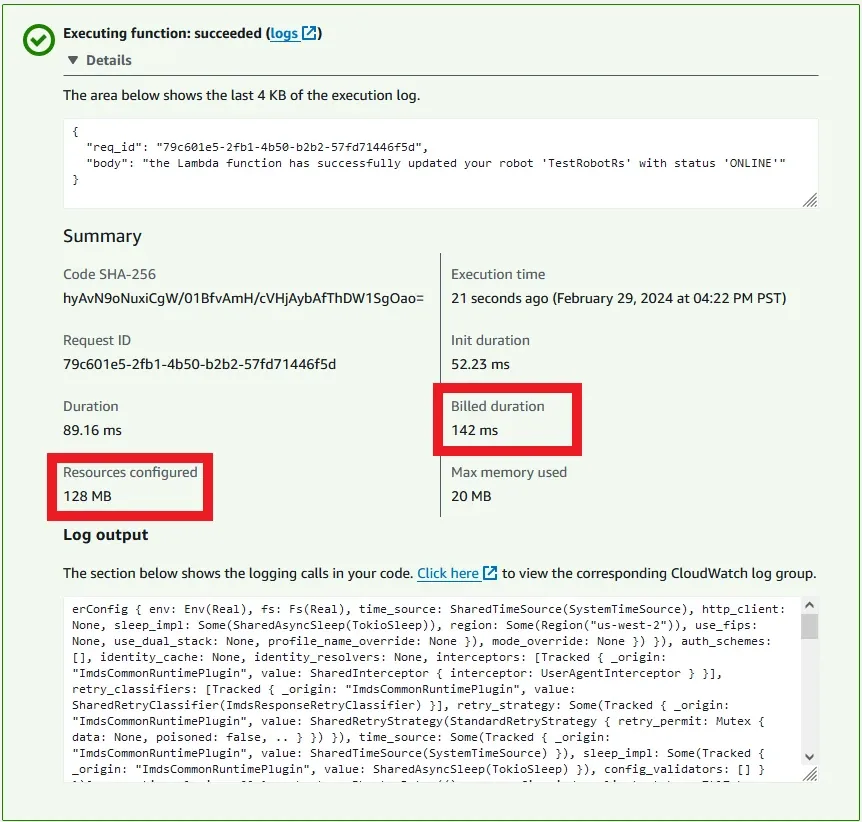

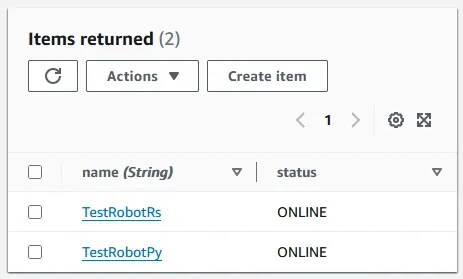
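Lambda charges a fixed fee per request plus compute measured in GB-seconds: configured memory (in GB) multiplied by billed duration (in seconds). Below is a minimal estimator of that formula. The prices are assumptions (on-demand us-east-1 first-tier rates at the time of writing, free tier ignored), so check the AWS Lambda pricing page before relying on them; `monthly_cost` is a hypothetical helper.

```python
# Assumed on-demand prices (us-east-1, first pricing tier), free tier ignored.
# Illustrative only: check the AWS Lambda pricing page for current rates.
PRICE_PER_GB_SECOND = {"arm64": 0.0000133334, "x86_64": 0.0000166667}
PRICE_PER_REQUEST = 0.20 / 1_000_000


def monthly_cost(invocations: int, memory_mb: int, billed_ms: float,
                 architecture: str = "arm64") -> float:
    """Estimate monthly Lambda cost in USD.

    Compute is charged per GB-second: configured memory (GB) multiplied by
    billed duration (s), summed over all invocations, plus a per-request fee.
    """
    gb_seconds = invocations * (memory_mb / 1024) * (billed_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND[architecture] + invocations * PRICE_PER_REQUEST


# Hypothetical workload: one million invocations a month at 128 MB, 100 ms each.
print(f"arm64:  ${monthly_cost(1_000_000, 128, 100):.4f}")
print(f"x86_64: ${monthly_cost(1_000_000, 128, 100, 'x86_64'):.4f}")
```

Under this formula, doubling the memory is cost-neutral only if it roughly halves the billed duration. Because the request fee is fixed, very short invocations end up costing mostly the request fee.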

You can repeat this for the Rust function, changing the test event name to TestRobotRs so we can tell the two apart. Note that the memory used and the duration are significantly lower than for the Python function.

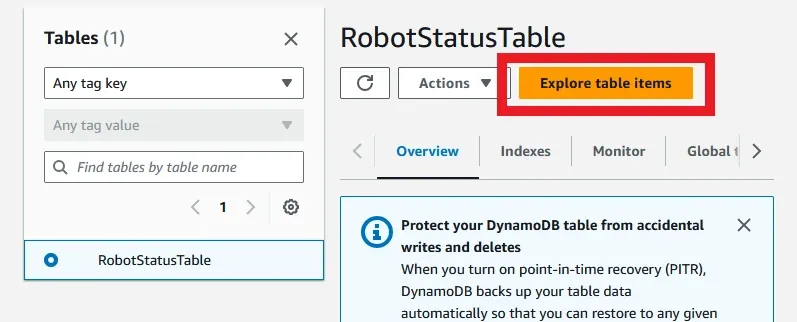
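The console tests can also be run from a script, which makes repeated comparisons between the two runtimes easier. Below is a sketch using boto3's Lambda `invoke` call with `LogType="Tail"`, which returns the end of the execution log. That log includes the REPORT line with the billed duration and memory figures. `invoke_and_report` is a hypothetical helper, the function name must be taken from your deployed stack, and the regular expression assumes the usual REPORT line layout.

```python
import base64
import json
import re

# Fields of the "REPORT" line that Lambda writes at the end of each invocation's log
# (layout assumed: "... Billed Duration: 5 ms  Memory Size: 128 MB  Max Memory Used: 20 MB").
REPORT_FIELDS = re.compile(
    r"Billed Duration: (?P<billed_ms>\d+) ms.*?"
    r"Memory Size: (?P<memory_mb>\d+) MB.*?"
    r"Max Memory Used: (?P<max_memory_mb>\d+) MB"
)


def invoke_and_report(lambda_client, function_name, name, status):
    """Hypothetical helper: invoke a status-update function synchronously.

    Returns (status_code, decoded_payload, report), where report holds the
    billed duration and memory figures parsed from the log tail, or None.
    """
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        LogType="Tail",  # ask for the last 4 KB of the log, base64-encoded
        Payload=json.dumps({"name": name, "status": status}).encode("utf-8"),
    )
    body = response["Payload"].read()
    payload = json.loads(body) if body else None
    log_tail = base64.b64decode(response.get("LogResult", "")).decode("utf-8", "replace")
    match = REPORT_FIELDS.search(log_tail)
    report = {key: int(value) for key, value in match.groupdict().items()} if match else None
    return response["StatusCode"], payload, report


# Usage (needs boto3 and AWS credentials; names are placeholders):
#   import boto3
#   print(invoke_and_report(boto3.client("lambda"), "<Py function name>", "TestRobotPy", "ONLINE"))
```

If the function raises an error, the status code is still 200 for a synchronous call; the response then carries a `FunctionError` field, and the payload contains the error details.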

Select the button in the top right to explore items.

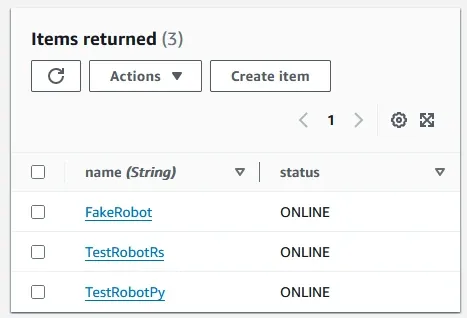

This should reveal a screen listing the current items in the table: one for each of the two test names you used for the Lambda functions.

Success! We have used functions running in the cloud to update a database with the current status of two robots. We could extend our functions to accept other statuses, such as OFFLINE or CHARGING, and then write other applications that act on the robots' current statuses, all within the cloud. One drawback is that this is a console-heavy way of executing the functions; surely there's something more accessible to our robots?
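As a starting point for such an application (for example, an order dispatcher looking for an available robot), here is a sketch that lists the robots currently marked ONLINE. It rests on three assumptions: ONLINE means "available", items carry the `name` and `status` attributes written by the functions, and `table` is a boto3 DynamoDB `Table` resource. `online_robots` is a hypothetical helper. A scan reads the whole table, which is acceptable for a small fleet but not for a large one.

```python
def online_robots(table):
    """Hypothetical helper: names of robots whose status is ONLINE.

    Follows scan pagination: DynamoDB returns LastEvaluatedKey while more
    items remain, and it is passed back as ExclusiveStartKey.
    """
    names = []
    scan_kwargs = {}
    while True:
        page = table.scan(**scan_kwargs)
        names.extend(
            item["name"]
            for item in page.get("Items", [])
            if item.get("status") == "ONLINE"
        )
        last_key = page.get("LastEvaluatedKey")
        if last_key is None:
            return names
        scan_kwargs["ExclusiveStartKey"] = last_key


# Usage (needs boto3 and AWS credentials):
#   table = boto3.resource("dynamodb").Table(table_name)
#   print(online_robots(table))
```

Filtering on the client keeps the sketch simple. A `FilterExpression` would reduce the data returned, but it would not reduce the read capacity a scan consumes.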

There's already one input for each Lambda function: the AWS IoT trigger. This is an IoT Rule set up by the CDK stack, which watches the topic filter `robots/+/status`. We can test it either with the MQTT test client or by running the test script in the sample repository:

```sh
./scripts/send_mqtt.sh
```

There is only one extra entry in the table, because both functions executed on the same input: "FakeRobot" had its status updated to ONLINE once by each function.
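Both functions receive the message because each IoT Rule watches `robots/+/status`, and the `+` wildcard matches exactly one topic level. Below is a minimal sketch of MQTT topic-filter matching for `+` and `#`. It illustrates the standard semantics rather than AWS IoT's own implementation, and it ignores the special handling of `$`-prefixed topics; `topic_matches` is a hypothetical helper.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a filter with + and # wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent level and everything below it
            return True
        if i >= len(topic_levels):
            # The filter has more levels than the topic
            return False
        if level != "+" and level != topic_levels[i]:
            # "+" matches any single level; anything else must match exactly
            return False
    # Without "#", the filter and the topic must have the same depth
    return len(filter_levels) == len(topic_levels)


print(topic_matches("robots/+/status", "robots/FakeRobot/status"))
```

Any robot publishing to `robots/<name>/status` therefore triggers every rule subscribed to that filter, which is why one message updated the table twice.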

Finally, we will use the Arm (arm64) architecture, as it is currently priced lower per GB-second than x86 on AWS Lambda.

| Test run | Python (128 MB) | Python (256 MB) | Python (512 MB) | Rust (128 MB) | Rust (256 MB) | Rust (512 MB) |
|---|---|---|---|---|---|---|
| 1 | 594 ms | 280 ms | 147 ms | 17 ms | 5 ms | 6 ms |
| 2 | 574 ms | 279 ms | 147 ms | 15 ms | 6 ms | 6 ms |
| 3 | 561 ms | 274 ms | 133 ms | 5 ms | 5 ms | 6 ms |
| Median | 574 ms | 279 ms | 147 ms | 15 ms | 5 ms | 6 ms |
| Monthly cost | $5.07 | $4.95 | $5.17 | $0.99 | $0.95 | $1.06 |
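The Median row can be reproduced from the three runs. Multiplying each median by the configured memory gives the GB-seconds billed per invocation, which helps explain why the Python costs stay flat: each doubling of memory roughly halves the duration, so compute stays near 0.07 GB-s. It also explains why Rust at 512 MB costs slightly more than at 256 MB. This sketch assumes the durations in the table are billed durations; `RUNS_MS` and `gb_seconds` are illustrative names.

```python
from statistics import median

# Durations (ms) from the three test runs in the table above.
RUNS_MS = {
    ("Python", 128): [594, 574, 561],
    ("Python", 256): [280, 279, 274],
    ("Python", 512): [147, 147, 133],
    ("Rust", 128): [17, 15, 5],
    ("Rust", 256): [5, 6, 5],
    ("Rust", 512): [6, 6, 6],
}


def gb_seconds(memory_mb: int, duration_ms: float) -> float:
    """Lambda compute units for one invocation: memory (GB) x duration (s)."""
    return (memory_mb / 1024) * (duration_ms / 1000)


for (runtime, memory_mb), runs in RUNS_MS.items():
    med = median(runs)
    print(f"{runtime:<6} {memory_mb:>3} MB  median {med:>3} ms  "
          f"{gb_seconds(memory_mb, med):.6f} GB-s per invocation")
```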