How to Prompt Mistral AI models, and Why

Prompting Mistral AI Instruct models has a few peculiarities. What are they, and why do they exist?

<s> is all about, and more.

What is the capital of Australia?

The capital city of Australia is Canberra...

I like drinking tea.

I like drinking tea in a nice mug. I like drinking tea from a nice mug..

<s>[INST] Instruction [/INST] Model answer</s>[INST] Follow-up instruction [/INST]

<s>[INST] What is the capital of Australia? [/INST]

The capital city of Australia is Canberra...

<s>[INST] What is the capital of Australia? [/INST] G'day

mate! I'd be happy to help you out. The capital city of Australia is Canberra...

<s>[INST] Write the following data into a JSON format. Name: Mike, Favorite service: Amazon Bedrock. [/INST] {

"name": "Mike",
"favorite_service": "Amazon Bedrock"
}
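Because the prompt ends with the opening brace, the model's completion starts partway through the JSON object. Here is a minimal sketch of how the two pieces might be stitched back together and parsed, assuming the completion comes back as a plain string. The hard-coded `completion` stands in for a real model response, and `PREFILL` is a name introduced only for this illustration.

```python
import json

# The text we placed after [/INST] to steer the model towards JSON.
PREFILL = "{"

prompt = (
    "<s>[INST] Write the following data into a JSON format. "
    "Name: Mike, Favorite service: Amazon Bedrock. [/INST] " + PREFILL
)

# Stand-in for the model's response (assumption: a real call returns this text).
completion = '\n"name": "Mike",\n"favorite_service": "Amazon Bedrock"\n}'

# The model only generates what follows the prefill, so add it back before parsing.
record = json.loads(PREFILL + completion)
print(record["favorite_service"])
```

Keeping the prefill in a single variable makes it harder to forget to re-attach it when the output is parsed.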

<s>[INST] I like drinking tea. [/INST]

That's great to hear! Tea is a popular beverage...

<s>[INST] I like drinking tea. [/INST] That's great to hear! Tea is a popular beverage...</s> [INST] What is the best way to brew tea? [/INST]

1. Choose the Right Water...

<s>[INST] I like drinking tea. [/INST] That's great to hear! Tea is a popular beverage...</s> [INST] What is the best way to brew tea? [/INST] 1. Choose the Right Water...</s> [INST] Should I dunk biscuits in my tea? [/INST]

messages = [
    {"role": "user", "content": "What is the capital of Australia?"},
    {"role": "assistant", "content": "The capital city..."},
    {"role": "user", "content": "But I thought it was Sydney!"},
    {"role": "assistant", "content": "Sydney is indeed a large..."},
    {"role": "user", "content": "Thanks for putting me straight."},
]

from transformers import AutoTokenizer
tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")
print(tokenizer.chat_template)

"{{ bos_token }}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if message['role'] == 'user' %}{{ '[INST] ' + message['content'] + ' [/INST]' }}{% elif message['role'] == 'assistant' %}{{ message['content'] + eos_token}}{% else %}{{ raise_exception('Only user and assistant roles are supported!') }}{% endif %}{% endfor %}"

This Jinja2 template does the following:

- {{ bos_token }} outputs a BOS (beginning of sentence) token
- It then loops through a list of messages:
  - It checks that the role of each message alternates between 'user' and 'assistant', raising an error if not
  - For user messages, it surrounds the content with [INST] and [/INST] tags
  - For assistant messages, it outputs the content followed by an EOS (end of sentence) token
  - It raises an error if the role is anything other than 'user' or 'assistant'
- After looping through all the messages, the result is a sequence of user and assistant utterances with special tokens, ready to be processed by a dialog system.

The main purpose is to format a conversation with alternating user/assistant roles into an input that can be consumed by a conversational AI system. The BOS token marks the start of the conversation and an EOS token closes each assistant reply, while the [INST] tags wrap the user utterances.
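The steps above can be sketched in plain Python. This is an illustrative re-implementation of the template's logic rather than code from the Transformers library: `render_chat` is a hypothetical helper, and the `<s>` and `</s>` defaults are the token strings used throughout this post.

```python
BOS, EOS = "<s>", "</s>"

def render_chat(messages, bos_token=BOS, eos_token=EOS):
    """Mirror the chat template's logic in plain Python (illustrative only)."""
    parts = [bos_token]  # the BOS token is emitted once, before the loop
    for index, message in enumerate(messages):
        role, content = message["role"], message["content"]
        # Even positions must be user turns, odd positions anything else.
        if (role == "user") != (index % 2 == 0):
            raise ValueError(
                "Conversation roles must alternate user/assistant/user/assistant/..."
            )
        if role == "user":
            parts.append("[INST] " + content + " [/INST]")
        elif role == "assistant":
            parts.append(content + eos_token)
        else:
            raise ValueError("Only user and assistant roles are supported!")
    return "".join(parts)

messages = [
    {"role": "user", "content": "What is the capital of Australia?"},
    {"role": "assistant", "content": "The capital city..."},
    {"role": "user", "content": "But I thought it was Sydney!"},
]
print(render_chat(messages))
```

Like the template, this sketch adds no space between `[/INST]` and the assistant's reply, and none between `</s>` and the next `[INST]`.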


data = {
    "bos_token": "<s>",
    "eos_token": "</s>",
    "messages": [
        {"role": "user", "content": "What is the capital of Australia?"},
        {"role": "assistant", "content": "The capital city..."},
        {"role": "user", "content": "But I thought it was Sydney!"},
        {"role": "assistant", "content": "Sydney is indeed a large..."},
        {"role": "user", "content": "Thanks for putting me straight."},
    ]
}

chat_template = "{{ bos_token }}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if message['role'] == 'user' %}{{ '[INST] ' + message['content'] + ' [/INST]' }}{% elif message['role'] == 'assistant' %}{{ message['content'] + eos_token}}{% else %}{{ raise_exception('Only user and assistant roles are supported!') }}{% endif %}{% endfor %}"

from jinja2 import Template

# Load the template string into a Jinja object.
template = Template(chat_template)

# Combine the data with the template.
rendered_template = template.render(data)

# Take a look at what we got!
print(rendered_template)

<s>[INST] What is the capital of Australia? [/INST]The capital city of Australia is Canberra...</s>[INST] But I thought it was Sydney! [/INST]Sydney is indeed a large and famous city in Australia, but it is not the capital city...</s>[INST] Thanks for putting me straight. [/INST]

<s>[INST] Instruction [/INST] Model answer</s>[INST] Follow-up instruction [/INST]

Note that <s> and </s> are special tokens for beginning of string (BOS) and end of string (EOS) while [INST] and [/INST] are regular strings.

| Token ID | maps to |
|---|---|
| 100 | a |
| 101 | b |
| 102 | c |
| ... | ... |
| 8701 | Amazon |
| 12266 | Bed |
| 16013 | rock |
| 15599 | rocks |
| 28808 | ! |

| Token ID | maps to |
|---|---|
| 1 | bos_token |
| 2 | eos_token |
| _* | pad_token |

| Token ID | maps to string |
|---|---|
| 1 | <s> |
| 2 | </s> |
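To make the mapping in these tables concrete, here is a toy lookup built only from the rows shown above. It is a sketch for illustration, not how a real tokenizer is implemented: `VOCAB`, `SPECIAL_IDS` and `toy_decode` are made-up names, and the sketch ignores how a real tokenizer handles spaces and unknown text.

```python
# Toy vocabulary copied from the example tables; a real vocabulary
# has tens of thousands of entries.
VOCAB = {
    1: "<s>",    # bos_token
    2: "</s>",   # eos_token
    8701: "Amazon",
    12266: "Bed",
    16013: "rock",
    28808: "!",
}

# IDs that stand for special tokens rather than ordinary text.
SPECIAL_IDS = {1, 2}

def toy_decode(token_ids, skip_special=False):
    """Look each ID up in the table and join the resulting strings."""
    pieces = []
    for token_id in token_ids:
        if skip_special and token_id in SPECIAL_IDS:
            continue
        pieces.append(VOCAB[token_id])
    return "".join(pieces)

ids = [1, 8701, 12266, 16013, 28808, 2]
print(toy_decode(ids))                     # <s>AmazonBedrock!</s>
print(toy_decode(ids, skip_special=True))  # AmazonBedrock!
```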


from tokenizer import Tokenizer

# Load the tokenizer from local disk
tokenizer = Tokenizer("Mistral-7B-v0.2-Instruct/tokenizer.model")

# Get some tokens for a string.
token_ids = tokenizer.encode("Time to brew some tea.")
print(token_ids)

# Convert the ids back to text for completeness.
tokens = tokenizer.decode(token_ids)
print(tokens)

[1, 5329, 298, 18098, 741, 9510, 28723]
Time to brew some tea.

from transformers import AutoTokenizer

# Load the tokenizer from HuggingFace
tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

# Get some tokens for a string (special tokens are added by default).
token_ids = tokenizer("Time to brew some tea.")
print(token_ids['input_ids'])

# Convert the ids back to text for completeness.
tokens = tokenizer.decode(token_ids['input_ids'])
print(tokens)

##################################################

# Get some tokens for a string, this time without special tokens.
token_ids = tokenizer("Time to brew some tea.", add_special_tokens=False)
print(token_ids['input_ids'])

# Convert the ids back to text for completeness.
tokens = tokenizer.decode(token_ids['input_ids'])
print(tokens)

[1, 5329, 298, 18098, 741, 9510, 28723]
<s> Time to brew some tea.
[5329, 298, 18098, 741, 9510, 28723]
Time to brew some tea.
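The output above shows token ID 1 being prepended by default. If a prompt string that already spells out `<s>` is passed through a tokenizer that also adds the BOS token, the sequence could end up with two of them. A small guard for that case is sketched here on plain lists of IDs. `ensure_single_bos` is a hypothetical helper, not part of any library, and the BOS ID of 1 is taken from the output above.

```python
BOS_ID = 1  # ID of <s> in the outputs shown above

def ensure_single_bos(token_ids, bos_id=BOS_ID):
    """Return a copy of token_ids that starts with exactly one BOS token."""
    # Skip over any run of BOS tokens at the front...
    start = 0
    while start < len(token_ids) and token_ids[start] == bos_id:
        start += 1
    # ...then put a single one back.
    return [bos_id] + list(token_ids[start:])

without_bos = [5329, 298, 18098, 741, 9510, 28723]
print(ensure_single_bos(without_bos))
print(ensure_single_bos([1, 1] + without_bos))
```

Both calls print the same list, beginning with a single 1.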


from transformers import AutoTokenizer

# Load the tokenizer from HuggingFace
tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

# Get some tokens for the string [INST].
token_ids = tokenizer("[INST]", add_special_tokens=False)
print(token_ids['input_ids'])

# Convert the ids back to text for completeness.
for token_id in token_ids['input_ids']:
    print(f"{token_id}: \t '{tokenizer.decode(token_id)}'")

[733, 16289, 28793]
733: '['
16289: 'INST'
28793: ']'

Prompt: What is the capital of Australia?
Answer: The capital of Australia is Canberra.

[START_SYMBOL_ID] +
tok("[INST]") + tok(USER_MESSAGE_1) + tok("[/INST]") +
tok(BOT_MESSAGE_1) + [END_SYMBOL_ID] +
…
tok("[INST]") + tok(USER_MESSAGE_N) + tok("[/INST]") +
tok(BOT_MESSAGE_N) + [END_SYMBOL_ID]

<s>[INST] What is the capital of Australia? [/INST] The capital of Australia is Canberra.</s>[INST] What is the capital of Queensland? [/INST] The capital of Queensland is Brisbane.</s>[INST] How do you make a cup of tea? [/INST] 1. Choose the Right Water...</s>

Notice that <s>, used for the BOS token ID, appears only once at the very start of the data, and that </s> is used after each answer. The question (or more accurately 'instruction') is wrapped in the [INST] and [/INST] tags. The format and capitalisation of all of these tags are fixed, and we must follow them when we prompt.

<s> is all about, and that you need to add it sometimes, and not in others.

us-west-2 region, more regions are coming, so check to see if they're now available in other regions:

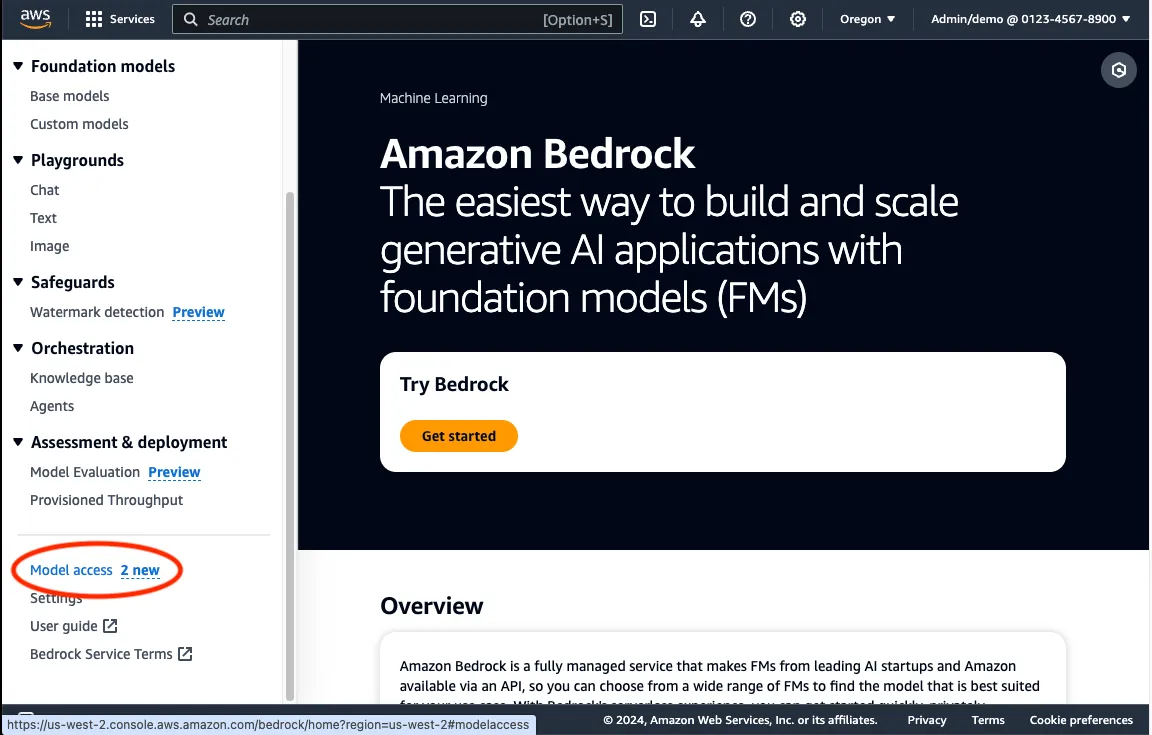

Expand the menu on the left-hand side, scroll down and select "Model access":

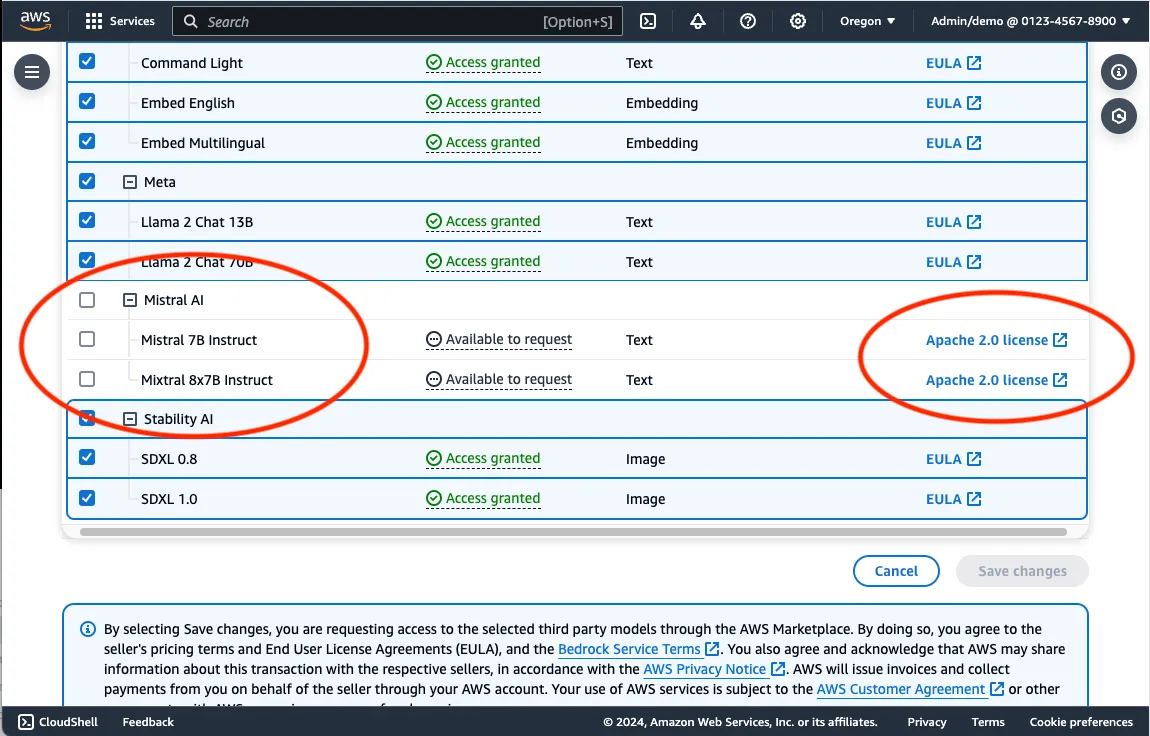

Select the orange "Manage model access" button, and scroll down to see the new Mistral AI models. If you're happy with the licence, then select the checkboxes next to the models, and click 'Save changes'.

You can now access the models! Head to the Amazon Bedrock text playground to start experimenting with your prompts. When you're ready to write some code, take a look at the code samples we have here, and here.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.