IVFFlat vs. HNSW - and how to use them in Amazon DocumentDB

Learn about the most popular vector search indexes, IVFFlat and HNSW, and how to use them in DocumentDB, turning it into a really capable vector database.

IVFFlat pros:

- Very fast build time

- Small storage size

- Low memory requirements

IVFFlat cons:

- Slower to generate responses

- Quality of results deteriorates easily with data updates, so the index needs frequent rebuilding

- Needs a substantial amount of data before you can create an IVFFlat index (a minimum of several thousand data points is recommended)
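To see where these trade-offs come from, here is a minimal in-memory sketch of the general IVFFlat idea, not DocumentDB's actual implementation. Vectors are grouped into lists around centroids, and a query scans only the lists whose centroids are closest. The centroids are hard-coded here as an assumption. A real index derives them from the data at build time, which is why it needs data up front and why results drift as the data changes. All function names and the sample data are hypothetical.

```javascript
// Toy IVFFlat sketch: illustrative only, not DocumentDB internals.

// Squared Euclidean distance between two equal-length vectors.
function dist2(a, b) {
  return a.reduce((sum, x, i) => sum + (x - b[i]) ** 2, 0);
}

// Index of the centroid closest to vector v.
function nearestCentroid(centroids, v) {
  let best = 0;
  for (let i = 1; i < centroids.length; i++) {
    if (dist2(centroids[i], v) < dist2(centroids[best], v)) best = i;
  }
  return best;
}

// "Build" step: assign every vector to the list of its nearest centroid.
function buildLists(centroids, vectors) {
  const lists = centroids.map(() => []);
  vectors.forEach((v, id) => lists[nearestCentroid(centroids, v)].push({ id, v }));
  return lists;
}

// Query step: scan only the `probes` lists whose centroids are closest to q.
function search(centroids, lists, q, k, probes) {
  const probed = centroids
    .map((c, i) => ({ i, d: dist2(c, q) }))
    .sort((x, y) => x.d - y.d)
    .slice(0, probes);
  return probed
    .flatMap(({ i }) => lists[i])
    .map(({ id, v }) => ({ id, d: dist2(v, q) }))
    .sort((x, y) => x.d - y.d)
    .slice(0, k)
    .map((r) => r.id);
}

// Hypothetical data: two centroids and four 2-dimensional vectors.
const centroids = [[0, 0], [10, 10]];
const vectors = [[1, 1], [0, 2], [9, 9], [6, 6]];
const lists = buildLists(centroids, vectors);

// The true nearest neighbour of q is [6, 6], which lives in the second list.
const q = [4, 4];
const oneProbe = search(centroids, lists, q, 1, 1);  // scans the first list only
const twoProbes = search(centroids, lists, q, 1, 2); // scans both lists
console.log(oneProbe, twoProbes);
```

With a single probe the query misses its true nearest neighbor because that vector sits in a list that was never scanned. Scanning more lists recovers it at the cost of more work per query.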

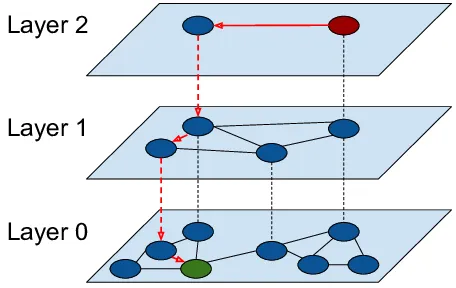

HNSW pros:

- Very fast responses (some benchmarks put it at a bit over 15x faster than IVFFlat)

- Very resilient to data updates, with negligible impact on recall (that is, the relevance of results doesn't degrade easily)

- No need for any data at all: you can create the index first and populate it over time with very little impact

HNSW cons:

- Build time is significantly slower than IVFFlat (some benchmarks show it can be 32x slower) … but you may not need to rebuild the index very frequently, if at all

- Needs more memory to run

- Takes up more storage than an IVFFlat index (nearly triple the size according to some benchmarks)
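Recall, mentioned above, can be estimated by comparing what an index returns against an exact brute-force search over the same data. The following is a minimal sketch, assuming results are arrays of document ids. `exactTopK` and `recallAtK` are hypothetical helper names, not part of DocumentDB, and the `approx` ids are made up for illustration.

```javascript
// Exact k nearest neighbours by brute force (Euclidean distance).
function exactTopK(vectors, query, k) {
  return vectors
    .map((v, id) => ({ id, d: Math.hypot(...v.map((x, i) => x - query[i])) }))
    .sort((x, y) => x.d - y.d)
    .slice(0, k)
    .map((r) => r.id);
}

// Fraction of the exact top-k ids that the approximate result also contains.
function recallAtK(approxIds, exactIds) {
  const found = new Set(approxIds);
  const hits = exactIds.filter((id) => found.has(id)).length;
  return hits / exactIds.length;
}

const sampleVectors = [[0, 0, 1], [0, 1, 0], [1, 0, 0], [1, 1, 1]];
const exact = exactTopK(sampleVectors, [0.9, 0.1, 0], 2);

// Hypothetical ids returned by an approximate index for the same query.
const approx = [2, 3];
const recall = recallAtK(approx, exact);
console.log(exact, recall);
```

Running the same check before and after a batch of updates is one way to decide whether an IVFFlat index is due for a rebuild.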

Syntax for creating a vector index:

    db.collection.createIndex(
      { "<vectorField>": "vector" },
      {
        "name": "<indexName>",
        "vectorOptions": {
          "type": " <hnsw> | <ivfflat> ",
          "dimensions": <number_of_dimensions>,
          "similarity": " <euclidean> | <cosine> | <dotProduct> ",
          "lists": <number_of_lists>,        // applicable for IVFFlat
          "m": <max number of connections>,  // applicable for HNSW
          "efConstruction": <size of the dynamic list for index build>  // applicable for HNSW
        }
      }
    );
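The three `similarity` options correspond to standard vector measures. The sketch below shows their textbook definitions on plain arrays to make the difference concrete. It is not DocumentDB's internal code, and the function names are illustrative.

```javascript
// Straight-line distance: smaller means more similar.
function euclidean(a, b) {
  return Math.sqrt(a.reduce((s, x, i) => s + (x - b[i]) ** 2, 0));
}

// Dot product: larger means more similar; sensitive to vector length.
function dotProduct(a, b) {
  return a.reduce((s, x, i) => s + x * b[i], 0);
}

// Cosine similarity: dot product of the length-normalized vectors, in [-1, 1].
function cosine(a, b) {
  return dotProduct(a, b) / (Math.sqrt(dotProduct(a, a)) * Math.sqrt(dotProduct(b, b)));
}

const u = [3, 4, 0];
const w = [6, 8, 0]; // same direction as u, twice the length
console.log(euclidean(u, w), dotProduct(u, w), cosine(u, w));
```

The two vectors point in the same direction, so cosine treats them as identical, while Euclidean distance and dot product still register the difference in length. Which behavior is right depends on whether the magnitude of your embeddings carries meaning.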

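Example: creating an IVFFlat index:

    db.collection.createIndex(
      { "vectorEmbedding": "vector" },
      {
        "name": "myIndex",
        "vectorOptions": {
          "type": "ivfflat",
          "dimensions": 3,            // must match the length of the stored vectors
          "similarity": "euclidean",
          "lists": 1                  // a single list, suitable only for a tiny demo data set
        }
      }
    );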

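Example: creating an HNSW index:

    db.collection.createIndex(
      { "vectorEmbedding": "vector" },
      {
        "name": "myIndex",
        "vectorOptions": {
          "type": "hnsw",
          "dimensions": 3,            // must match the length of the stored vectors
          "similarity": "euclidean",
          "m": 16,                    // max number of connections
          "efConstruction": 64        // size of the dynamic list for index build
        }
      }
    );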
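Once an index exists, it is queried through an aggregation pipeline. The sketch below assembles a `$search` stage using the `vectorSearch` syntax from the Amazon DocumentDB documentation as best recalled here. Treat the field names, and especially the tuning options `probes` (IVFFlat) and `efSearch` (HNSW), as assumptions to verify against the current documentation. `buildVectorSearch`, the query vector, and the tuning values are hypothetical.

```javascript
// Hypothetical helper that assembles a vector search pipeline.
// `tuning` carries the index-specific option: { probes } for IVFFlat,
// { efSearch } for HNSW (assumed names; check the DocumentDB docs).
function buildVectorSearch(queryVector, k, tuning) {
  return [
    {
      $search: {
        vectorSearch: {
          vector: queryVector,
          path: "vectorEmbedding", // the field indexed in the examples above
          similarity: "euclidean", // should match the index definition
          k: k,                    // number of results to return
          ...tuning,
        },
      },
    },
  ];
}

const ivfflatPipeline = buildVectorSearch([0.2, 0.5, 0.8], 2, { probes: 1 });
const hnswPipeline = buildVectorSearch([0.2, 0.5, 0.8], 2, { efSearch: 40 });

// In the shell, against a collection that has a matching index:
//   db.collection.aggregate(hnswPipeline);
console.log(JSON.stringify(hnswPipeline, null, 2));
```

Keeping the tuning option separate from the rest of the stage makes it easy to switch a query between the two index types while experimenting.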