Build an AI image catalogue! - Claude 3 Haiku

Building an Intelligent Photo Album with Amazon Bedrock

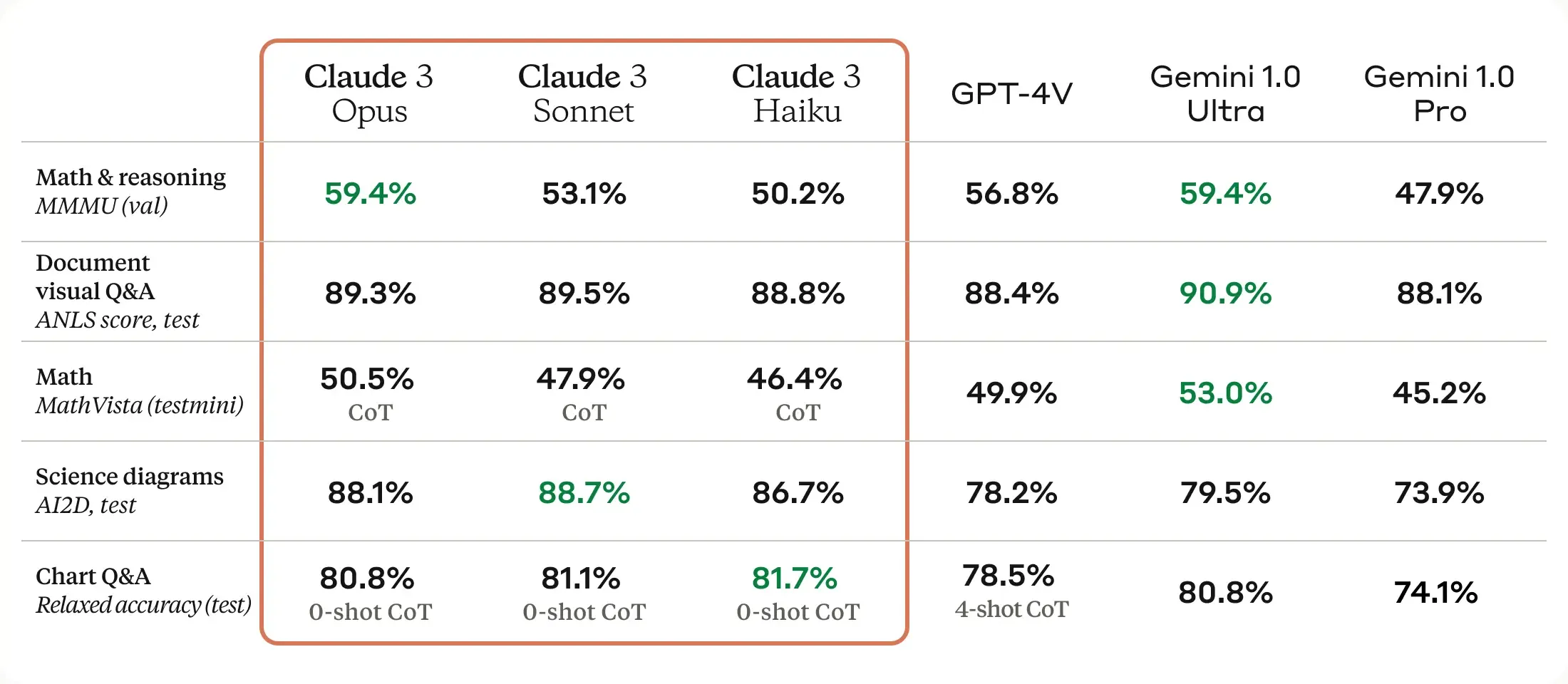

“The next generation of Claude: A new standard for intelligence” - https://www.anthropic.com/news/claude-3-family

- Haiku (Available on Amazon Bedrock)

- Sonnet (Available on Amazon Bedrock)

- Opus (Coming soon to your favourite AI provider starting with B… and ending in rock)

- Near-instant results

- Strong vision capabilities

- Fewer refusals

- Improved accuracy

- Long context and near-perfect recall

- Responsible design

- Easier to use

“The Claude 3 models have sophisticated vision capabilities on par with other leading models. They can process a wide range of visual formats, including photos, charts, graphs and technical diagrams. We’re particularly excited to provide this new modality to our enterprise customers, some of whom have up to 50% of their knowledge bases encoded in various formats such as PDFs, flowcharts, or presentation slides.”

“Claude 3 Haiku: our fastest model yet” - https://www.anthropic.com/news/claude-3-haiku

- The fastest model in the Claude 3 family

- The most cost-effective model in the Claude 3 family

“Speed is essential for our enterprise users who need to quickly analyze large datasets and generate timely output for tasks like customer support. Claude 3 Haiku is three times faster than its peers for the vast majority of workloads, processing 21K tokens (~30 pages) per second for prompts under 32K tokens [1]. It also generates swift output, enabling responsive, engaging chat experiences and the execution of many small tasks in tandem.”

- Seamlessly integrate Claude 3 Haiku into a photo album app

- Calling Amazon Bedrock from a serverless application

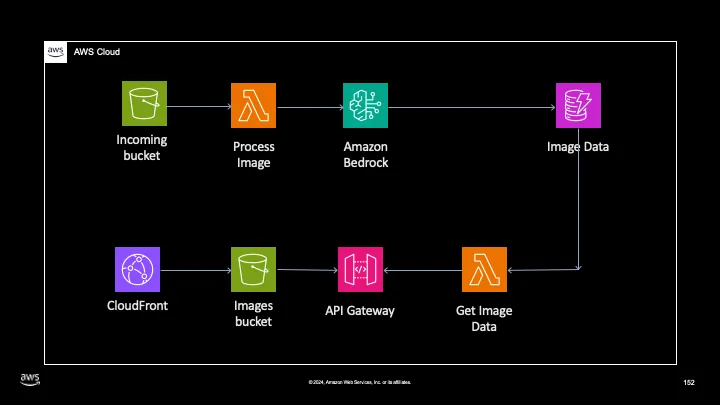

- The basic architecture needed to achieve this

- Some lessons learned from prompt engineering

- Amazon S3
  - To receive images and send them to be processed using S3 Events
  - To store the processed images and host the static HTML page

- Amazon API Gateway
  - To accept GET requests from the static HTML page

- AWS Lambda
  - To receive the S3 event and process the image
  - To accept a GET request and provide the user with the image data

- Amazon Bedrock
  - To leverage Claude 3 and provide an AI-generated summary and category

- Amazon DynamoDB
  - To store the information generated by Claude 3 / Amazon Bedrock
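As a sketch of the read path in this architecture, here is a Lambda function behind API Gateway (Lambda proxy integration assumed) that reads the stored records from DynamoDB and returns them as JSON to the static HTML page. The table name `ImageSummaries`, the `TABLE_NAME` environment variable and the permissive CORS header are assumptions for illustration; the optional `table` parameter is only there so the logic can be exercised without AWS.

```python
import json
import os
from decimal import Decimal


def _default_table():
    # Imported lazily so the handler logic can be tested without boto3 installed.
    import boto3
    # Hypothetical table name; set TABLE_NAME in the Lambda configuration.
    return boto3.resource("dynamodb").Table(os.environ.get("TABLE_NAME", "ImageSummaries"))


def _json_default(value):
    # DynamoDB returns numbers as Decimal, which json.dumps cannot serialise on its own.
    if isinstance(value, Decimal):
        return int(value) if value == value.to_integral_value() else float(value)
    raise TypeError(f"Unserialisable type: {type(value).__name__}")


def list_images_handler(event, context, table=None):
    """Handle an API Gateway GET request by returning every stored image record."""
    if table is None:
        table = _default_table()
    items = []
    scan_kwargs = {}
    while True:
        page = table.scan(**scan_kwargs)
        items.extend(page.get("Items", []))
        # A single scan call returns at most 1 MB; follow LastEvaluatedKey for the rest.
        if "LastEvaluatedKey" not in page:
            break
        scan_kwargs["ExclusiveStartKey"] = page["LastEvaluatedKey"]
    return {
        "statusCode": 200,
        # Lets the static page, served from a different origin, call the API.
        "headers": {"Content-Type": "application/json", "Access-Control-Allow-Origin": "*"},
        "body": json.dumps(items, default=_json_default),
    }
```

A full `scan` is reasonable for a small personal album; a larger catalogue would probably want to paginate towards the client or `query` on a key instead.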

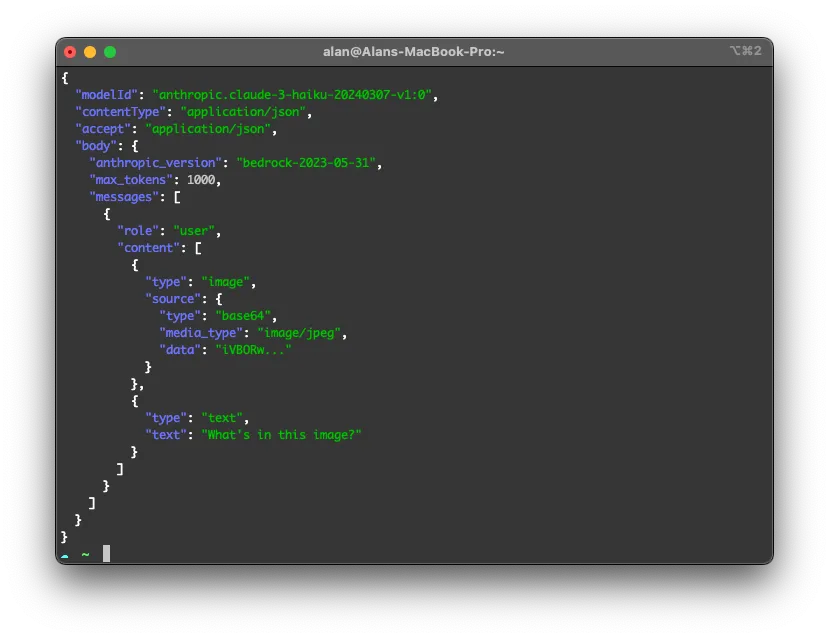

“Provide me a summary of this image and give it a short category”.

- Give the model time to think: when we work through a problem, we usually think it over three or four times, and sometimes jot down some notes as well. Give Claude the same chance before it answers.

- Structure your output: Claude works well with XML tags, so have it structure its output with them. Ask it to put its thinking inside tags such as `<SCRATCHPAD>` or `<NOTES>`, each with its matching closing tag, then put the output you actually want into a separate tag such as `<OUTPUT></OUTPUT>`.

- The more detail, the better: assume you're explaining the task to a trainee for the first time. If a trainee would get it wrong on their first attempt, you haven't explained it well enough, and Claude definitely won't get it right.

- Give it a purpose or a role: you wouldn't expect a toaster to dispense ice, so make sure the model is given a contextual purpose to work to.
  - This will help make the answers more accurate.
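Putting these tips together, a prompt for this app might look something like the sketch below. The wording, the `<SUMMARY>`/`<CATEGORY>` tags nested inside `<OUTPUT>`, and the helper names are illustrative assumptions, not the exact prompt used in the app. The role would go in the `system` field of the Messages API request, and `parse_answer` keeps only the tagged answer, discarding the scratchpad.

```python
import re

# Role / purpose: a hypothetical system prompt giving the model context to work to.
SYSTEM_PROMPT = (
    "You are a photo librarian cataloguing a personal photo album. "
    "Your summaries are shown to the album owner next to each photo."
)

# Detailed, trainee-level instructions, with room to think in <SCRATCHPAD>
# and a strictly structured final answer in <OUTPUT>.
USER_PROMPT = """Provide a summary of the attached image and give it a short category.

Follow these steps:
1. In <SCRATCHPAD></SCRATCHPAD> tags, note the main subjects, the setting and any visible text.
2. Review your notes and choose a single category of one to three words, e.g. "Beach", "Family dinner", "Architecture".
3. Write a summary of two or three sentences.

Return your final answer in exactly this format:
<OUTPUT>
<SUMMARY>your summary</SUMMARY>
<CATEGORY>your category</CATEGORY>
</OUTPUT>"""


def extract_tag(text, tag):
    """Return the stripped contents of the first <tag>...</tag> in text, or None."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
    return match.group(1).strip() if match else None


def parse_answer(model_text):
    """Ignore the scratchpad and pull the summary and category out of <OUTPUT>."""
    output = extract_tag(model_text, "OUTPUT")
    if output is None:
        raise ValueError("Model reply did not contain an <OUTPUT> block")
    return {"summary": extract_tag(output, "SUMMARY"), "category": extract_tag(output, "CATEGORY")}
```

Raising when `<OUTPUT>` is missing makes malformed replies visible instead of silently storing the model's scratchpad notes as the summary.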

- Decode Image Name: Extracts the name of the uploaded image from the S3 event and reverses the URL encoding that S3 applies to object keys in event notifications (for example, spaces arrive as `+`).

- Retrieve Image Type: Determines the MIME type of the uploaded image using the S3 object key.

- Retrieve Image Data: Fetches the image data from the designated S3 bucket.

- Encode Image Data: Converts the image data to a base64-encoded string for processing by the Claude 3 Haiku model.

- Generate Summary: Utilizes the Claude 3 Haiku model to produce a summary of the image content.

- Extract Summary: Parses the response from the Claude 3 Haiku model to extract the generated summary.

- Store Summary in DynamoDB: Stores the extracted summary along with relevant image details (e.g., object ID) in a DynamoDB table for future retrieval.
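The steps above can be sketched as a single S3-triggered Lambda handler. This is a minimal sketch, assuming Python with boto3, a `bedrock-runtime` client in a Region where Claude 3 Haiku is enabled, and a hypothetical DynamoDB table keyed on `object_id`. The attribute names, the use of `mimetypes` to map the key's extension to a MIME type, and the injectable client parameters are illustrative choices, not the app's actual code.

```python
import base64
import json
import mimetypes
import os
import urllib.parse

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"
PROMPT = "Provide me a summary of this image and give it a short category."
# Image formats accepted by Claude 3.
SUPPORTED_TYPES = ("image/jpeg", "image/png", "image/gif", "image/webp")


def _clients():
    # Lazy import so the pipeline can be tested with fakes when boto3 is absent.
    import boto3
    # Hypothetical table name; set TABLE_NAME in the Lambda configuration.
    table = boto3.resource("dynamodb").Table(os.environ.get("TABLE_NAME", "ImageSummaries"))
    return boto3.client("s3"), boto3.client("bedrock-runtime"), table


def process_image_handler(event, context, s3=None, bedrock=None, table=None):
    if s3 is None or bedrock is None or table is None:
        s3, bedrock, table = _clients()
    results = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # 1. Decode image name: keys in S3 event notifications are URL-encoded.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        # 2. Retrieve image type from the file extension in the key.
        media_type, _ = mimetypes.guess_type(key)
        if media_type not in SUPPORTED_TYPES:
            raise ValueError(f"Unsupported image type for {key}: {media_type}")
        # 3. Retrieve image data from the bucket that raised the event.
        image_bytes = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        # 4. Encode image data as base64 text for the JSON request body.
        image_b64 = base64.b64encode(image_bytes).decode("utf-8")
        # 5. Generate summary using the Anthropic Messages API format on Bedrock.
        body = {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": 512,
            "messages": [{
                "role": "user",
                "content": [
                    {"type": "image",
                     "source": {"type": "base64", "media_type": media_type, "data": image_b64}},
                    {"type": "text", "text": PROMPT},
                ],
            }],
        }
        response = bedrock.invoke_model(
            modelId=MODEL_ID, body=json.dumps(body),
            contentType="application/json", accept="application/json",
        )
        # 6. Extract summary: the reply text is in the first content block.
        reply = json.loads(response["body"].read())
        summary = reply["content"][0]["text"]
        # 7. Store summary in DynamoDB alongside the object ID.
        item = {"object_id": key, "bucket": bucket, "summary": summary}
        table.put_item(Item=item)
        results.append(item)
    return results
```

`PROMPT` here is the simple prompt quoted earlier; in practice you would likely apply the prompt-engineering tips above and parse the tagged output before storing it. The function's execution role would also need `s3:GetObject`, `bedrock:InvokeModel` and `dynamodb:PutItem` permissions.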