How Fazz Financial Group reduced MTTR for production failures with Generative AI

In this blog post, we share our innovation journey with GenAI through deliberate training, enablement, collaboration, and rapid prototyping. We started from three gaps that were holding our teams back:

- (1) GenAI knowledge gaps

  - While our Engineers, Product Managers, and Data Analysts had some interest in GenAI, they were not familiar with the underlying technology or the basic building blocks they could use to build GenAI use-cases.

- (2) Unclear ROI of GenAI use-cases

  - Ideas were being thrown around and our builders had been researching popular GenAI reference projects, but it remained unclear which use-cases would deliver tangible ROI for our unique business.

- (3) Lack of a structured path to build and deploy GenAI apps

  - Our builders are strong at shipping technical services, but it was not clear whether we could follow the same processes for GenAI features. What would we do if LLMs started to hallucinate? How could we set up guardrails? The path to take GenAI projects to production remained unclear.

Partnering with relevant teams at AWS, we co-developed a custom GenAI Training & Enablement curriculum tailored to our needs. We worked backwards from the gaps we identified and designed 4 technical workshops focused on equipping our builders with core GenAI knowledge and hands-on experience building GenAI use-cases on AWS. These included sessions on GenAI for Financial Services, GenAI Security, and GenAI Product Management, topics we care deeply about.

Then came the fun part! We gave our builders an opportunity to apply their new-found skills through an internal hackathon. Focusing on GenAI use-cases that could influence our roadmap, we brought our Engineers, Product Managers, and Data Engineers into one room and had them ideate and build prototypes that solve day-to-day challenges using GenAI.

During the 24-hour hackathon, our teams built 10 prototypes using AWS GenAI services, including Amazon SageMaker JumpStart, Amazon Bedrock, and Amazon Q. The event also connected our regionally dispersed teams across Singapore, Jakarta, and Taiwan through a fun and interactive technical collaboration.

We were impressed by the creative prototypes and the way they addressed real-world business challenges, whether by streamlining internal processes or delighting our customers. In the following section, we showcase one of these projects: an internal tool that uses Generative AI to drastically reduce our Mean Time To Resolve (MTTR) for production failures.

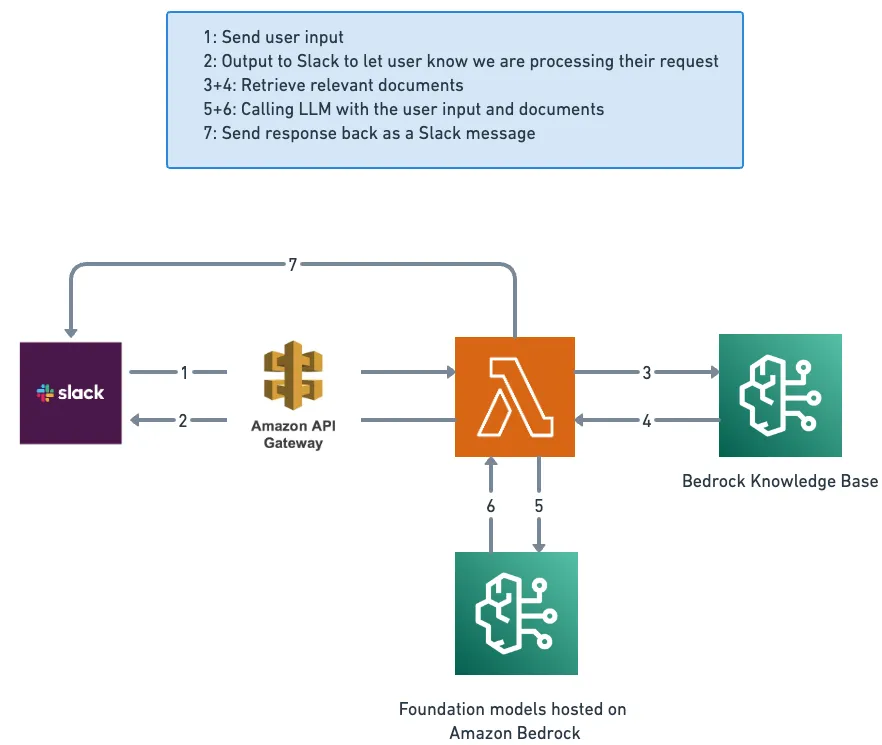

Our architecture has 4 main components:

- Slack as our user interface

- AWS Lambda for serverless hosting of our code

- Amazon Bedrock Knowledge Base to store and retrieve our data sources

- Foundation models hosted on Amazon Bedrock for understanding and processing natural language
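To make the flow between these components concrete, here is a minimal sketch of how a Lambda function might sit between Slack and Bedrock. It assumes Slack's Events API delivers requests through a Lambda function URL or an API Gateway proxy integration (so the raw JSON arrives in `event["body"]`), and it takes the answering and reply-posting logic as injected callables. `make_handler`, `answer_question`, and `post_reply` are hypothetical names, not part of the original system.

```python
import base64
import json
from typing import Callable


def make_handler(
    answer_question: Callable[[str], str],
    post_reply: Callable[[str, str, str], None],
):
    """Build a Lambda handler that routes Slack Events API payloads.

    answer_question and post_reply are hypothetical hooks: the first would run
    Knowledge Base retrieval plus a Bedrock model call, the second would post
    the answer back to Slack (for example via chat.postMessage).
    """

    def handler(event, context):
        raw = event.get("body") or "{}"
        # Function URLs and API Gateway may base64-encode the request body.
        if event.get("isBase64Encoded"):
            raw = base64.b64decode(raw).decode("utf-8")
        body = json.loads(raw)

        # Slack sends a one-time challenge when the request URL is registered.
        if body.get("type") == "url_verification":
            return {
                "statusCode": 200,
                "headers": {"Content-Type": "application/json"},
                "body": json.dumps({"challenge": body["challenge"]}),
            }

        slack_event = body.get("event", {})
        if body.get("type") == "event_callback" and slack_event.get("type") == "app_mention":
            # Reply inside the thread so follow-up questions stay together.
            thread_ts = slack_event.get("thread_ts") or slack_event.get("ts", "")
            answer = answer_question(slack_event.get("text", ""))
            post_reply(slack_event.get("channel", ""), thread_ts, answer)

        # Acknowledge the event with a 2xx so Slack does not retry it.
        return {"statusCode": 200, "body": ""}

    return handler
```

Two simplifications are worth flagging: Slack expects an acknowledgement within three seconds, so a production version would typically acknowledge first and run the model call asynchronously, and it would also verify Slack's request signature before processing anything.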

Building on Amazon Bedrock lets us tap into different first-party (e.g. Amazon Titan) and third-party (e.g. Anthropic Claude) language models through the same platform. We can therefore build a single GenAI platform yet switch to whichever model best suits our use case at any point in time. Given the rapid pace of development in GenAI and foundation models, this builds longevity into our GenAI stack.
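One way to keep the model swappable is Amazon Bedrock's Converse API in `boto3`, which uses the same request and response shape across supported models, so moving between an Amazon Titan and an Anthropic Claude model becomes a change of `modelId`. The post does not say which Bedrock API the team called, so treat this as a sketch: `ask_model` is a hypothetical helper, the model IDs in the docstring are examples only, and the client is passed in so the function can be exercised without AWS credentials.

```python
from typing import Any


def ask_model(client: Any, model_id: str, prompt: str, max_tokens: int = 512) -> str:
    """Send one user turn to a Bedrock model via the Converse API and return its text.

    client is expected to be boto3.client("bedrock-runtime"). The same call shape
    works for different model IDs, e.g. "anthropic.claude-3-haiku-20240307-v1:0"
    or "amazon.titan-text-express-v1" (availability varies by account and Region).
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # Converse returns the reply as a list of content blocks; join the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

Because the model ID is just an argument, it can live in configuration, and trying a newer model does not require changing the calling code.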

Amazon Bedrock's Knowledge Base has been key to our adoption of Retrieval Augmented Generation (RAG). This managed capability makes it easy to store and retrieve our data. After we upload our documents, it extracts their text, splits it into overlapping chunks, converts the chunks into vector embeddings, and stores them in a vector database. To retrieve data, we simply call the API from a Python function to get the information most relevant to our question.
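The retrieval call described above can be sketched with the `boto3` `bedrock-agent-runtime` client, whose `retrieve` operation takes a knowledge base ID and a text query and returns the best-matching chunks. This is a minimal sketch rather than the team's actual function: `retrieve_context` is a hypothetical name, the knowledge base ID is a placeholder you would supply, `top_k=5` is an arbitrary default, and the optional `client` parameter exists only so the function can be tested with a stub.

```python
from typing import Any, Dict, List


def retrieve_context(
    query: str, knowledge_base_id: str, top_k: int = 5, client: Any = None
) -> List[Dict[str, Any]]:
    """Return the top_k chunks most relevant to query from a Bedrock Knowledge Base."""
    if client is None:
        # Imported lazily so the function can be exercised with a stub client.
        import boto3

        client = boto3.client("bedrock-agent-runtime")

    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    # Each result carries the chunk text, a relevance score and its source location.
    return [
        {
            "text": result["content"]["text"],
            "score": result.get("score"),
            "location": result.get("location"),
        }
        for result in response.get("retrievalResults", [])
    ]
```

Bedrock also offers `retrieve_and_generate`, which retrieves and generates in a single call; calling `retrieve` on its own keeps the prompt fully under your control.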

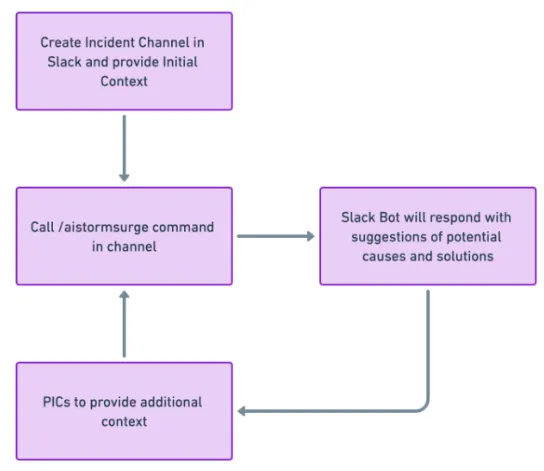

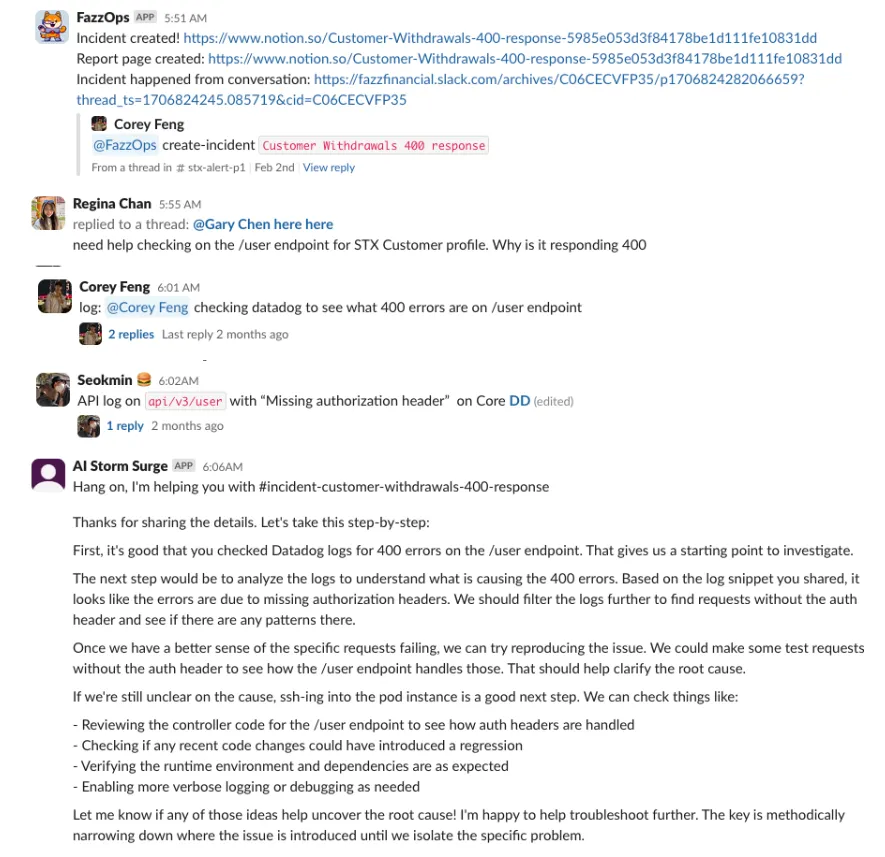

Prompt engineering was necessary to incorporate the context retrieved through RAG into the prompt and to improve response quality. For our use case, it was crucial that responses were coherent and easy to read, so we added the following instructions to the prompt: “think step-by-step,” “write in a conversational tone,” and “keep your replies concise.”
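A prompt template along these lines might look like the sketch below. Only the three style instructions come from the post; the system-style opening line, the "use only the context" guardrail, the `<context>` tags, and the name `build_prompt` are illustrative assumptions.

```python
from typing import List

# Style instructions adapted from the post; the rest of the template is illustrative.
STYLE_INSTRUCTIONS = [
    "Think step-by-step.",
    "Write in a conversational tone.",
    "Keep your replies concise.",
]


def build_prompt(question: str, context_chunks: List[str]) -> str:
    """Combine retrieved chunks, style instructions and the user's question."""
    # Number the chunks so the model (and a reader) can tell the sources apart.
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(context_chunks, start=1)
    )
    instructions = "\n".join(f"- {line}" for line in STYLE_INSTRUCTIONS)
    return (
        "You are an assistant that helps engineers resolve production failures.\n"
        "Use only the context below to answer. If the context is insufficient, say so.\n\n"
        f"<context>\n{context}\n</context>\n\n"
        f"Instructions:\n{instructions}\n\n"
        f"Question: {question}"
    )
```

Keeping the instructions in a list makes it easy to add or reword one and compare response quality across variants.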

As the conversation progresses, the bot takes in additional information, revises its context, and provides updated responses.
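The post does not describe how the bot keeps track of earlier turns. One common approach, sketched here purely as an assumption, is to key history by Slack thread and send the recent turns back to the model with each new question. `ThreadMemory` and `max_messages` are hypothetical names; the message shape follows the Bedrock Converse `messages` format, which expects a conversation to start with a `user` turn.

```python
from typing import Dict, List


class ThreadMemory:
    """Keep recent messages per Slack thread so follow-ups are answered in context.

    Held in process memory for illustration only; a Lambda deployment would need
    an external store (for example DynamoDB), since instances are not long-lived.
    """

    def __init__(self, max_messages: int = 10) -> None:
        self.max_messages = max_messages
        self._threads: Dict[str, List[dict]] = {}

    def add(self, thread_id: str, role: str, text: str) -> None:
        # role is "user" or "assistant", matching Converse message roles.
        history = self._threads.setdefault(thread_id, [])
        history.append({"role": role, "content": [{"text": text}]})
        # Drop the oldest messages once the window is exceeded.
        del history[: max(0, len(history) - self.max_messages)]
        # Converse expects the first message to come from the user.
        while history and history[0]["role"] != "user":
            history.pop(0)

    def messages(self, thread_id: str) -> List[dict]:
        return list(self._threads.get(thread_id, []))
```

On each new mention, the handler would `add` the user's message, pass `messages(thread_id)` to the model, then `add` the model's reply as an `assistant` turn.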

Truth be told, I used to think GenAI and machine learning were complex, out of reach, and not really related to my daily responsibilities. But participating in the training, workshops, and hackathon showed me how advanced and user-friendly GenAI technology is. Now I see that you don't need to be an expert to use it to create new and exciting services and products. It's truly impressive how much GenAI can do.

Going forward, we will iterate on our agent so that it can be used in production environments to help us with real-time incidents. We can’t wait to implement and scale the innovative solutions developed during the hackathon, as they have the potential to revolutionise our operations, delight our customers, and further cement Fazz's position as a leader in the FinTech space. Special thanks to our friends at AWS for their constant support, guidance, and mentorship throughout our startup journey.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.