Run LLMs on CPU with Amazon SageMaker Real-time Inference

A solution for running Large Language Models for real-time inference using AWS Graviton3 and Amazon SageMaker without a need for GPUs.

Karan Thanvi

Amazon Employee

Published May 7, 2024

Large Language Models (LLMs) have emerged as powerful tools in natural language processing, enabling machines to understand and generate human-like text. These models, powered by advancements in deep learning, have found applications across various domains, from chatbots and language translation to content generation.

In this blog post, we demonstrate how to run Large Language Models (LLMs) without Graphics Processing Units (GPUs) by using Amazon SageMaker Hosted Endpoints on AWS Graviton3 instances. Our aim is to help lower the barrier to using and running generative Artificial Intelligence (AI) models by offering a lower-cost inference option as an alternative to GPUs, which are more powerful but also suited to larger budgets.

SageMaker Hosted Endpoints are a fully managed hosting service that abstracts away the heavy lifting of hosting models and lets you run real-time model inference through an API. In this solution, the endpoints are hosted on AWS Graviton, a family of 64-bit ARM-based CPUs offered by Amazon Web Services and designed to deliver the best price performance for cloud workloads. The models used for inference are downloaded from HuggingFace in the GGUF file format. The full code is available in the GitHub repo.

LLMs typically store their weights as 16-bit floating-point numbers (bf16). Floating-point representation balances precision and efficiency, capturing the complex and nuanced relationships learned from vast amounts of training data. Models with billions of parameters require massive amounts of compute, usually GPUs, to perform inference quickly.

The amount of GPU memory required to run inference on a model with bf16 weights is typically 3-4 times the number of model parameters (in bytes). A 7-billion-parameter LLM such as Llama 7B or Zephyr 7B would therefore need a GPU to run, at minimum a g5.2xlarge instance, which often increases the cost and operational complexity of the solution.

However, with recent developments in LLM knowledge distillation and quantization, the landscape is evolving. CPU-based inference is gaining attention as a viable alternative for smaller LLMs. CPU-based inference is not a complete replacement for GPU-based inference, but it has its own advantages in cost optimization and can be a practical choice.
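To make the arithmetic concrete, here is a small sketch that estimates the memory needed just to hold a model's weights at different precisions. As a simplifying assumption it counts weights only; the KV cache, activations, and runtime overhead come on top, which is why practical requirements exceed the bf16 figure alone. The 7-billion parameter count is a round number used for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Memory in GB (1 GB = 1e9 bytes) needed to store the weights alone.

    Ignores KV cache, activations and framework overhead (simplifying assumption).
    """
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 7e9  # illustrative "7B" model
    for label, bits in [("fp32", 32), ("bf16", 16), ("int8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: {weight_memory_gb(params, bits):5.1f} GB")
```

Going from bf16 (about 14 GB of weights) to 4-bit (about 3.5 GB) is what brings a 7B model comfortably within the memory of a CPU instance. Real 4-bit GGUF files are somewhat larger than this estimate, because block-based formats also store per-block scale factors.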

Using quantized models for CPU-based LLM inference optimizes both memory usage and computational efficiency. Quantization reduces the precision of the model's parameters from 32- or 16-bit floating-point numbers to lower-bit representations, typically 8-bit integers. As we show in this blog post, even 4- to 5-bit quantized models can be used with minimal impact on model quality. Using a quantized model is what allows us to run this workload on ARM-based Graviton3 instances.
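As a simplified illustration of what quantization does, the sketch below stores each block of weights as one floating-point scale plus small signed integers in the range [-7, 7]. It is a plain symmetric per-block scheme written for readability, not the exact layout of any GGUF quantization type, and the block size of 32 is an assumption.

```python
from typing import List, Tuple

BLOCK_SIZE = 32  # assumption: weights are quantized in blocks of 32 values

Block = Tuple[float, List[int]]  # (scale, quantized integers)


def quantize_block_4bit(block: List[float]) -> Block:
    """Symmetric 4-bit quantization of one block: a float scale plus ints in [-7, 7]."""
    max_abs = max(abs(x) for x in block)
    scale = max_abs / 7 if max_abs > 0 else 1.0  # avoid division by zero for all-zero blocks
    q = [max(-7, min(7, round(x / scale))) for x in block]
    return scale, q


def quantize(weights: List[float], block_size: int = BLOCK_SIZE) -> List[Block]:
    """Split the weights into blocks and quantize each one independently."""
    return [quantize_block_4bit(weights[i:i + block_size])
            for i in range(0, len(weights), block_size)]


def dequantize(blocks: List[Block]) -> List[float]:
    """Reconstruct approximate float weights from (scale, ints) blocks."""
    out: List[float] = []
    for scale, q in blocks:
        out.extend(scale * v for v in q)
    return out


if __name__ == "__main__":
    import random
    random.seed(0)
    w = [random.gauss(0, 0.02) for _ in range(64)]  # synthetic weights
    blocks = quantize(w)
    w_hat = dequantize(blocks)
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print(f"{len(blocks)} blocks, max abs error = {max_err:.5f}")
```

Each reconstructed weight is off by at most half of its block's scale, which is why a per-block scale keeps the error small when the weights in a block have similar magnitudes.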

There are several frameworks that support CPU-based LLM inference, but we chose the Llama.cpp project for a number of reasons:

- Popular high-performance C++ transformers implementation

- Support and optimisation for multiple platforms and configurations, e.g. Linux, Mac, Windows

- Includes a REST API server and bindings for multiple programming languages

- Supports CPU and GPU inference with multiple quantisation options

- Supports response streaming
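To show what the REST API looks like in practice, here is a minimal client sketch for the llama.cpp server's `/completion` endpoint using only the Python standard library. It assumes a llama.cpp server running locally on its default port 8080; the fields accepted can vary between llama.cpp versions, so check the server README for the version you use.

```python
import json
import urllib.request

SERVER_URL = "http://localhost:8080"  # assumption: local llama.cpp server on its default port


def build_completion_request(prompt: str, n_predict: int = 128,
                             base_url: str = SERVER_URL) -> urllib.request.Request:
    """Build a POST request for the llama.cpp server's /completion endpoint."""
    body = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/completion",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt: str, n_predict: int = 128) -> str:
    """Send the request and return the generated text from the 'content' field."""
    req = build_completion_request(prompt, n_predict)
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return payload["content"]


# Usage (requires a running server):
# print(complete("What is AWS Graviton?", n_predict=64))
```

Setting `"stream": true` in the request body switches the server to streamed responses, which is the streaming support mentioned in the list above.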

Llama.cpp uses GGUF, a binary format for storing the model and its metadata. Existing models must be converted to GGUF before they can be used for inference; fortunately, plenty of models are already available in GGUF on the HuggingFace website. GGUF supports 2-, 3-, 4-, 5-, 6-, and 8-bit integer quantisation, and 4- to 5-bit quantisation provides the best speed/accuracy trade-off for most use cases. Despite its name, Llama.cpp is not restricted to Llama models.
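To make "binary format for the model and its metadata" concrete, the sketch below reads the fixed-size header at the start of a GGUF file: the `GGUF` magic bytes, a version number, the tensor count, and the number of metadata key/value pairs, all little-endian. It only handles GGUF version 2 and later (version 1 used 32-bit counts), and the file name in the usage comment is hypothetical.

```python
import struct
from typing import BinaryIO, NamedTuple


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(f: BinaryIO) -> GGUFHeader:
    """Parse the fixed GGUF header (little-endian, version >= 2 assumed)."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    (version,) = struct.unpack("<I", f.read(4))  # uint32 version
    if version < 2:
        raise ValueError(f"GGUF v{version} uses 32-bit counts; not handled in this sketch")
    tensor_count, kv_count = struct.unpack("<QQ", f.read(16))  # two uint64 counts
    return GGUFHeader(version, tensor_count, kv_count)


# Usage (hypothetical file name):
# with open("zephyr-7b-beta.Q4_K_M.gguf", "rb") as f:
#     print(read_gguf_header(f))
```

The metadata key/value pairs that follow the header carry things such as the architecture, tokenizer, and quantization type, which is how Llama.cpp can load models that are not Llama models.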

We provide an end-to-end solution that deploys Llama.cpp as an Amazon SageMaker Hosted Endpoint on an ml.c7g.8xlarge Graviton3 instance with 64 GB of memory and 32 vCPUs, while keeping costs on par with a comparable GPU-based setup.

For this blog post, we use the Zephyr-7B-Beta model from HuggingFace in GGUF format with 4-bit integer quantisation.

Zephyr-7B-Beta was trained to act as a helpful assistant on a mix of publicly available datasets using Direct Preference Optimisation (DPO). DPO is a fine-tuning technique that learns directly from human preference data, with an improved ability to control the sentiment of generations and higher response quality in summarization and single-turn dialogue. The solution can easily be extended to use any LLM available in GGUF format.
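Chat-tuned models like Zephyr expect prompts in a specific template, and this is usually the part you adapt when switching to another GGUF model. The sketch below formats a single-turn prompt, assuming the `<|system|>` / `<|user|>` / `<|assistant|>` template published on the Zephyr-7B-Beta model card; verify it against the model card of the model you deploy.

```python
def format_zephyr_prompt(user_message: str, system_message: str = "") -> str:
    """Build a single-turn prompt in the Zephyr chat format (template assumed from the model card)."""
    return (
        f"<|system|>\n{system_message}</s>\n"
        f"<|user|>\n{user_message}</s>\n"
        f"<|assistant|>\n"  # the model continues generating from here
    )


# Example:
# format_zephyr_prompt("What is AWS Graviton?", "You are a helpful assistant.")
```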


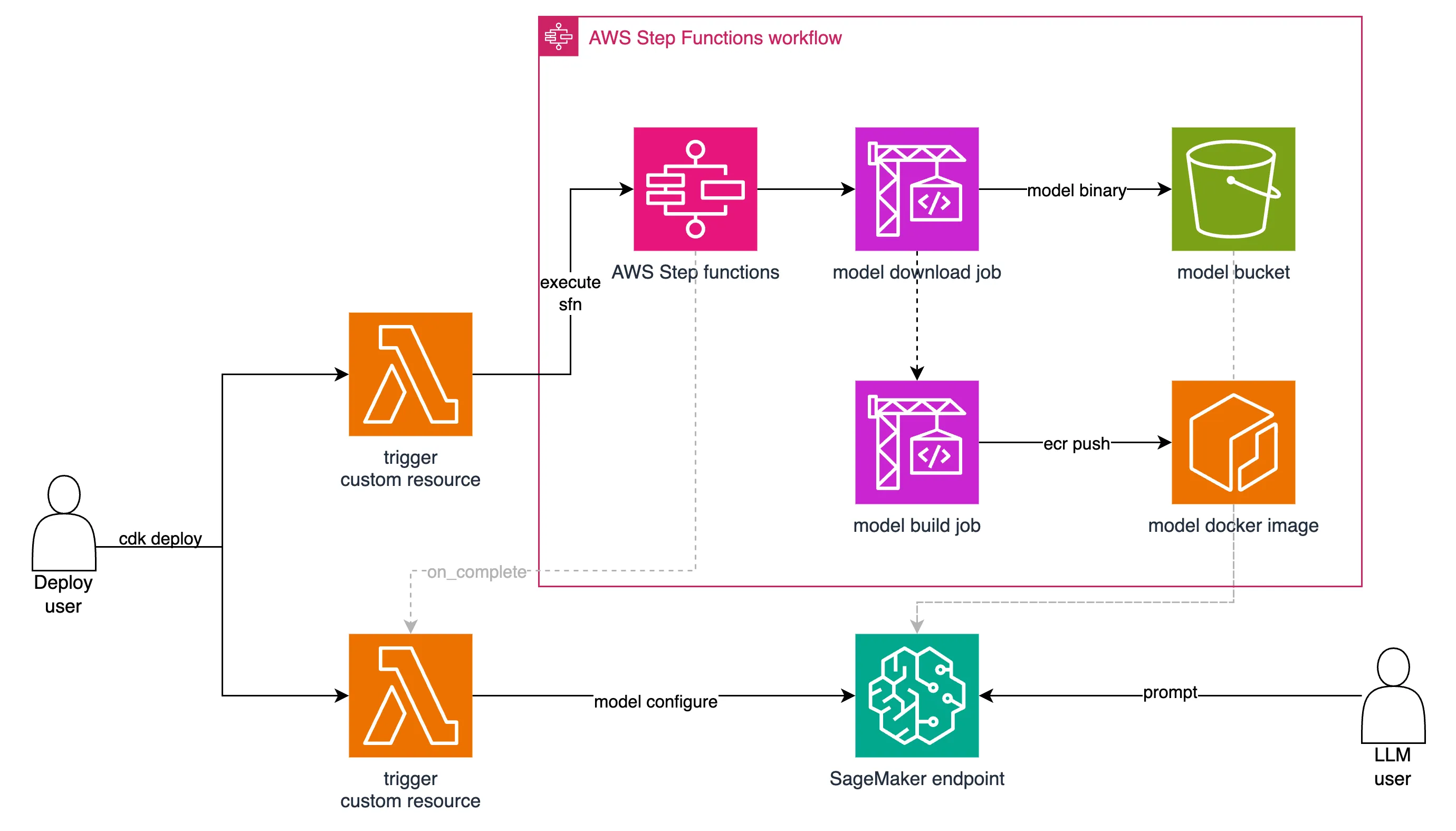

Let’s take a closer look at the solution architecture:

- First, a model download job downloads the LLM files to an Amazon Simple Storage Service (S3) bucket used as an object store.

- A model build job creates the Docker image used for inference and pushes it to Amazon Elastic Container Registry (ECR).

- After both previous steps complete successfully, a SageMaker endpoint is deployed for model inference on an underlying Amazon Elastic Compute Cloud (EC2) Graviton instance.

- Finally, an AWS Lambda function is triggered to configure the SageMaker inference endpoint with model-specific parameters.
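The solution provisions these resources with the AWS CDK. To show the endpoint step in isolation, here is a rough equivalent using the boto3 SageMaker client. It is a sketch rather than the repo's code: the endpoint name, image URI, S3 path, and role ARN are hypothetical placeholders, and the Lambda configuration step is not covered.

```python
from typing import Any, Dict


def deploy_llm_endpoint(
    sm_client: Any,  # e.g. boto3.client("sagemaker")
    name: str,
    image_uri: str,
    model_data_url: str,
    role_arn: str,
    instance_type: str = "ml.c7g.8xlarge",
) -> Dict[str, Any]:
    """Create a SageMaker model, endpoint config and endpoint (sketch)."""
    sm_client.create_model(
        ModelName=name,
        PrimaryContainer={
            "Image": image_uri,  # arm64 inference image in ECR (required for Graviton)
            "ModelDataUrl": model_data_url,  # S3 path to a .tar.gz of model artifacts
        },
        ExecutionRoleArn=role_arn,
    )
    sm_client.create_endpoint_config(
        EndpointConfigName=f"{name}-config",
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InitialInstanceCount": 1,
            "InstanceType": instance_type,
        }],
    )
    return sm_client.create_endpoint(
        EndpointName=name,
        EndpointConfigName=f"{name}-config",
    )
```

Endpoint creation is asynchronous; with boto3 you can block until it is ready with `sm_client.get_waiter("endpoint_in_service").wait(EndpointName=name)`.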

The stacks can be found in the `./infrastructure` directory. After all the stacks are deployed successfully, a real-time model inference API becomes available to the LLM user (actor), and the `notebooks/inference.ipynb` Jupyter notebook provides an example of how to interact with the endpoint and prompt it with sample questions.

Please note that the IAM credentials or IAM role you use to run the notebook must allow `sagemaker:InvokeEndpoint` API calls. If you don't have an existing environment for running Jupyter notebooks, the easiest option is to create a new SageMaker notebook instance with default settings and let SageMaker create an IAM role with enough permissions to interact with the provisioned LLM endpoint.

Would you like to give it a go? The prerequisites and deployment steps are in the GitHub project README, together with a guide on running the CDK deployment, as the project is built with the AWS CDK for Python. Parameters such as the instance type, model, and platform, among others, can be found and adjusted in the `config.yaml` file.
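For a sense of what the notebook does, here is a minimal sketch of calling the endpoint through the SageMaker runtime API. The request body is an assumption (it mirrors llama.cpp's `prompt` / `n_predict` fields); the actual schema is defined by the inference container, so follow `notebooks/inference.ipynb` for the exact format. The endpoint name in the usage comment is hypothetical.

```python
import json
from typing import Any, Dict


def build_payload(prompt: str, max_tokens: int = 128) -> bytes:
    # Assumed request schema; the real one is defined by the inference container.
    return json.dumps({"prompt": prompt, "n_predict": max_tokens}).encode("utf-8")


def invoke_llm(runtime_client: Any, endpoint_name: str, prompt: str,
               max_tokens: int = 128) -> Dict[str, Any]:
    """Call the endpoint; runtime_client is e.g. boto3.client("sagemaker-runtime")."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt, max_tokens),
    )
    # response["Body"] is a streaming body: read it fully, then decode the JSON result.
    return json.loads(response["Body"].read())


# Usage (requires AWS credentials allowing sagemaker:InvokeEndpoint):
# import boto3
# print(invoke_llm(boto3.client("sagemaker-runtime"), "my-llm-endpoint", "What is AWS Graviton?"))
```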

Llama.cpp provides detailed statistics for every request, conveniently split into two sections: prompt evaluation and response generation.

The example below shows that it took 198 milliseconds to evaluate the 11 tokens of the initial prompt and 3.57 seconds to generate 128 response tokens, a generation rate of almost 36 tokens per second.

print_timings: prompt eval time = 198.17 ms / 11 tokens ( 18.02 ms per token, 55.51 tokens per second)

print_timings: eval time = 3570.65 ms / 128 runs ( 27.90 ms per token, 35.85 tokens per second)

print_timings: total time = 3768.82 ms
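If you want to collect these statistics across many requests, a small parser helps. The sketch below extracts the three `print_timings` values from log text in the format shown above and recomputes the tokens-per-second rates; the regular expression is written against this sample output and may need adjusting for other llama.cpp versions.

```python
import re
from typing import Dict, Optional, Tuple

TIMING_RE = re.compile(
    r"print_timings:\s+(prompt eval time|eval time|total time)\s*=\s*([\d.]+) ms"
    r"(?:\s*/\s*(\d+)\s+(?:tokens|runs))?"
)

SAMPLE_LOG = """\
print_timings: prompt eval time = 198.17 ms / 11 tokens ( 18.02 ms per token, 55.51 tokens per second)
print_timings: eval time = 3570.65 ms / 128 runs ( 27.90 ms per token, 35.85 tokens per second)
print_timings: total time = 3768.82 ms"""


def parse_timings(log: str) -> Dict[str, Tuple[float, Optional[int]]]:
    """Map each timing label to (milliseconds, token count or None)."""
    result: Dict[str, Tuple[float, Optional[int]]] = {}
    for line in log.splitlines():
        m = TIMING_RE.search(line)
        if m:
            label, ms, count = m.groups()
            result[label] = (float(ms), int(count) if count else None)
    return result


def tokens_per_second(ms: float, tokens: int) -> float:
    """Convert a duration in milliseconds and a token count into tokens/second."""
    return tokens / (ms / 1000.0)


if __name__ == "__main__":
    timings = parse_timings(SAMPLE_LOG)
    ms, n = timings["eval time"]
    print(f"{tokens_per_second(ms, n):.2f} tokens/s")  # 35.85
```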


We ran our tests with 1, 4, and 8 concurrent requests on an ml.c7g.8xlarge instance and measured the following results:

| Concurrency | Total requests | Total time to complete all requests (sec) | Total number of generated tokens | Tokens/sec* |
|---|---|---|---|---|
| 1 | 1 | 3.582 | 128 | 35.73 |
| 4 | 4 | 11.446 | 512 | 44.73 |
| 8 | 8 | 20.916 | 1024 | 48.95 |

* Actual output-generation throughput (tokens/sec) is slightly higher, because the total times above also include prompt evaluation.
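The Tokens/sec column is aggregate throughput: the total number of generated tokens divided by the total wall-clock time for all concurrent requests. The sketch below shows that calculation and a simple way to run a similar concurrency test; `send_request` is a hypothetical stand-in for a function that calls the endpoint and returns the number of generated tokens.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Tuple


def aggregate_throughput(total_tokens: int, total_seconds: float) -> float:
    """Tokens generated across all requests divided by wall-clock time."""
    return total_tokens / total_seconds


def run_concurrent(send_request: Callable[[], int], concurrency: int) -> Tuple[float, int]:
    """Fire `concurrency` requests at once; return (wall-clock seconds, total tokens).

    send_request is a hypothetical callable that performs one request and
    returns the number of tokens it generated.
    """
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(send_request) for _ in range(concurrency)]
        total_tokens = sum(f.result() for f in futures)
    return time.perf_counter() - start, total_tokens


if __name__ == "__main__":
    # Recompute the Tokens/sec column from the measurements in the table.
    for concurrency, seconds, tokens in [(1, 3.582, 128), (4, 11.446, 512), (8, 20.916, 1024)]:
        print(concurrency, round(aggregate_throughput(tokens, seconds), 2))
```

Recomputed values can differ from the table in the last decimal place depending on whether values are rounded or truncated. Because each request's time includes prompt evaluation, this way of computing throughput gives a slightly conservative figure.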

In this post, we explored a solution for running Large Language Models for real-time inference using Graviton3 processors and Amazon SageMaker Hosted Endpoints. You can now embed capabilities such as personalized recommendations, chatbots, or translation into your applications, powered by the latest models, without the need for specialized machine learning expertise or hardware.

The wide availability of Graviton instances gives you the flexibility to optimize instance sizing and cost. These building blocks can be found and deployed as part of our GitHub repo, or adjusted to your specific use case and model choice.

Aleksandra Jovovic's journey began with a passion for coding and expanded into consulting and solutions architecture. She joined AWS to specialize in helping startups craft cloud-based applications.

Karan Thanvi is a Startup Solutions Architect working with Fintech Startups helping them to design and run their workloads on AWS. He specialises in container-based solutions, databases and GenAI. He is passionate about developing solutions and playing chess!

Alex Tarasov is a Senior Solutions Architect working with Fintech startup customers, helping them design and run their data and AI/ML workloads on AWS. He is a former data engineer and is passionate about all things data.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.