Run LLMs on CPU with Amazon SageMaker Real-time Inference

A solution for running Large Language Models for real-time inference using AWS Graviton3 and Amazon SageMaker, without the need for GPUs.

g5.2xlarge instance, often increasing the costs and operational complexity of the solution.

- Popular high-performance C++ transformers implementation

- Support and optimisation for multiple platforms and configurations, e.g. Linux, Mac, Windows

- Has a REST API server and bindings for multiple programming languages

- Supports CPU and GPU inference with multiple quantisation options

- Supports response streaming

Zephyr-7B-Beta was trained to act as a helpful assistant on a mix of publicly available datasets using Direct Preference Optimisation (DPO). DPO is a novel fine-tuning technique with improved ability to control the sentiment of generations and increased response quality in summarization and single-turn dialogue. The solution can easily be extended to use any LLM available in GGUF format.
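Because Zephyr-7B-Beta is a chat-tuned model, prompts usually work best when wrapped in its chat layout. The helper below is a minimal sketch, assuming the `<|system|>` / `<|user|>` / `<|assistant|>` layout published on the Zephyr-7B-Beta model card. The function name `zephyr_prompt` is hypothetical and not part of this solution, and other GGUF models generally expect a different template.

```python
def zephyr_prompt(user_message: str, system_message: str = "") -> str:
    """Format a single-turn prompt in the Zephyr chat layout (assumed from the model card)."""
    return (
        f"<|system|>\n{system_message}</s>\n"
        f"<|user|>\n{user_message}</s>\n"
        # The trailing assistant tag asks the model to generate the reply.
        f"<|assistant|>\n"
    )


print(zephyr_prompt("What is AWS Graviton3?", "You are a helpful assistant."))
```

When swapping in a different GGUF model, this helper is the piece that would most likely need to change.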

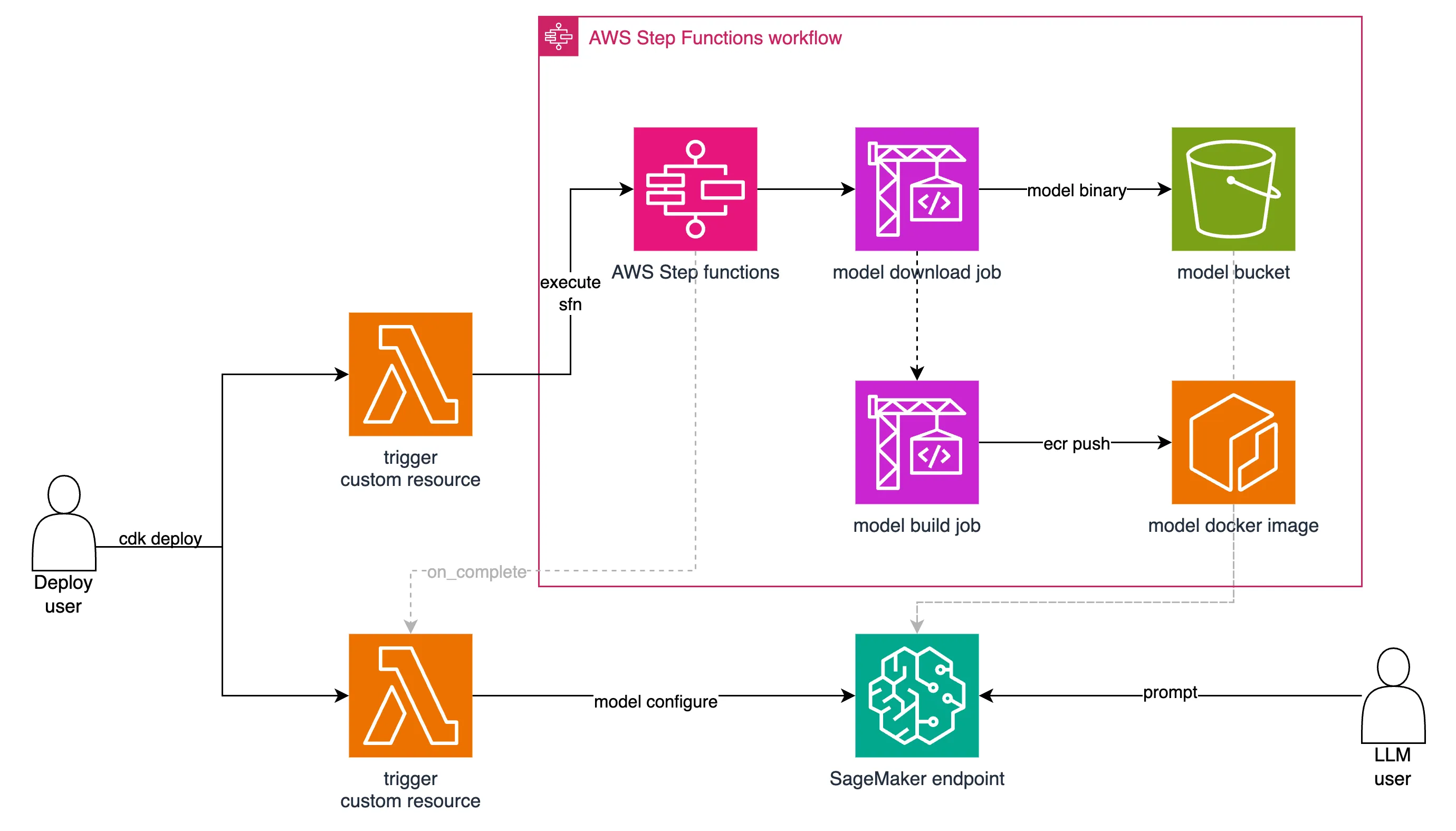

- First, a model download job downloads the Large Language Model (LLM) files to a Simple Storage Service (S3) bucket used as an object store.

- A model build job creates a Docker image used for inference and pushes it to Elastic Container Registry (ECR).

- After both previous steps complete successfully, a SageMaker endpoint is deployed for model inference, backed by an Elastic Compute Cloud (EC2) Graviton instance.

- Finally, an AWS Lambda function is triggered to configure the SageMaker inference endpoint with model-specific parameters.

./infrastructure directory.

notebooks/inference.ipynb Jupyter Notebook provides an example of how to interact with the endpoint and prompt it with sample questions.

sagemaker:InvokeEndpoint API calls. If you don't have an existing environment to run Jupyter notebooks, the easiest way to run the notebook is to create a new SageMaker notebook instance using default settings and let SageMaker create the necessary IAM role with enough permissions to interact with the provisioned LLM endpoint.

print_timings: prompt eval time = 198.17 ms / 11 tokens ( 18.02 ms per token, 55.51 tokens per second)

print_timings: eval time = 3570.65 ms / 128 runs ( 27.90 ms per token, 35.85 tokens per second)

print_timings: total time = 3768.82 ms
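Outside the notebook, interacting with the endpoint comes down to a `sagemaker:InvokeEndpoint` call. The following is a minimal sketch rather than the notebook's actual code: the request keys (`prompt`, `n_predict`), the JSON response shape, and the endpoint name `my-llm-endpoint` are assumptions, so check notebooks/inference.ipynb for the schema the inference container really expects.

```python
import json


def build_payload(prompt: str, max_tokens: int = 128) -> bytes:
    # Assumed request schema; the real one is defined by the inference container.
    return json.dumps({"prompt": prompt, "n_predict": max_tokens}).encode("utf-8")


def invoke(client, endpoint_name: str, prompt: str, max_tokens: int = 128) -> dict:
    """Send one prompt to a SageMaker real-time endpoint and decode the JSON reply."""
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt, max_tokens),
    )
    # The response body is a stream; read it fully before parsing.
    return json.loads(response["Body"].read())


# Usage (needs AWS credentials that allow sagemaker:InvokeEndpoint):
#   import boto3
#   client = boto3.client("sagemaker-runtime")
#   print(invoke(client, "my-llm-endpoint", "What is AWS Graviton3?"))
```

Passing the client in as an argument keeps the function easy to exercise with a stub before pointing it at a live endpoint.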

| Concurrency | Total requests | Total time to complete all requests (sec) | Total number of generated tokens | Tokens/sec* |
|---|---|---|---|---|
| 1 | 1 | 3.582 | 128 | 35.73 |
| 4 | 4 | 11.446 | 512 | 44.73 |
| 8 | 8 | 20.916 | 1024 | 48.95 |
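The Tokens/sec column is the total number of generated tokens divided by the total wall-clock time. The sketch below recomputes it from the table rows and also derives a rough per-request rate. That per-request figure assumes all requests in a row ran fully in parallel and finished at about the same time, which the source does not state.

```python
# (concurrency, total seconds, generated tokens) copied from the table above
RESULTS = [(1, 3.582, 128), (4, 11.446, 512), (8, 20.916, 1024)]


def tokens_per_second(tokens: int, seconds: float) -> float:
    """Aggregate throughput: all generated tokens over total wall-clock time."""
    return tokens / seconds


baseline = tokens_per_second(RESULTS[0][2], RESULTS[0][1])
for concurrency, seconds, tokens in RESULTS:
    aggregate = tokens_per_second(tokens, seconds)
    # Assumption: requests overlap completely, so each one sees an equal share.
    per_request = aggregate / concurrency
    print(
        f"concurrency={concurrency}: {aggregate:.2f} tokens/sec aggregate, "
        f"{aggregate / baseline:.2f}x baseline, ~{per_request:.2f} tokens/sec per request"
    )
```

Read this way, aggregate throughput grows by roughly a third from one to eight concurrent requests, while the rate seen by each individual request drops considerably. The last row comes out slightly above the table's 48.95 when rounded to two decimals, which suggests the table truncates rather than rounds.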

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.