GenAI under the hood [Part 1] - Tokenizers and why you should care

This is the first post in the series "GenAI under the hood", diving into a basic concept: tokenizers! Read on to learn what they are, how you can create one for your own data, and how tokens connect to cost and performance.

Shreyas Subramanian

Amazon Employee

Published Apr 4, 2024

I'm sure you've lost a few minutes (hours?) wondering how language models like ChatGPT or Claude can understand and generate human-like text with such remarkable fluency and depth of knowledge. The answer lies in a fundamental concept called "tokenization" – the process of breaking down text into smaller, meaningful units called "tokens." These tokens serve as the building blocks that language models comprehend and manipulate to construct coherent and natural language.

Tokenization is akin to deconstructing a complex Lego structure into its individual bricks. Just as intricate Lego models are built from individual bricks, language models leverage tokens to construct meaningful and fluent text. These tokens represent the smallest meaningful units of text, such as words, subwords, or even individual characters, depending on the tokenization strategy employed.

Roughly, a tokenizer goes from ...

Sentences > sub-words > token-IDs

... based on a fixed vocabulary (file mapping sub-words to IDs)
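
To make that pipeline concrete, here is a minimal sketch that splits a sentence into sub-words and then looks up their IDs. The vocabulary and its IDs are made up for illustration, and the splitting rule is a simple greedy longest-match, which is not how BPE itself segments text.

```python
# Toy fixed vocabulary mapping sub-words to IDs (hypothetical values).
VOCAB = {"token": 0, "izer": 1, "s": 2, "matter": 3, " ": 4, "<unk>": 5}


def to_subwords(text, vocab):
    """Greedy longest-match split of text into sub-words found in vocab."""
    pieces, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking until one is in the vocabulary.
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                pieces.append(text[i:j])
                i = j
                break
        else:
            # Nothing matched: emit the unknown token for this one character.
            pieces.append("<unk>")
            i += 1
    return pieces


def to_ids(pieces, vocab):
    """Look up the ID of each sub-word."""
    return [vocab[piece] for piece in pieces]


subwords = to_subwords("tokenizers matter", VOCAB)
ids = to_ids(subwords, VOCAB)
print(subwords)  # ['token', 'izer', 's', ' ', 'matter']
print(ids)       # [0, 1, 2, 4, 3]
```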

But why is tokenization necessary in the first place? Imagine trying to build a complex Lego model without having access to individual bricks – it would be an impossible feat! Similarly, language models require text to be broken down into granular units, or tokens, to effectively interpret and manipulate it. By understanding patterns, relationships, and context at the token level, these models can generate human-like responses, translate between languages, summarize text, and perform a wide array of language-related tasks.

One of the most widely used tokenization techniques in modern language models is Byte Pair Encoding (BPE). BPE has several desirable properties that make it a popular choice:

- Reversibility and Losslessness: BPE allows for converting tokens back into the original text without any loss of information.

- Flexibility: BPE can handle arbitrary text, even if it's not part of the tokenizer's training data.

- Compression: BPE compresses the text, resulting in a token sequence that is typically shorter than the original byte representation.

- Subword Representation: BPE attempts to represent common subwords as individual tokens, allowing the model to better understand grammar and generalize to unseen text.
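
These properties are easier to see in a toy implementation. The sketch below is a simplified byte-level BPE written for illustration only (production tokenizers add pre-tokenization rules, special tokens, and much faster data structures). The corpus and the number of merges are arbitrary choices.

```python
from collections import Counter


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of pair in ids with new_id."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to num_merges merges; returns [((left_id, right_id), new_id), ...]."""
    ids = list(text.encode("utf-8"))  # start from raw bytes, IDs 0..255
    merges = []
    for _ in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:
            break  # nothing repeats, so another merge would not compress
        new_id = 256 + len(merges)
        merges.append((pair, new_id))
        ids = merge(ids, pair, new_id)
    return merges


def encode(text, merges):
    """Bytes first, then apply the merges in the order they were learned."""
    ids = list(text.encode("utf-8"))
    for pair, new_id in merges:
        ids = merge(ids, pair, new_id)
    return ids


def decode(ids, merges):
    """Expand every ID back to its bytes and decode as UTF-8."""
    table = {i: bytes([i]) for i in range(256)}
    for (left, right), new_id in merges:
        table[new_id] = table[left] + table[right]
    return b"".join(table[i] for i in ids).decode("utf-8")


merges = train_bpe("low lower lowest low low", num_merges=10)
sample = "lowest low 🍎"  # the emoji never appeared in the training text
ids = encode(sample, merges)
print(len(sample.encode("utf-8")), "bytes ->", len(ids), "tokens")
print(decode(ids, merges) == sample)  # True
```

The emoji falls back to its raw bytes (flexibility), decoding returns the exact input (reversibility), and the repeated "lo" sequences collapse into learned tokens (compression).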

So, how can you work with tokens in practice? Most modern natural language processing (NLP) libraries and frameworks, such as HuggingFace's Transformers, provide built-in tokenization utilities and pre-trained tokenizers for popular language models. These tools make it easier to tokenize text, convert tokens back to text, and even handle advanced tasks like padding, truncation, and batching.

For example, with HuggingFace's Transformers, you can load a pre-trained tokenizer for a specific language model like this:

Once you have the tokenizer loaded, you can tokenize text and convert it back to its original form:
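
A minimal sketch of both steps follows. The `gpt2` checkpoint is an assumption (any model name on the Hugging Face Hub should work the same way), and `load_tokenizer` and `roundtrip` are hypothetical helper names, not part of the library.

```python
def load_tokenizer(model_name="gpt2"):
    """Load a pre-trained tokenizer from the Hugging Face Hub.

    Needs the transformers package and network access on first use.
    """
    # Imported lazily so that roundtrip() below has no dependencies.
    from transformers import AutoTokenizer

    return AutoTokenizer.from_pretrained(model_name)


def roundtrip(tokenizer, text):
    """Return (token IDs, token strings, decoded text) for text."""
    ids = tokenizer.encode(text)
    tokens = tokenizer.convert_ids_to_tokens(ids)
    restored = tokenizer.decode(ids)
    return ids, tokens, restored
```

Calling `roundtrip(load_tokenizer(), "Tokenizers are fun!")` returns the IDs, the sub-word strings behind them, and the decoded text, which should match the input for typical text with a GPT-2 style tokenizer.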

Understanding tokenization is crucial for anyone working with generative AI applications, as it directly impacts the performance, reliability, and capabilities of language models. Here are some reasons why tokens matter:

- Performance Optimization: Different tokenization strategies can affect model size, training time, and inference speed, all of which are critical factors in real-world applications.

- Multilingual Support: If your application needs to handle multiple languages, tokenization becomes even more critical, as different languages have unique characteristics and challenges.

- Domain-Specific Optimization: In specialized domains like medicine or law, where terminology and jargon play a crucial role, tailored tokenization techniques can improve the model's understanding and generation capabilities.

- Interpretability and Explainability: By understanding tokenization, you can gain insights into the inner workings of language models, aiding in debugging, model analysis, and ensuring trustworthiness.

- Cost Optimization: Optimizing tokenization can potentially reduce computational resources and lead to significant cost savings, especially in large-scale deployments.

TL;DR: tokens matter pretty much everywhere.

Andrej Karpathy highlighted several scenarios where tokenization can significantly impact the behavior and performance of language models, such as:

- Why can't language models spell words correctly? Tokenization.

- Why can't they perform simple string processing tasks like reversing a string? Tokenization.

- Why do they struggle with non-English languages like Japanese? Tokenization.

- Why are they bad at simple arithmetic? Tokenization.

- Why did GPT-2 have trouble coding in Python? Tokenization.

Let me add some more ...

- Why do LLM-based agent runs break sometimes? Tokenization.

- Why do critical numbers, code, or keywords sometimes get mangled during processing or extraction with LLMs? Tokenization.

- Why do models fail to understand complex logical operations or reasoning? Tokenization.

- Why do language models sometimes generate biased or offensive content? Tokenization.

- Why do models have difficulty with domain-specific jargon or technical terminology? Tokenization.

- Why do language models struggle with tasks that require spatial or visual reasoning? Tokenization.

- Why do models sometimes fail to maintain consistency or coherence in generated text? Tokenization.

- Why do language models have trouble understanding ambiguous or idiomatic language? Tokenization.

- Why do models sometimes generate text that violates grammatical or stylistic rules? Tokenization.

So, should you train your own tokenizer? In short, yes: you should strongly consider it, especially if you're working with specialized domains or large language models (LLMs) for mission-critical applications. Training a domain-specific tokenizer can significantly improve the performance, reliability, and cost-effectiveness of your language models.

As covered earlier, tokenization plays a pivotal role in how LLMs understand and generate human-like text. Pre-trained tokenizers, while generally effective for many use cases, may not be optimized for the unique linguistic characteristics and terminology of specialized domains like medicine, finance, or law. By training a tokenizer on domain-specific data, you can ensure that your LLM accurately captures and understands the nuances of the language in your field.

For instance, in the medical domain, where terminology is highly specialized and often involves complex compound words, a custom tokenizer can more effectively segment and interpret these terms, leading to improved model performance and accuracy. Similarly, in finance, where specific jargon, numerical data, and unique compound words are common, a domain-specific tokenizer can accurately parse and interpret such language, enhancing the LLM's understanding and generation capabilities in these contexts.

Training your own tokenizer can also help mitigate issues like spelling errors, string processing problems, arithmetic challenges, and even biased or offensive content generation, all of which can stem from suboptimal tokenization strategies.

Training a tokenizer is not very difficult, it turns out. Imagine you were dealing with a custom dataset that had the following tweets:

- "Just bought some $AAPL stocks. Feeling optimistic!"

- "Earnings report for $NFLX is out! Expecting a surge in prices."

- "Discussing potential mergers in the tech industry. $MSFT $GOOGL $AAPL"

- "Great analysis on $TSLA by @elonmusk. Exciting times ahead!"

- "Watching closely: $AMZN's impact on the e-commerce sector."

These tweets use symbols like '$' for stock tickers and '@' for mentioning companies and analysts. Typically, out-of-the-box tokenizers will not treat $AAPL or @elonmusk as a single token, even though doing so could be useful.

You can find a similar stock tweets dataset on the Hugging Face Hub if you are interested.

That dataset contains about a million stock related tweets.

To prepare for training, we need to create a function to generate batches of examples, each containing a thousand tweets:
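
A minimal sketch of such a generator, assuming the tweets are already available as a list of strings (with a Hugging Face dataset you would pass the text column instead; the name of that column depends on the dataset):

```python
def batch_iterator(texts, batch_size=1000):
    """Yield successive lists of at most batch_size texts."""
    for start in range(0, len(texts), batch_size):
        yield texts[start:start + batch_size]


# Stand-in data so the sketch runs anywhere.
tweets = [f"tweet number {i} about $AAPL" for i in range(2500)]
batch_sizes = [len(batch) for batch in batch_iterator(tweets)]
print(batch_sizes)  # [1000, 1000, 500]
```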

Next, we'll select a tokenizer suited for a basic GPT2 model:

With the base tokenizer selected, we can train the new one, setting the vocabulary size to 64,000:
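
A sketch of these two steps, assuming the `gpt2` checkpoint as the base and the `train_new_from_iterator` method that fast Hugging Face tokenizers provide. The helper names are hypothetical.

```python
def select_base_tokenizer(model_name="gpt2"):
    """Load the tokenizer whose algorithm and settings the new one will reuse.

    Needs the transformers package and network access on first use.
    """
    from transformers import AutoTokenizer  # lazy import: only needed for this step

    return AutoTokenizer.from_pretrained(model_name)


def train_new_tokenizer(base_tokenizer, text_batches, vocab_size=64_000):
    """Train a fresh vocabulary on text_batches, keeping the base tokenizer's pipeline.

    text_batches is any iterator that yields lists of strings.
    """
    return base_tokenizer.train_new_from_iterator(text_batches, vocab_size=vocab_size)
```

In practice you would call `train_new_tokenizer(select_base_tokenizer(), batches)` with an iterator over batches of tweets, then keep the result with `save_pretrained` so it can be reloaded later.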

Training with almost a million examples completes in approximately two minutes on a g5.4xlarge instance on AWS. Try it on SageMaker Studio.

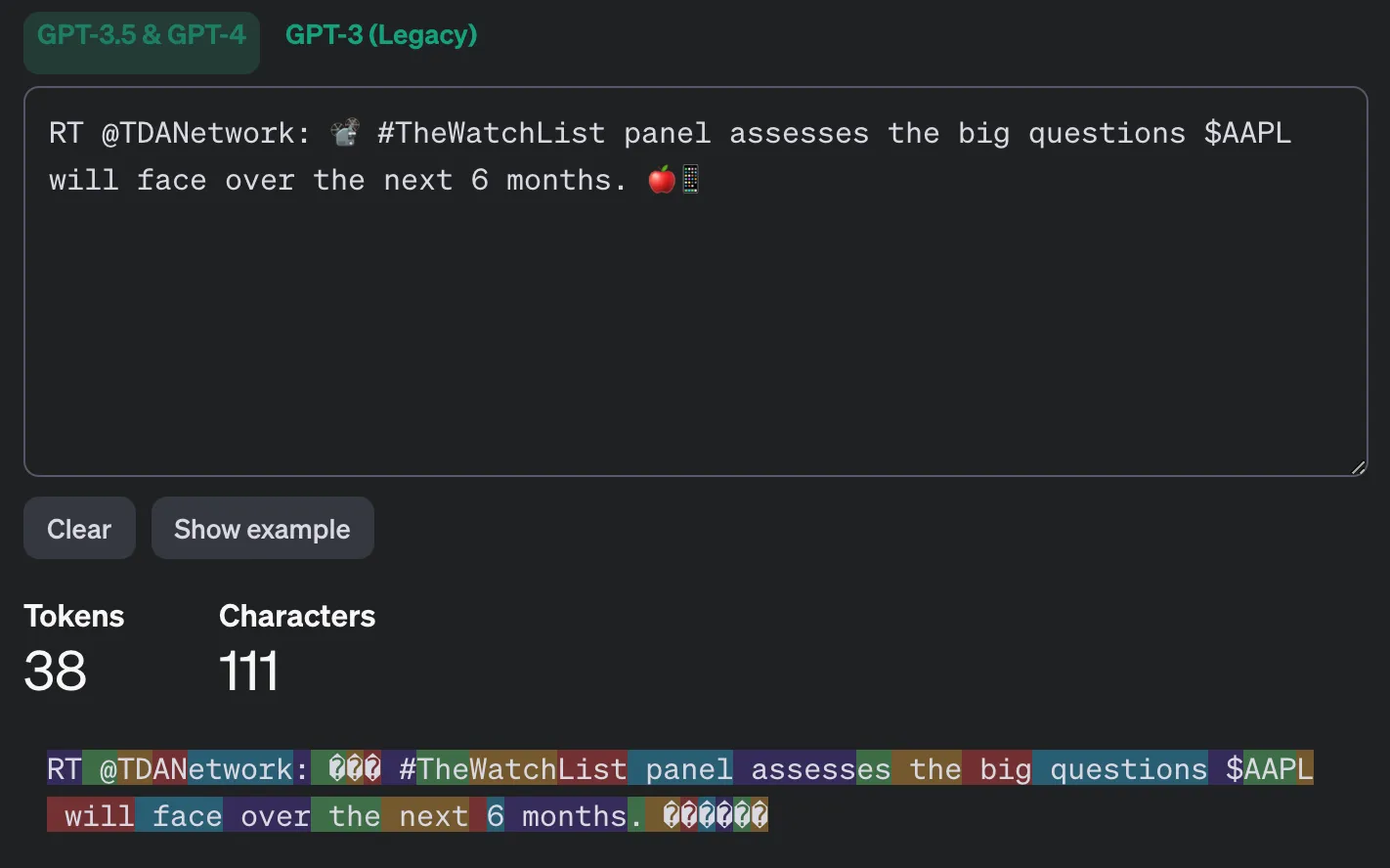

Now, let's compare the effectiveness of our new tokenizer with its predecessor. We'll examine a sample tweet that includes references to TDA Network, stock hashtags, and emojis:

Here's an output of tokens recognized by GPT4:

| Old Tokenizer tokens | Trained new tokenizer tokens |
|---|---|
| RT | RT |
| @ | @ |
| TD, AN, etwork | TDANetwork |
| : | : |
| # | # |
| The, Watch, List | TheWatchList |
| panel | panel |
| assesses, es | assess, es |
| the | the |
| big | big |
| questions | questions |
| $, AAP, L | $AAPL |
| will | will |
| face | face |
| over | over |
| the | the |
| next | next |
| six | six |
| months | months |
| ?????? | 🍎📱 |

Which do you like better? Which one would work better in training/inference?
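
One rough way to approach that question is to count how many tokens each tokenizer needs for the same text, since fewer tokens means shorter sequences to train on and pay for. The helper below is a hypothetical sketch. It uses two toy tokenizers so that it runs anywhere; with Hugging Face tokenizers you could pass each tokenizer's `tokenize` method instead.

```python
def compare_tokenizations(text, tokenizers):
    """Map each tokenizer name to (token list, token count) for text."""
    report = {}
    for name, tokenize in tokenizers.items():
        tokens = tokenize(text)
        report[name] = (tokens, len(tokens))
    return report


# Toy stand-ins: whitespace-separated words versus single characters.
report = compare_tokenizations(
    "$AAPL will face big questions",
    {"words": str.split, "chars": list},
)
for name, (tokens, count) in report.items():
    print(f"{name}: {count} tokens")
```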

Optimizing token usage can lead to significant cost savings, especially when dealing with high-volume applications or long-form text generation tasks. Here are some strategies to improve token efficiency:

- Prompt Engineering: Carefully crafting prompts to minimize unnecessary tokens can reduce the overall token count without sacrificing performance.

- Tokenization Strategies: Exploring alternative tokenization techniques, such as SentencePiece or WordPiece, may yield better compression and reduce token counts.

- Token Recycling: Instead of generating text from scratch, reusing or recycling tokens from previous generations can reduce the overall token count and associated costs.

- Model Compression: Techniques like quantization, pruning, and distillation can reduce the model size, leading to more efficient token processing and lower inference costs.
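
To see why token counts matter for cost, here is a back-of-the-envelope sketch. The prices, token counts, and request volume are hypothetical numbers chosen for illustration, not any provider's actual pricing.

```python
def estimate_cost(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k):
    """Cost in dollars for one request, given prices per 1,000 tokens."""
    return (input_tokens / 1000 * price_in_per_1k
            + output_tokens / 1000 * price_out_per_1k)


# Hypothetical prices (dollars per 1,000 tokens) and request sizes.
PRICE_IN, PRICE_OUT = 0.003, 0.015
verbose_prompt = estimate_cost(1200, 300, PRICE_IN, PRICE_OUT)
trimmed_prompt = estimate_cost(800, 300, PRICE_IN, PRICE_OUT)

requests_per_day = 1_000_000
daily_saving = (verbose_prompt - trimmed_prompt) * requests_per_day
print(f"Estimated daily saving: ${daily_saving:,.2f}")  # Estimated daily saving: $1,200.00
```

Under these assumptions, trimming 400 input tokens per request is negligible for a single call but adds up quickly at a million requests per day.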

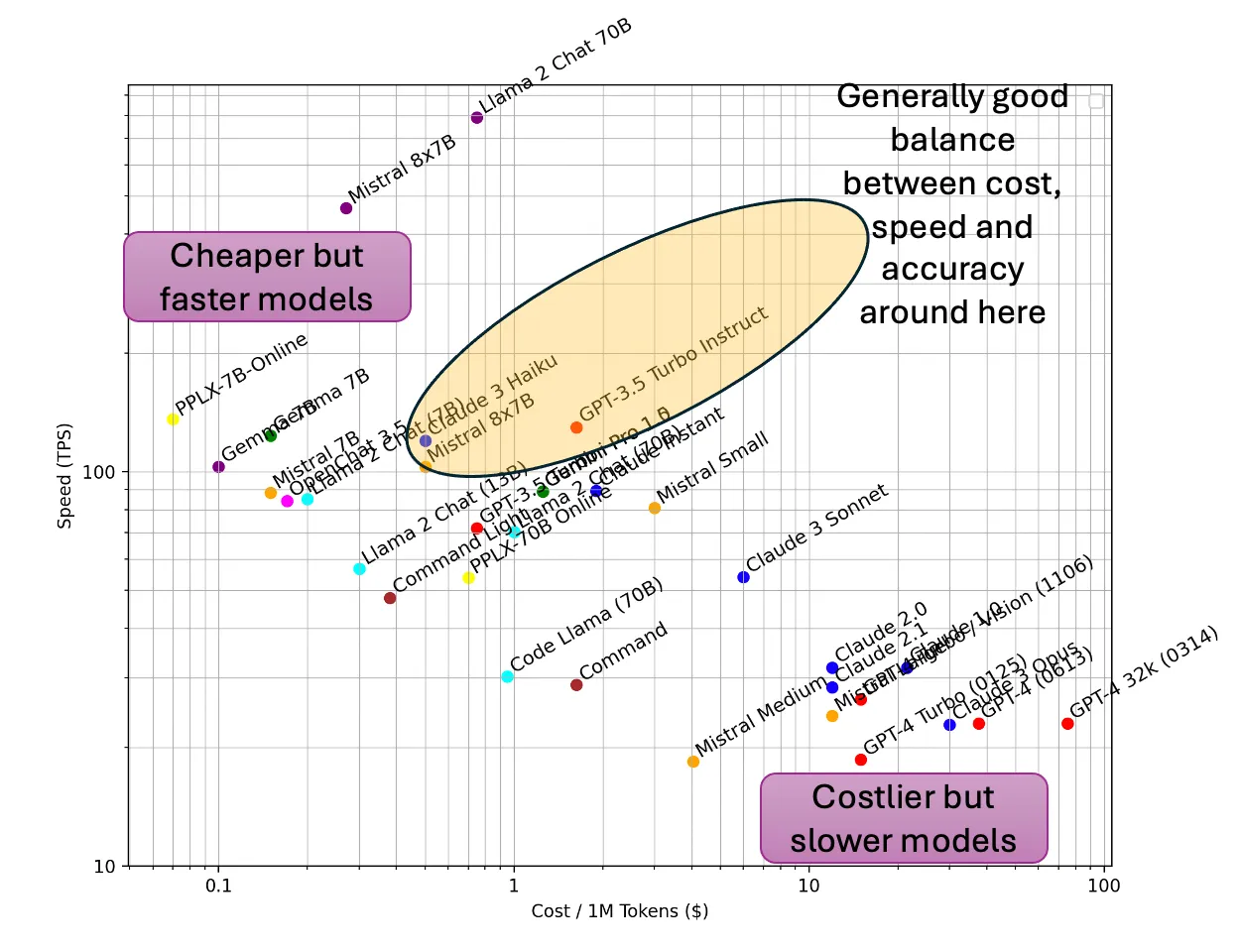

A critical factor in assessing the cost and performance of large language models (LLMs) is the tokens per second (TPS) metric. TPS measures the rate at which an LLM can generate or process tokens during inference. Higher TPS values generally translate to faster response times and the ability to handle higher throughput workloads. We would obviously like a super fast model that is great at every task and costs almost nothing. But physics and profitable businesses may disagree.

When evaluating LLM providers or self-hosted solutions, benchmarking TPS is crucial. This metric can vary significantly depending on factors such as model architecture, hardware accelerators (GPUs/TPUs), and optimizations like batching and parallel processing.
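
A simple benchmark can be sketched as follows. The `generate` callable is a placeholder for whatever client or model call you are measuring, and `fake_generate` is a stand-in so the example runs without a model.

```python
import time


def measure_tps(generate, prompt, runs=3):
    """Average generated tokens per second over several calls to generate(prompt)."""
    total_tokens, total_seconds = 0, 0.0
    for _ in range(runs):
        start = time.perf_counter()
        tokens = generate(prompt)  # must return the list of generated tokens
        total_seconds += time.perf_counter() - start
        total_tokens += len(tokens)
    return total_tokens / total_seconds if total_seconds > 0 else float("inf")


def fake_generate(prompt):
    """Stand-in for a model call: sleeps briefly and returns 50 dummy tokens."""
    time.sleep(0.01)
    return ["tok"] * 50


tps = measure_tps(fake_generate, "hello")
print(f"{tps:.0f} tokens/second")
```

A real benchmark would also vary prompt length, output length, and concurrency, since each of those can change the measured TPS.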

Improving TPS can directly impact the cost-effectiveness of LLM-based applications. By increasing the TPS, fewer computational resources (e.g., GPU instances) are required to handle a given workload, resulting in reduced operational costs.
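
The arithmetic behind that claim is straightforward. In the sketch below, the workload, the per-instance TPS figures, and the hourly price are all hypothetical.

```python
import math


def instances_needed(required_tps, tps_per_instance):
    """Smallest number of instances that can sustain the required throughput."""
    return math.ceil(required_tps / tps_per_instance)


def hourly_cost(required_tps, tps_per_instance, price_per_instance_hour):
    """Hourly cost of the fleet needed for the workload."""
    return instances_needed(required_tps, tps_per_instance) * price_per_instance_hour


WORKLOAD_TPS = 10_000  # hypothetical aggregate demand
PRICE = 2.0            # hypothetical dollars per instance-hour

before = hourly_cost(WORKLOAD_TPS, 400, PRICE)  # 25 instances
after = hourly_cost(WORKLOAD_TPS, 650, PRICE)   # 16 instances
print(before, after)  # 50.0 32.0
```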

Optimizations like dynamic batching, where multiple requests are combined and processed together, can significantly boost TPS. Additionally, techniques like model parallelism and tensor parallelism can distribute the computational load across multiple hardware accelerators, further increasing the overall TPS.

A visualization of TPS versus cost shows that you pay for the performance you need. A third but important dimension, accuracy at tasks, is not shown. Costlier but slower (and likely better) models are in the bottom right, cheaper but much faster models are on the top left, and a decent balance exists in the middle.

In summary, tokenization plays a pivotal role in the reliability and performance of generative AI applications. By understanding the intricacies of tokens, token efficiency, and TPS, organizations can unlock the full potential of large language models while maintaining a favorable cost-performance balance.

In the next few posts in this series "GenAI under the hood", we will dive deeper into the recent advancements around the architecture of foundation models. Stay tuned!

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.