Lambda Monitoring Made Simple: Observe, Learn, and Optimize

Analyzing the performance of your AWS Lambda functions is not just a best practice; it's a necessity if you want your applications to run smoothly while staying cost-efficient and scalable.

Published Apr 10, 2024

Last Modified Apr 25, 2024

Why should you, as a developer or a business owner, invest your time in reading this article? The answer lies in the benefits that efficient performance monitoring and analysis provide. By gaining insights into your Lambda functions, you can identify bottlenecks, optimize resource allocation, reduce costs, and enhance the overall user experience. This article is a guide through the complexities of AWS Lambda, offering practical advice drawn from the strategies I followed to improve my own application.

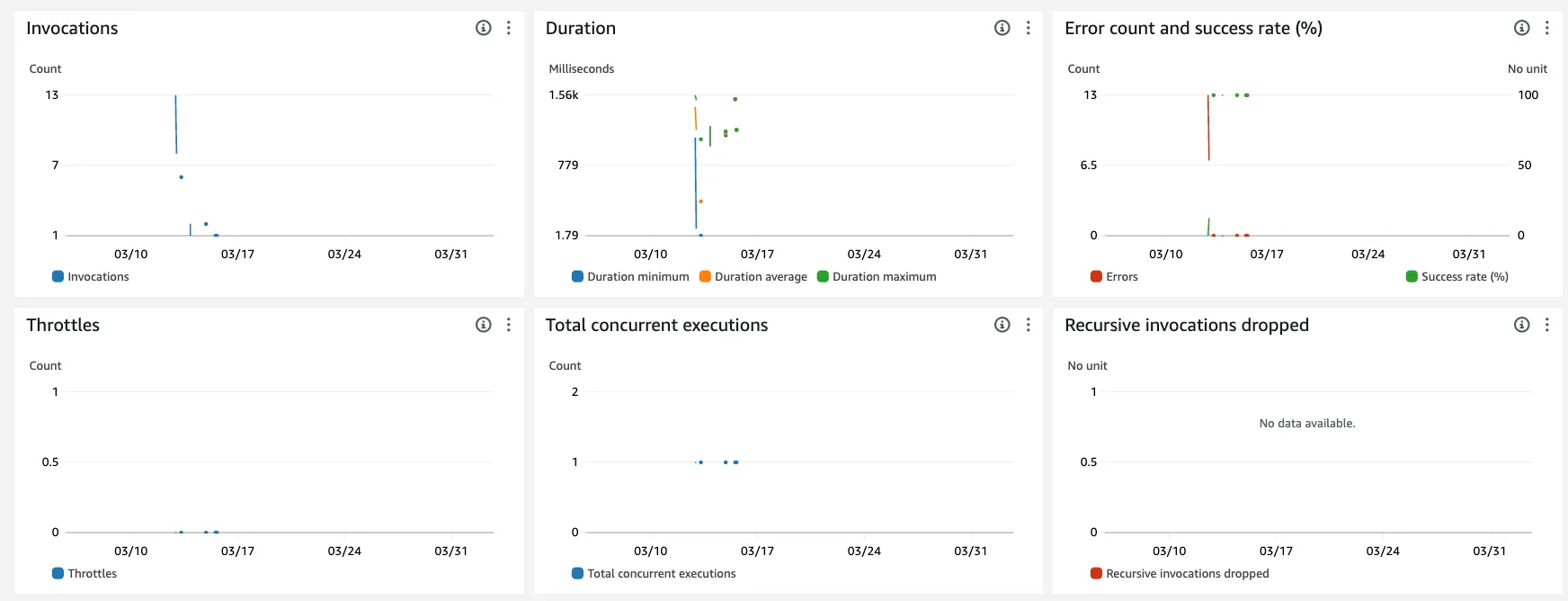

- Invocations: This metric counts the number of times your Lambda function is invoked in response to an event or a direct call. It's a primary indicator of how often your function is used.

- Errors: Tracks the number of invocations that resulted in a function error. Monitoring this metric helps in identifying the stability and reliability of your function.

- Dead Letter Queue Errors: Counts the failed attempts to place an event on a dead-letter queue. This metric is vital for ensuring that your system gracefully handles invocation errors.

- Duration: Measures the time your Lambda function spends processing an event. Billing is based on duration rounded up to the nearest 1 ms, so this metric is a direct cost factor.

- Throttles: Represents the number of Lambda function invocation attempts that were throttled due to reaching the concurrent execution limit. This metric is crucial for understanding and managing the scaling behavior of your functions.

- Concurrent Executions: This metric provides the number of function instances that are running simultaneously. It's a key indicator of the scalability and parallel processing power of your Lambda setup.

Understanding these metrics is the first step in performance analysis. AWS provides tools like Amazon CloudWatch and AWS X-Ray to monitor these metrics, offering a comprehensive view of your Lambda functions' performance.

- Amazon CloudWatch allows you to collect and track metrics, set alarms, and automatically react to changes in your AWS resources.

- AWS X-Ray, on the other hand, provides an end-to-end view of requests as they travel through your application, helping you analyze and debug distributed applications, such as those built using a microservices architecture.
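The metrics listed above are published in the `AWS/Lambda` CloudWatch namespace, so they can be pulled programmatically. The following is a minimal sketch, assuming a boto3 CloudWatch client is passed in (for example `boto3.client("cloudwatch")`). The helper names `build_metric_queries` and `fetch_last_hour`, the five-minute period, and the choice of statistic per metric are illustrative assumptions, not a prescribed setup.

```python
from datetime import datetime, timedelta, timezone

# Standard metrics in the AWS/Lambda namespace, paired with the statistic
# that is usually most meaningful for each (an assumption; adjust as needed).
LAMBDA_METRICS = {
    "Invocations": "Sum",
    "Errors": "Sum",
    "DeadLetterErrors": "Sum",
    "Duration": "Average",
    "Throttles": "Sum",
    "ConcurrentExecutions": "Maximum",
}


def build_metric_queries(function_name, period_seconds=300):
    """Build the MetricDataQueries list for CloudWatch GetMetricData."""
    queries = []
    for metric_name, stat in LAMBDA_METRICS.items():
        queries.append({
            # Query ids must start with a lowercase letter.
            "Id": metric_name.lower(),
            "MetricStat": {
                "Metric": {
                    "Namespace": "AWS/Lambda",
                    "MetricName": metric_name,
                    "Dimensions": [
                        {"Name": "FunctionName", "Value": function_name}
                    ],
                },
                "Period": period_seconds,
                "Stat": stat,
            },
            "ReturnData": True,
        })
    return queries


def fetch_last_hour(cloudwatch, function_name):
    """Fetch the last hour of metrics using a boto3 CloudWatch client."""
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_data(
        MetricDataQueries=build_metric_queries(function_name),
        StartTime=end - timedelta(hours=1),
        EndTime=end,
    )
    # Map each query id to its list of datapoint values.
    return {r["Id"]: r["Values"] for r in response["MetricDataResults"]}
```

Keeping the query builder separate from the API call makes it easy to inspect or unit-test the request without touching AWS.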

Benchmarking Lambda Performance

- Identify Key Performance Indicators (KPIs): Start by identifying which metrics are most critical to your Lambda functions. These might include invocation count, error rate, duration, and memory usage.

- Record Baseline Metrics: Before making any changes or optimizations, record the current performance metrics of your function. This provides a point of comparison for future changes.

- Consider the Function's Purpose: The expected performance can vary significantly depending on what the function is doing. A function interacting with a database might have different performance expectations compared to one processing a simple computation.

- Account for Environmental Factors: Performance can be influenced by factors such as network latency, third-party service response times, and AWS regional performance. Ensure these factors are considered when setting benchmarks.

- Compare Like-for-Like: When comparing performance metrics over time or against other functions, keep the conditions as similar as possible. Variations in factors like payload size, external API response times, and function configuration can skew your comparisons.

- Regular Reviews and Updates: Regularly review and update your benchmarks. As your application changes, your benchmarks should change with it, so that they remain relevant and useful for ongoing performance analysis.
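The baseline and comparison steps above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not a standard tool: the KPI set (error rate, p50, p95), the helper names, and the 10% tolerance are all choices made for the example, and the durations are expected to come from your own measurements.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(durations_ms, error_count):
    """Condense one measurement window into a few benchmark KPIs."""
    return {
        "invocations": len(durations_ms),
        "error_rate": error_count / len(durations_ms),
        "p50_ms": statistics.median(durations_ms),
        "p95_ms": percentile(durations_ms, 95),
    }


def regressions(baseline, current, tolerance=0.10):
    """Return KPIs that are worse than baseline by more than `tolerance`.

    Each entry maps the KPI name to a (baseline, current) pair. A baseline
    of zero (for example, no errors) flags any value above zero.
    """
    worse = {}
    for key in ("error_rate", "p50_ms", "p95_ms"):
        allowed = baseline[key] * (1 + tolerance)
        if current[key] > allowed:
            worse[key] = (baseline[key], current[key])
    return worse
```

Record `summarize(...)` once before a change, run it again afterwards under similar load and payloads, and pass both results to `regressions(...)` to see which KPIs moved beyond the tolerance.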

Once you've established benchmarks and gathered performance data, the next step is to analyze this data to understand how your Lambda functions are performing and identify areas for improvement. Effective analysis can help you tackle issues that may be affecting performance and guide you in optimizing your functions. Here's how to approach this:

- Understanding Trends: Look for trends in your metrics over time. Sudden spikes in duration, error rates, or throttle counts can indicate underlying issues that need attention.

- Correlation Analysis: Sometimes, the cause of an issue isn't straightforward. Correlating different metrics, such as longer durations with higher error rates, can help you understand more complex issues.

- Trace Analysis: AWS X-Ray provides trace data that shows the path of a request through your application. This can help you identify bottlenecks, such as slow database queries or inefficient Lambda function code.

- Service Map: X-Ray's service map visually presents your application's components and how they're interconnected. This can be invaluable for spotting performance bottlenecks in a microservice architecture.

- Cold Starts: Lambda functions may take longer to initialize after being idle, which is known as a cold start. Analyzing invocation patterns can help you identify whether cold starts are affecting performance.

- Resource Limitations: Lambda functions have limits, like memory and execution time. Analyzing your function's resource usage against these limits can highlight the need for adjustments.
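Cold starts and memory headroom can both be read from the `REPORT` line that Lambda writes to CloudWatch Logs at the end of each invocation; the `Init Duration` field only appears when the invocation included a cold start. The parser below is a rough sketch: the function names and the 90% memory warning ratio are assumptions chosen for illustration, and the regular expression only covers the common fields of the report line.

```python
import re

# Matches the common fields of a Lambda REPORT log line. "Init Duration"
# is optional because it is only present on cold starts.
REPORT_RE = re.compile(
    r"REPORT RequestId: (?P<request_id>\S+)\s+"
    r"Duration: (?P<duration_ms>[\d.]+) ms\s+"
    r"Billed Duration: (?P<billed_ms>\d+) ms\s+"
    r"Memory Size: (?P<memory_mb>\d+) MB\s+"
    r"Max Memory Used: (?P<max_used_mb>\d+) MB"
    r"(?:\s+Init Duration: (?P<init_ms>[\d.]+) ms)?"
)


def parse_report(line):
    """Parse one REPORT line into a dict, or return None if it doesn't match."""
    match = REPORT_RE.search(line)
    if match is None:
        return None
    init_ms = match.group("init_ms")
    return {
        "request_id": match.group("request_id"),
        "duration_ms": float(match.group("duration_ms")),
        "memory_mb": int(match.group("memory_mb")),
        "max_used_mb": int(match.group("max_used_mb")),
        "cold_start": init_ms is not None,
        "init_ms": float(init_ms) if init_ms is not None else 0.0,
    }


def analyze(lines, memory_warn_ratio=0.9):
    """Summarize cold-start share and invocations close to the memory limit."""
    reports = [r for r in map(parse_report, lines) if r is not None]
    cold = [r for r in reports if r["cold_start"]]
    near_limit = [
        r["request_id"]
        for r in reports
        if r["max_used_mb"] >= memory_warn_ratio * r["memory_mb"]
    ]
    return {
        "invocations": len(reports),
        "cold_start_ratio": len(cold) / len(reports) if reports else 0.0,
        "near_memory_limit": near_limit,
    }
```

A high cold-start ratio on a latency-sensitive function, or request IDs that keep showing up in `near_memory_limit`, are the kind of signals that point to a configuration change.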

Regularly compare current performance against your established benchmarks. This not only helps in measuring improvement but also in catching any regressions promptly.
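For the trend and correlation checks described in this section, a simple starting point is a rolling spike detector plus a Pearson correlation between two metric series. This is a sketch under assumptions: the window size, the three-standard-deviation threshold, and the function names are illustrative, and the input lists are expected to be equally spaced datapoints that you have already exported from CloudWatch.

```python
import math
import statistics


def find_spikes(values, window=5, threshold=3.0):
    """Return indexes whose value sits more than `threshold` standard
    deviations above the mean of the previous `window` datapoints."""
    spikes = []
    for i in range(window, len(values)):
        recent = values[i - window:i]
        mean = statistics.fmean(recent)
        stdev = statistics.pstdev(recent)
        if stdev > 0 and (values[i] - mean) / stdev > threshold:
            spikes.append(i)
    return spikes


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long series."""
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series has no defined correlation
    return cov / math.sqrt(var_x * var_y)
```

A coefficient close to 1 between, say, average duration and error count suggests the two problems share a cause (timeouts are a common one), but correlation alone does not prove it; traces are still needed to confirm.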

After analyzing your AWS Lambda performance data and identifying potential bottlenecks, the next step is to implement strategies to improve performance. Optimizing your Lambda functions not only enhances the user experience but can also lead to significant cost savings. The following tips are the ones I use regularly:

- Efficient Code: Write clean, efficient code. Avoid unnecessary loops, and make sure that your code is as streamlined as possible. Keep an eye on this during your code review process!

- Dependency Management: Minimize the number and size of your function's dependencies. Larger deployment packages can increase the time it takes for your Lambda function to start executing.

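- Right-size Memory Allocation: Allocate enough memory for your function to perform its task without overprovisioning. Lambda allocates CPU in proportion to memory, so more memory usually means faster execution, but also a higher price per millisecond. The best setting is the one where the shorter duration offsets the higher rate.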

- Execution Time: Reduce your function's execution time by optimizing your code and resources. Remember that AWS bills you for the time your code executes.

- Minimize Cold Starts: Use strategies such as keeping your functions warm with Provisioned Concurrency to minimize cold starts, especially for functions where latency is a concern.

- Connection Management: Reuse database connections across multiple invocations, instead of creating a new connection for each invocation. This reduces latency and resource utilization.

- Step Functions: For complex workflows, consider using AWS Step Functions to manage the orchestration of your Lambda functions.

- Lambda Layers: Use Lambda Layers for managing your code dependencies more efficiently.

- Continuous Monitoring: Keep monitoring your Lambda function's performance even after making optimizations. Use the insights gained to make further adjustments.

- Iterative Improvement: Performance tuning is an ongoing process. Regularly update your functions based on the latest best practices and your evolving application needs.
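The memory right-sizing trade-off can be made concrete with a little arithmetic: compute cost is memory (in GB) multiplied by billed duration (in seconds) multiplied by the price per GB-second. The sketch below assumes a price of $0.0000166667 per GB-second, which is only a placeholder; check the current Lambda pricing for your region and architecture. It also ignores the per-request charge, and the durations are hypothetical measurements you would collect yourself.

```python
import math

# ASSUMPTION: placeholder on-demand price per GB-second. Look up the current
# value for your region and architecture before relying on the numbers.
ASSUMED_PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb, duration_ms,
                    price_per_gb_second=ASSUMED_PRICE_PER_GB_SECOND):
    """Compute cost of one invocation (per-request charge not included)."""
    billed_ms = math.ceil(duration_ms)  # duration is rounded up to 1 ms
    gb_seconds = (memory_mb / 1024) * (billed_ms / 1000)
    return gb_seconds * price_per_gb_second


def cheapest_setting(measurements):
    """Pick the cheapest memory size from {memory_mb: avg_duration_ms}."""
    costs = {mem: invocation_cost(mem, dur) for mem, dur in measurements.items()}
    best = min(costs, key=costs.get)
    return best, costs[best]
```

With hypothetical measurements such as `{128: 1000, 512: 200, 1024: 150}`, the 512 MB setting comes out cheapest: it uses 0.1 GB-seconds per invocation versus 0.125 at 128 MB and 0.15 at 1024 MB, which shows that the smallest memory size is not automatically the least expensive.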

By implementing these strategies, I was able to improve the performance, efficiency, and cost-effectiveness of my AWS Lambda functions.
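The connection reuse tip relies on the fact that objects created outside the handler stay alive for as long as the execution environment stays warm. Here is a minimal sketch of that pattern. The in-memory `sqlite3` database is only a stand-in for a real database client, and the `hits` table and the `request_id` event field are hypothetical names used for illustration.

```python
import sqlite3

# Module-level state is created once per execution environment and is
# reused by every invocation that lands on that warm environment.
_connection = None


def get_connection():
    """Create the connection lazily, then return the same object each time."""
    global _connection
    if _connection is None:
        # ASSUMPTION: sqlite3 in-memory database as a stand-in for a real
        # database client such as a PostgreSQL or MySQL driver.
        _connection = sqlite3.connect(":memory:")
        _connection.execute(
            "CREATE TABLE IF NOT EXISTS hits (request_id TEXT)"
        )
    return _connection


def handler(event, context):
    """Lambda-style handler that reuses the shared connection."""
    conn = get_connection()
    conn.execute(
        "INSERT INTO hits (request_id) VALUES (?)", (event["request_id"],)
    )
    conn.commit()
    (count,) = conn.execute("SELECT COUNT(*) FROM hits").fetchone()
    return {"hits": count}
```

Creating the connection lazily, rather than at import time, keeps the cold start shorter for code paths that never touch the database. With a real database you would also want to handle connections that were dropped while the environment was idle.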

Consistent and proactive monitoring is key to maintaining the performance of your AWS Lambda functions. Automation can significantly help in this process, ensuring that you're always aware of how your functions are performing and are alerted to potential issues before they escalate. How?

- CloudWatch Alarms: Set up CloudWatch alarms to notify you when specific metrics exceed or fall below your defined thresholds. For instance, you can create an alarm for high error rates or longer-than-usual execution times. Consider defining these alarms per environment and routing each one to the right point of contact for that environment.

- Real-time Monitoring: Use CloudWatch Dashboards to create real-time visualizations of your metrics, helping you quickly understand the current state of your Lambda functions.

- Detailed Logging: Ensure that your Lambda functions are logging relevant information. Use CloudWatch Logs Insights to run queries on your log data and gain deeper insights into the function's execution behavior.

- Pattern Recognition: Set up metric filters to look for specific patterns in your logs, such as error codes or performance bottlenecks, and trigger alerts based on these patterns.

- Automated Traces: Enable AWS X-Ray for your Lambda functions to automatically trace requests as they travel through your application. This helps in identifying performance bottlenecks and tackling the root cause of issues.

- Analysis and Visualization: Use the X-Ray service map and trace analytics to visualize and analyze the performance of your application components.

- Automated Response: Use AWS Lambda functions in response to CloudWatch alarms to automatically take corrective actions, such as modifying configurations or restarting functions.

- Notification Integration: Integrate with Amazon Simple Notification Service (SNS) to receive notifications via email, SMS, or other supported channels when specific events occur. In my case, I also integrated Slack so the team could respond faster.
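As an illustration of the per-environment alarms mentioned above, the sketch below builds the arguments for CloudWatch `PutMetricAlarm` on the Lambda `Errors` metric and sends notifications to an SNS topic. It assumes a boto3 CloudWatch client is passed in (for example `boto3.client("cloudwatch")`). The alarm naming scheme, the threshold of five errors per five minutes, and the `topics_by_env` mapping are hypothetical choices, not values from my own setup.

```python
def error_alarm_params(function_name, environment, sns_topic_arn, threshold=5):
    """Keyword arguments for CloudWatch PutMetricAlarm on Lambda errors."""
    return {
        "AlarmName": f"{environment}-{function_name}-errors",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",
        "Period": 300,              # evaluate over five-minute windows
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No invocations means no datapoints; treat that as healthy.
        "TreatMissingData": "notBreaching",
        # The SNS topic decides who is notified (email, SMS, chat, ...).
        "AlarmActions": [sns_topic_arn],
    }


def create_alarms(cloudwatch, function_name, topics_by_env):
    """Create one error alarm per environment, each with its own contact."""
    for environment, topic_arn in topics_by_env.items():
        cloudwatch.put_metric_alarm(
            **error_alarm_params(function_name, environment, topic_arn)
        )
```

Mapping each environment to its own SNS topic keeps production pages away from development noise. The same pattern extends to other metrics, such as `Duration` or `Throttles`, by changing `MetricName`, `Statistic`, and `Threshold`.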

As serverless architectures continue to evolve, so too will the tools and practices surrounding AWS Lambda. It is crucial to stay informed and adaptable. The journey toward optimal serverless performance is ongoing, and these are the strategies I have identified, applied, and found effective. This is why I want to share them. However, there may be more strategies out there, which is why it's important to keep monitoring and applying what works best for your specific scenario.