Coding Perspective: PartyRock App Development with Amazon Bedrock APIs

An alternative approach to building generative AI applications with Amazon Bedrock: architectural considerations and model selection.

- An AWS account with access to Amazon Bedrock.

- To use Amazon Bedrock, you must request access to Bedrock's foundation models (FMs) and configure an IAM policy that allows calls to the Bedrock APIs. For some Anthropic models, you may also have to submit your use case before access is granted.

- For experimenting with this use case, I chose the Amazon Titan Embeddings G1 - Text and Anthropic Claude foundation models.

- I ran my code in the us-west-2 (Oregon) Region and used Amazon SageMaker Studio for simplicity.

- Python is required to call the Bedrock APIs from code.

- Install the AWS SDK for Python (Boto3): the boto3 and botocore packages.

- Supporting libraries: FAISS (Facebook AI Similarity Search), for vector similarity search, and LangChain, a framework for developing applications powered by language models.
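Before wiring up an application, it can help to see which foundation models Bedrock offers in your Region. The sketch below assumes Boto3 is installed and that credentials are available (for example, through the SageMaker Studio execution role). It uses the `bedrock` control-plane client's `list_foundation_models` call. Note that this lists the models offered in the Region, not necessarily the ones your account has been granted access to, so a successful `invoke_model` call remains the real test. The function names `model_ids_by_provider` and `list_models` are hypothetical.

```python
from collections import defaultdict

REGION = "us-west-2"  # Oregon, the Region used in this walkthrough


def model_ids_by_provider(model_summaries: list[dict]) -> dict[str, list[str]]:
    """Group model IDs from a ListFoundationModels response by provider name."""
    grouped: dict[str, list[str]] = defaultdict(list)
    for summary in model_summaries:
        grouped[summary["providerName"]].append(summary["modelId"])
    # Sort each provider's IDs so the output is stable across calls.
    return {provider: sorted(ids) for provider, ids in grouped.items()}


def list_models(region: str = REGION) -> dict[str, list[str]]:
    """Ask Bedrock which models it offers in `region`, grouped by provider."""
    import boto3  # imported here so the pure helper above works without the SDK

    bedrock = boto3.client("bedrock", region_name=region)  # control-plane client
    response = bedrock.list_foundation_models()
    return model_ids_by_provider(response["modelSummaries"])
```

In a SageMaker Studio notebook, `list_models()["Anthropic"]` should include the Claude model IDs if Bedrock offers them in us-west-2.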

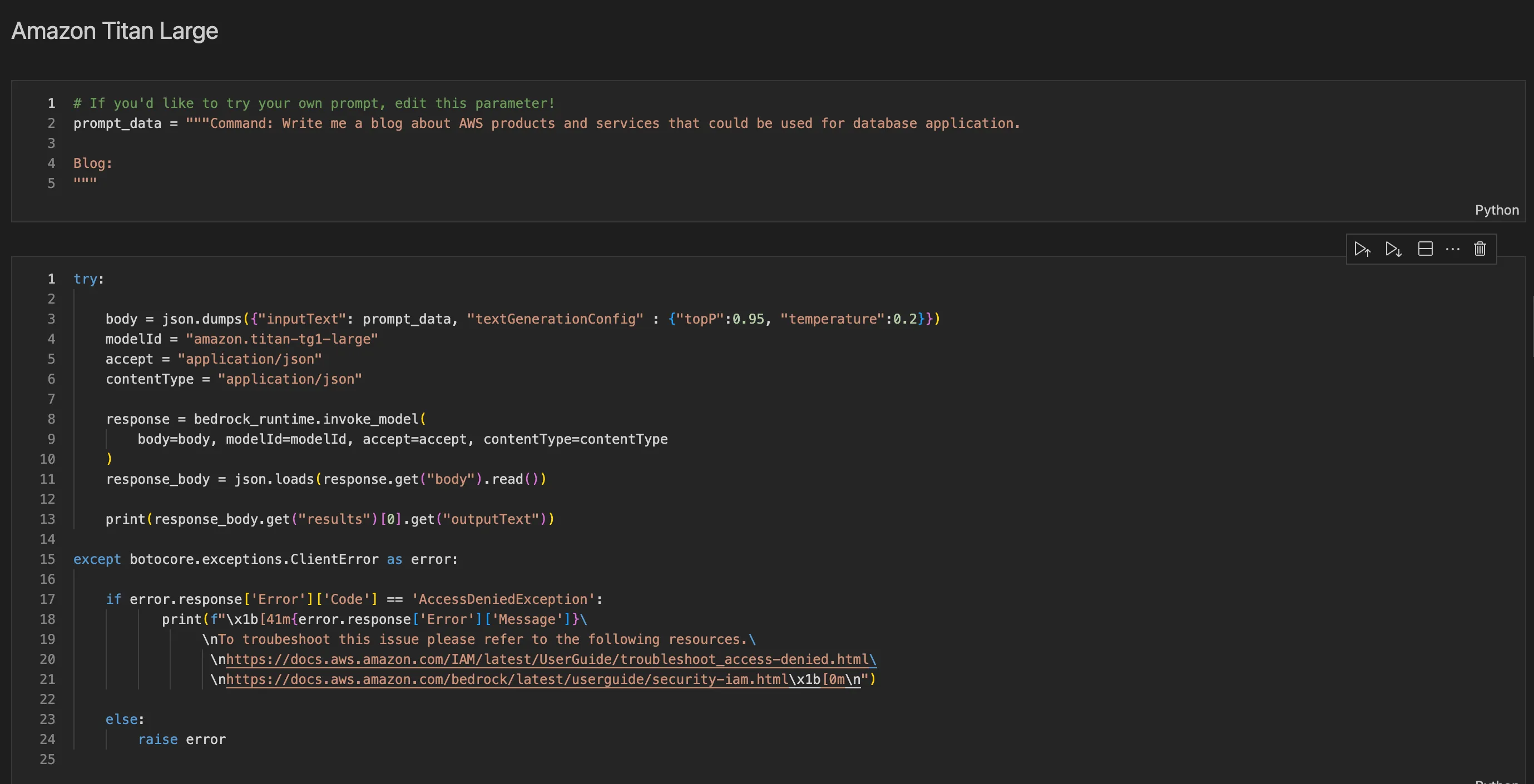

- Amazon Titan:

  - Description: Amazon Titan foundation models offer a wide range of high-performing image, multimodal, and text model choices through a fully managed API.

  - Features: Amazon Titan models are pretrained on large datasets, support a variety of use cases, and are designed to support responsible AI use.

  - Customization: These models can be used as-is or customized with proprietary data to tailor them to specific application requirements.
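Since this walkthrough uses Amazon Titan Embeddings G1 - Text, here is a minimal sketch of calling it through the `bedrock-runtime` client's `invoke_model` operation. It assumes the model ID `amazon.titan-embed-text-v1` and the model's JSON shape (`inputText` in, `embedding` out); check both against the Bedrock documentation for your Region. All function names are hypothetical, and `cosine_similarity` and `top_match` are plain-Python stand-ins for the nearest-neighbour search that FAISS performs efficiently at scale.

```python
import json
import math

TITAN_EMBED_MODEL_ID = "amazon.titan-embed-text-v1"  # assumed model ID; confirm in the console


def build_titan_embed_body(text: str) -> str:
    """Serialize the JSON request body that Titan Embeddings G1 - Text expects."""
    return json.dumps({"inputText": text})


def embed(text: str, region: str = "us-west-2") -> list[float]:
    """Return the embedding vector for `text` (requires Bedrock model access)."""
    import boto3  # imported here so the pure helpers work without the SDK

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=TITAN_EMBED_MODEL_ID,
        body=build_titan_embed_body(text),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["embedding"]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_match(query: list[float], documents: dict[str, list[float]]) -> str:
    """Return the key of the document vector most similar to `query`."""
    return max(documents, key=lambda name: cosine_similarity(query, documents[name]))
```

A brute-force scan like `top_match` is fine for a handful of documents; FAISS exists to make the same lookup fast over many thousands of vectors.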

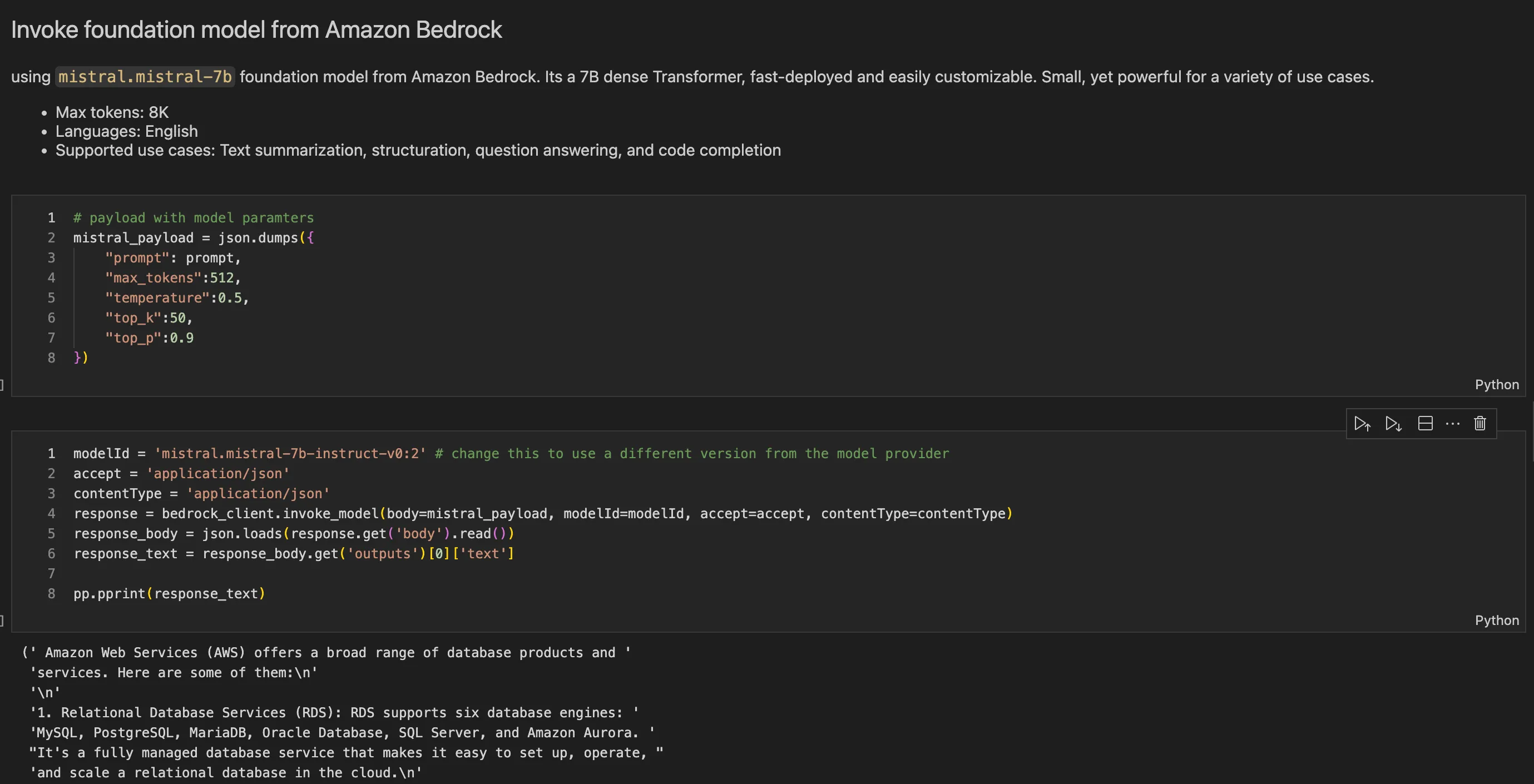

- Mistral AI:

  - Description: Mistral AI is committed to advancing AI technologies and elevating publicly available models to state-of-the-art performance.

  - Features: I used the Mistral 7B model, a compact but capable dense Transformer suited to tasks such as text summarization, question answering, and code completion.

  - Customization: Mistral AI models are easy to customize and support multiple languages, making them adaptable to diverse use cases.
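A comparable sketch for Mistral 7B on Bedrock, assuming the instruct variant with model ID `mistral.mistral-7b-instruct-v0:2`. Mistral's instruct models expect the prompt wrapped in `[INST] ... [/INST]` tags, and Bedrock returns the generated text under `outputs[0]["text"]`. The function names are hypothetical, and the parameter values (`max_tokens=200`, `temperature=0.5`) are illustrative choices, not recommendations.

```python
import json

MISTRAL_MODEL_ID = "mistral.mistral-7b-instruct-v0:2"  # assumed model ID; confirm in the console


def build_mistral_body(instruction: str, max_tokens: int = 200, temperature: float = 0.5) -> str:
    """Wrap the instruction in Mistral's [INST] tags and serialize the request."""
    prompt = f"<s>[INST] {instruction} [/INST]"
    return json.dumps({"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature})


def parse_mistral_response(payload: dict) -> str:
    """Extract the generated text from a decoded Mistral response payload."""
    return payload["outputs"][0]["text"]


def ask_mistral(instruction: str, region: str = "us-west-2") -> str:
    """Send one instruction to Mistral 7B Instruct and return its reply."""
    import boto3  # imported here so the pure helpers work without the SDK

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=MISTRAL_MODEL_ID,
        body=build_mistral_body(instruction),
        contentType="application/json",
        accept="application/json",
    )
    return parse_mistral_response(json.loads(response["body"].read()))
```

Keeping request building and response parsing in separate pure functions makes them easy to test without calling AWS.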

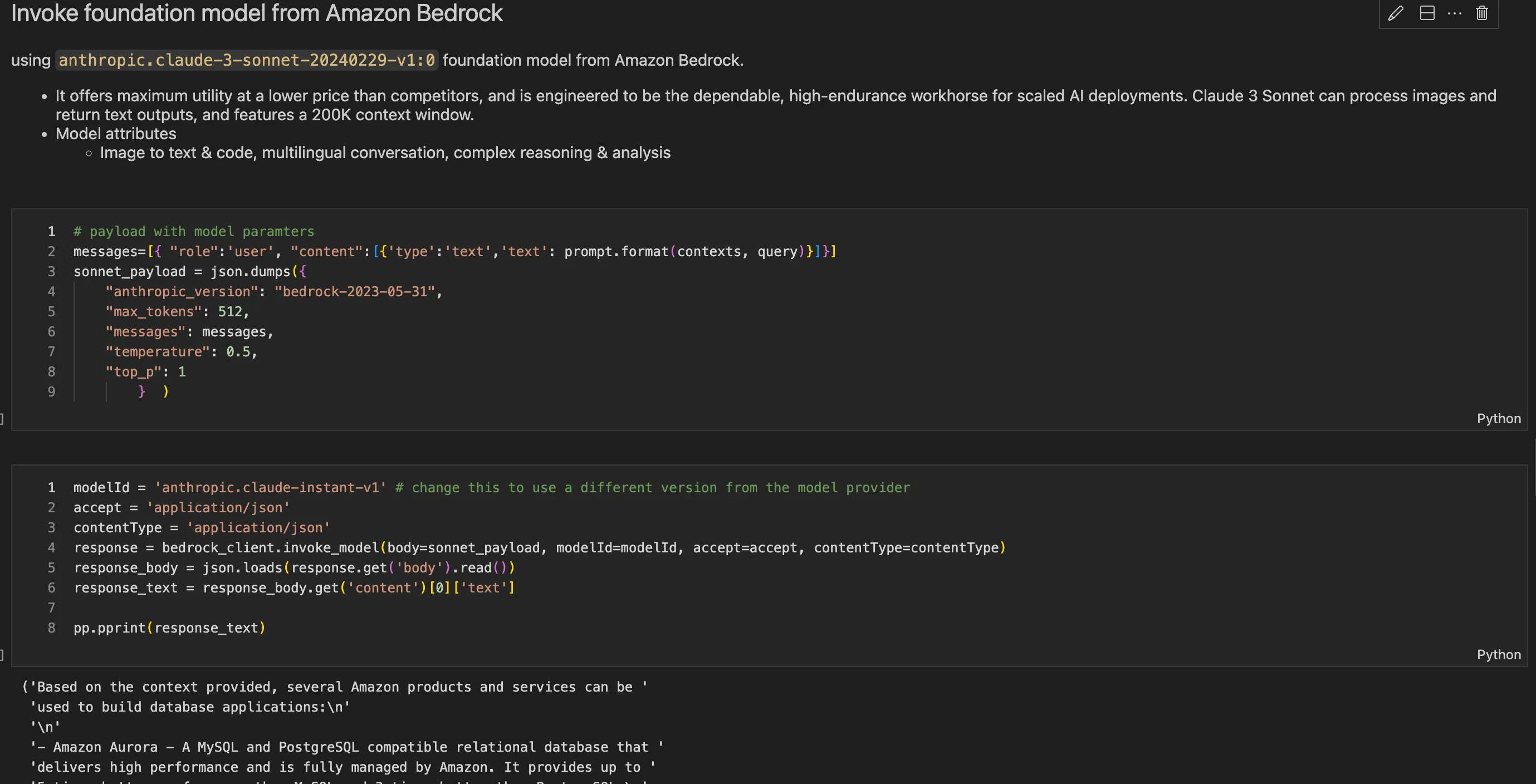

- Anthropic's Claude:

  - Description: Anthropic's Claude represents the cutting edge of large language models, designed with an emphasis on speed, cost-effectiveness, and contextual understanding.

  - Features: Claude is built on Anthropic's research into creating reliable, interpretable, and controllable AI systems, excelling in tasks such as dialogue generation, content creation, and complex reasoning.

  - Customization: I opted for Claude Instant version 1, a faster and more cost-effective option with a context window of up to 100K tokens. It supports multiple languages and suits tasks such as text analysis, summarization, and document comprehension.
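For Claude Instant, the sketch below uses Claude's legacy Text Completions format on Bedrock, assuming the model ID `anthropic.claude-instant-v1`. In this format the prompt must alternate `\n\nHuman:` and `\n\nAssistant:` turns, the output length is capped by `max_tokens_to_sample`, and the answer comes back under `completion`. Newer Claude models (Claude 3 and later) accept only the Messages API, so treat this format as specific to older models such as Claude Instant. The function names are hypothetical, and 300 is an arbitrary illustrative cap.

```python
import json

CLAUDE_INSTANT_MODEL_ID = "anthropic.claude-instant-v1"  # assumed model ID; confirm in the console


def build_claude_body(question: str, max_tokens_to_sample: int = 300) -> str:
    """Format a prompt for Claude's Text Completions API and serialize it."""
    # The text-completion format alternates Human/Assistant turns and must end
    # with "\n\nAssistant:" so the model continues as the assistant.
    prompt = f"\n\nHuman: {question}\n\nAssistant:"
    return json.dumps({"prompt": prompt, "max_tokens_to_sample": max_tokens_to_sample})


def ask_claude(question: str, region: str = "us-west-2") -> str:
    """Send one question to Claude Instant and return the generated answer."""
    import boto3  # imported here so the pure helper works without the SDK

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=CLAUDE_INSTANT_MODEL_ID,
        body=build_claude_body(question),
        contentType="application/json",
        accept="application/json",
    )
    # The Text Completions API returns the generated text under "completion".
    return json.loads(response["body"].read())["completion"]
```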