The Computeless RAG Tool: Orchestrating RAG with AWS AppSync

The Computeless RAG tool, an AppSync and GenAI love story. Tap into private data and leverage GenAI without the need for traditional compute resources.

Published Jun 7, 2024

Hey folks! I’m excited to share a project that combines serverless and generative AI: the Computeless RAG tool. This tool taps into private data, like your company's internal databases, and leverages GenAI without requiring traditional AWS compute resources. Let’s dive into how this tool is built.

The Computeless RAG tool uses AWS AppSync to manage GenAI-driven queries without traditional compute resources. Orchestrating the RAG pipeline with AppSync's JavaScript resolvers makes the architecture simpler, faster, and more cost-effective.

Key components include:

- Amazon Bedrock for foundational models.

- Pinecone as the vector database.

- AppSync JS resolvers as the orchestrator.

This integration demonstrates AppSync's potential to serve as the backbone of a serverless GenAI system.

Let's explore the key technologies behind the Computeless RAG tool:

AWS AppSync serves as the central orchestrator, leveraging JavaScript pipeline resolvers to efficiently manage data sequence and context throughout the query and response process.

Pinecone serves as the vector database. It stores the internal data as vector embeddings and quickly retrieves the entries closest to a user query.

AWS Secrets Manager is used to secure sensitive credentials like the Pinecone API key, crucial for interfacing with the vector database.

Amazon Bedrock provides the AI power in the setup, serving two main functions:

- Amazon Titan Text Embeddings: This model transforms user queries into vector embeddings, crucial for querying the Pinecone database to fetch relevant data.

- Anthropic Claude 3 Haiku: After data retrieval, this model processes the information to generate accurate and contextually appropriate responses to the queries, leveraging its advanced natural language processing capabilities.

The integration of these technologies is orchestrated through several steps:

- Secure API Key Retrieval: Retrieve the Pinecone API key from AWS Secrets Manager to ensure secure access to the database.

- Embedding Generation: Convert user queries into vector embeddings using the Titan model.

- Data Storage: Insert vectors (and the corresponding text) into the Pinecone database for later retrieval.

- Data Retrieval: Use these embeddings to locate the most relevant data vectors within the Pinecone database.

- Response Generation: Employ Anthropic’s Claude 3 Haiku to formulate a final, contextual answer based on the retrieved data and the initial query.

Let's now break down the architecture and workflow of the Computeless RAG tool, providing a detailed look at how each component interacts.

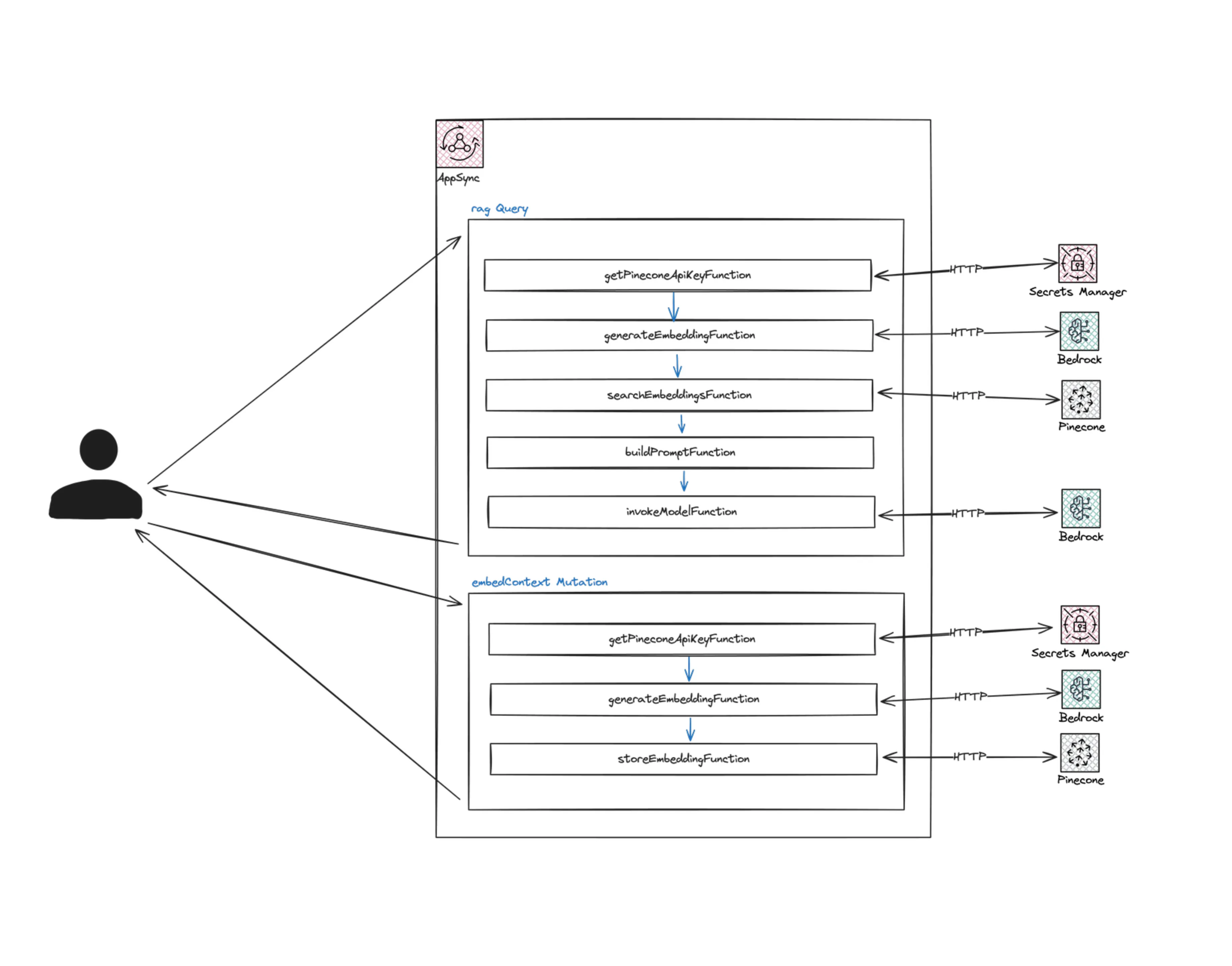

The architecture diagram illustrates the orchestration of services and data flow in the tool. Each GraphQL query or mutation to the AppSync API triggers a JavaScript pipeline resolver. This resolver runs a sequence of functions that interact with services and data sources or transform data for the next step.

AWS AppSync's pipeline resolvers are vital for complex data operations that require multiple steps. They enable you to define a sequence of function calls, each transforming the output and passing it to the next. This is ideal for workflows where data needs to be fetched, transformed, and used to generate responses, just like the Computeless RAG tool.

Why Use Pipeline Resolvers?

- Sequential Logic Execution: Pipeline resolvers execute operations in sequence, where each step depends on the previous one, making them perfect for the use case.

- Decoupling Logic and Data Sources: Each function in the pipeline can use different data sources or none at all, creating a cleaner architecture by separating data retrieval, processing, and response generation.

- Efficiency and Performance: By handling data flow within AppSync, we reduce the need for external orchestration and cut down on latency from multiple network calls.
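To make the sequencing concrete, here is a minimal sketch in plain Node.js that imitates how a pipeline passes data along. It is a simulation, not the AppSync runtime: `runPipeline` and the three stand-in steps are hypothetical names made up for illustration. Only `ctx.args`, `ctx.stash`, and `ctx.prev.result` mirror fields of the real resolver context.

```javascript
// Plain Node.js simulation of a pipeline resolver (not the AppSync runtime).
// Every step shares ctx.stash and sees the previous step's return value
// in ctx.prev.result.
function runPipeline(steps, args) {
  const ctx = { args, stash: {}, prev: { result: undefined } };
  for (const step of steps) {
    ctx.prev = { result: step(ctx) };
  }
  return ctx.prev.result;
}

// Hypothetical stand-ins for steps that would normally call external services.
const fetchKey = (ctx) => {
  ctx.stash.apiKey = "demo-key"; // shared with every later step
  return null;
};
const embed = (ctx) => [ctx.args.query.length, 0.5]; // fake "embedding"
const search = (ctx) => ({
  usedKey: ctx.stash.apiKey, // read from the stash
  vector: ctx.prev.result, // output of the previous step
});

const pipelineResult = runPipeline([fetchKey, embed, search], { query: "hello" });
console.log(pipelineResult);
```

The stash carries values that several steps need (such as an API key), while `ctx.prev.result` carries the output of the step that just ran.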

Each function in the pipeline lives in its own JS file in the api/resolvers/functions folder of the SAM project. This organization makes deployment and updates clear and manageable. Here’s how each function integrates into the pipeline:

getPineconeApiKeyFunction initiates the pipeline by securely retrieving the Pinecone API key from AWS Secrets Manager using an HTTP data source. The key is stored in ctx.stash, making it accessible to subsequent functions.

generateEmbeddingFunction runs once the API key is available and generates vector embeddings from the user's query. It uses an HTTP data source to send the query to Amazon Bedrock, which uses the Titan Text Embeddings model to transform the query into vector embeddings. This step is crucial for accurately matching the query with relevant data in Pinecone.
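As a rough sketch of these first two steps, the handlers below build the HTTP requests and parse the responses. This is not the code from the repository: the handler names, the `ctx.stash.apiKey` key, and the `ctx.args.query` argument name are assumptions, and the handlers are written as plain functions so they run in Node.js (in an AppSync function they would be exported as `request` and `response`). The Secrets Manager target header and the Titan request and response fields follow the public APIs of those services.

```javascript
// Sketch of the key-retrieval step (assumed shape, not the repository code).
function getKeyRequest() {
  return {
    method: "POST",
    resourcePath: "/",
    params: {
      headers: {
        "content-type": "application/x-amz-json-1.1",
        "x-amz-target": "secretsmanager.GetSecretValue",
      },
      body: { SecretId: "pineconeApiKey" },
    },
  };
}

function getKeyResponse(ctx) {
  // The HTTP data source hands back the response body as a JSON string.
  const secret = JSON.parse(ctx.result.body);
  ctx.stash.apiKey = secret.SecretString; // "apiKey" is an assumed stash key
  return null;
}

// Sketch of the embedding step.
function embedRequest(ctx) {
  return {
    method: "POST",
    resourcePath: "/model/amazon.titan-embed-text-v1/invoke",
    params: {
      headers: { "content-type": "application/json" },
      body: { inputText: ctx.args.query }, // "query" is an assumed argument name
    },
  };
}

function embedResponse(ctx) {
  // Titan Text Embeddings answers with { embedding: number[], inputTextTokenCount }.
  return JSON.parse(ctx.result.body).embedding;
}
```

Returning the embedding from the response handler makes it available to the next function through `ctx.prev.result`.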

storeEmbeddingFunction stores an embedding, together with its source text, in the Pinecone database. It uses an HTTP data source to execute this operation.
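A minimal sketch of what the store step could look like, assuming the embedding arrives in `ctx.prev.result` and the API key sits in `ctx.stash.apiKey`. The `id` and `text` arguments and the `text` metadata key are hypothetical. The `/vectors/upsert` path, the `Api-Key` header, and the `upsertedCount` response field come from Pinecone's REST API.

```javascript
// Sketch of the store step (assumed argument and stash names).
function storeRequest(ctx) {
  return {
    method: "POST",
    resourcePath: "/vectors/upsert",
    params: {
      headers: {
        "content-type": "application/json",
        "Api-Key": ctx.stash.apiKey,
      },
      body: {
        vectors: [
          {
            id: ctx.args.id, // hypothetical argument
            values: ctx.prev.result, // embedding from the previous step
            metadata: { text: ctx.args.text }, // keep the text next to the vector
          },
        ],
      },
    },
  };
}

function storeResponse(ctx) {
  // Pinecone answers with { upsertedCount: <number> }.
  return JSON.parse(ctx.result.body).upsertedCount;
}
```

Storing the original text as metadata is what lets the search step return readable context later instead of bare vectors.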

searchEmbeddingsFunction uses the embedding to search the Pinecone database for the most relevant entries. It performs the query through an HTTP data source and retrieves the data that most closely matches the user's initial inquiry.
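The search step could be sketched along these lines. The `topK` value of 3, the `ctx.stash.contexts` key, and the `text` metadata key are assumptions. The `/query` path and the `vector`, `topK`, `includeMetadata`, and `matches` fields are part of Pinecone's REST API.

```javascript
// Sketch of the search step (assumed stash keys and topK value).
function searchRequest(ctx) {
  return {
    method: "POST",
    resourcePath: "/query",
    params: {
      headers: {
        "content-type": "application/json",
        "Api-Key": ctx.stash.apiKey,
      },
      body: {
        vector: ctx.prev.result, // embedding of the user query
        topK: 3, // number of neighbours to return (assumed)
        includeMetadata: true, // needed to get the stored text back
      },
    },
  };
}

function searchResponse(ctx) {
  const parsed = JSON.parse(ctx.result.body);
  const matches = parsed.matches || [];
  // Keep only matches that actually carry text metadata.
  ctx.stash.contexts = matches
    .filter((m) => m.metadata && typeof m.metadata.text === "string")
    .map((m) => m.metadata.text);
  return ctx.stash.contexts;
}
```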

buildPromptFunction does not interact with any data source and is responsible for assembling the final prompt. It combines the initial query, the contextual data from Pinecone, and specific instructions for the language model into a single input. This step is crucial for ensuring that the AI model generates relevant and accurate responses.
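Prompt assembly is plain string work, so it is easy to sketch. The instruction wording below is invented for illustration and is not the prompt used in the project; `buildPrompt` is a hypothetical helper name.

```javascript
// Sketch of prompt assembly: instructions + retrieved context + user question.
function buildPrompt(query, contexts) {
  const contextBlock = contexts
    .map((text, i) => `${i + 1}. ${text}`)
    .join("\n");
  return [
    "Answer the question using only the context below.",
    "If the context is not sufficient, say that you do not have the information.",
    "",
    "Context:",
    contextBlock,
    "",
    `Question: ${query}`,
  ].join("\n");
}

console.log(buildPrompt("Who leads the project?", ["Alice leads the project."]));
```

Telling the model what to do when the context is insufficient is what makes a query about unknown data come back with "I don't have that information" rather than a guess.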

invokeModelFunction sends the prompt to Anthropic’s Claude 3 Haiku model via Amazon Bedrock using an HTTP data source. It passes the generated answer down the pipeline by storing it in ctx.stash.

The embedContext mutation populates the Pinecone database with data. It converts the submitted text into an embedding and stores it in the vector store, making it available for retrieval by the rag query. The mutation triggers a pipeline resolver composed of the following functions in sequence:

- getPineconeApiKeyFunction

- generateEmbeddingFunction

- storeEmbeddingFunction

The rag query is the core functionality of the Computeless RAG tool, designed to retrieve and generate responses based on user queries. This GraphQL query runs a semantic search on the Pinecone database based on the user query, then generates an answer using Claude 3 Haiku. The query triggers a pipeline resolver composed of the following functions in sequence:

- getPineconeApiKeyFunction

- generateEmbeddingFunction

- searchEmbeddingsFunction

- buildPromptFunction

- invokeModelFunction
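The last step of this sequence, the model invocation, could be sketched as follows. The stash keys `prompt` and `answer` and the `max_tokens` value are assumptions. The request body follows the Anthropic Messages format that Bedrock expects for Claude 3 models, and the answer is read from the first content block of the response.

```javascript
// Sketch of the model invocation step (assumed stash keys and token limit).
function invokeRequest(ctx) {
  return {
    method: "POST",
    resourcePath: "/model/anthropic.claude-3-haiku-20240307-v1:0/invoke",
    params: {
      headers: { "content-type": "application/json" },
      body: {
        anthropic_version: "bedrock-2023-05-31",
        max_tokens: 512, // assumed limit
        messages: [
          {
            role: "user",
            content: [{ type: "text", text: ctx.stash.prompt }],
          },
        ],
      },
    },
  };
}

function invokeResponse(ctx) {
  // Claude 3 on Bedrock answers with { content: [{ type: "text", text }], ... }.
  const body = JSON.parse(ctx.result.body);
  ctx.stash.answer = body.content[0].text;
  return ctx.stash.answer;
}
```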

The AWS Serverless Application Model (SAM) simplifies creating and deploying serverless applications on AWS. In the Computeless RAG tool project, the SAM template (template.yaml) and the GraphQL schema (schema.graphql) are key components defining the infrastructure and API interface.

The template.yaml file defines the resources necessary for deploying the Computeless RAG tool on AWS. Here’s a breakdown of the primary components:

- AWS::Serverless::GraphQLApi: This resource creates the AppSync API. It uses the GraphQL schema provided in the schema.graphql file. The API is configured with API keys for authentication and connected to various data sources and resolvers for handling operations.

- AWS::Serverless::GraphQLApi - Functions: Functions are defined for each step in the AppSync pipeline resolver, mapped to specific JavaScript files. These functions are described above.

- AWS::IAM::Role: Defines the roles required for the AppSync data sources to interact with AWS services securely. Each role includes policies that grant necessary permissions for actions like retrieving secrets or invoking AI models.

- AWS::AppSync::DataSource: The SAM template defines three AppSync HTTP data sources. They allow HTTP calls to Pinecone, Amazon Bedrock, and AWS Secrets Manager from within the AppSync pipeline resolvers.

The schema.graphql file defines the GraphQL schema used by the AppSync API. This schema sets up a simple API with a single query (rag) that accepts a string and returns a QueryOutput type containing a string field output. This setup handles the Q&A functionality of the tool, allowing users to submit queries and receive text responses.

It also exposes a mutation, embedContext, that we will use to populate the Pinecone database.

As mentioned earlier, the api/resolvers/functions folder contains the JS files for the AppSync resolver functions that are executed in the pipeline resolver whenever the GraphQL rag query is invoked.

Deploying this SAM project involves several steps streamlined by the SAM CLI. Here’s how to deploy the project:

Prerequisites:

- Clone the project on your local computer

- Install the AWS SAM CLI.

- Create a Pinecone account and a Pinecone index with 1536 dimensions (the size of the output vector generated by Amazon Titan Text Embeddings), then copy the Pinecone API key and the newly created index host from your account.

- Create a plaintext secret in AWS Secrets Manager. The secret must be named pineconeApiKey, and its value is the API key copied from your Pinecone account.

- Replace YOUR_PINECONE_INDEX_HOST in the template.yaml file with the index host you copied from your Pinecone account.

- Make sure to enable the following models in the Amazon Bedrock console:

  - amazon.titan-embed-text-v1

  - anthropic.claude-3-haiku-20240307-v1:0

Build the Project:

- Navigate to the project directory in your terminal.

- Run the command: sam build. This command prepares the deployment by building any dependencies specified in the template.

Deploy the Project:

- After building the project, deploy it by running: sam deploy --guided.

- The guided deployment process will prompt you to enter parameters such as the stack name, AWS region, and any parameters required by the template.

- Confirm the settings and proceed with the deployment. The CLI will handle the creation of all specified resources and provide you with an output that includes the URL of the deployed AppSync API.

Now that the Computeless RAG Tool is successfully deployed, let's go ahead and test it.

In order to test the tool, we need to populate some data in the Pinecone database. To do so, we have deployed a mutation called embedContext. Let's add a few entries in the Pinecone index.

- Navigate to the AWS AppSync console

- Select the API you just deployed. It should be named ComputelessRagApi

- Click on Queries

- Paste the following in the query editor

- Run all 9 mutations by clicking on the Run button and selecting the corresponding mutation

With "private" data stored in the Pinecone index, we can now query the tool.

- Navigate to the AWS AppSync console

- Select the API you just deployed. It should be named ComputelessRagApi

- Click on Queries

- Paste the following in the query editor

- Run both queries by clicking on the Run button and selecting the corresponding query.

- With the negative query, the tool should mention it does not have the necessary information to answer your question.

- With the positive query, it should provide you with an answer incorporating information that you have inserted into your Pinecone index in the previous section.
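Outside the console, the same query can be sent over HTTP. The sketch below only builds the request. It assumes API key authentication (as configured in the template) and, importantly, guesses that the rag argument is called `query` and is a nullable String; check schema.graphql for the real name and type. `buildRagRequest` is a hypothetical helper.

```javascript
// Builds the HTTP request for the rag query (argument name and type are guesses).
function buildRagRequest(apiUrl, apiKey, question) {
  return {
    url: apiUrl,
    options: {
      method: "POST",
      headers: {
        "content-type": "application/json",
        "x-api-key": apiKey, // AppSync API key authentication
      },
      body: JSON.stringify({
        query: "query Rag($query: String) { rag(query: $query) { output } }",
        variables: { query: question },
      }),
    },
  };
}

// Usage with Node 18+ (placeholder URL and key):
// const { url, options } = buildRagRequest("https://<api-id>.appsync-api.<region>.amazonaws.com/graphql", "<api-key>", "What is ...?");
// const response = await fetch(url, options);
// console.log((await response.json()).data.rag.output);
```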

In order to remove the AWS resources created in this tutorial, just run the following command:

sam delete

Developing the Computeless RAG tool has showcased how seamlessly serverless architectures can integrate with generative AI to handle complex queries from private datasets, leveraging AWS AppSync, Amazon Bedrock, and Pinecone.

- Amazon Cognito: Use it for user authentication and authorization, so that users can access only the data of their own company. Cognito integrates seamlessly with AWS AppSync.

- AWS Secrets Manager: Each API request fetches secrets, which has two downsides:

  - Costs for secret retrieval with each request, balanced by savings from not using compute resources like Lambda functions.

  - Secrets may appear in plaintext in CloudWatch logs when logging is enabled, exposing the Pinecone API key. Amazon CloudWatch Logs data protection can mitigate this.

- Data Ingestion with Bedrock Knowledge Bases: For the data ingestion part in the vector datastore, using Bedrock Knowledge Bases could be an effective option.

- Data Retrieval with Bedrock Knowledge Bases: Instead of manually embedding the user query and running the vector search on Pinecone, using the Retrieve API for Amazon Bedrock Knowledge Bases can be an "AWS managed" way of doing things. This approach combines the functionalities of generateEmbeddingFunction and searchEmbeddingsFunction into a single function, streamlining the process and leveraging AWS-managed services.
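To give an idea of that last option, a single function calling the Retrieve API might look like the sketch below. Nothing here comes from the project: the `ctx.stash.knowledgeBaseId` key, the `ctx.args.query` argument name, and the result count are assumptions, and the HTTP data source would have to point at the Bedrock agent runtime endpoint with request signing configured. The path and body fields follow the Retrieve API of Amazon Bedrock Knowledge Bases.

```javascript
// Sketch of a single retrieval step replacing embedding + vector search.
function retrieveRequest(ctx) {
  return {
    method: "POST",
    resourcePath: `/knowledgebases/${ctx.stash.knowledgeBaseId}/retrieve`,
    params: {
      headers: { "content-type": "application/json" },
      body: {
        retrievalQuery: { text: ctx.args.query }, // assumed argument name
        retrievalConfiguration: {
          vectorSearchConfiguration: { numberOfResults: 3 }, // assumed count
        },
      },
    },
  };
}

function retrieveResponse(ctx) {
  const parsed = JSON.parse(ctx.result.body);
  const results = parsed.retrievalResults || [];
  // Each result carries the matched chunk in content.text.
  return results.map((r) => r.content.text);
}
```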

I acknowledge that other solutions are possible, and my point here is to present AppSync as one of the options among many, offering the luxury of choice.

- Step Functions: Step Functions can also avoid compute and orchestrate your RAG pipeline, but with a different cost structure. You pay for executions and GB-seconds, which can add up depending on your use case. Compute time adds to the overall cost and network hops can introduce some additional latency.

- Lambda with Bedrock: Using AWS Lambda functions with Amazon Bedrock can handle complex queries and integrate with various data sources. This approach involves costs associated with Lambda invocations and execution time, potentially adding latency.

- Lambda with Bedrock Knowledge Bases: Combining Lambda with Bedrock Knowledge Bases enhances capabilities by providing managed knowledge bases. This approach also involves costs associated with Lambda invocations and execution time, potentially adding latency.

Regardless of the chosen method, using Bedrock incurs a cost. The key difference is that with Lambda or Step Functions, compute time is an additional cost. The advantage of AppSync for this use-case is that it offers 30 seconds of "free" compute time to connect to data sources and execute the linear RAG logic, potentially offering cost savings compared to other options.

In summary, I am not trying to convince you that AppSync is the go-to solution for all cases. Rather, I aim to share that AppSync is a lesser-known yet viable option for building serverless, GenAI-driven applications. This adds to the range of choices available to developers, enabling them to select the best tool for their specific needs.

Thank you for joining me on this exploration of the Computeless RAG Tool! I hope you enjoyed reading this as much as I enjoyed building it. Find the code in the following repo.