Goodbye Elasticsearch, Hello OpenSearch: A Golang Developer's Journey with Amazon Q (Lessons Learned)

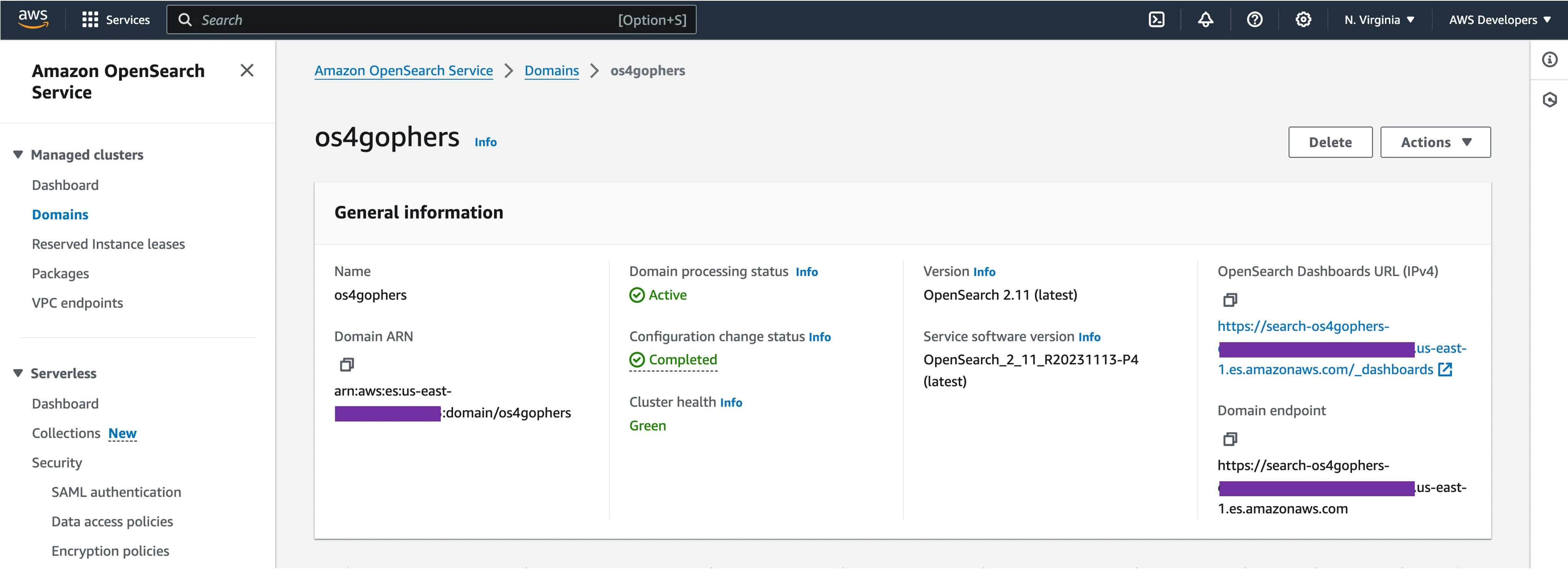

In this blog post, I share my experience using Amazon Q to migrate an application written in Go, replacing its backend from Elasticsearch to OpenSearch. Modernizing legacy code and migrating applications are among the most interesting use cases for AI coding companion tools like Amazon Q, and here I share how this worked for me.

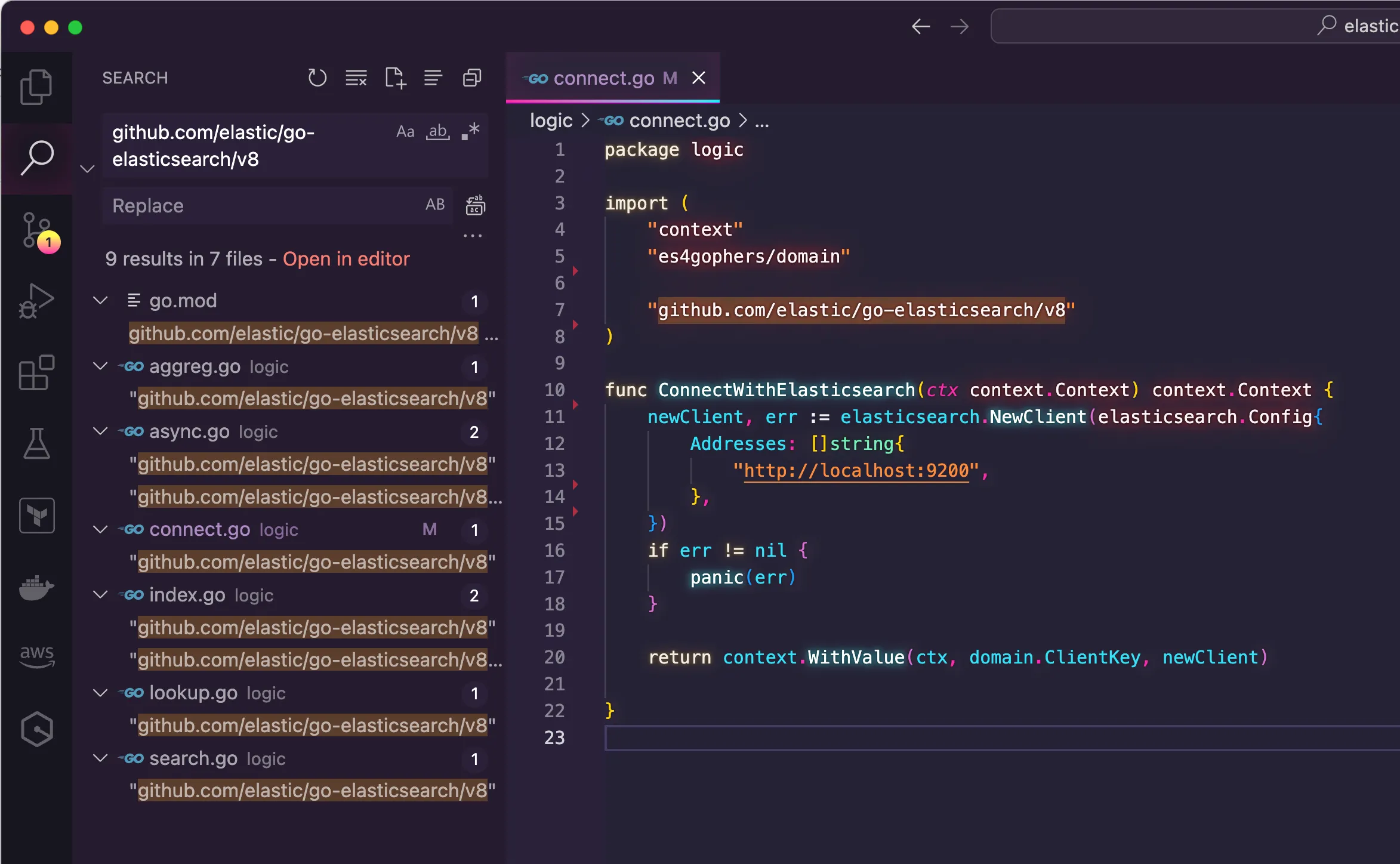

github.com/elastic/go-elasticsearch/v8.

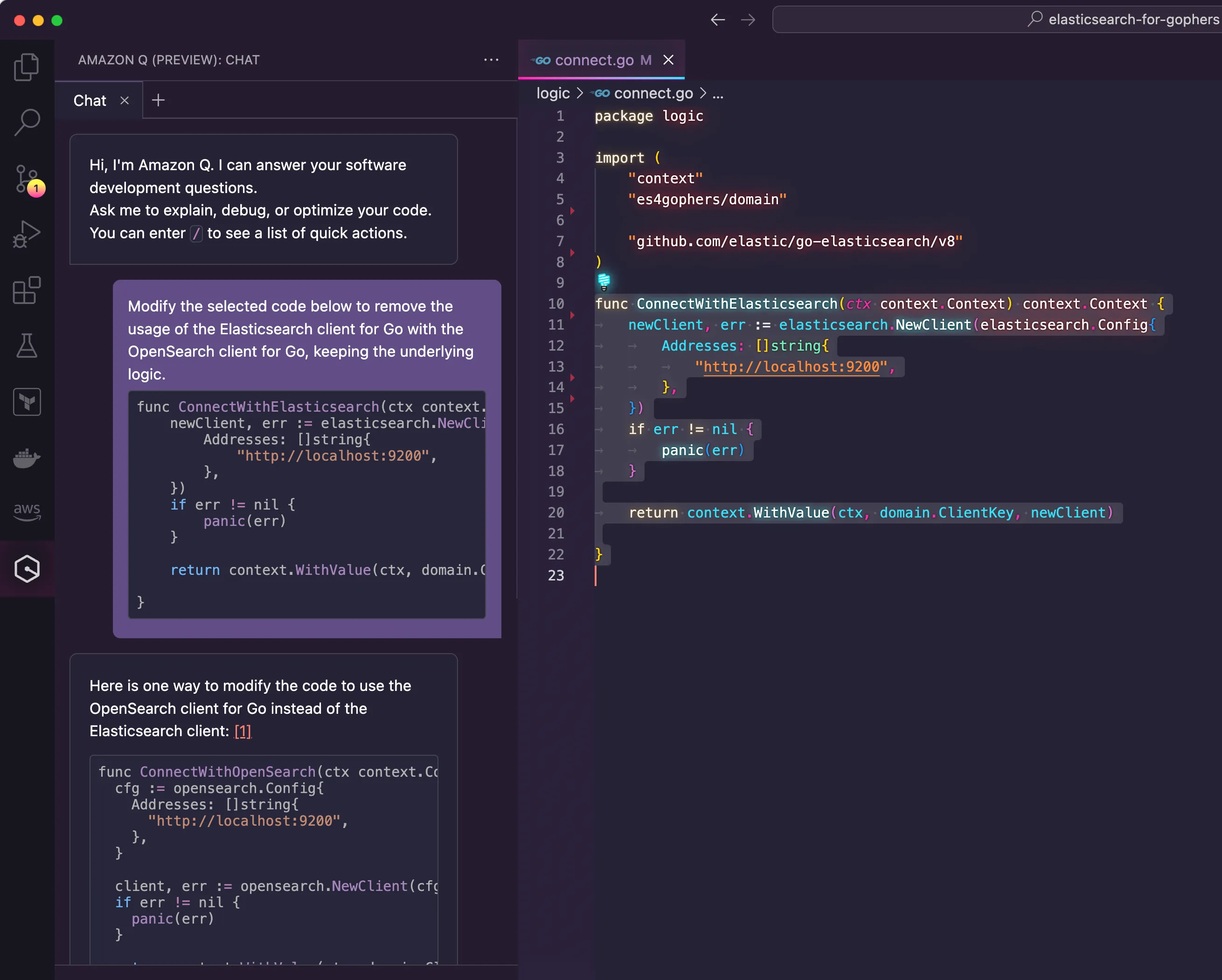

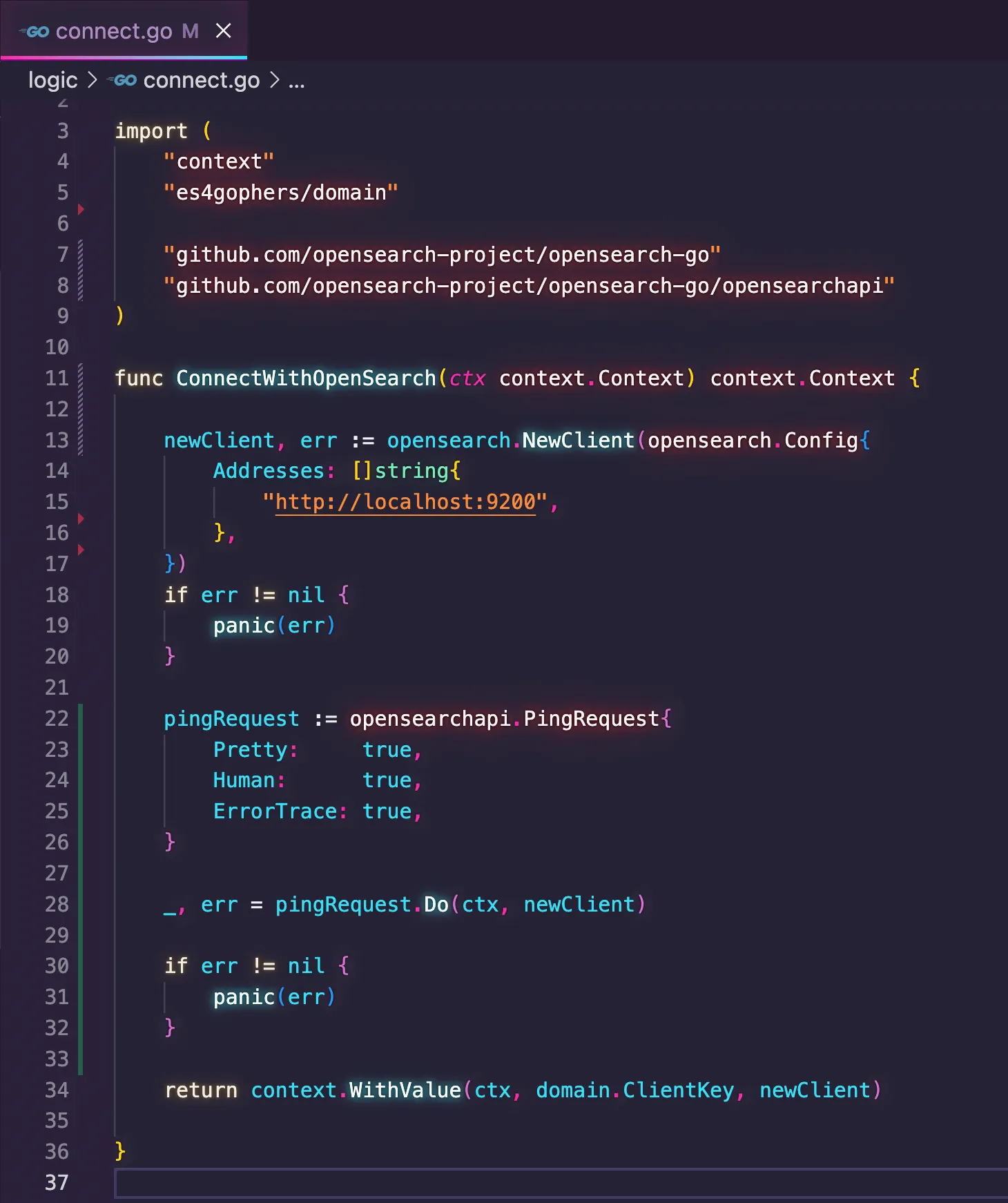

connect.go as it contains the logic about how to establish the connection with the data store. As my first experiment with Amazon Q, I wanted to see how much effort the tool would save me if I asked for help migrating an entire function instead of bits and pieces of code. Therefore, I selected the entire function called ConnectWithElasticsearch() and sent it to Amazon Q as a prompt. Then, I typed: "Modify the selected code below to remove the usage of the Elasticsearch client for Go with the OpenSearch client for Go, keeping the underlying logic."

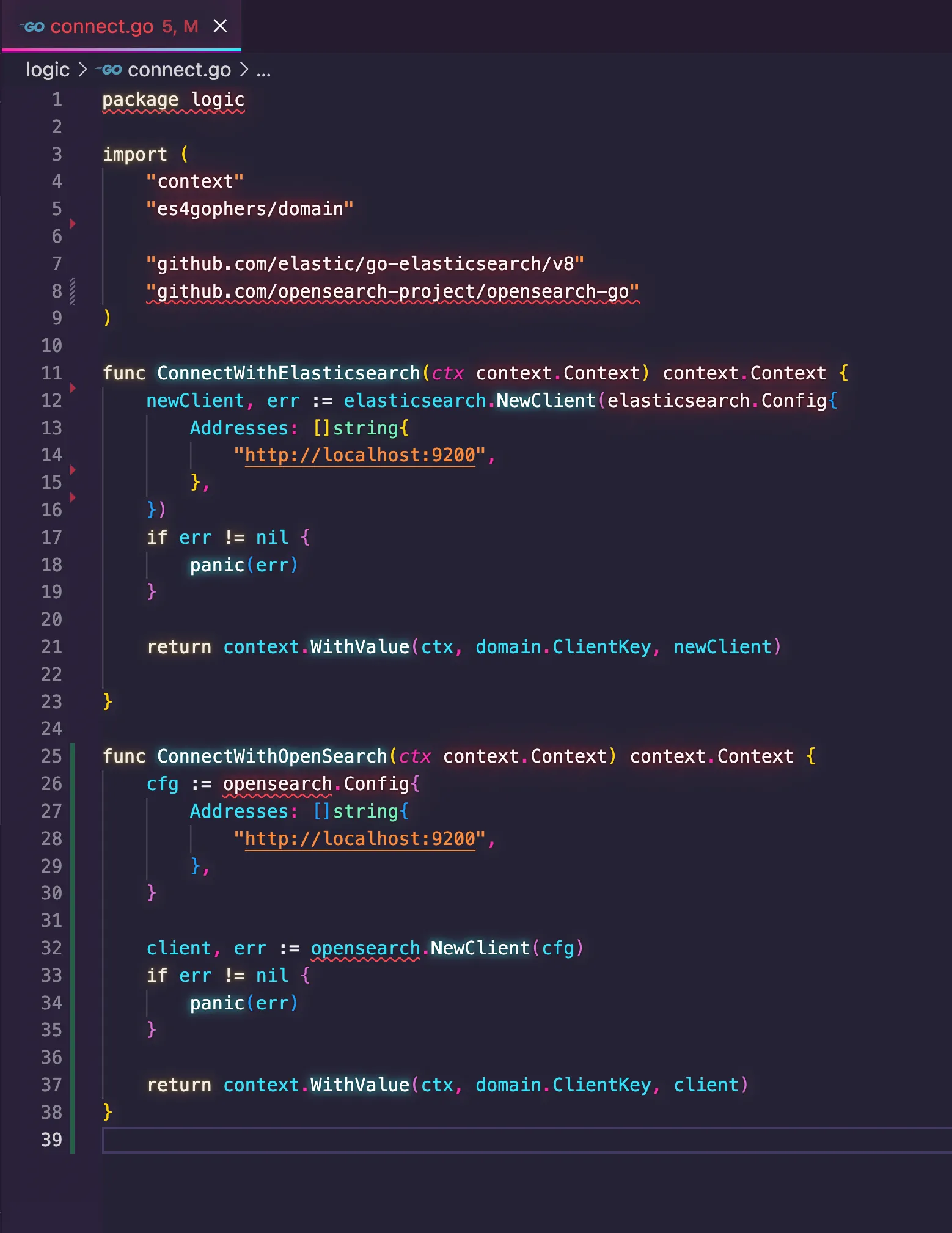

ConnectWithElasticsearch() to ConnectWithOpenSearch(), meaning that it can recognize the nature of the work being done by the developer.

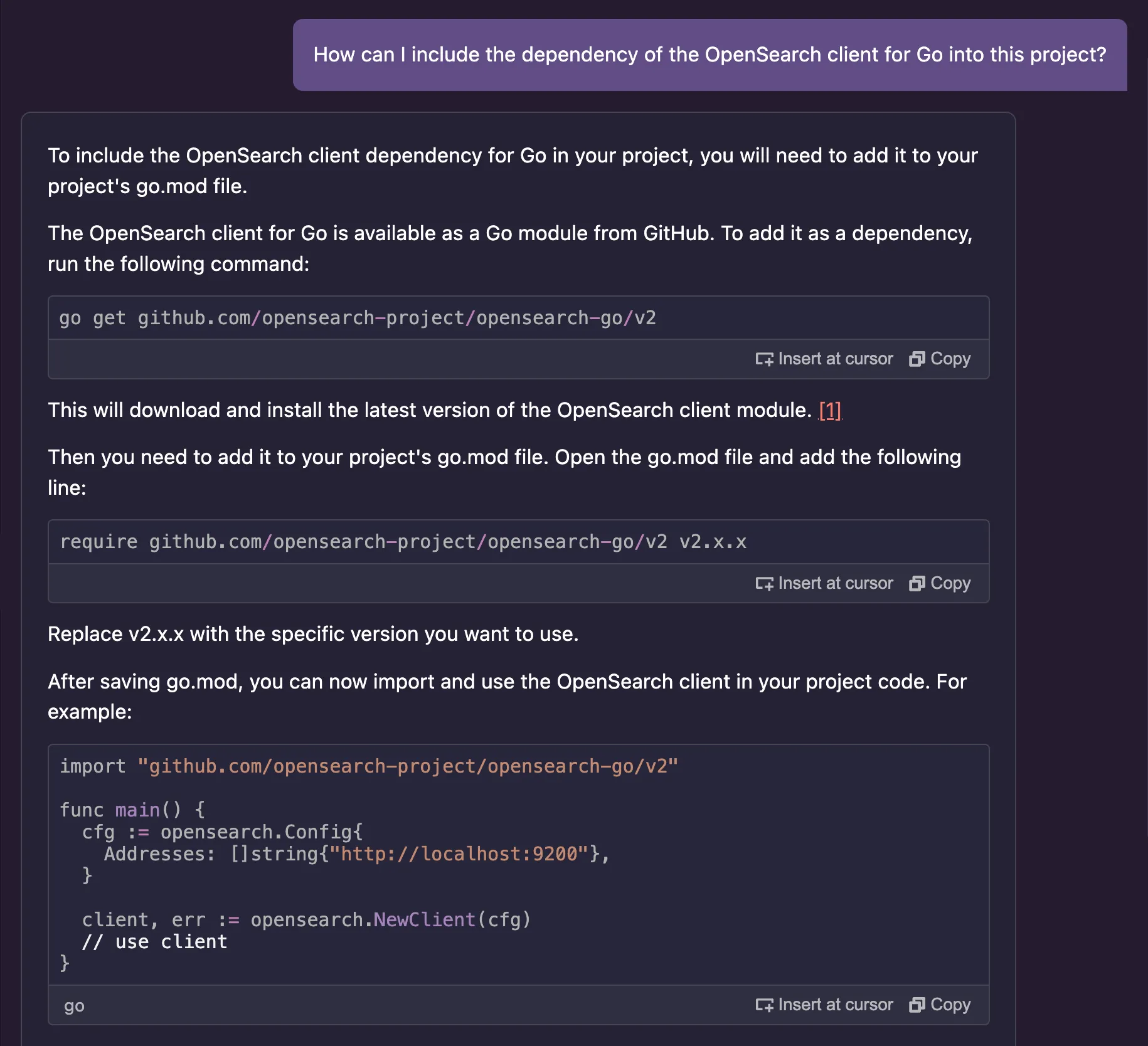

go get command, or include them in the go.mod file of your project. Either way, these are tasks that go beyond the coding part, so I wondered how much Amazon Q could help me with this. I asked: "How can I include the dependency of the OpenSearch client for Go into this project?"

ping request to check if the client can reach OpenSearch and gave me a snippet of code showing that. I applied the snippet to my function, and here is the new version of the connect.go code.

- Recognizing that the code is not doing this, and what this means for the rest of the code.

- Knowing OpenSearch provides an API to execute ping requests, and how to use it.

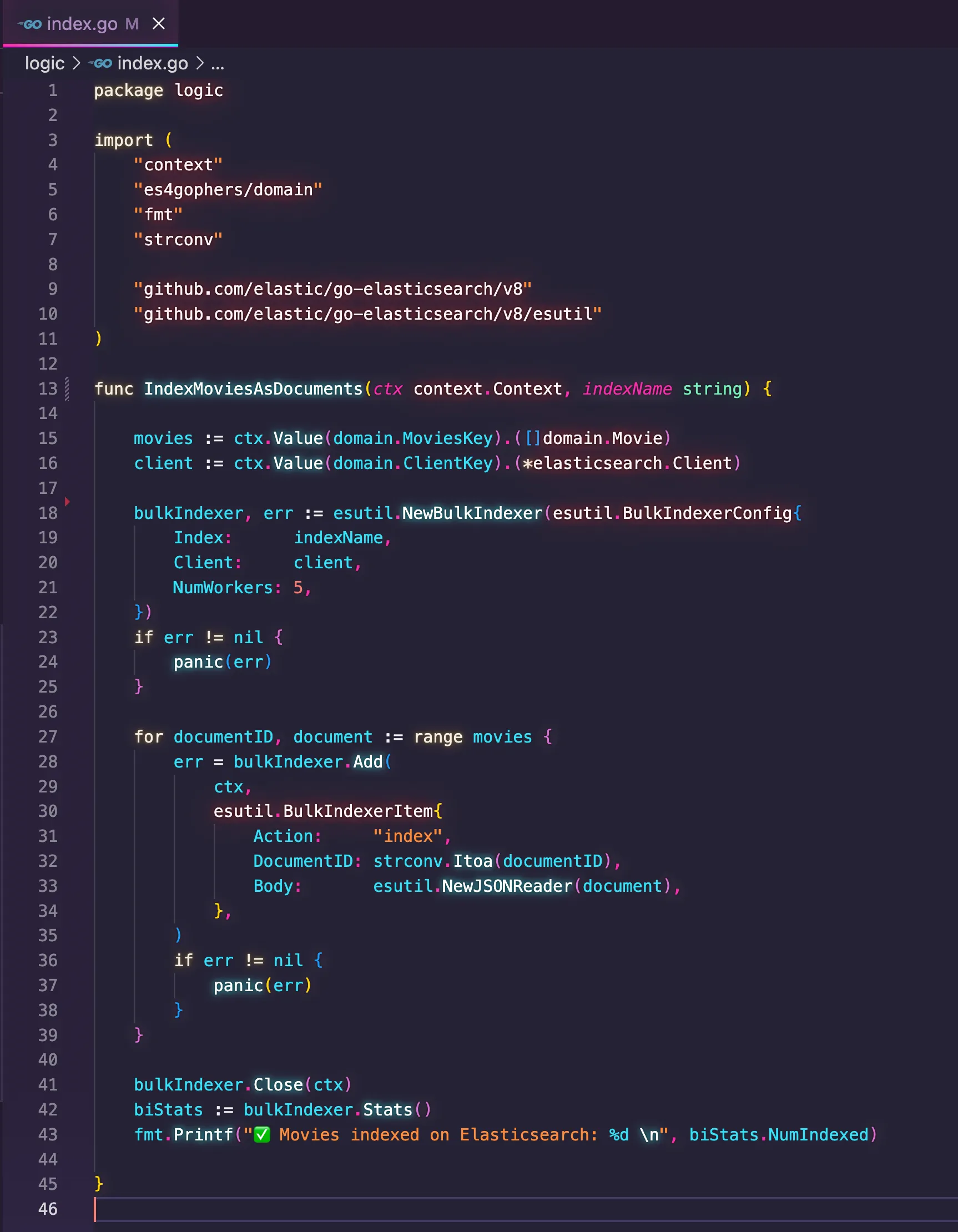

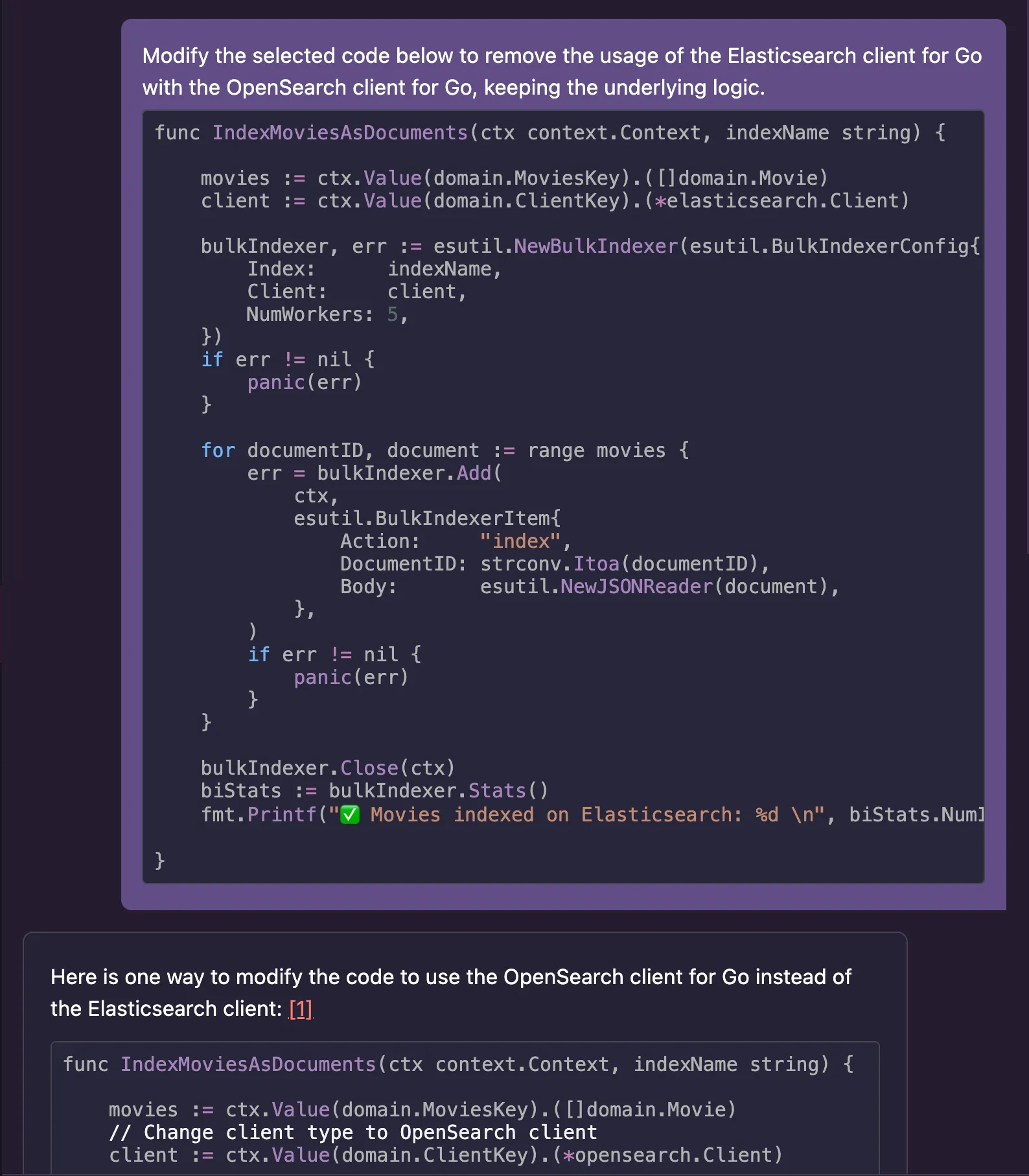

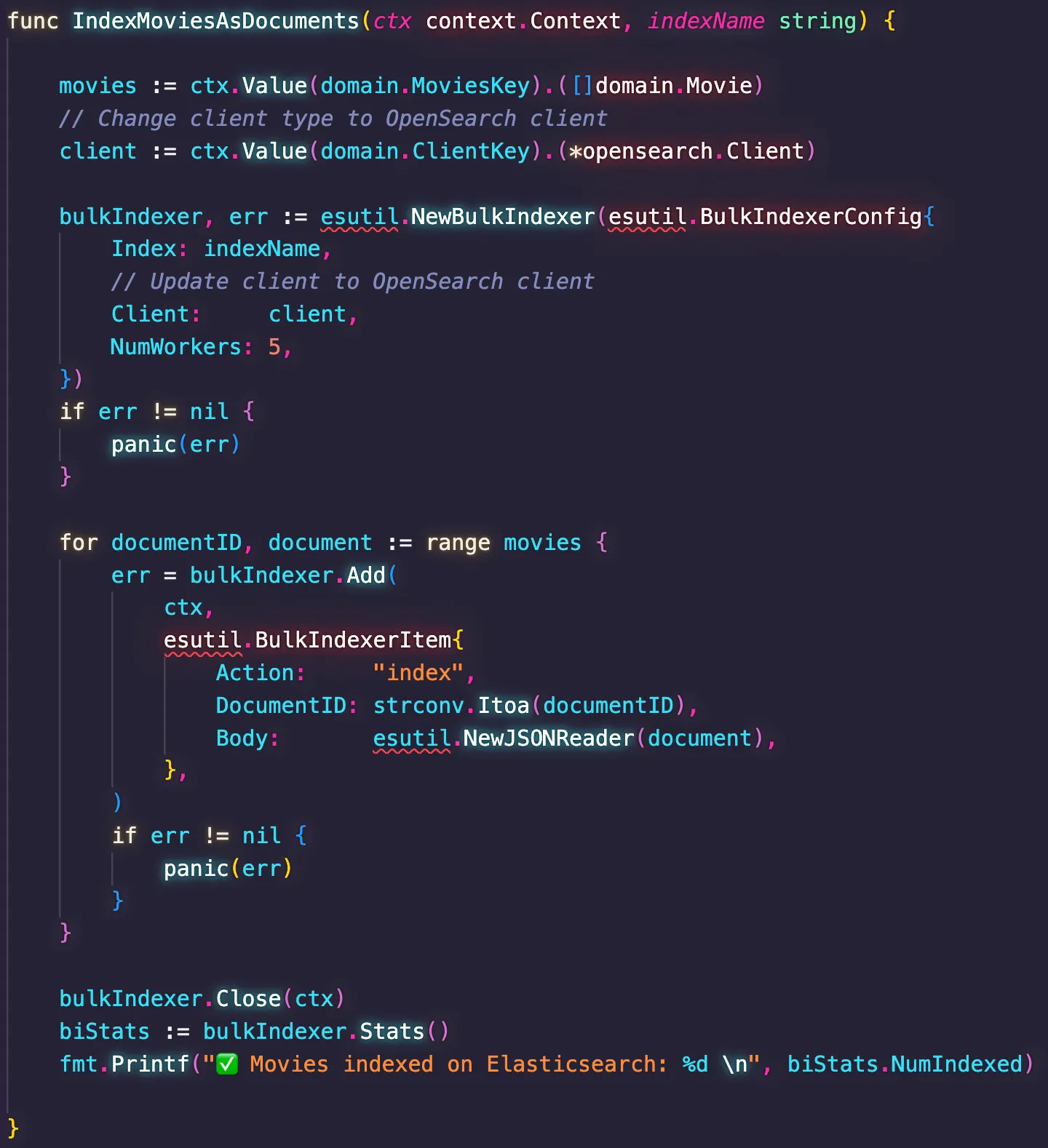

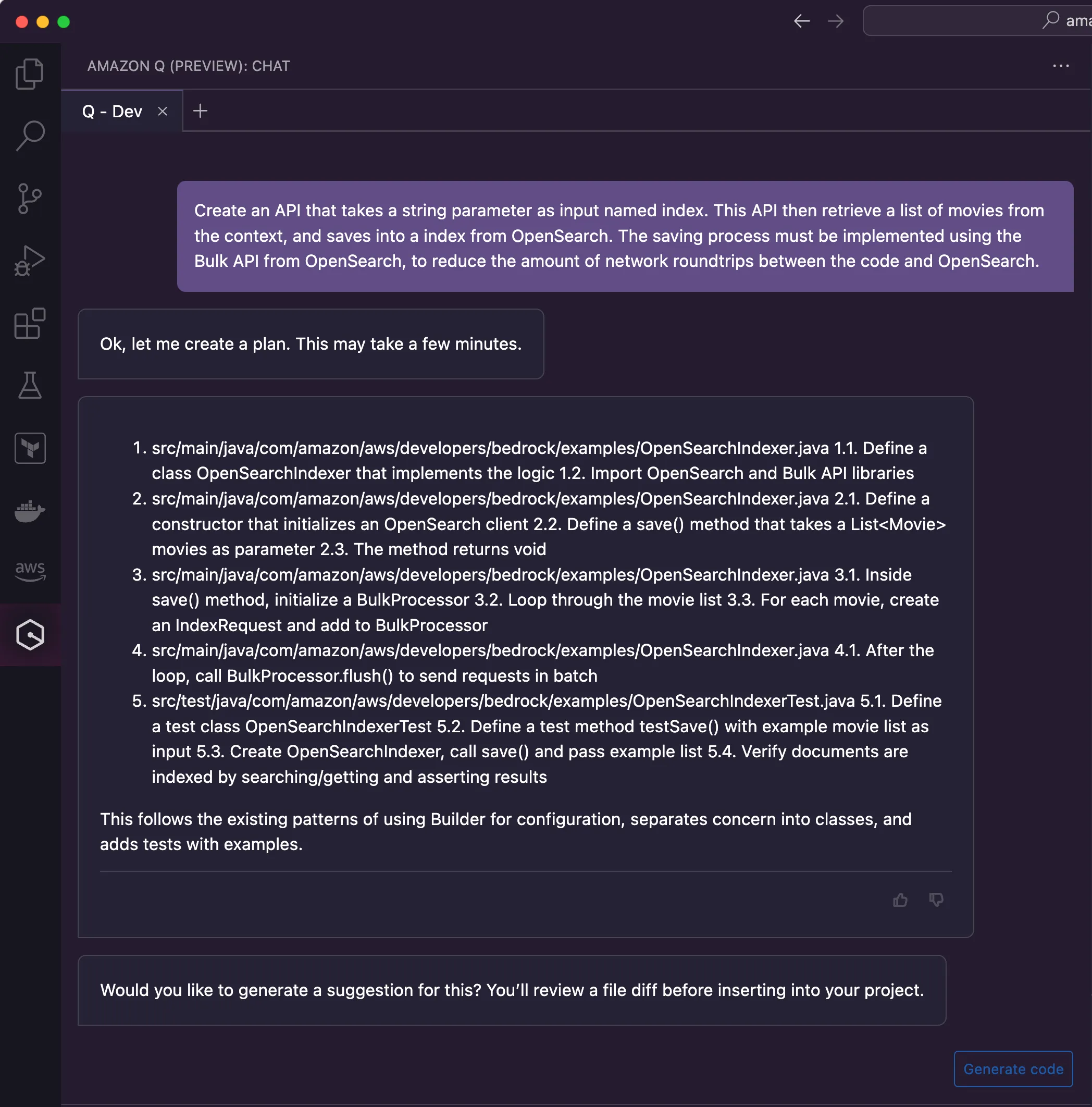

connect.go code file, I stumbled upon one edge case where Amazon Q wasn't able to help me on its first attempt. In the code file index.go, I have the implementation that takes the movies loaded in memory and indexes them into the data store. Indexing is what data stores like Elasticsearch and OpenSearch call the operation of storing a dataset in the collection that will persist it durably. In SQL-based data stores, this is known as the insert operation. This is the previous implementation using Elasticsearch.

IndexMoviesAsDocuments() takes the index name as a parameter and the dataset to be indexed from the context. It uses the Bulk API from Elasticsearch to index all the movies with a single API call, which is faster than invoking the Index API for each movie, one at a time. Moreover, the bulk indexer takes advantage of Go's support for goroutines to process the bulk concurrently, hence the usage of the parameter NumWorkers in the code. Considering there are roughly 5000 movies to be indexed, using 5 workers means indexing about 1000 documents per goroutine, which provides a faster way to handle the dataset. Finally, the code also takes advantage of the statistics provided by the BulkIndexer object, which report how many documents were indexed successfully.

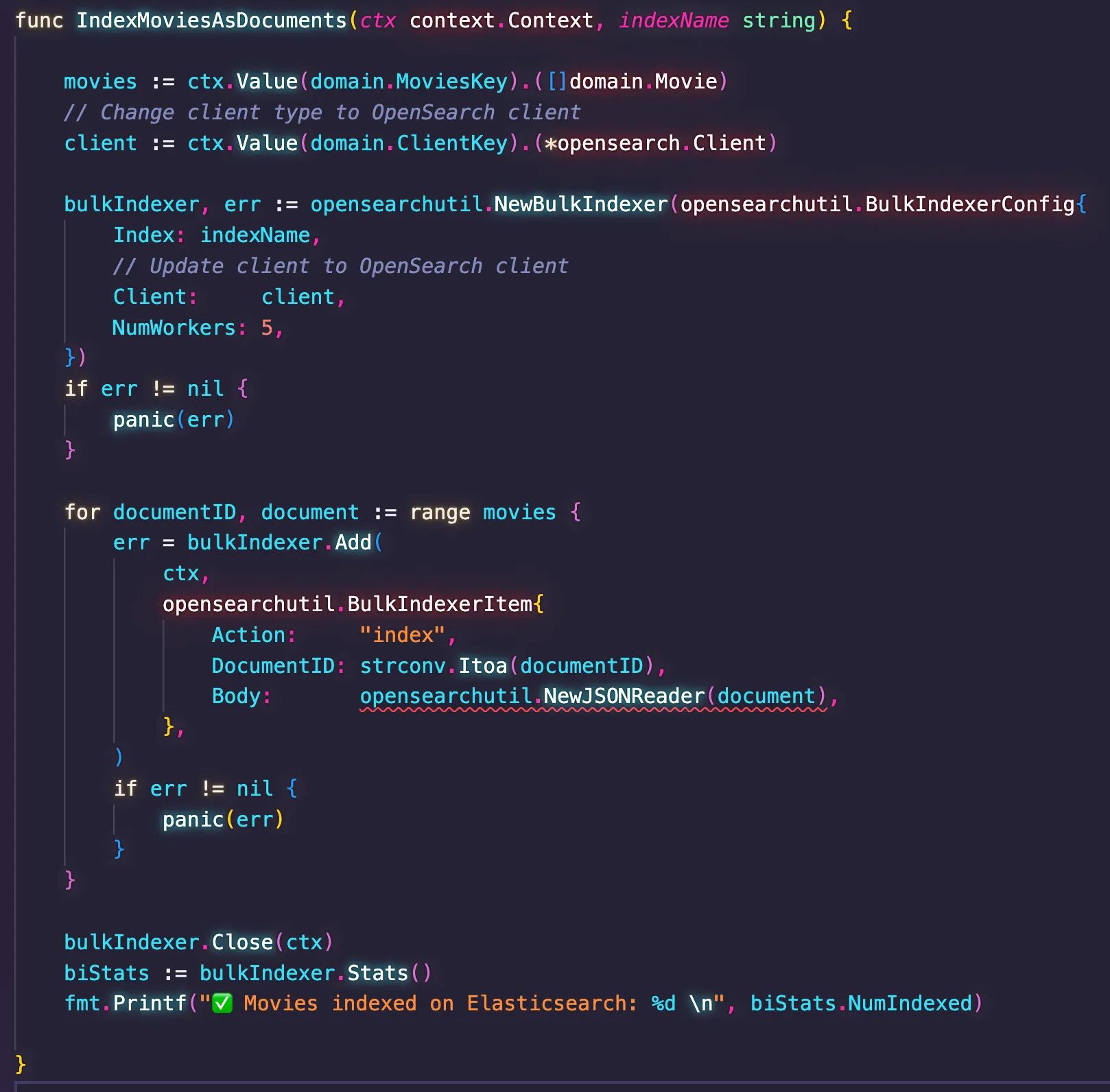

esutil doesn't exist in the OpenSearch client for Go. Instead, developers should use the opensearchutil package, which is semantically similar but has a different name. This time, I decided to fix the problem manually, as it would be faster than crafting clever prompts so Amazon Q could discern the differences. I will provide some tips later about how to do this.

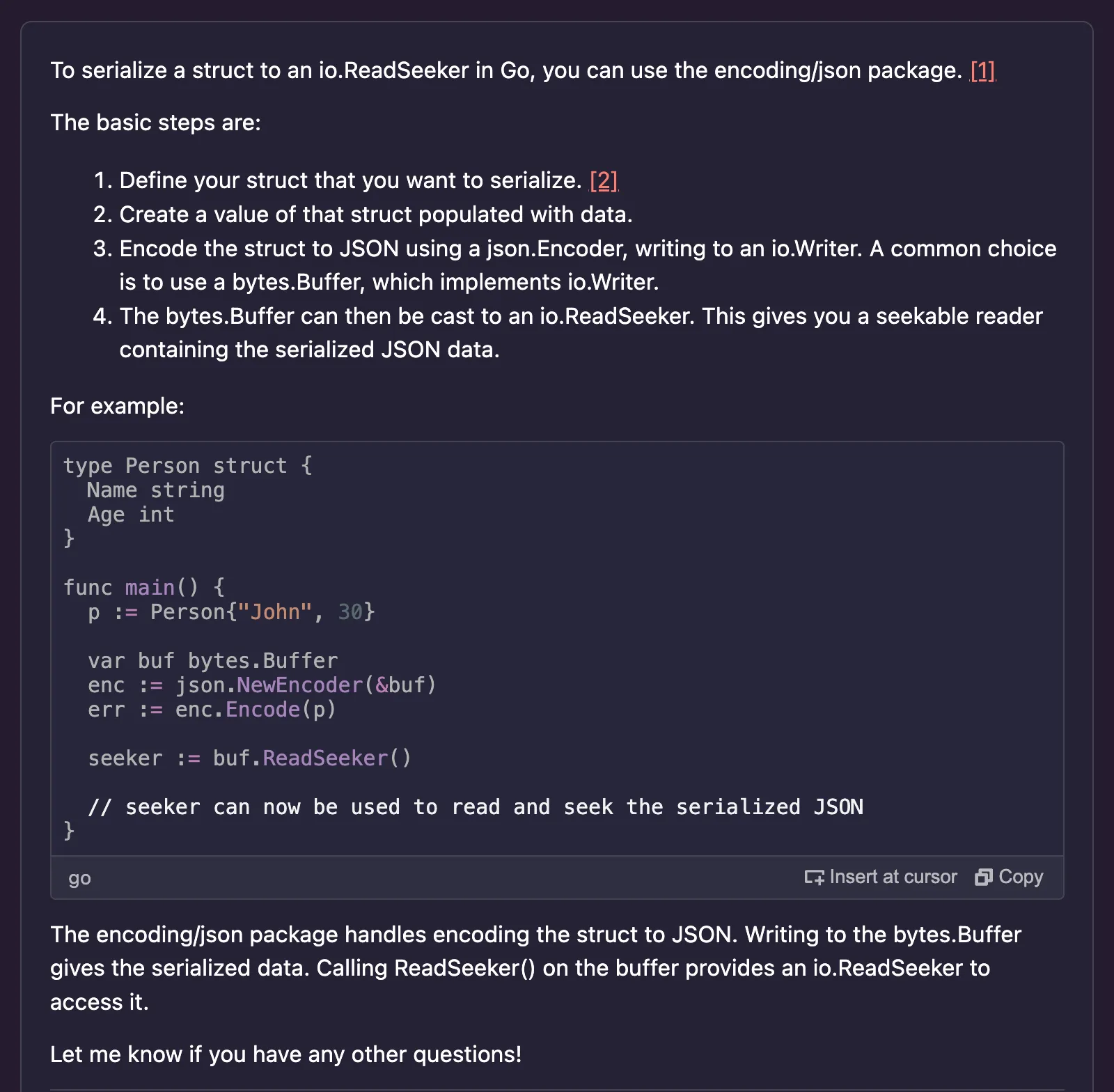

io.Reader, whereas OpenSearch expects the body to be provided as an io.ReadSeeker. Knowing the body is nothing but the movie struct serialized as JSON, I asked Amazon Q how to create the expected type. I used the following prompt: "How do I serialize a struct in Go into a io.ReadSeeker?"

io.ReadSeeker interface, which means implementing two callback functions:

- Read(p []byte) (n int, err error)

- Seek(offset int64, whence int) (int64, error)

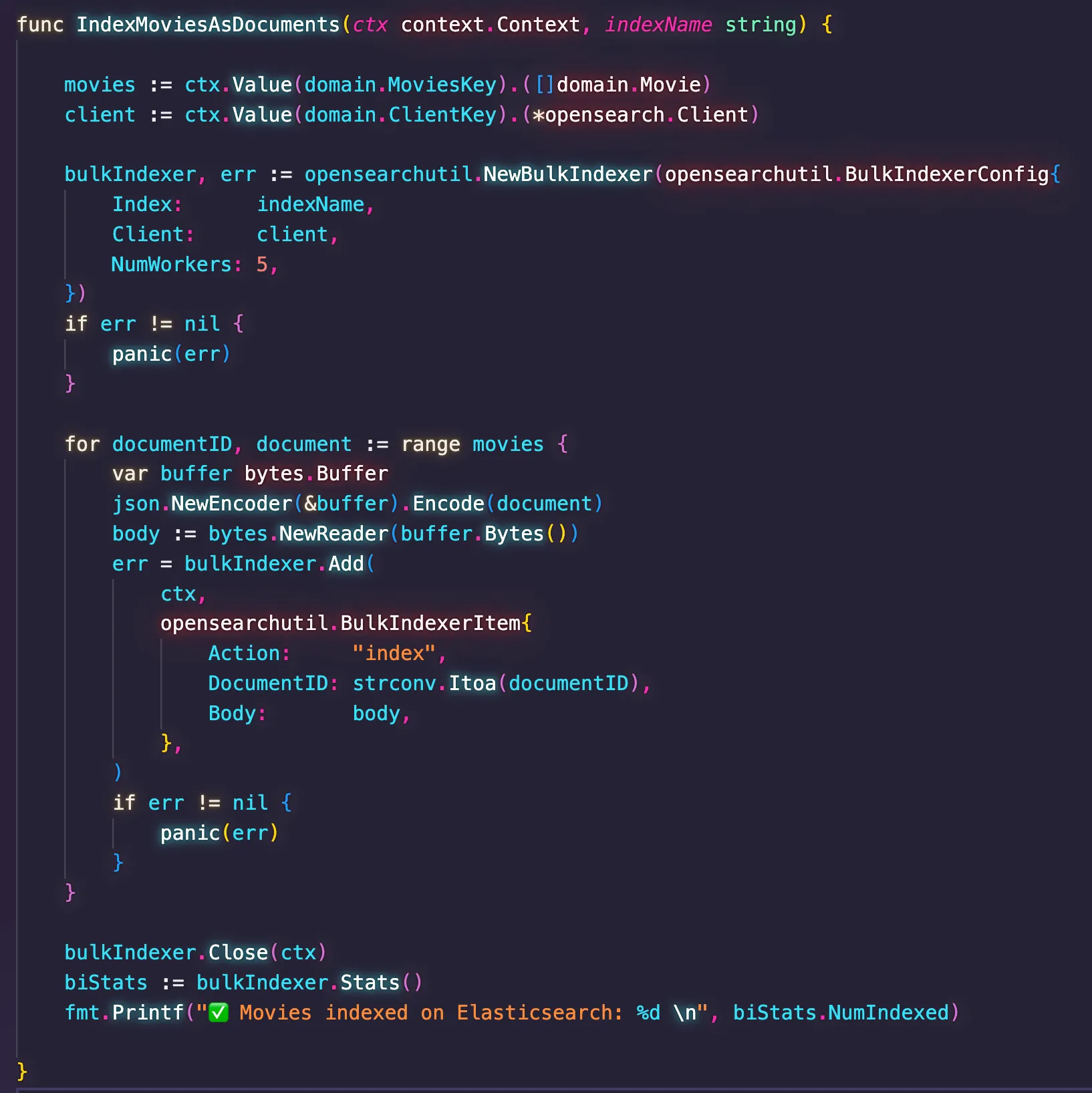

io.ReadSeeker object. After implementing all these changes, I could execute the code successfully. Here is the final version of the index.go code.

version: '3.0'

services:

elasticsearch:

image: docker.elastic.co/elasticsearch/elasticsearch:7.14.0

container_name: elasticsearch

environment:

- bootstrap.memory_lock=true

- ES_JAVA_OPTS=-Xms1g -Xmx1g

- discovery.type=single-node

- node.name=lonely-gopher

- cluster.name=es4gophers

ulimits:

memlock:

hard: -1

soft: -1

ports:

- 9200:9200

networks:

- es4gophers

healthcheck:

interval: 10s

retries: 20

test: curl -s http://localhost:9200/_cluster/health | grep -vq '"status":"red"'

kibana:

image: docker.elastic.co/kibana/kibana:7.14.0

container_name: kibana

depends_on:

elasticsearch:

condition: service_healthy

environment:

ELASTICSEARCH_URL: http://elasticsearch:9200

ELASTICSEARCH_HOSTS: http://elasticsearch:9200

ports:

- 5601:5601

networks:

- es4gophers

healthcheck:

interval: 10s

retries: 20

test: curl --write-out 'HTTP %{http_code}' --fail --silent --output /dev/null http://localhost:5601/api/status

networks:

es4gophers:

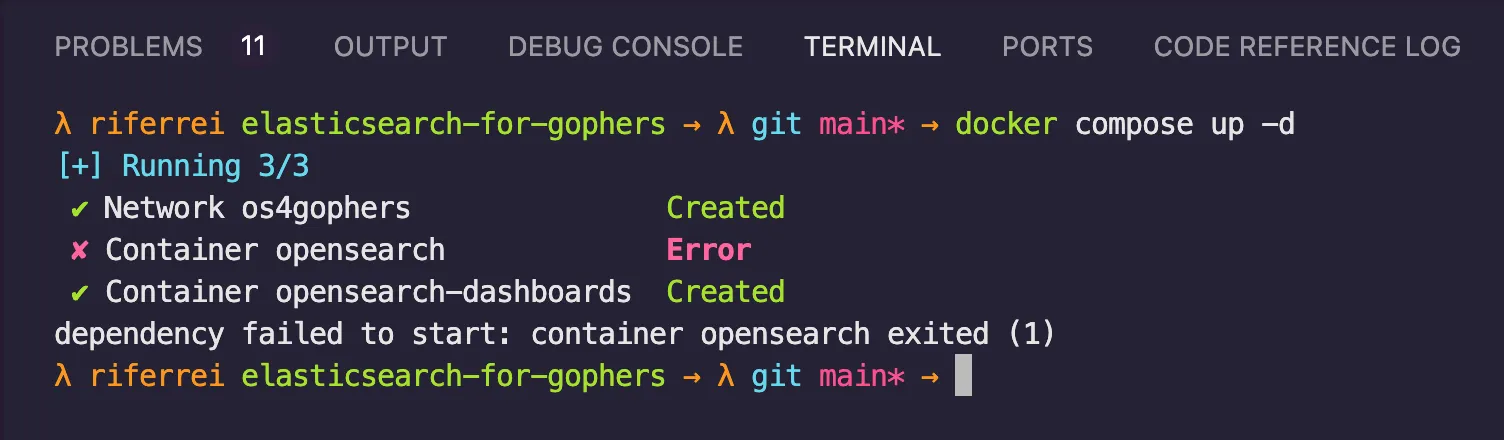

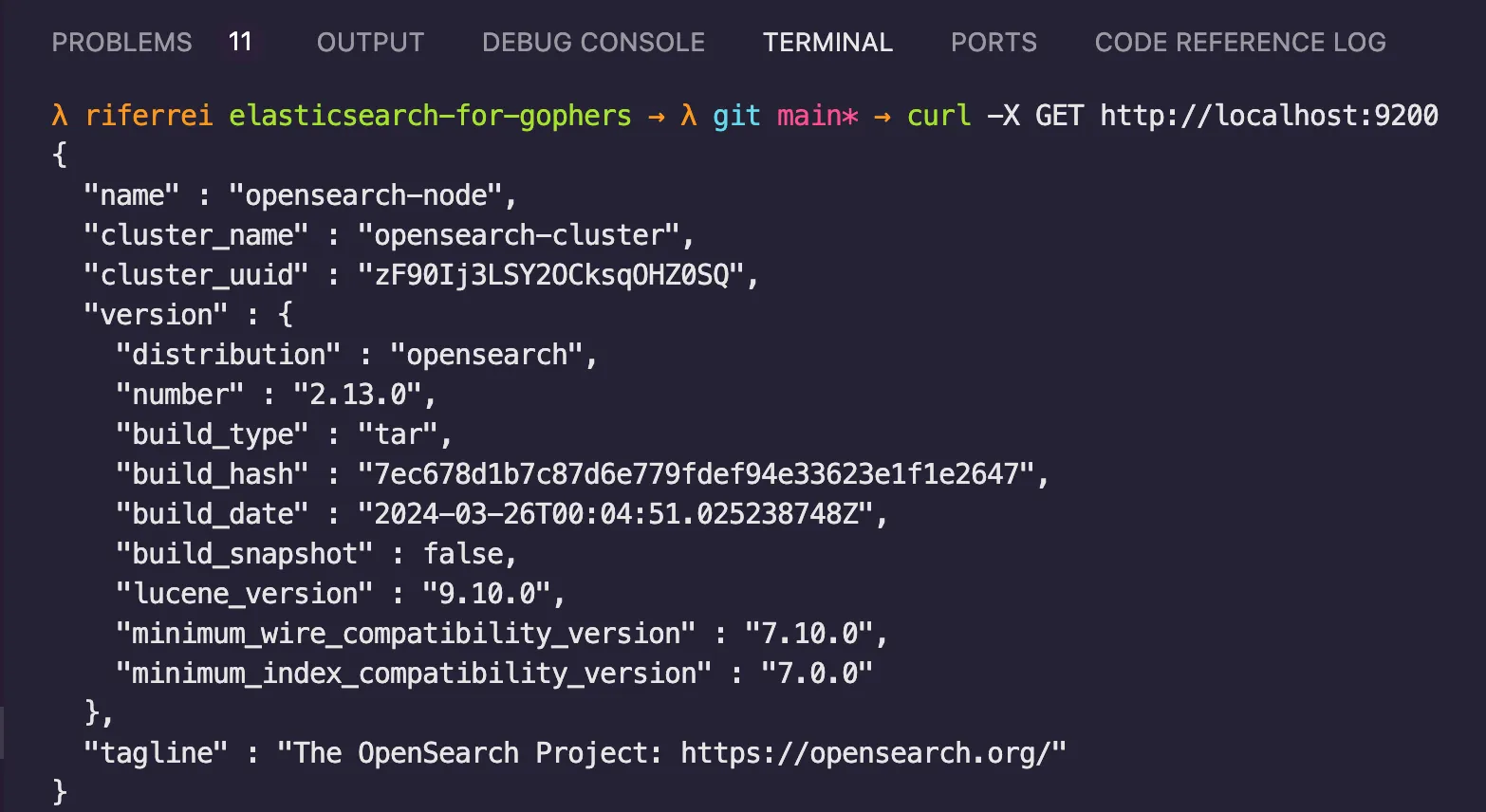

driver: bridgedocker.elastic.co/elasticsearch/elasticsearch to opensearchproject/opensearch. However, things are not that simple. The container image dictates different implementation aspects that affect the way you customize the containers. So I decided to use Amazon Q to do this migration for me, but giving a reference about the ideal solution as part of the prompt. This was the structure of the prompt:"Consider the following Docker Compose file as a reference of how to implement OpenSearch with Docker.<DOCKER_COMPOSE_SAMPLE>

Migrate the Docker Compose file below considering the OpenSearch reference provided. Make sure to keep the same ports exposed.<EXISTING_DOCKER_COMPOSE_FILE>"

    services:
      opensearch:
        image: opensearchproject/opensearch:latest
        container_name: opensearch
        hostname: opensearch
        environment:
          - cluster.name=opensearch-cluster
          - node.name=opensearch-node
          - discovery.type=single-node
          - bootstrap.memory_lock=true
          - "OPENSEARCH_JAVA_OPTS=-Xms1g -Xmx1g"
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        ports:
          - 9200:9200
          - 9600:9600
        healthcheck:
          interval: 20s
          retries: 10
          test: curl -s http://localhost:9200/_cluster/health | grep -vq '"status":"red"'

      opensearch-dashboards:
        image: opensearchproject/opensearch-dashboards:latest
        container_name: opensearch-dashboards
        hostname: opensearch-dashboards
        depends_on:
          opensearch:
            condition: service_healthy
        environment:
          - 'OPENSEARCH_HOSTS=["http://opensearch:9200"]'
        ports:
          - 5601:5601
        expose:
          - "5601"
        healthcheck:
          interval: 10s
          retries: 20
          test: curl --write-out '"%HTTP %{http_code}"' --fail --silent --output /dev/null http://localhost:5601/api/status

    networks:
      default:
        name: os4gophers

    services:
      opensearch:
        image: opensearchproject/opensearch:latest
        container_name: opensearch
        hostname: opensearch
        environment:
          - cluster.name=opensearch-cluster
          - node.name=opensearch-node
          - discovery.type=single-node
          - bootstrap.memory_lock=true
          - "OPENSEARCH_JAVA_OPTS=-Xms1g -Xmx1g"
          - "DISABLE_INSTALL_DEMO_CONFIG=true"  # added (line 12)
          - "DISABLE_SECURITY_PLUGIN=true"  # added (line 13)
        ulimits:
          memlock:
            soft: -1
            hard: -1
          nofile:
            soft: 65536
            hard: 65536
        ports:
          - 9200:9200
          - 9600:9600
        healthcheck:
          interval: 20s
          retries: 10
          test: curl -s http://localhost:9200/_cluster/health | grep -vq '"status":"red"'

      opensearch-dashboards:
        image: opensearchproject/opensearch-dashboards:latest
        container_name: opensearch-dashboards
        hostname: opensearch-dashboards
        depends_on:
          opensearch:
            condition: service_healthy
        environment:
          - 'OPENSEARCH_HOSTS=["http://opensearch:9200"]'
          - "DISABLE_SECURITY_DASHBOARDS_PLUGIN=true"  # added (line 38)
        ports:
          - 5601:5601
        expose:
          - "5601"
        healthcheck:
          interval: 10s
          retries: 20
          test: curl --write-out '"%HTTP %{http_code}"' --fail --silent --output /dev/null http://localhost:5601/api/status

    networks:
      default:
        name: os4gophers

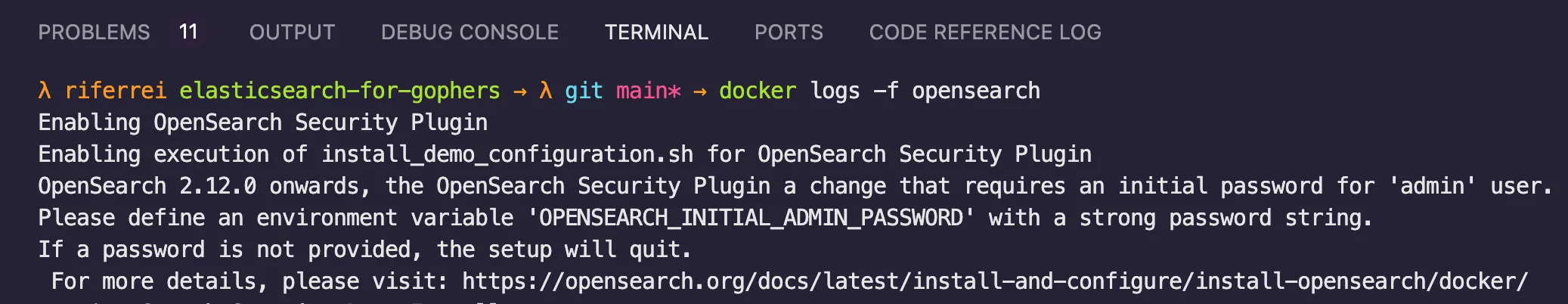

12, 13, and 38. After adding these changes, I was able to execute the Docker Compose file without any errors.

"Migrate the Terraform code below to replace the elasticsearch resource with one called opensearch. Moreover, implement the code using Amazon OpenSearch instead of Elastic Cloud."

    terraform {
      required_version = ">= 0.12.29"
      required_providers {
        ec = {
          source  = "elastic/ec"
          version = "0.2.1"
        }
      }
    }

    provider "ec" {
      apikey = var.ec_api_key
    }

    variable "ec_api_key" {
      type = string
    }

    variable "deployment_name" {
      type = string
    }

    variable "deployment_template_id" {
      type = string
    }

    variable "cloud_region" {
      type = string
    }

    data "ec_stack" "latest" {
      version_regex = "latest"
      region        = var.cloud_region
    }

    resource "ec_deployment" "elasticsearch" {
      name                   = var.deployment_name
      deployment_template_id = var.deployment_template_id
      region                 = data.ec_stack.latest.region
      version                = data.ec_stack.latest.version
      elasticsearch {
        autoscale = "true"
        topology {
          id         = "hot_content"
          size       = "8g"
          zone_count = "2"
        }
      }
      kibana {
        topology {
          size       = "4g"
          zone_count = "2"
        }
      }
    }

    output "elasticsearch_endpoint" {
      value = ec_deployment.elasticsearch.elasticsearch[0].https_endpoint
    }

    output "kibana_endpoint" {
      value = ec_deployment.elasticsearch.kibana[0].https_endpoint
    }

    output "elasticsearch_username" {
      value = ec_deployment.elasticsearch.elasticsearch_username
    }

    output "elasticsearch_password" {
      value     = ec_deployment.elasticsearch.elasticsearch_password
      sensitive = true
    }

    terraform {
      required_version = ">= 0.12.29"
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 3.0"
        }
      }
    }

    provider "aws" {
      region = var.region
    }

    variable "region" {
      type = string
    }

    resource "aws_opensearch_domain" "example" {
      domain_name           = var.domain_name
      elasticsearch_version = "OpenSearch_1.0"

      cluster_config {
        instance_type          = "m5.large.elasticsearch"
        instance_count         = 1
        zone_awareness_enabled = true
        zone_awareness_configuration {
          availability_zone_count = 2
        }
      }

      ebs_options {
        ebs_enabled = true
        volume_size = 10
      }

      node_to_node_encryption {
        enabled = true
      }

      encrypt_at_rest {
        enabled = true
      }

      domain_endpoint_options {
        enforce_https       = true
        tls_security_policy = "Policy-Min-TLS-1-2-2019-07"
      }
    }

    output "endpoint" {
      value = aws_opensearch_domain.example.endpoint
    }

    output "arn" {
      value = aws_opensearch_domain.example.arn
    }

    variable "domain_name" {
      type    = string
      default = "os4gophers"
    }

    variable "opensearch_username" {
      type    = string
      default = "opensearch_user"
    }

    variable "opensearch_password" {
      type      = string
      default   = "W&lcome123"
      sensitive = true
    }

    data "aws_caller_identity" "current" {}

    data "aws_region" "current" {}

    resource "aws_opensearch_domain" "opensearch" {
      domain_name    = var.domain_name
      engine_version = "OpenSearch_2.11"

      cluster_config {
        dedicated_master_enabled = true
        dedicated_master_type    = "m6g.large.search"
        dedicated_master_count   = 3
        instance_type            = "r6g.large.search"
        instance_count           = 3
        zone_awareness_enabled   = true
        zone_awareness_config {
          availability_zone_count = 3
        }
        warm_enabled = true
        warm_type    = "ultrawarm1.large.search"
        warm_count   = 2
      }

      advanced_security_options {
        enabled                        = true
        anonymous_auth_enabled         = false
        internal_user_database_enabled = true
        master_user_options {
          master_user_name     = var.opensearch_username
          master_user_password = var.opensearch_password
        }
      }

      domain_endpoint_options {
        enforce_https       = true
        tls_security_policy = "Policy-Min-TLS-1-2-2019-07"
      }

      encrypt_at_rest {
        enabled = true
      }

      ebs_options {
        ebs_enabled = true
        volume_size = 300
        volume_type = "gp3"
        throughput  = 250
      }

      node_to_node_encryption {
        enabled = true
      }

      access_policies = <<CONFIG
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": "es:*",
          "Principal": "*",
          "Effect": "Allow",
          "Resource": "arn:aws:es:${data.aws_region.current.name}:${data.aws_caller_identity.current.account_id}:domain/${var.domain_name}/*"
        }
      ]
    }
    CONFIG
    }

    output "domain_endpoint" {
      value = "https://${aws_opensearch_domain.opensearch.endpoint}"
    }

    output "opensearch_dashboards" {
      value = "https://${aws_opensearch_domain.opensearch.dashboard_endpoint}"
    }