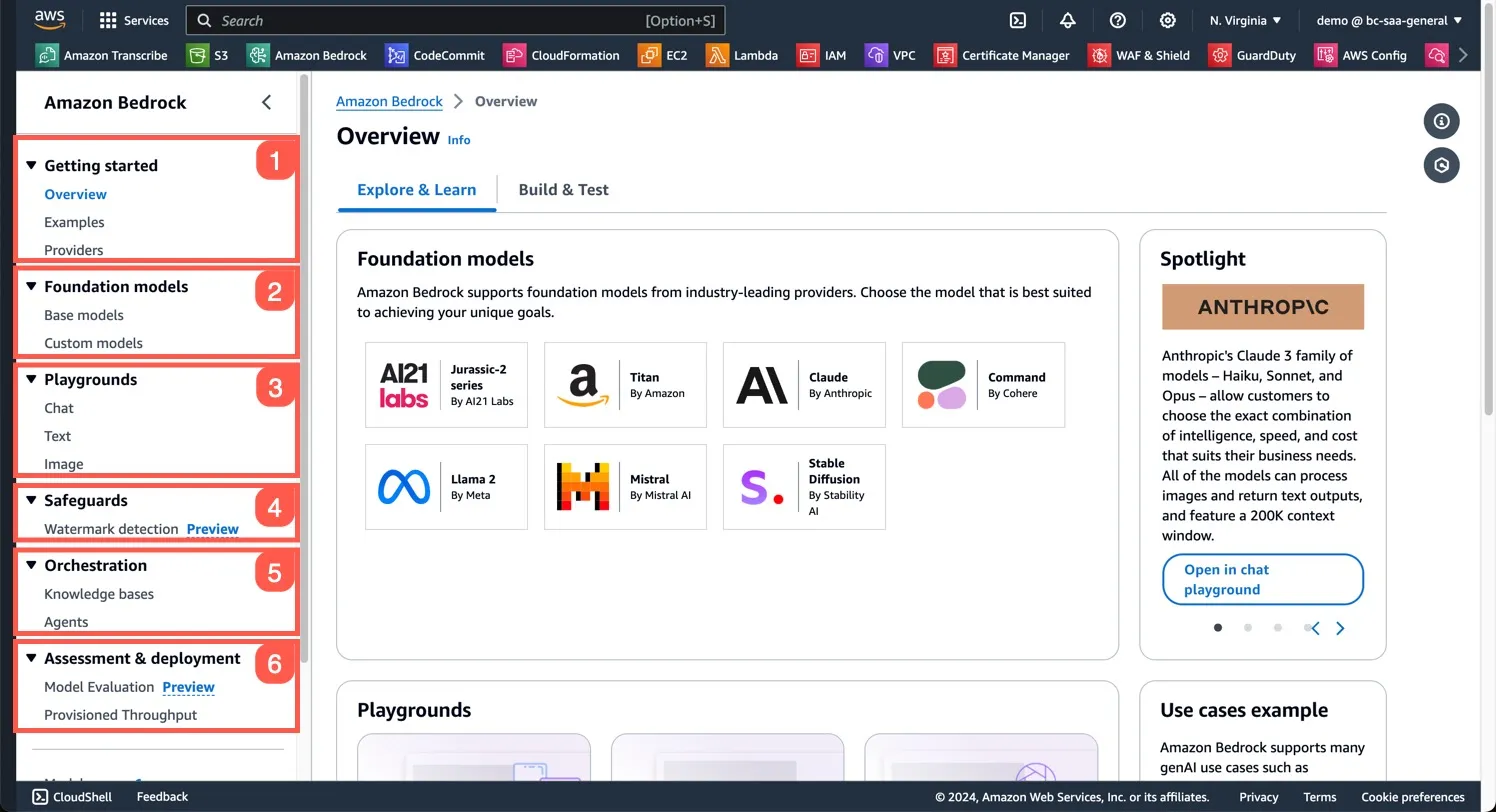

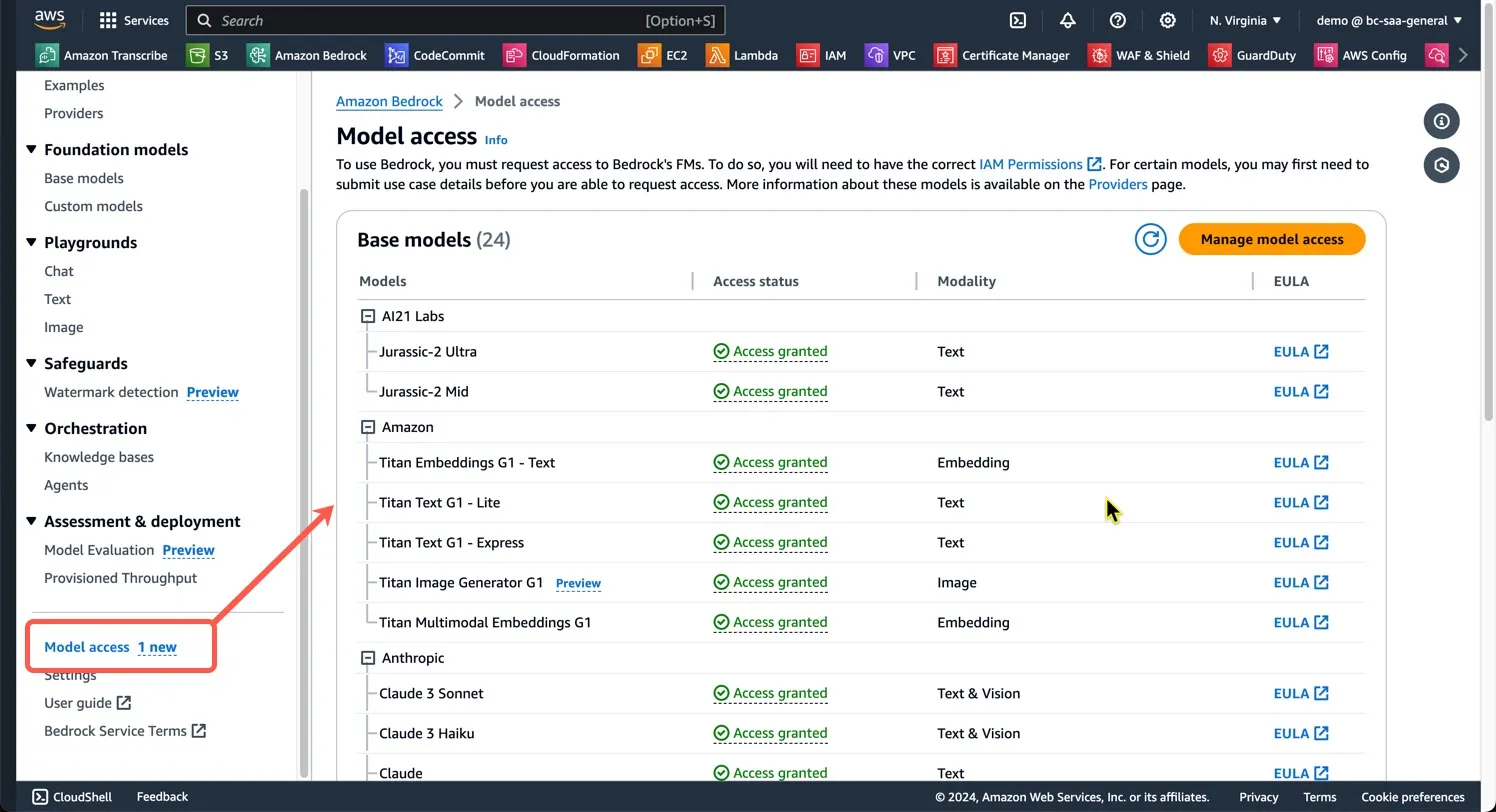

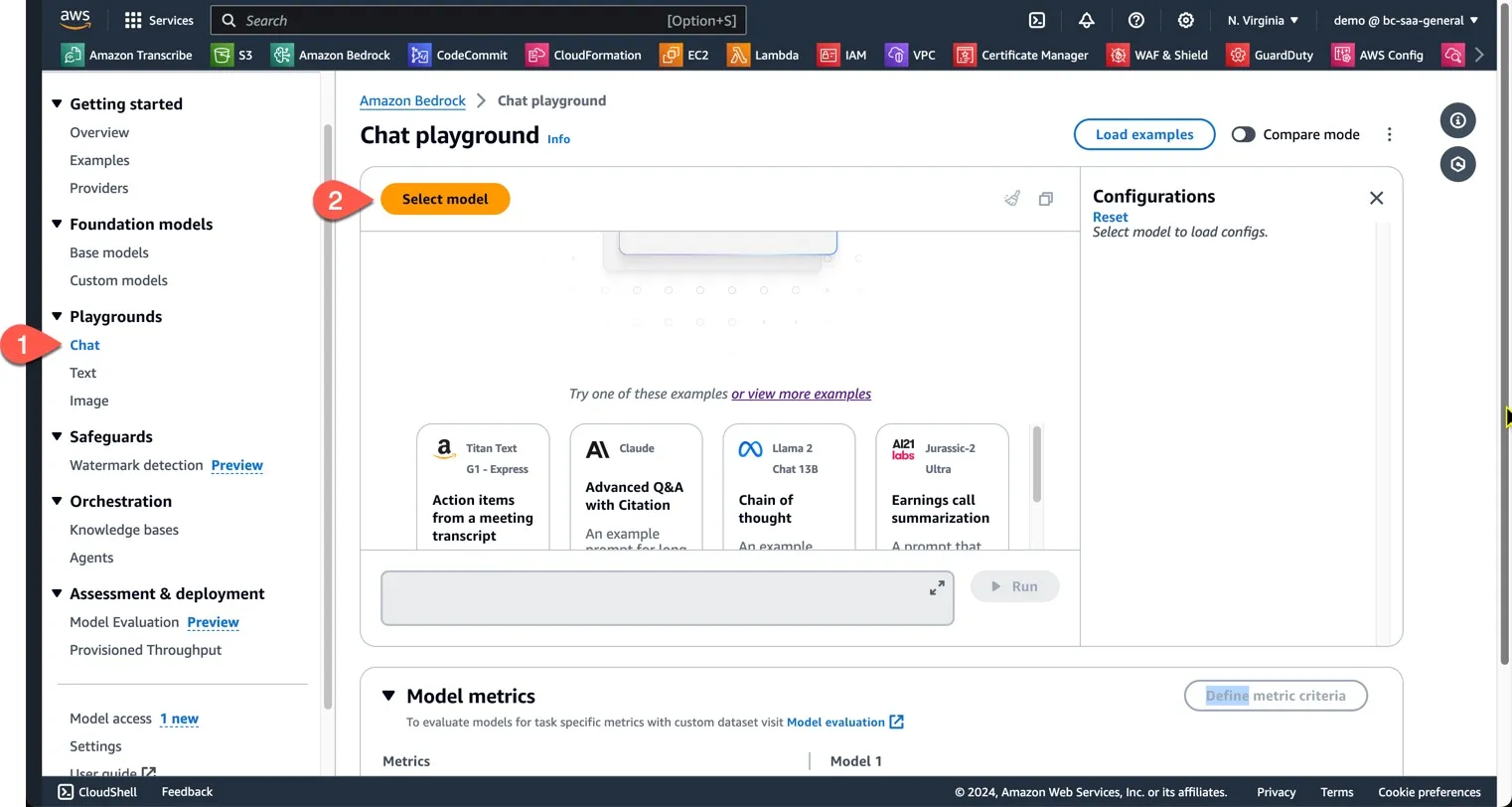

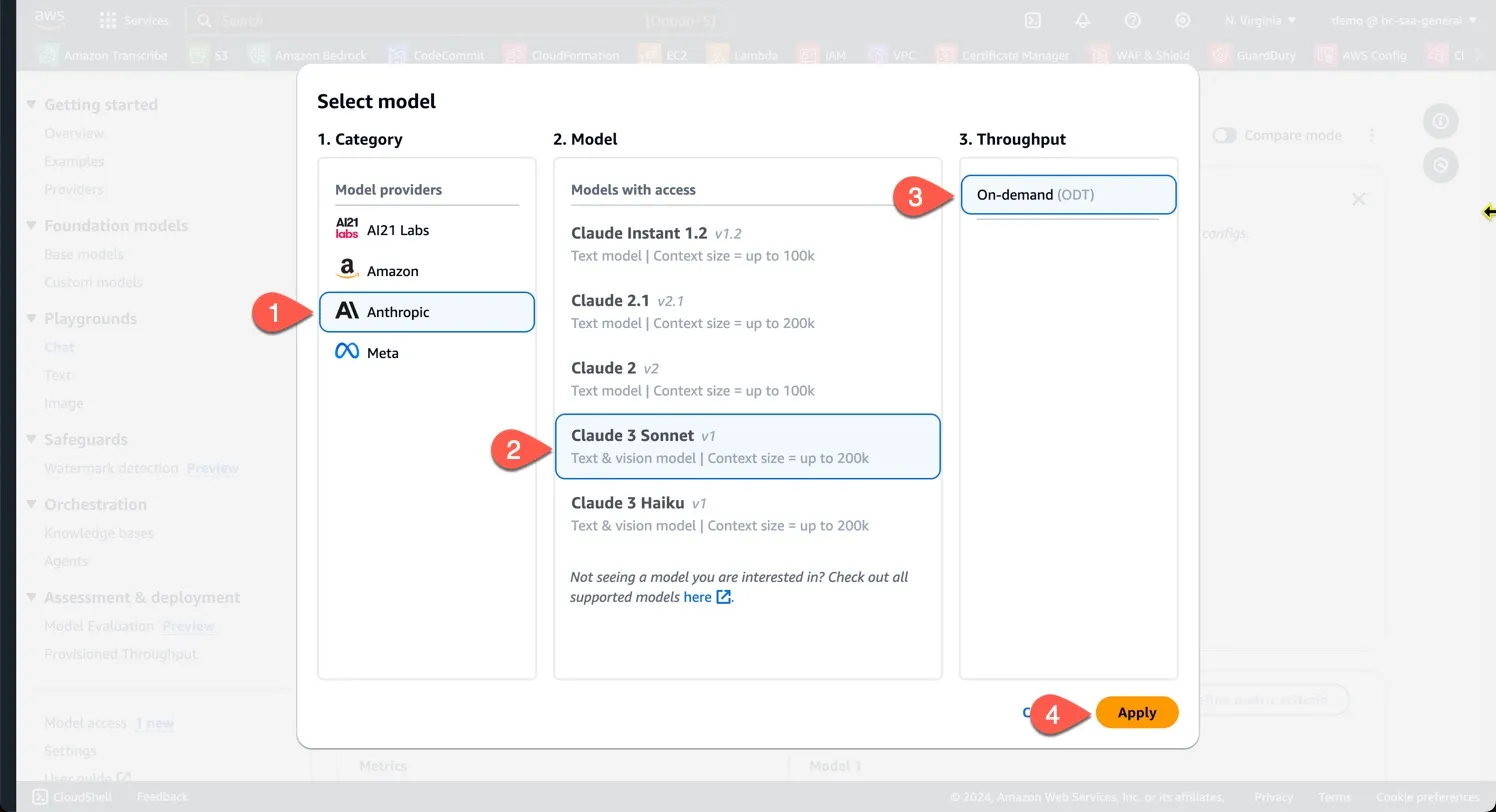

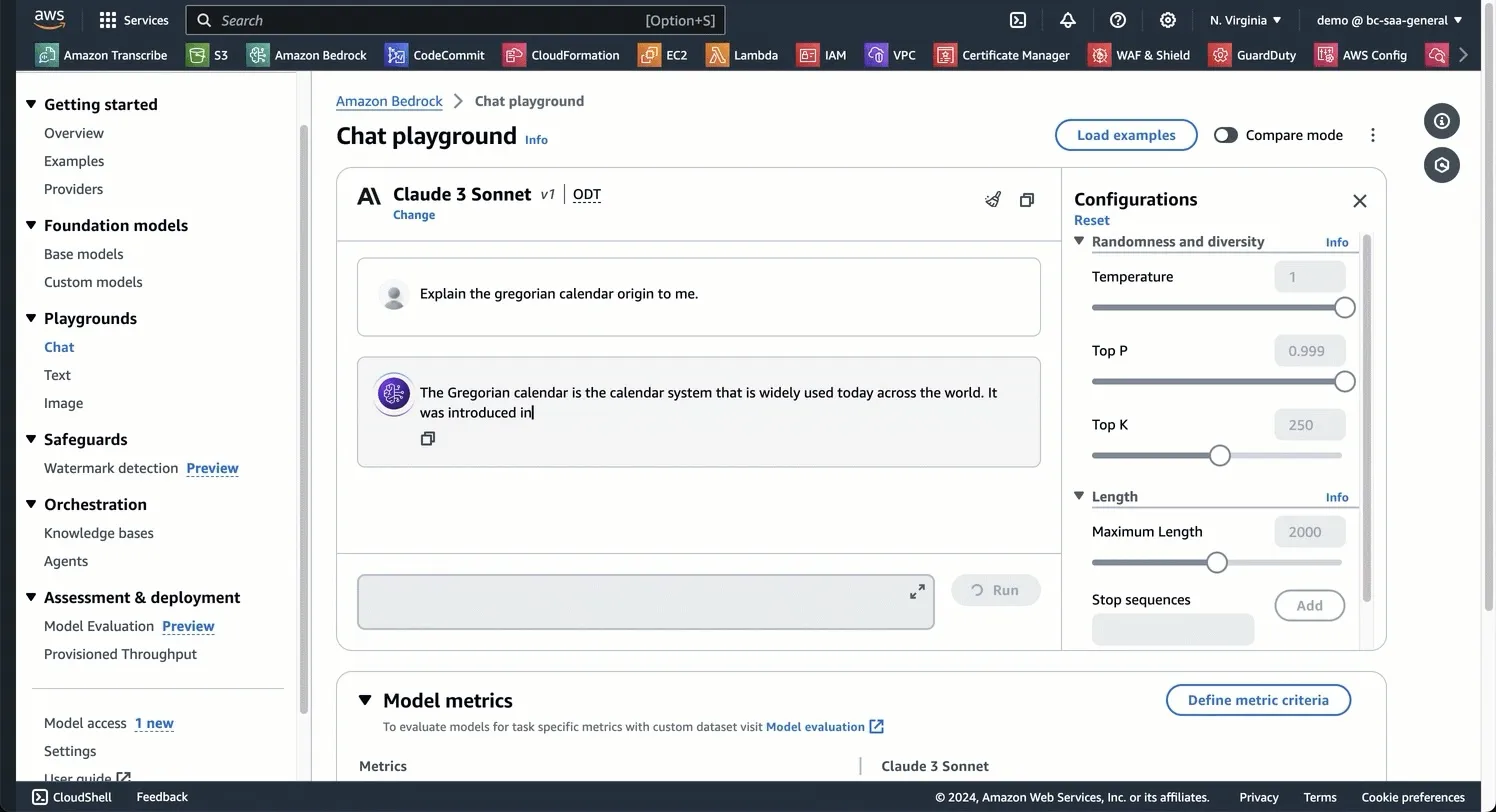

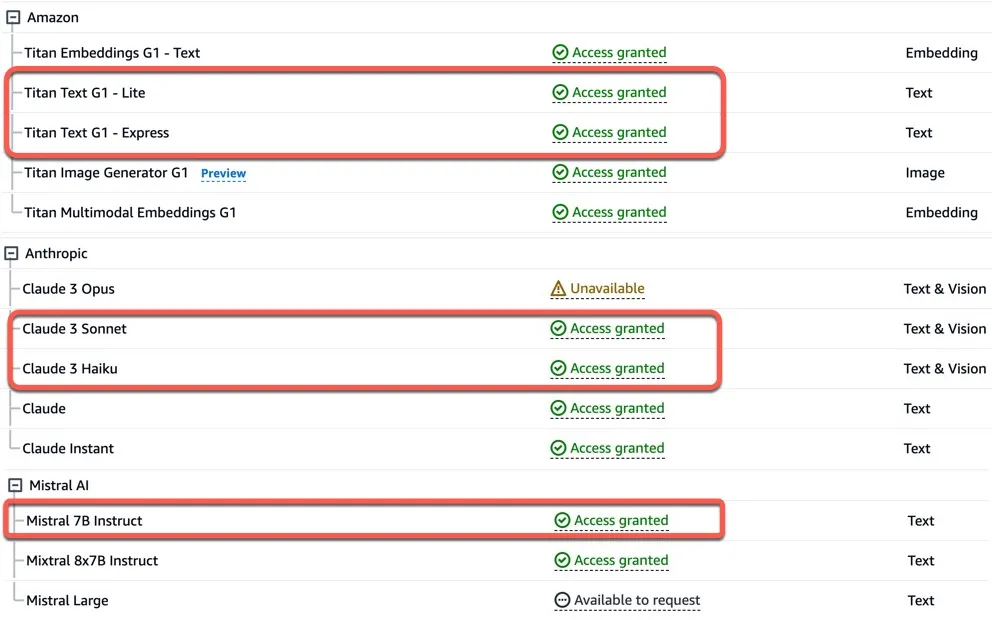

Getting started with different LLMs on Amazon Bedrock

From Anthropic's Claude 3 family to Mistral AI's models, there are plenty of LLMs to start experimenting with on Amazon Bedrock. Here are the basics.

- On-Demand

- Batch

- Provisioned Throughput

- Model Customization

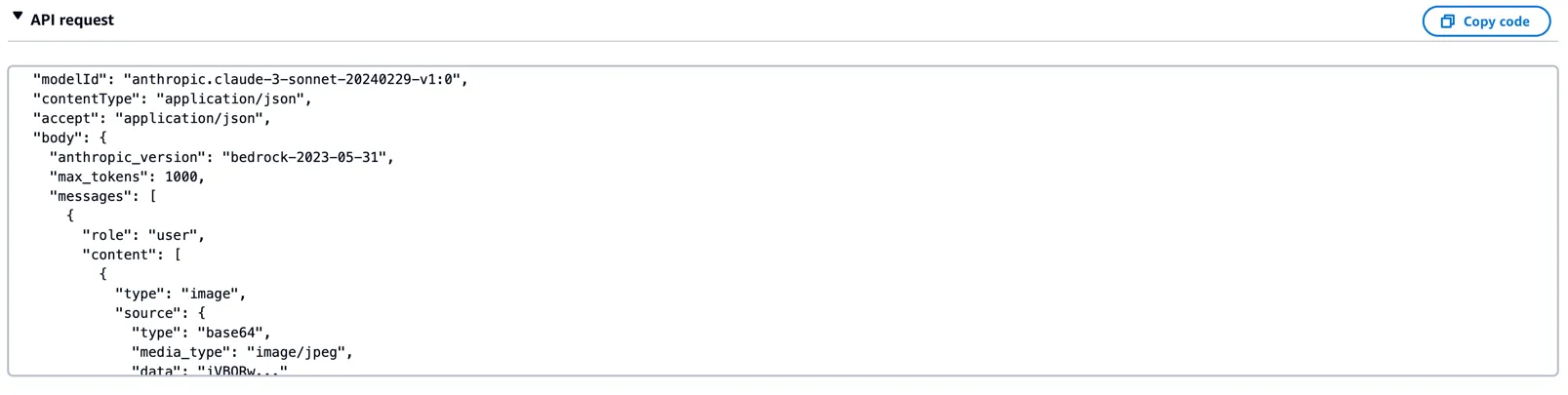

    # Import JSON and Boto3.
    import json

    import boto3

    # Initialize the Bedrock runtime client.
    bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-west-2")


    # Next, we create a function called `send_prompt_to_claude` that takes a single argument, `prompt`. We create a dictionary called `prompt_config` with the configuration of the prompt we send to Claude. Notice that we define the version, the max_tokens, and the message.
    def send_prompt_to_claude(prompt):
        try:
            prompt_config = {
                "anthropic_version": "bedrock-2023-05-31",
                "max_tokens": 1000,
                "messages": [
                    {
                        "role": "user",
                        "content": prompt
                    }
                ]
            }

            # We convert the `prompt_config` dictionary into a JSON string.
            body = json.dumps(prompt_config)

            # Next, `modelId`, `contentType`, and `accept` set the values that are required by the `bedrock.invoke_model` method. Have a look at the [Boto3 documentation](https://brandonjcarroll.com/links/9lmrs) and you will see the [request syntax](https://brandonjcarroll.com/links/0zfs2) that's required. In this case the request and response should be in JSON.
            modelId = "anthropic.claude-3-sonnet-20240229-v1:0"
            contentType = "application/json"
            accept = "application/json"

            # We then invoke the model, and extract and return the response.
            response = bedrock.invoke_model(
                modelId=modelId,
                contentType=contentType,
                accept=accept,
                body=body
            )
            response_body = json.loads(response.get("body").read())
            claude_response = response_body.get("content")[0]["text"].strip()
            return claude_response

        # Lastly, we have an exception-handling block that catches any exception and gives us some details.
        except Exception as e:
            print(f"An error occurred while invoking Claude: {e}")
            return "An error occurred."


    # Next we create an infinite loop using `while True`. Inside the loop, the user is prompted to enter their question using the `input` function. The message `"Enter your question (or 'q' to quit): "` is displayed to the user. If the user enters 'q' (case-insensitive), the `break` statement is executed, which terminates the loop.
    if __name__ == "__main__":
        while True:
            user_input = input("Enter your question (or 'q' to quit): ")
            if user_input.lower() == 'q':
                break

            # Finally, the question entered by the user is passed as an argument to the `send_prompt_to_claude` function. The prompt is sent to Claude and the response is retrieved. The response is stored in the `response` variable and printed. Our loop then starts over.
            response = send_prompt_to_claude(user_input)
            print(f"\n\n-----------\nClaude's response:\n\n {response}\n -----------\n")
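The script above builds the request, calls Bedrock, and parses the reply in one function. As a minimal sketch, the two pure steps (building the body and extracting the text) can be pulled out so they can be checked without AWS credentials. The helper names `build_claude_body` and `extract_claude_text` are hypothetical, and `fake_response` is hand-built stand-in data, not real model output.

```python
import io
import json


def build_claude_body(prompt, max_tokens=1000):
    """Return the JSON request body used in the script above."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    })


def extract_claude_text(response):
    """Read the first text block from an invoke_model-style response dict."""
    response_body = json.loads(response["body"].read())
    return response_body["content"][0]["text"].strip()


# Hypothetical stand-in for an invoke_model response: "body" is a
# file-like object holding JSON bytes, which is all the parser relies on.
fake_response = {
    "body": io.BytesIO(json.dumps(
        {"content": [{"type": "text", "text": "  Hello from a stub.  "}]}
    ).encode("utf-8"))
}

print(json.loads(build_claude_body("Hi"))["messages"][0]["content"])
print(extract_claude_text(fake_response))
```

With helpers like these, `send_prompt_to_claude` would only need to pass `build_claude_body(prompt)` to `bedrock.invoke_model` and hand the result to `extract_claude_text`.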

[~/Documents/repos/beginning-bedrock]$ python claude.py

Enter your question (or 'q' to quit): Does an amazon VPC support a stateful firewall?

-----------

Claude's response:

Yes, an Amazon Virtual Private Cloud (VPC) supports stateful firewalls. Amazon VPC provides several options for deploying stateful firewalls:

1. AWS Network Firewall: AWS Network Firewall is a managed service that provides a stateful firewall with integrated intrusion prevention system (IPS) capabilities. It allows you to define firewall rules and policies to filter and inspect traffic across your VPCs.

2. Security Groups: Security groups in Amazon VPC act as stateful firewalls at the instance level. They control inbound and outbound traffic to and from your EC2 instances based on rules that you define.

3. Network ACLs (NACLs): Network ACLs are stateless firewalls that operate at the subnet level in a VPC. They provide an additional layer of security by allowing or denying traffic based on rules that you define.

4. Third-Party Virtual Firewalls: You can also deploy third-party virtual firewall appliances, such as solutions from vendors like Palo Alto Networks, Fortinet, and Check Point, within your VPC. These virtual appliances operate as stateful firewalls and can be integrated with your VPC's routing configuration.

While Security Groups and Network ACLs provide basic stateful and stateless firewall capabilities, respectively, you can deploy AWS Network Firewall or third-party virtual firewall appliances for more advanced stateful firewall features. These solutions offer capabilities like deep packet inspection, intrusion prevention, application-level filtering, and advanced threat protection.

-----------

Enter your question (or 'q' to quit):

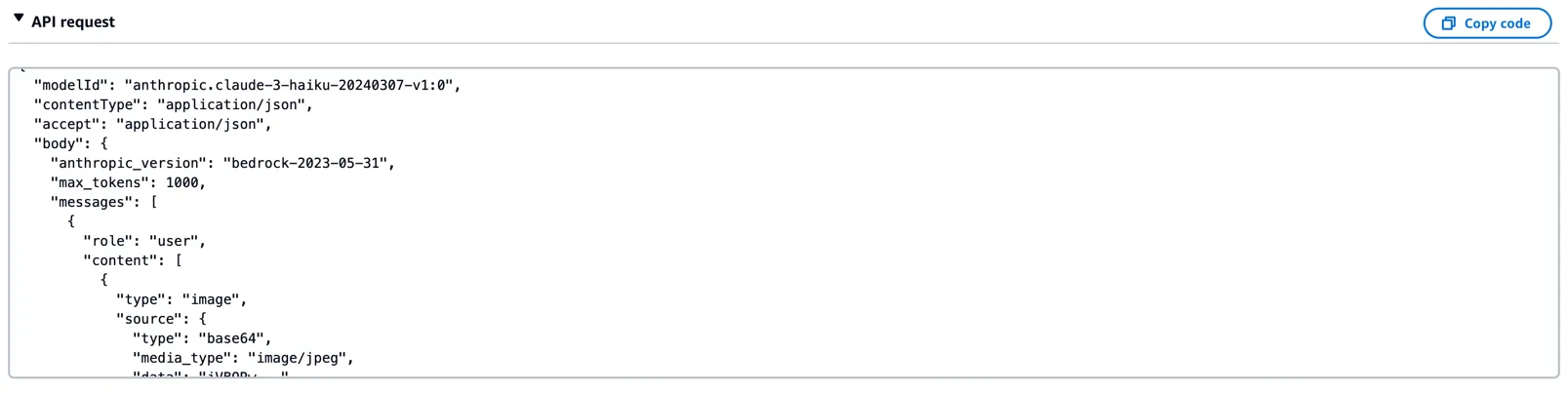

modelId = "anthropic.claude-3-haiku-20240307-v1:0"
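Since the only change between the Sonnet and Haiku runs is the model ID, one option is to keep the IDs in a small lookup and pick one by a short alias. This is a sketch only: the `CLAUDE_MODELS` table and `resolve_model_id` helper are hypothetical names, and the two IDs are the ones used in this post.

```python
# Model IDs taken from this post; the aliases are illustrative.
CLAUDE_MODELS = {
    "sonnet": "anthropic.claude-3-sonnet-20240229-v1:0",
    "haiku": "anthropic.claude-3-haiku-20240307-v1:0",
}


def resolve_model_id(alias="sonnet"):
    """Map a short alias to a Bedrock model ID, failing loudly on typos."""
    try:
        return CLAUDE_MODELS[alias.lower()]
    except KeyError:
        raise ValueError(f"Unknown model alias: {alias!r}") from None


print(resolve_model_id("haiku"))
```

The returned string can be assigned to `modelId` in the script, so switching models becomes a one-word change.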

[~/Documents/repos/beginning-bedrock]$ python claude.py

Enter your question (or 'q' to quit): Does an amazon VPC support a stateful firewall?

-----------

Claude's response:

Yes, Amazon Virtual Private Cloud (VPC) supports a stateful firewall, which is known as a Network ACL (NACL) and a Security Group.

1. Network ACL (NACL):

- NACL is a stateful firewall that operates at the subnet level in a VPC.

- It can be used to create inbound and outbound rules to control the traffic entering and leaving the subnet.

- NACL rules are evaluated in order, and the first rule that matches the traffic is applied.

- NACL keeps track of the state of the connection (i.e., whether it's an incoming or outgoing packet) and applies the appropriate rules accordingly.

2. Security Group:

- Security Groups are also a type of stateful firewall in Amazon VPC, operating at the instance level.

- Security Groups control inbound and outbound traffic to the instances they are associated with.

- Security Group rules are evaluated before the NACL rules, and they are stateful, meaning they remember the state of the connection.

- Security Groups allow you to specify specific ports, protocols, and IP ranges for both inbound and outbound traffic.

Both NACL and Security Groups provide stateful firewall functionality in Amazon VPC, allowing you to control and secure the network traffic in your virtual private environment.

-----------

Enter your question (or 'q' to quit):

modelID.

messages Mistral AI does not.

import json

import boto3

# Initialize Bedrock client

bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-west-2")

def send_prompt_to_mistral(prompt):

try:

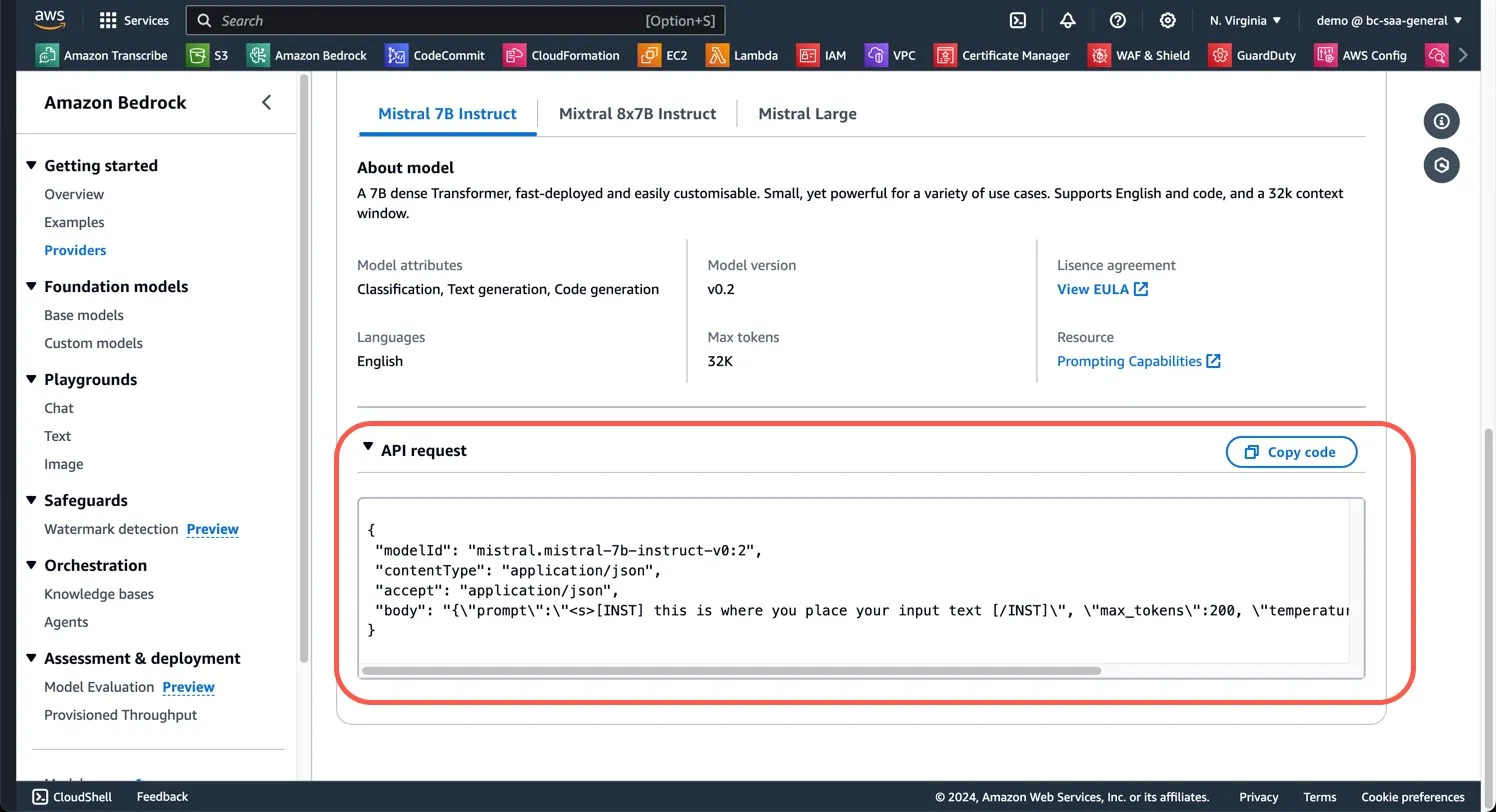

model_id = 'mistral.mistral-7b-instruct-v0:2'

prompt_config = f"""<s>[INST] {prompt} [/INST]"""

body = json.dumps({

"prompt": prompt_config,

"max_tokens": 2000,

"temperature": 0.7,

"top_p": 0.7,

"top_k": 50

})

response = bedrock.invoke_model(

body=body,

modelId=model_id

)

response_body = json.loads(response.get('body').read())

mistral_response = response_body.get('outputs')[0]['text'].strip()

return mistral_response

except Exception as e:

print(f"An error occurred while invoking Mistral: {e}")

return "An error occurred."

if __name__ == "__main__":

while True:

user_input = input("Enter your question (or 'q' to quit): ")

if user_input.lower() == 'q':

break

response = send_prompt_to_mistral(user_input)

print(f"\n\n-----------\nMistral's response:\n\n{response}\n\n -----------\n")
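The Mistral script differs from the Claude one mainly in the shape of the request and the response. The sketch below puts the two request bodies side by side for the same question and parses a Mistral-style reply. The helper names `claude_request`, `mistral_request`, and `extract_mistral_text` are hypothetical, and the stubbed response is made-up data used only to exercise the parser.

```python
import io
import json


def claude_request(prompt, max_tokens=1000):
    # Claude 3 on Bedrock: chat turns go under "messages".
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


def mistral_request(prompt, max_tokens=2000, temperature=0.7, top_p=0.7, top_k=50):
    # Mistral on Bedrock: one flat "prompt" string wrapped in [INST] tags.
    return {
        "prompt": f"<s>[INST] {prompt} [/INST]",
        "max_tokens": max_tokens,
        "temperature": temperature,
        "top_p": top_p,
        "top_k": top_k,
    }


def extract_mistral_text(response):
    # Mistral's text lives under "outputs", not "content".
    response_body = json.loads(response["body"].read())
    return response_body["outputs"][0]["text"].strip()


question = "Does an amazon VPC support a stateful firewall?"
print(sorted(claude_request(question)))
print(sorted(mistral_request(question)))

# Hypothetical stub of an invoke_model response, for illustration only.
stub = {"body": io.BytesIO(b'{"outputs": [{"text": " A stubbed answer. "}]}')}
print(extract_mistral_text(stub))
```

Printing the sorted keys makes the difference visible at a glance: one body carries `messages`, the other carries `prompt` plus sampling parameters.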

import json

import boto3

# Initialize Bedrock client

bedrock = boto3.client(service_name="bedrock-runtime", region_name="us-west-2")

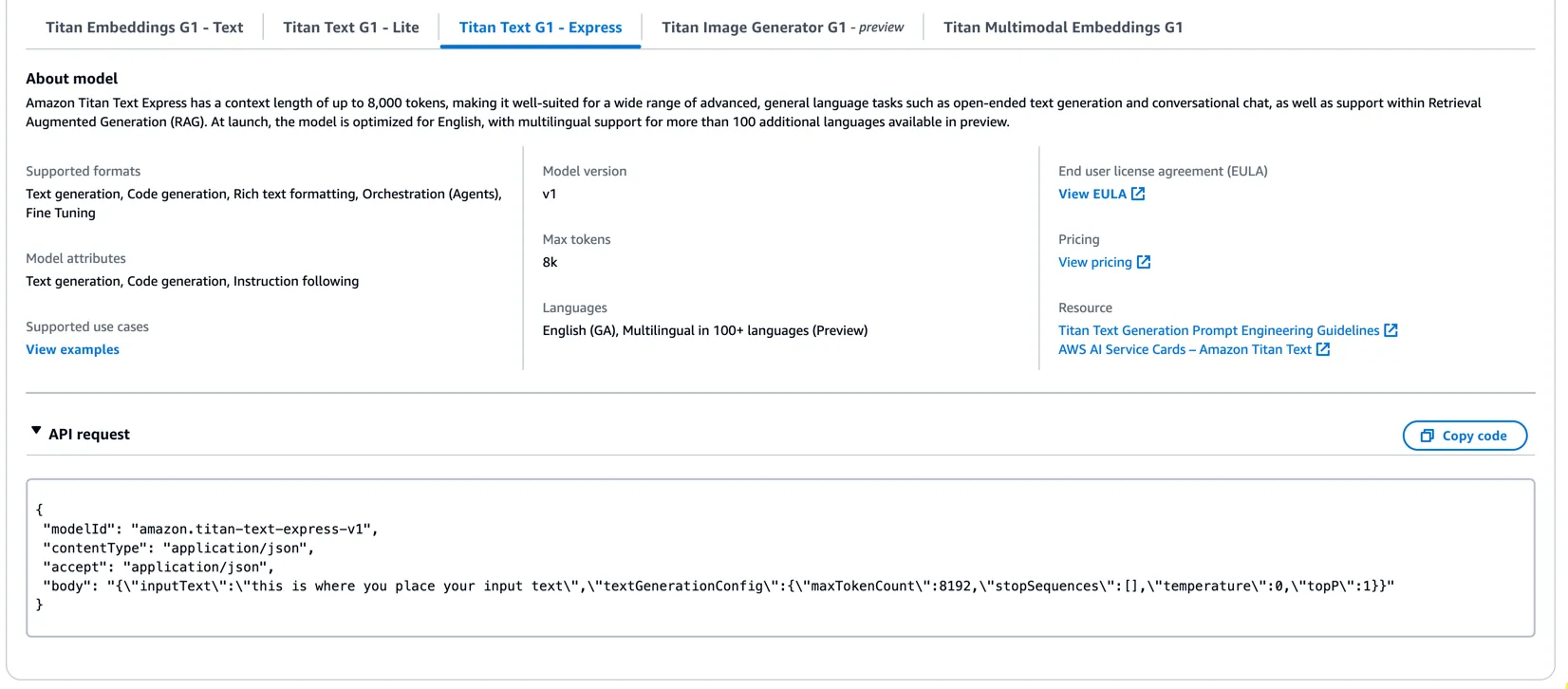

def send_prompt_to_titan(prompt):

try:

model_id = 'amazon.titan-text-lite-v1'

contentType = 'application/json'

accept = 'application/json'

prompt_config = f"{prompt}"

body = json.dumps({

"inputText": prompt_config,

# "max_tokens": 2000,

# "temperature": 0.7,

# "top_p": 0.7,

# "top_k": 50

})

print (body)

response = bedrock.invoke_model(

body=body,

modelId = model_id,

contentType = contentType,

accept = accept

)

# Print the keys of the response dictionary

print("Keys in the response:", response.keys())

response_body = json.loads(response.get('body').read(),)

titan_response = response_body.get('results')[0]['outputText'].strip()

return titan_response

print (titan_response)

except Exception as e:

print(f"An error occurred while invoking titan: {e}")

return "An error occurred."

if __name__ == "__main__":

while True:

user_input = input("Enter your question (or 'q' to quit): ")

if user_input.lower() == 'q':

break

response = send_prompt_to_titan(user_input)

print(f"\n\n-----------\nTitan's response:\n\n{response}\n\n -----------\n")1
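The Titan script sends only `inputText` and leaves the sampling parameters commented out. Titan text models accept inference settings under a `textGenerationConfig` object, so a request that sets them might look like the sketch below. The helper names and the default values are illustrative assumptions, not recommendations, and the stubbed response is made-up data.

```python
import io
import json


def build_titan_body(prompt, max_token_count=512, temperature=0.7, top_p=0.9):
    """Build a Titan text request with explicit generation settings."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_token_count,  # illustrative default
            "temperature": temperature,        # illustrative default
            "topP": top_p,                     # illustrative default
        },
    })


def extract_titan_text(response):
    """Read the generated text from an invoke_model-style response dict."""
    response_body = json.loads(response["body"].read())
    return response_body["results"][0]["outputText"].strip()


print(build_titan_body("Does an amazon VPC support a stateful firewall?"))

# Hypothetical stub of an invoke_model response, for illustration only.
stub = {"body": io.BytesIO(b'{"results": [{"outputText": " A stubbed answer. "}]}')}
print(extract_titan_text(stub))
```

The string returned by `build_titan_body` can be passed as `body` to `bedrock.invoke_model` in place of the `inputText`-only payload.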

[~/Documents/repos/beginning-bedrock]$ python titan.py

Enter your question (or 'q' to quit): Does an amazon VPC support a stateful firewall?

{"inputText": "Does an amazon VPC support a stateful firewall?"}

Keys in the response: dict_keys(['ResponseMetadata', 'contentType', 'body'])

-----------

Titan's response:

Yes, Amazon VPC supports stateful firewall rules. Amazon VPC provides a logically isolated AWS environment in which you can launch AWS resources and create private networks. To enable stateful firewall rules in an Amazon VPC, you need to:

1. Create a VPC client in the required region.

2. Define the request parameter params with the VPC ID.

3. Send the request parameter params to the create firewall rule API with the help of the VPC client to enable stateful firewall rules.

4. Retrieve the response of the create firewall rule API.

Here is an example.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.