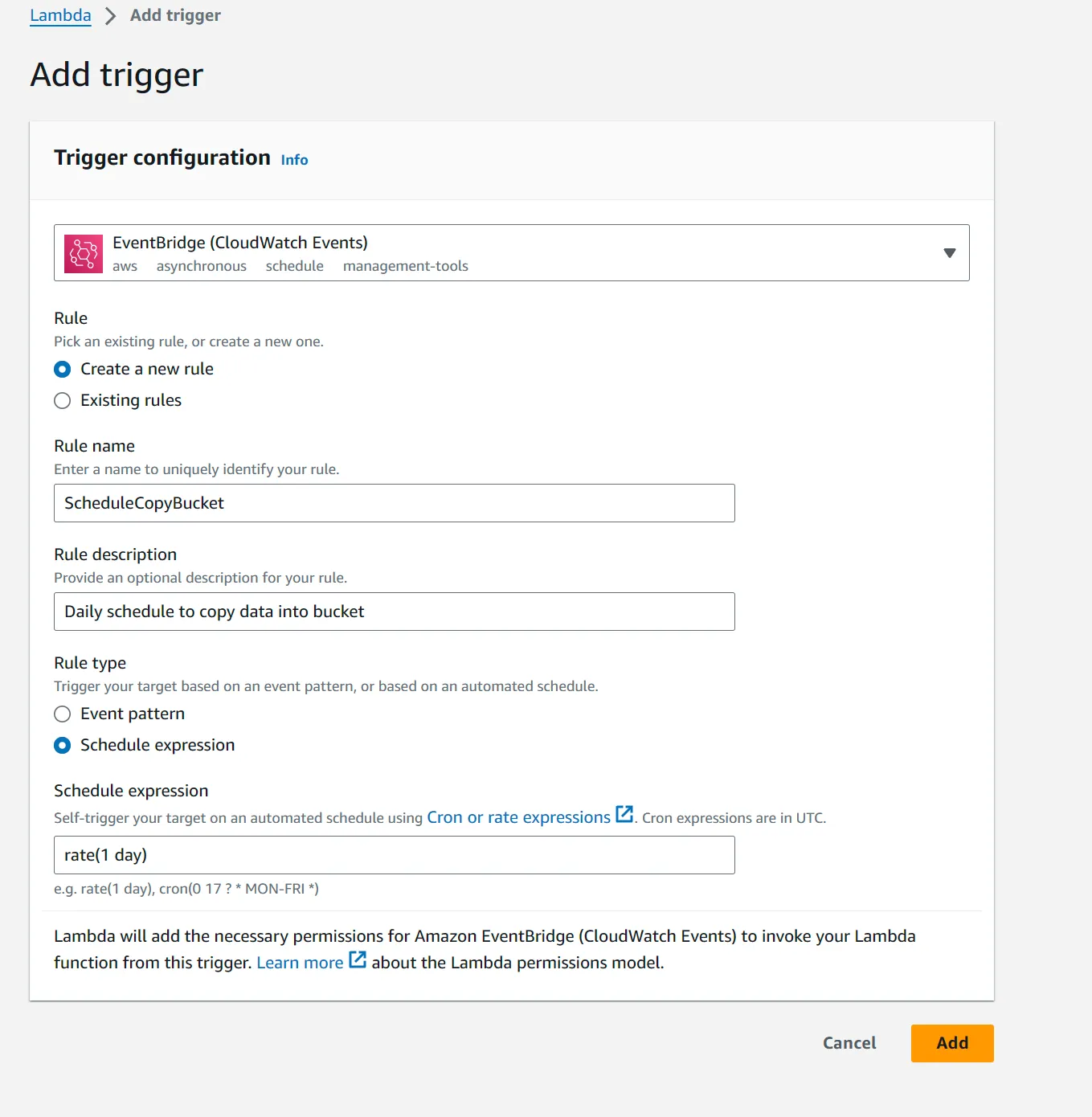

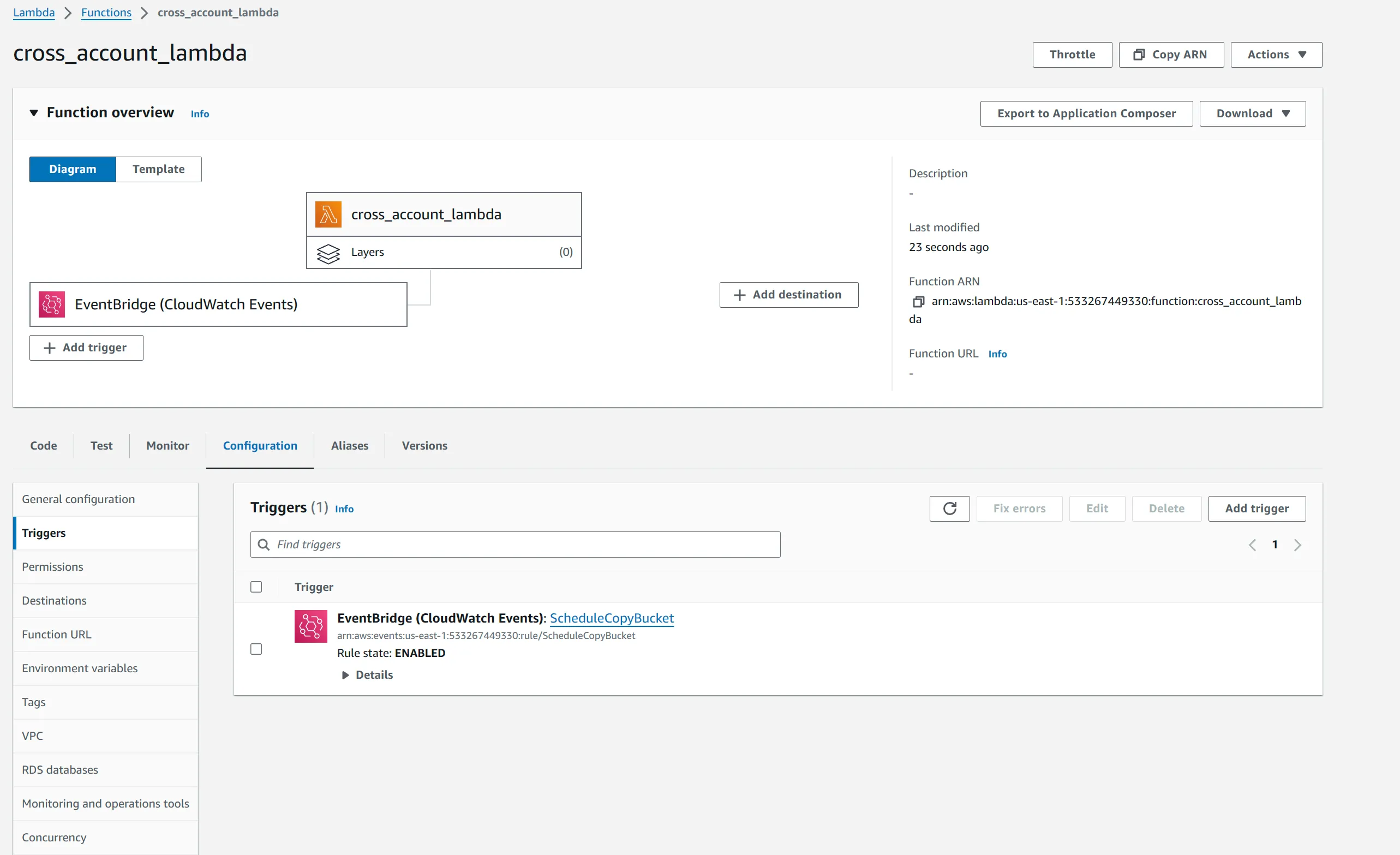

Cross-account S3 data transfer using EventBridge and Lambda

Transferring data from an S3 bucket in one AWS account to a bucket in another account, using a Lambda function invoked by an EventBridge scheduled trigger.
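The scheduled trigger could be wired to the copy function (created later in this walkthrough) roughly as follows. This is a minimal sketch, assuming Boto3 clients for EventBridge (`events`) and Lambda that use prod-account credentials in the function's region. The rule name, the permission statement ID and the target ID are hypothetical placeholders, and the clients are passed in so the helpers stay testable.

```python
"""Sketch: attach an EventBridge schedule to the copy Lambda (names are hypothetical)."""


def rate_expression(value: int, unit: str) -> str:
    """Build an EventBridge rate expression such as rate(1 day) or rate(6 hours).

    EventBridge expects a singular unit when value is 1 and a plural unit otherwise.
    """
    if value < 1:
        raise ValueError("rate value must be a positive integer")
    unit = unit.rstrip("s")  # normalise "days"/"hours"/"minutes" to singular
    if unit not in ("minute", "hour", "day"):
        raise ValueError(f"unsupported unit: {unit}")
    return f"rate({value} {unit if value == 1 else unit + 's'})"


def schedule_lambda(events_client, lambda_client, rule_name, function_name, schedule):
    """Create a scheduled rule and point it at the Lambda function; returns the rule ARN."""
    # put_rule creates the rule, or updates it if the name already exists
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )["RuleArn"]

    # Let EventBridge (this rule only) invoke the function.
    # Note: this call fails if the same StatementId was already added.
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # Register the function as the rule's target
    function_arn = lambda_client.get_function(FunctionName=function_name)["Configuration"]["FunctionArn"]
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "copy-lambda", "Arn": function_arn}],
    )
    return rule_arn
```

For example, `schedule_lambda(boto3.client("events"), boto3.client("lambda"), "copy-s3-daily", "my-copy-function", rate_expression(1, "day"))` would run a function named `my-copy-function` (hypothetical) once a day. A `cron(...)` expression can be passed instead of a rate expression if the copy must run at a fixed time.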

- Create a Amazon S3 bucket

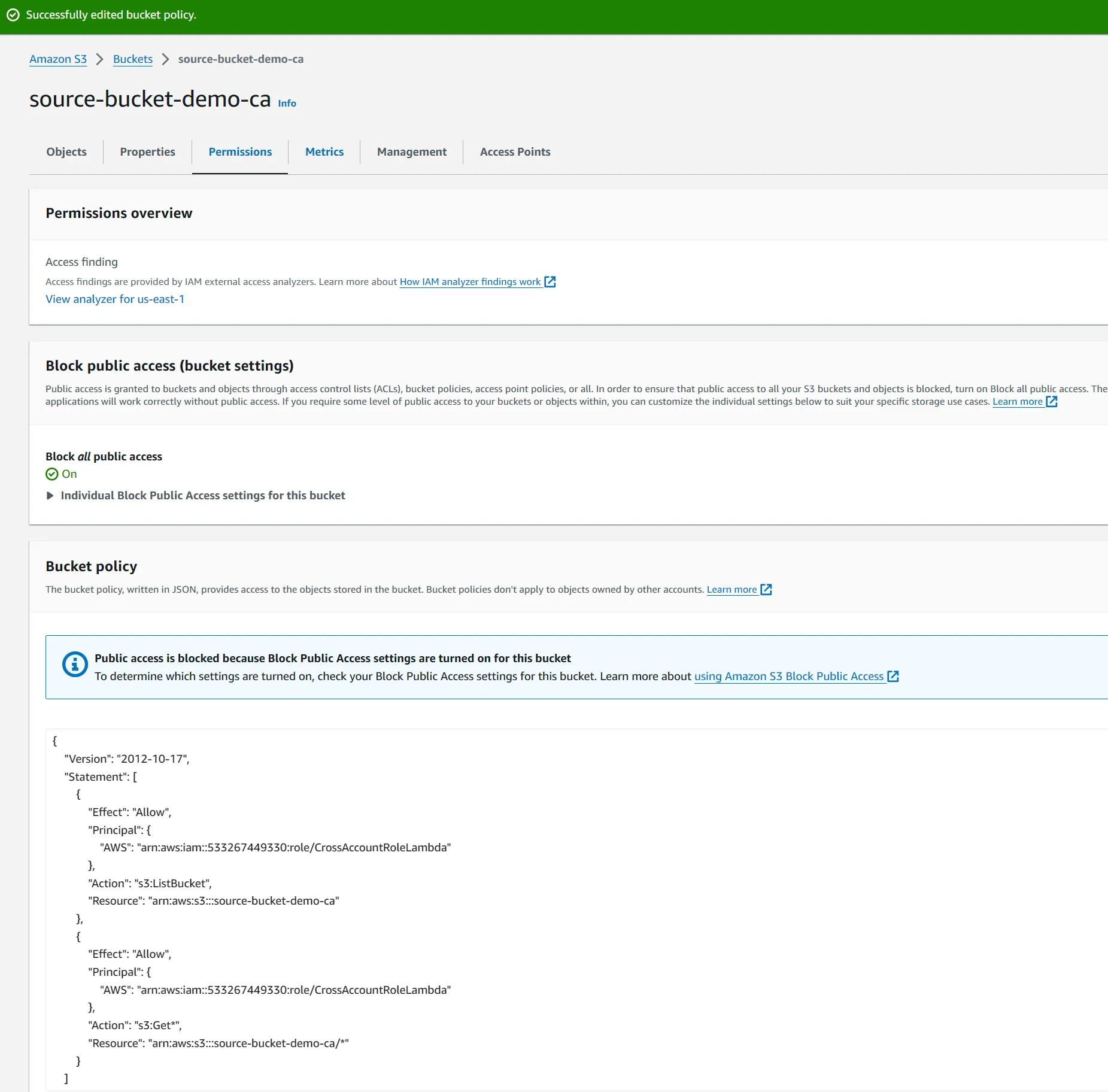

source-bucket-demo-cawith few dummy txt files to be copied over. - In the source bucket, add a bucket policy, to trust cross account role created in the prod account to allow list and get actions on the bucket contents.


```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::533267449330:role/CrossAccountRoleLambda"
      },
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::source-bucket-demo-ca"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::533267449330:role/CrossAccountRoleLambda"
      },
      "Action": "s3:Get*",
      "Resource": "arn:aws:s3:::source-bucket-demo-ca/*"
    }
  ]
}
```
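If you prefer to script this step, the same policy could be generated and attached with Boto3. This is a sketch, assuming an S3 client created with dev-account credentials (the account that owns the source bucket); the helper names are hypothetical. It also makes explicit why the two statements use different resources: `s3:ListBucket` acts on the bucket ARN, while object-level `Get*` actions need the `/*` suffix.

```python
import json


def build_source_bucket_policy(bucket_name: str, reader_role_arn: str) -> dict:
    """Rebuild the source-bucket policy for any bucket / cross-account role pair."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    principal = {"AWS": reader_role_arn}
    return {
        "Version": "2012-10-17",
        "Statement": [
            # ListBucket is a bucket-level action, so it targets the bucket ARN itself
            {"Effect": "Allow", "Principal": principal,
             "Action": "s3:ListBucket", "Resource": bucket_arn},
            # Get* actions apply to objects, so they target the bucket ARN plus "/*"
            {"Effect": "Allow", "Principal": principal,
             "Action": "s3:Get*", "Resource": f"{bucket_arn}/*"},
        ],
    }


def apply_bucket_policy(s3_client, bucket_name: str, policy: dict) -> None:
    """Attach the policy; the Policy parameter must be a JSON string, not a dict."""
    s3_client.put_bucket_policy(Bucket=bucket_name, Policy=json.dumps(policy))
```

Usage (with dev-account credentials): `apply_bucket_policy(boto3.client("s3"), "source-bucket-demo-ca", build_source_bucket_policy("source-bucket-demo-ca", "arn:aws:iam::533267449330:role/CrossAccountRoleLambda"))`.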

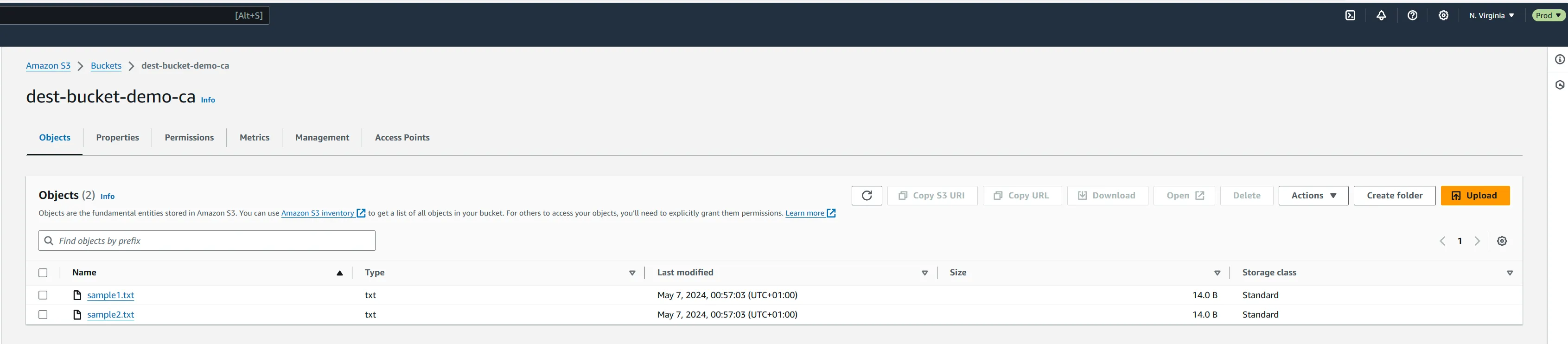

dev account to get the objects from the S3 bucket in dev account and copy into existing bucket. - In the S3 console, create an empty bucket for the files to be copied into. We will name it

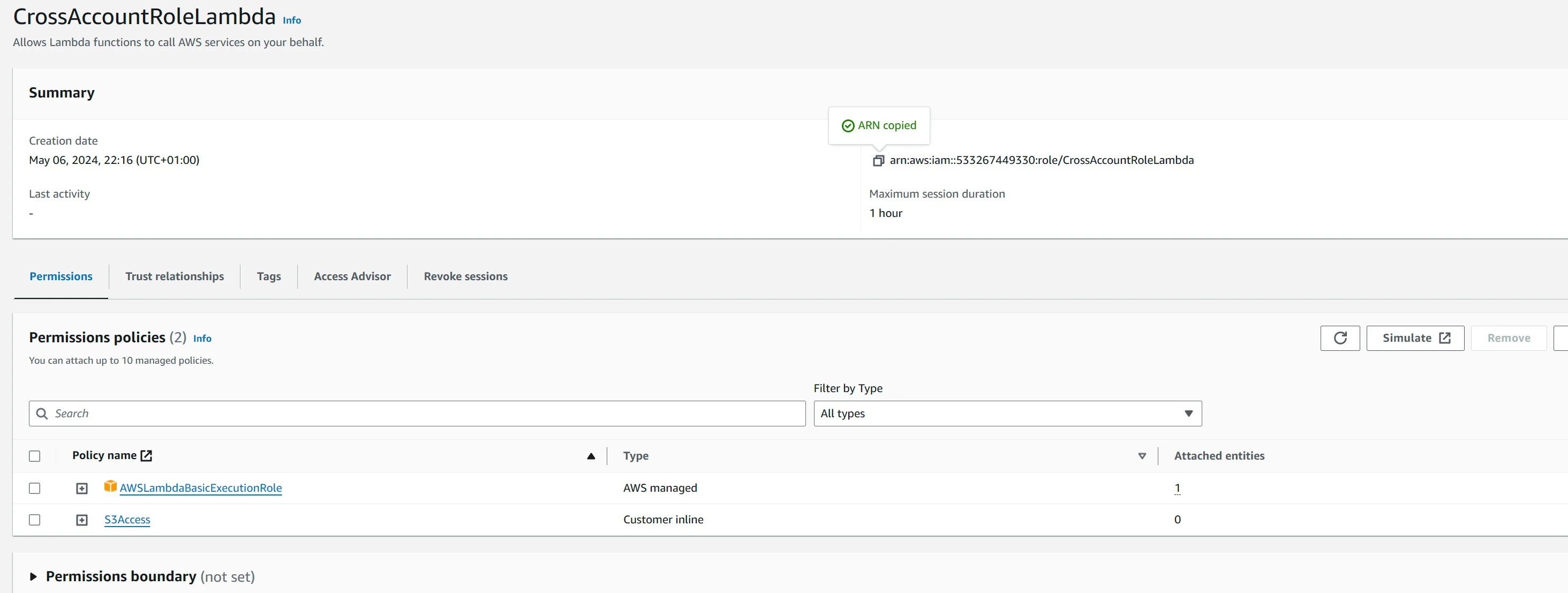

dest-bucket-demo-ca - In IAM console, we will need to create a new role for Lambda to assume. We added the

AWSLambdaBasicExecutionRolemanaged policy and an inline policy for S3 access.

The inline policy grants the following permissions across the dev and prod accounts:

- `s3:GetObject` on all objects in the `source-bucket-demo-ca` bucket in the dev account. The bucket ARN needs `/*` appended (a forward slash followed by `*`) so that it applies to all objects in the bucket.
- `s3:ListBucket` on the `source-bucket-demo-ca` bucket ARN itself.
- `s3:PutObject` on the objects in the destination bucket in the prod account. Again, the bucket ARN needs to be followed by `/*`.


```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject"
      ],
      "Resource": "arn:aws:s3:::source-bucket-demo-ca/*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::source-bucket-demo-ca"
    },
    {
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::dest-bucket-demo-ca/*"
    }
  ]
}
```

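The role setup in the IAM console (trust policy, managed policy and inline policy) could also be scripted. The sketch below assumes an IAM client created with prod-account credentials; the inline policy name `s3-cross-account-copy` is a hypothetical placeholder. It builds the same inline policy as the JSON above, a trust policy for the Lambda service like the one that follows, and attaches the AWS managed `AWSLambdaBasicExecutionRole` policy so the function can write CloudWatch Logs.

```python
import json

# AWS managed policy that lets the function write CloudWatch Logs
BASIC_EXECUTION_POLICY_ARN = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"

# Trust policy: only the Lambda service may assume the role
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow",
         "Principal": {"Service": "lambda.amazonaws.com"},
         "Action": "sts:AssumeRole"}
    ],
}


def build_copy_policy(source_bucket: str, dest_bucket: str) -> dict:
    """Inline policy: read from the source bucket, write into the destination bucket."""
    src = f"arn:aws:s3:::{source_bucket}"
    dst = f"arn:aws:s3:::{dest_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": f"{src}/*"},  # objects
            {"Effect": "Allow", "Action": "s3:ListBucket", "Resource": src},           # bucket
            {"Effect": "Allow", "Action": "s3:PutObject", "Resource": f"{dst}/*"},     # objects
        ],
    }


def create_copy_role(iam_client, role_name: str, source_bucket: str, dest_bucket: str) -> str:
    """Create the Lambda execution role and return its ARN."""
    role_arn = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(LAMBDA_TRUST_POLICY),
    )["Role"]["Arn"]
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=BASIC_EXECUTION_POLICY_ARN)
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName="s3-cross-account-copy",  # hypothetical inline policy name
        PolicyDocument=json.dumps(build_copy_policy(source_bucket, dest_bucket)),
    )
    return role_arn
```

Usage (with prod-account credentials): `create_copy_role(boto3.client("iam"), "CrossAccountRoleLambda", "source-bucket-demo-ca", "dest-bucket-demo-ca")`. Because IAM is eventually consistent, a newly created role may take a few seconds before Lambda accepts it.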

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

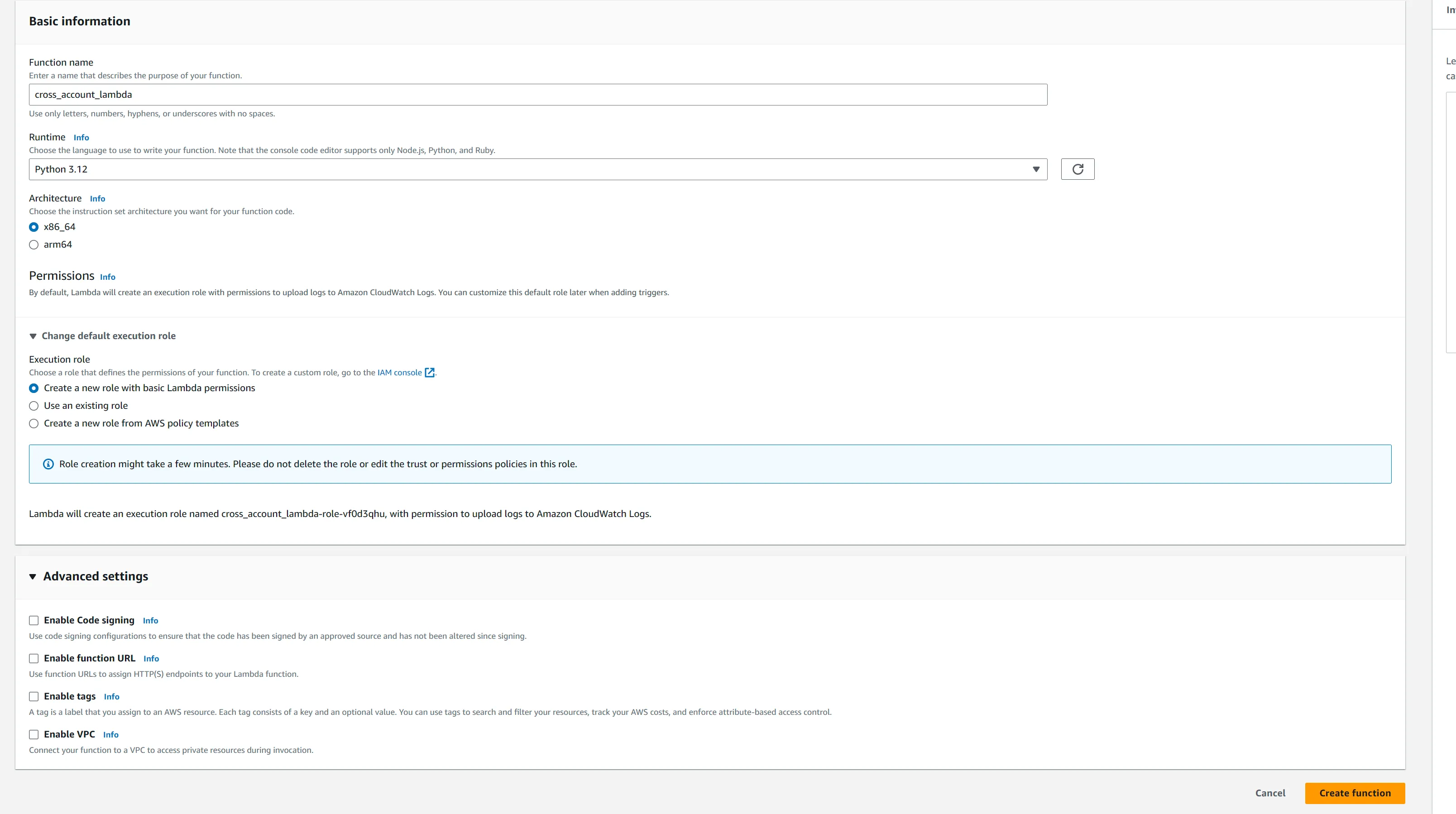

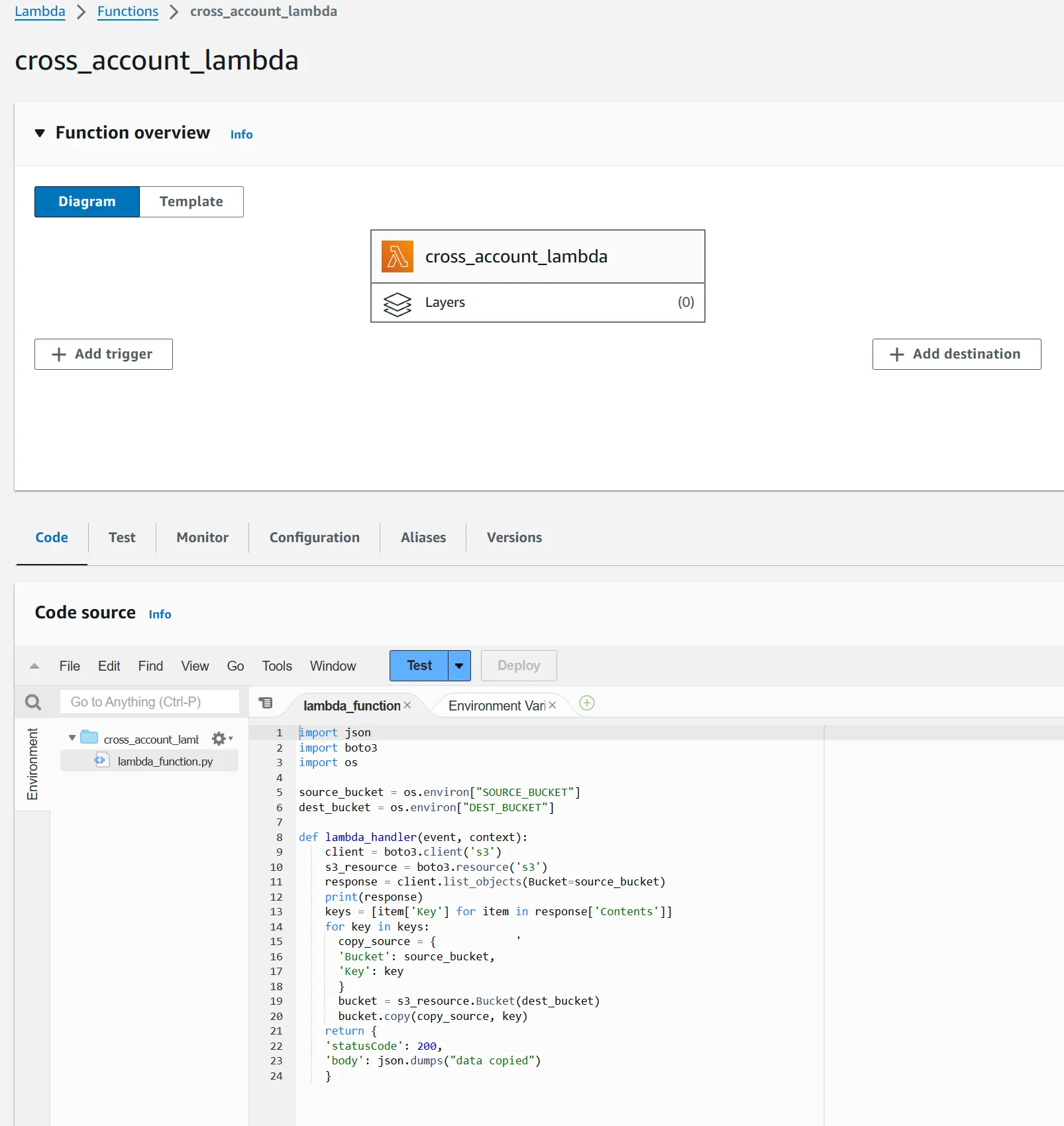

}dev Account and write contents to prod account bucket. Click create function and enter the following:- Function Name

- RunTime: Python3.12

- Switch to

Use Existing Roleoption and select the Role just created

The function code performs these steps:

- Creates an S3 client and an S3 resource with Boto3.
- Lists all the objects in the non-prod (dev) source bucket.
- Parses the listing to generate a list of object keys.
- Iterates through the list of keys and, for each key, builds a source dictionary named `copy_source` containing the source bucket name and the object key to be copied.
- Creates a Boto3 resource that represents the target S3 bucket using `s3_resource.Bucket()`.
- Uses the Bucket resource's `copy()` method to copy each source object to the target.
- Returns a `200` status code and a message in the body if successful.


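```python
import json
import os

import boto3

# Bucket names come from the function's environment variables
source_bucket = os.environ["SOURCE_BUCKET"]
dest_bucket = os.environ["DEST_BUCKET"]

# Create the clients once so warm invocations can reuse them
client = boto3.client('s3')
s3_resource = boto3.resource('s3')


def lambda_handler(event, context):
    # A single list call returns at most 1,000 keys, so page through the bucket
    paginator = client.get_paginator('list_objects_v2')
    keys = []
    for page in paginator.paginate(Bucket=source_bucket):
        # 'Contents' is missing when there are no objects to list
        keys.extend(item['Key'] for item in page.get('Contents', []))
    print(f"Found {len(keys)} objects in {source_bucket}")

    # Target bucket in the prod account
    bucket = s3_resource.Bucket(dest_bucket)

    for key in keys:
        copy_source = {
            'Bucket': source_bucket,
            'Key': key
        }
        # Managed copy: S3 copies the object server-side under the same key
        bucket.copy(copy_source, key)

    return {
        'statusCode': 200,
        'body': json.dumps("data copied")
    }
```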

Under the function's Configuration > Environment variables, add:

- `SOURCE_BUCKET`: `source-bucket-demo-ca`
- `DEST_BUCKET`: `dest-bucket-demo-ca`
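The console steps for creating the function and setting these environment variables could also be done with the Lambda API. This is a sketch, assuming a Lambda client with prod-account credentials, the role ARN from the IAM step, and the handler code saved as `lambda_function.py` (the console's default handler module for Python). The 60-second timeout is an assumption: the default is 3 seconds, which may be too short when copying many objects.

```python
import io
import zipfile


def zip_handler(source_code: str, filename: str = "lambda_function.py") -> bytes:
    """Package the handler source into an in-memory .zip for the Lambda API."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(filename, source_code)
    return buffer.getvalue()


def create_copy_function(lambda_client, function_name, role_arn, source_code,
                         source_bucket, dest_bucket):
    """Create the Python 3.12 function with the two bucket environment variables."""
    return lambda_client.create_function(
        FunctionName=function_name,
        Runtime="python3.12",
        Role=role_arn,
        # <module>.<function>: lambda_function.py must define lambda_handler
        Handler="lambda_function.lambda_handler",
        Code={"ZipFile": zip_handler(source_code)},
        Timeout=60,  # assumption: raise from the 3-second default
        Environment={"Variables": {
            "SOURCE_BUCKET": source_bucket,
            "DEST_BUCKET": dest_bucket,
        }},
    )
```

For example, read the handler from a local file and call `create_copy_function(boto3.client("lambda"), "my-copy-function", role_arn, source, "source-bucket-demo-ca", "dest-bucket-demo-ca")`, where `my-copy-function` is a hypothetical name.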

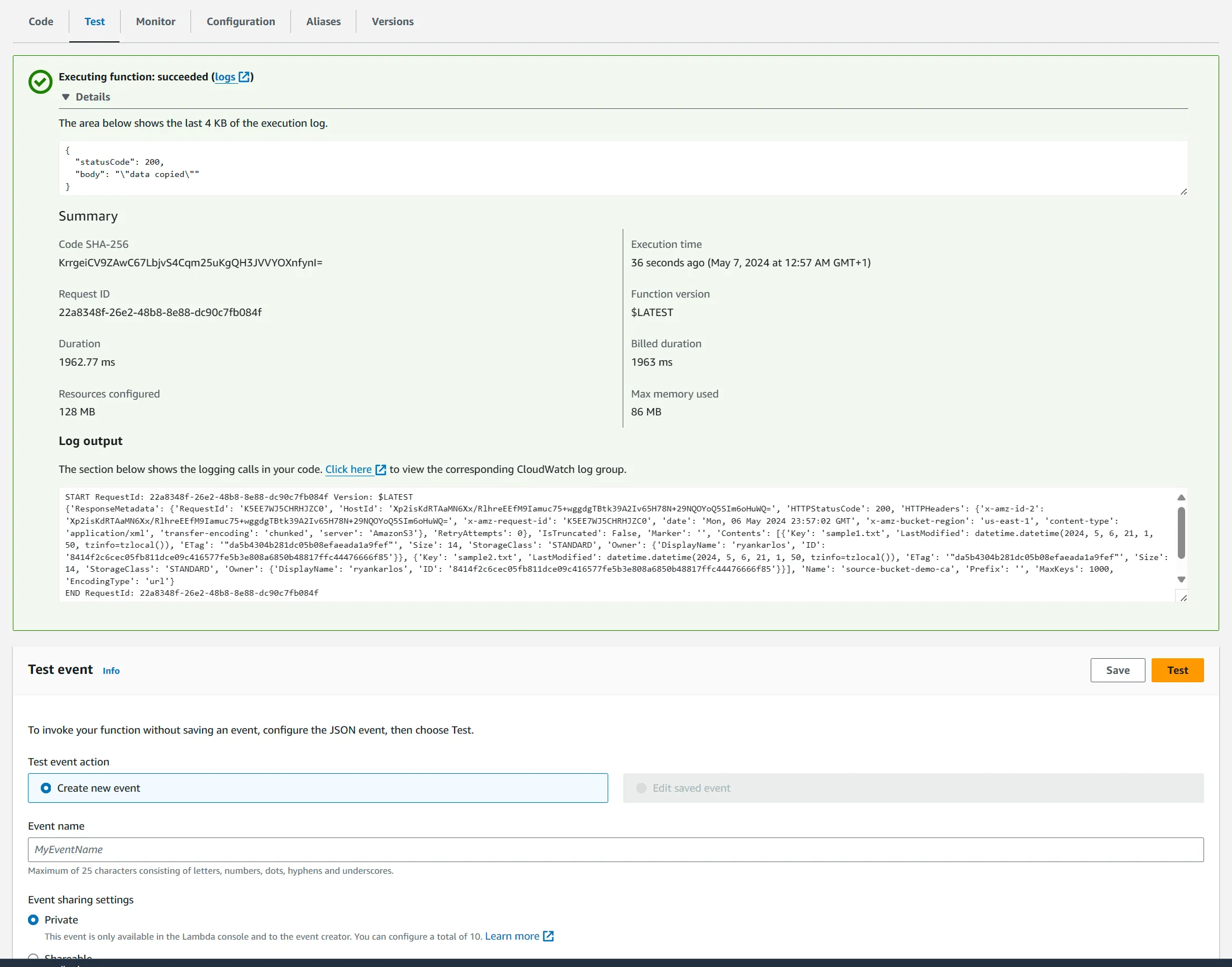

Running a test invocation should return a `200` status code with the `data copied` message in the response body.
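The same check can be run from a script instead of the console. This sketch assumes a Lambda client with prod-account credentials and a hypothetical function name; it invokes the function synchronously with an empty event and raises if Lambda reports a function error, otherwise returning the decoded handler response.

```python
import json


def parse_invoke_result(result: dict) -> dict:
    """Decode a Lambda Invoke response and fail loudly on function errors."""
    payload = json.loads(result["Payload"].read())
    # FunctionError is only present when the handler raised or timed out
    if "FunctionError" in result:
        raise RuntimeError(f"function error: {payload}")
    return payload


def invoke_copy_function(lambda_client, function_name: str) -> dict:
    """Synchronously invoke the function with an empty event, like a console test."""
    result = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="RequestResponse",
        Payload=b"{}",
    )
    return parse_invoke_result(result)
```

With the handler above, `invoke_copy_function(boto3.client("lambda"), "my-copy-function")` should yield a dictionary whose `statusCode` is `200` and whose `body` is the JSON-encoded `"data copied"` string.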