Sending images to Claude 3 using Amazon Bedrock.

A detailed step-by-step tutorial on how to send images to Claude 3 using Python and Amazon Bedrock.

Published May 26, 2024

Last Modified May 27, 2024

In this tutorial, I'll walk you through how to programmatically send images to Claude 3 using Amazon Bedrock and Python.

What we're going to do is write a script that sends an image of a typical serverless REST API architecture on AWS and asks Claude to describe it for us.

The code for this demo is available on my codingmatheus GitHub. Feel free to use the notebook to follow along, or as a basis for your own ideas and projects if you want to improve or extend it.

This is a beginner's guide, so the tutorial is split into steps. Each step provides the code at the top, followed by a walkthrough of what that code does. I go into detail about the setup and every bit of the code, teaching Python and generative AI concepts as well as how to use Amazon Bedrock and Claude 3.

If you're comfortable just reading the code, you can skip the walkthrough bits. If you're here to look up something specific, you can skip straight to the step that covers it.

If you're more advanced, just want the code and go, or are downright impatient, the full notebook is on my codingmatheus GitHub mentioned above.

Let's get started!

1/ Python - This demo uses Python 3.12, but I'm not using any features specific to that version, so you should still be able to run it on earlier versions. I recommend you create a virtual environment to isolate your pip installs for this project. If you're unsure about venvs, check out this short video for a quick explanation or the official documentation.

2/ The code - we'll be following the code in this notebook.

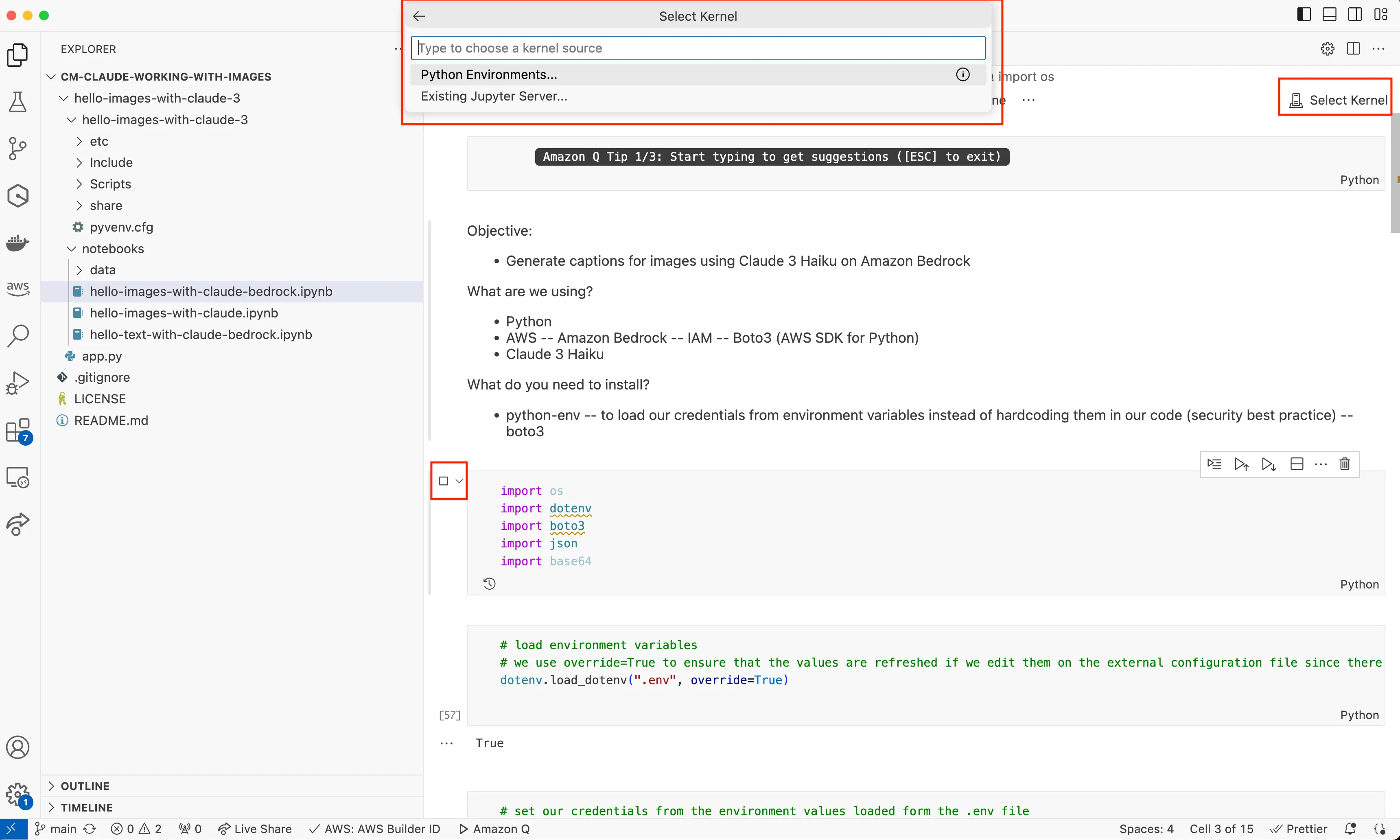

3/ Jupyter Notebooks - You can open the notebook anywhere you'd like. For this tutorial, I'll be using VS Code, but all of the code you need is in the notebook, so you don't have to use the same environment if you don't want to.

If you are using VS Code, though, and haven't configured it to use Jupyter yet, follow these simple steps to set it up. Then, once you open the notebook, select a Python kernel by either hitting play on any of the code blocks (which will change to a stop button like in the screenshot below) or clicking the Select Kernel button. Either way, you'll be prompted to select a Python environment to run the notebook with.

Tip! VS Code may pick up on your default Python environment and select it as the kernel for your notebook. I recommend you still create a Python venv and set the kernel to run from it so any packages we install are not added to your global Python environment.

TIP! If you create your venv but still can't see it as an option when browsing Python environments after clicking on Select Kernel, first select it as a Python environment for VS Code. Open the Command Palette (Ctrl + Shift + P on Windows, Cmd + Shift + P on macOS), choose "Python: Select Interpreter" and navigate to the Python executable in your venv under the Scripts folder (the bin folder on macOS and Linux). You will then see it as an option when selecting the kernel for your notebook.

You will also have to install ipykernel to run the notebook, especially if you have created a new Python virtual environment. To do that, run:

pip install ipykernel

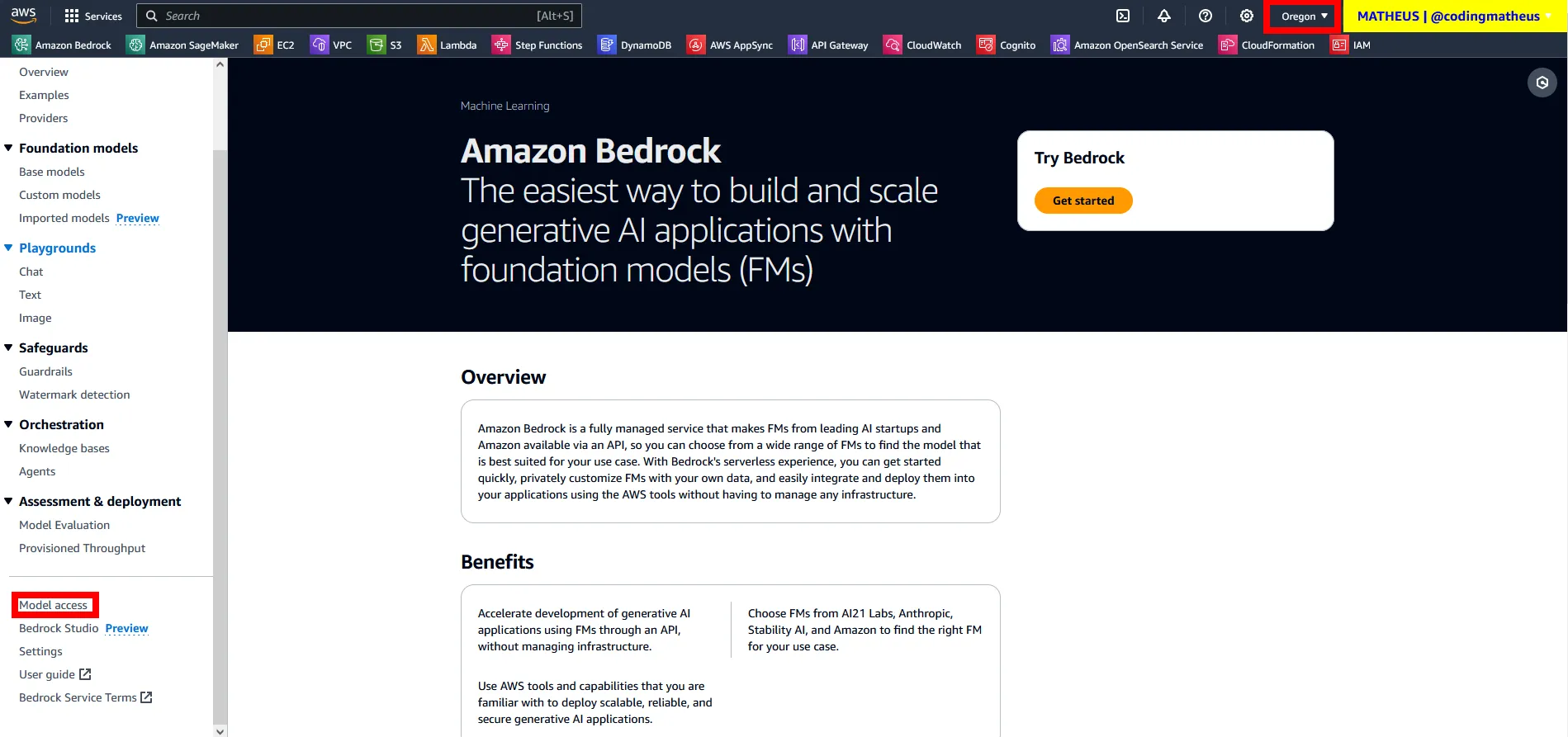

4/ Amazon Bedrock - Bedrock is a serverless AWS service that enables you to use many different foundation models, including AWS's own Amazon Titan family of models as well as many others such as Claude, Llama, Mistral, and more. For this tutorial we're going to use Claude 3 Haiku, but as you'll see later in the tutorial, you can very easily swap models at any point.

To set up Amazon Bedrock you will need an AWS account. If you still don't have one, you can get started creating a free AWS account here. Then follow these steps:

- Log into your AWS Account. Make sure the IAM user or IAM role you are logging in with has access to Amazon Bedrock.

- Switch to a region where Amazon Bedrock is available. I suggest working with us-west-2 (Oregon).

- On the left-side menu (it's closed by default, just click on the menu button to open it), click on "Model Access"

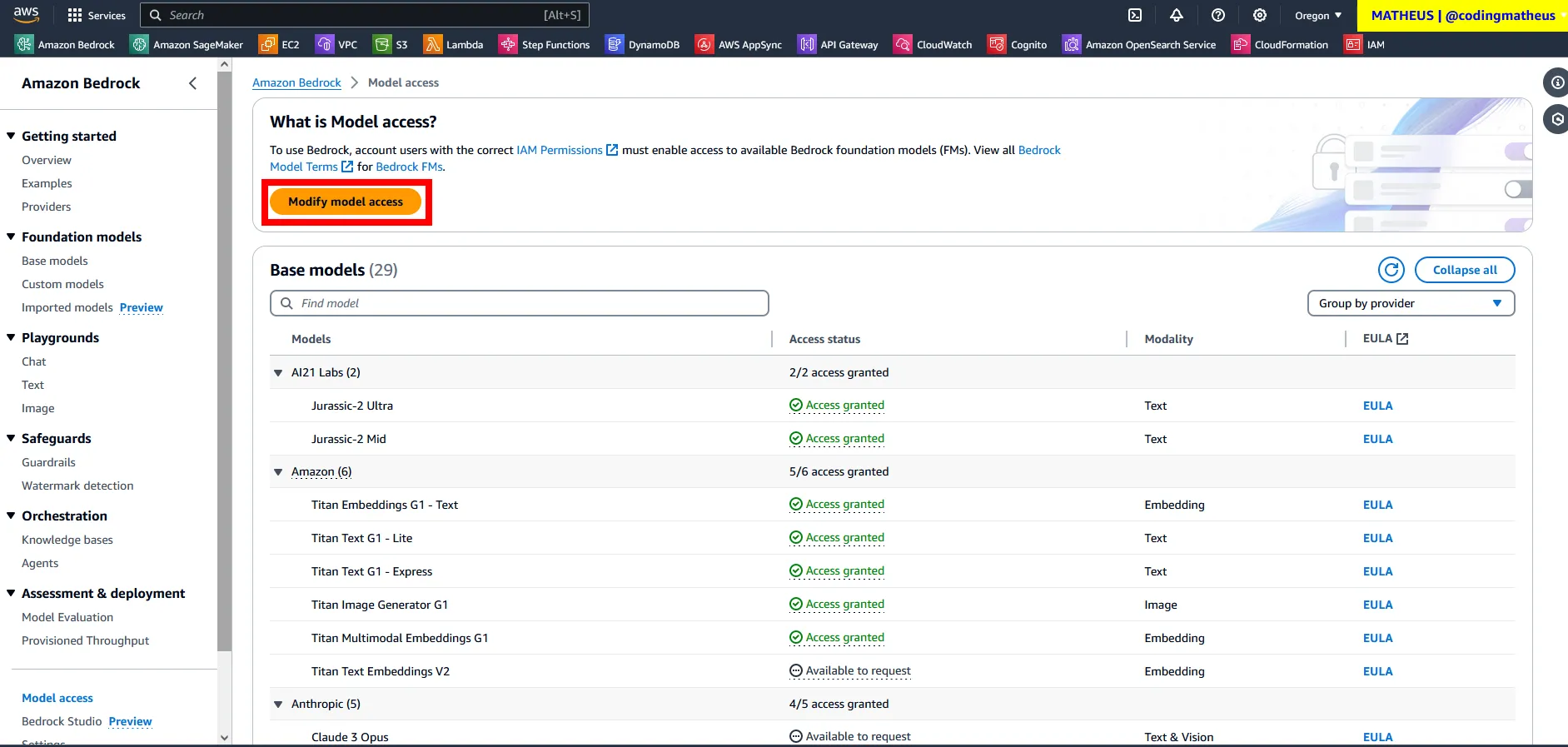

Make sure to be in a region where Amazon Bedrock is available, then click on "Model Access".

- As previously mentioned, Bedrock gives you access to many different foundation models, but before you can use them you have to explicitly request access so they are enabled for all users in that AWS account. Click on "Modify model access".

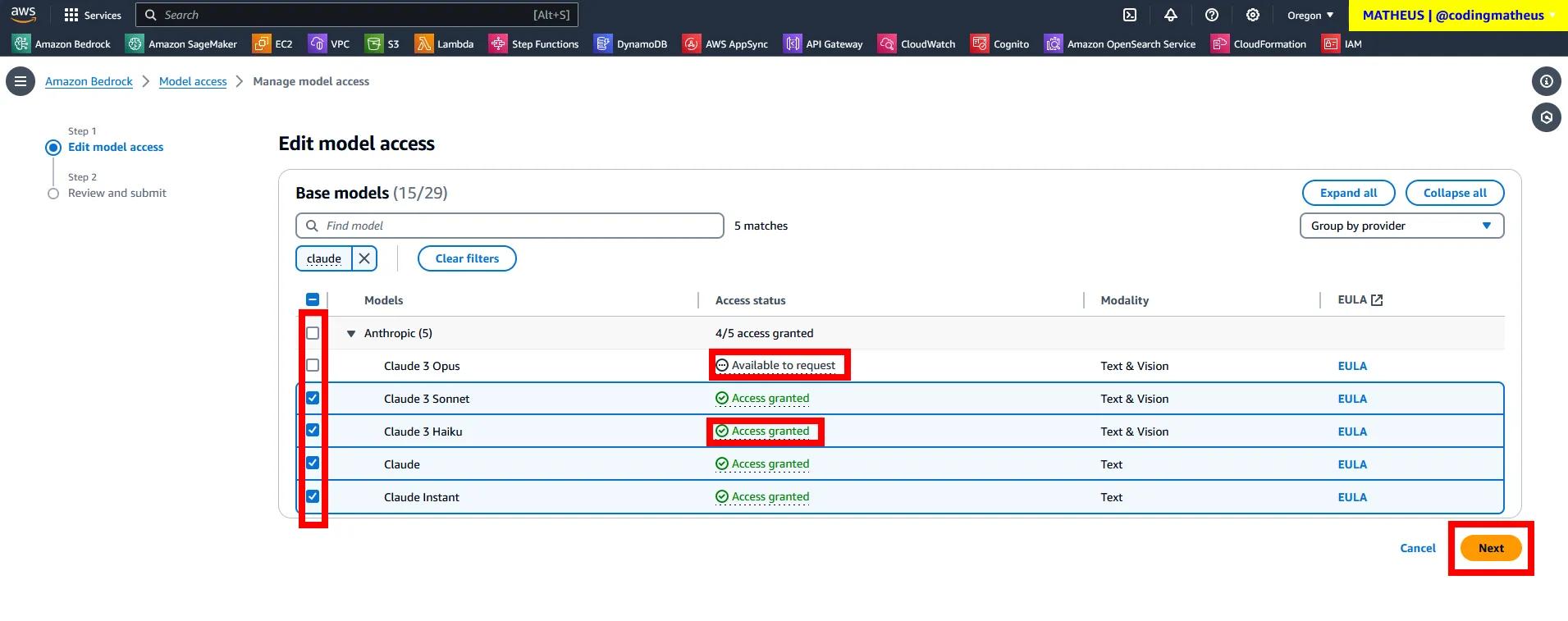

Modifying access to Foundation Models on Amazon Bedrock.

- You will see a long list of models that you can request access to through Amazon Bedrock. We are going to be using Claude 3 Haiku for our demo. In my case, I already have access, as evidenced by the "Access granted" status, but if you don't have it yet, just tick the box next to it (and any other models you may want to make available) and click "Next". You'll be taken to a review screen where you can double-check your selection and the terms and conditions based on your choices. When you're ready, submit it. Now you just need to wait until you are granted access to the models you selected. This process is automatic and you'll get an email notifying you once it's been processed. It should be quite quick, a minute or so.

Requesting access to Foundation Models on Amazon Bedrock.

- Once you can see that Claude 3 Haiku has a status of "Access granted", you're ready to get started!

We start by importing the packages listed below. These are all the libraries we are going to use in our script, so now is a good time to pip install the ones that need it :) Here's what they are and what we're using them for.

These are standard built-in Python libraries. There is no need to install them.

os - standard built-in Python package. We're using it to get the value of environment variables.

json - another standard built-in Python package that you can use to work with JSON data in Python.

These are the packages we use in our application that you must install.

Tip! Make sure you install these in your venv if you're using a Python virtual environment as recommended above. On Windows you can activate it by running .\venv\Scripts\activate, whereas on macOS you can use source ./venv/bin/activate

dotenv - Install it with

pip install python-dotenv

Careful when installing this one! There is a package called dotenv which is an old, abandoned package with bugs. Although you import the library as dotenv, you want to install python-dotenv. This is a very helpful and common library used to load environment variables. It reads them from a file called ".env" in the local directory, and if that doesn't exist, your code simply uses any already-loaded environment variables with the same name.

boto3 - Install it with

pip install boto3

The official AWS SDK for Python. That's what we're going to use to interact with AWS and Amazon Bedrock. You could also use LangChain if there are specific LangChain features you'd like to use; however, you can do everything with boto3 too, including not only using the foundation models in Bedrock but also creating customization jobs, agents and other things.

base64 - Unlike the two packages above, this one is part of Python's standard library, so there's nothing to install: import base64 works out of the box. (pybase64 is a separate third-party package that you would import as pybase64, not base64.) It makes it really easy to encode and decode data in base64 format, which we need to work with images in Claude.

Of course, if we were to productionize this project, there'd be lots of different considerations, but that is no excuse not to implement best practices even when just developing a demo. If anything, I always do that to keep those secure-coding muscles trained.

So, instead of hard-coding credentials in our script, we are going to load them from environment variables. We start by creating a file called ".env" and then simply add pairs of variable names and values to it. In our case, we need to connect our application to AWS, so we're going to add our AWS credentials to the ".env" file and then load them into memory using the dotenv library we pip installed earlier (see above).

To get your AWS credentials, do the following:

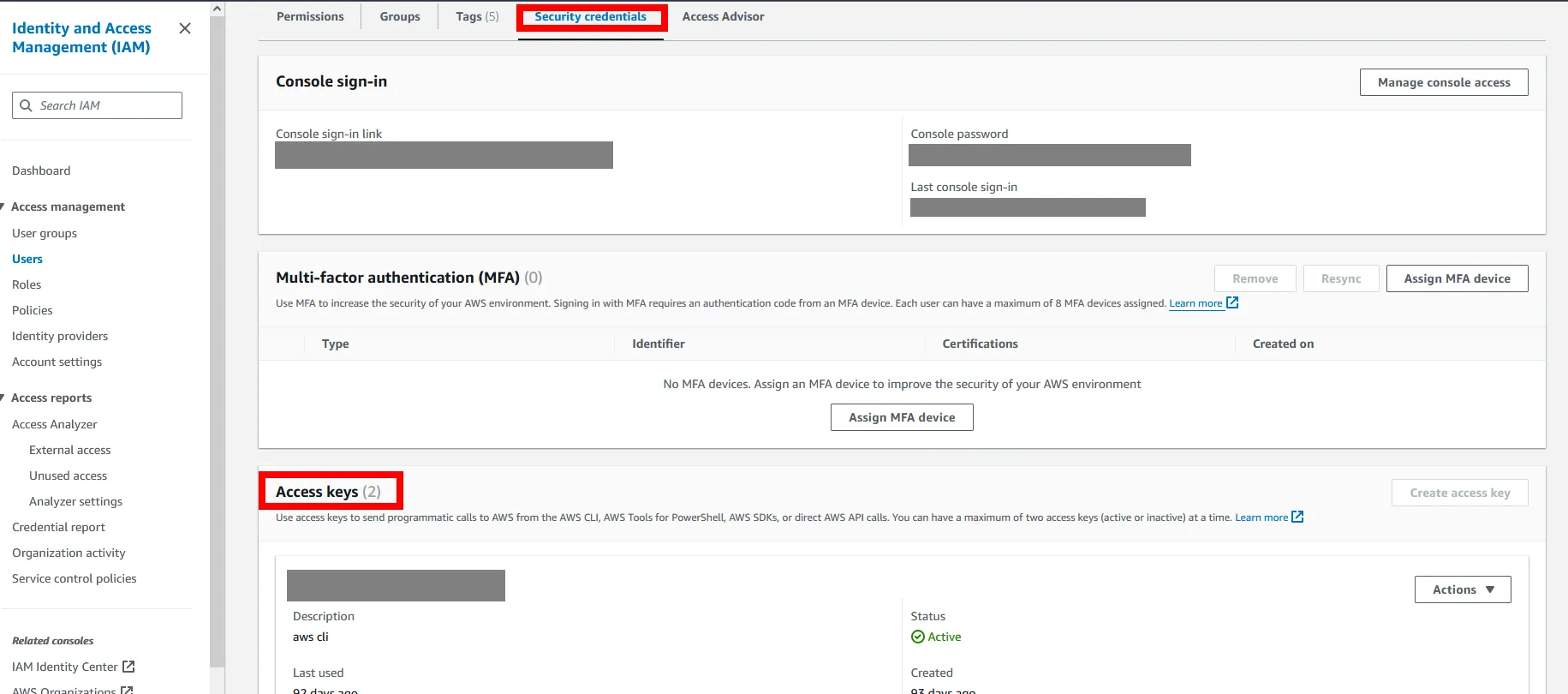

- Navigate to Identity and Access Management (IAM) on your AWS console (that's just how the web-based AWS UI is referred to)

- Click on "Users"

- Find the user that your application is going to use to connect to AWS or create a new one.

- Click on it and navigate to "Security Credentials".

- Scroll down to find the section for "Access keys"

- Generate an Access key. Copy the values for the Access Key Id and the Secret Access Key and paste them somewhere temporarily so you don't lose those.

NOTE: As I mentioned above, there are many considerations if we are to put this application into production and the way it connects to AWS is one of them. Ideally, you don't want to have long-term credentials in your applications and should favor temporary credentials using IAM roles directly or through IAM Identity Center which makes setting these up very easy.

Now, back to our application, create a file called ".env" and type the following:

AWS_ACCESS_KEY_ID="[YOUR ACCESS KEY ID]"
AWS_SECRET_ACCESS_KEY="[YOUR SECRET ACCESS KEY]"
AWS_REGION="us-west-2"

Replace the values above with the access key ID and secret access key for the user you've set up for this application to connect to your AWS account. We will use the AWS_REGION value when we configure our Bedrock client, so make sure it matches the region where you have Amazon Bedrock enabled with access to Claude 3 Haiku (see "What you need" above). Make sure to save the file.

Next, we use the dotenv library that we installed above (see Step 1) to load those values in the ".env" file as environment variables in memory.

dotenv.load_dotenv(".env", override=True)

We call the load_dotenv() function with the name of the file followed by override=True. That's a handy option: by default, load_dotenv() won't overwrite environment variables that are already set, so with override=True, if you edit the ".env" file and re-run the cell, the new values replace the ones loaded previously.

All we need to do now is copy the values of the environment variables we loaded in the previous step into local variables. Create one variable for each value and use os.environ.get() to read the environment variable values.

Note that if you don't actually have a ".env" file, or the variables aren't declared with the same names that you pass into os.environ.get(), it will fail silently and just assign None to your variables, so make sure you've done the steps above. Of course, if this were production code, we'd address that to make sure it throws proper exceptions and reacts to them.

This is where things start to get interesting! To work with Amazon Bedrock we just need to use boto3 and instantiate a client that will abstract away a lot of the work for us enabling us to interact with the service very easily.

To create a Bedrock client you just call the client() function from the boto3 module with the magic string "bedrock-runtime". We also pass in the AWS credentials that we stored in our local variables in the previous step, as well as the region where we have Bedrock available. In this case, I'm using us-west-2 (Oregon), which is what I set AWS_REGION to in the ".env" file.

As mentioned earlier, Amazon Bedrock is a serverless service that you can use to access various foundation models. If you use the Bedrock console (again, just AWS lingo referring to the web-based AWS UI), you can use the tools under the "Playgrounds" menu to test all the models you have made available in your AWS account (see "What you need" above) and easily switch between them by clicking a button.

Programmatically, it's just as easy. You just need to find out the model id for the model that you want to use which you will then pass in as an argument when sending a prompt to Bedrock.

There are 2 ways to find the model id:

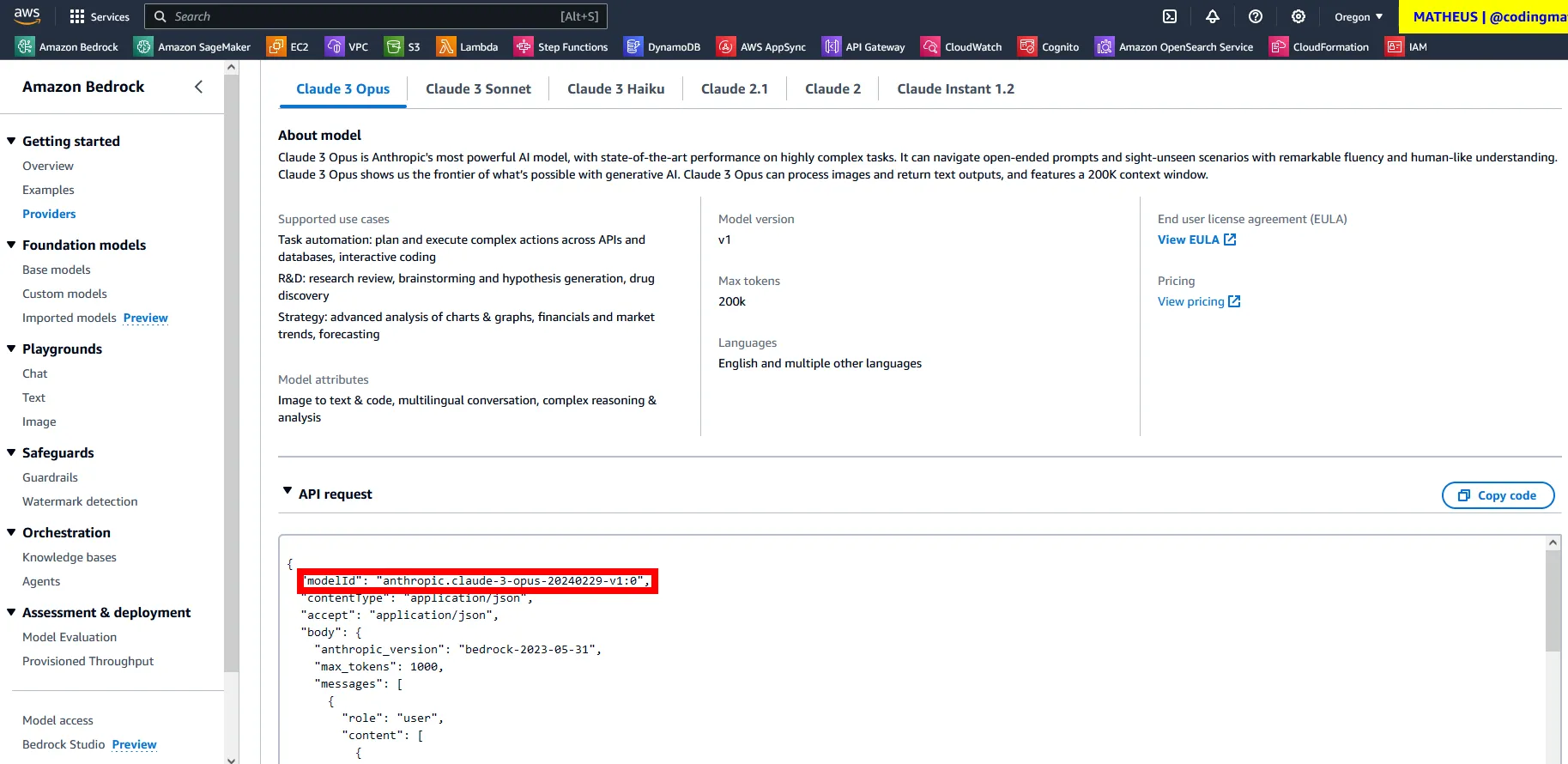

1/ Bedrock console: Click on "Base models" in the Bedrock UI. Be careful if you want to use the search box, because if you just type the name of a model or a family of models like Claude, it won't return any results. If you click on the search box, you will see a window pop up saying "Properties". If you click on "Model name", you will see the available options and it'll fill in the search box with the right syntax. Or simply scroll down and browse to the one you want.

Once you find it and click on it, you will be taken to a screen with more details about the model. Every model has an API request sample that you can use as a reference to learn about the structure and attributes of the request payload required by the model, which always includes the model id. This is where you can take the model id from, so copy it and paste it into your code, saving it in a local variable.

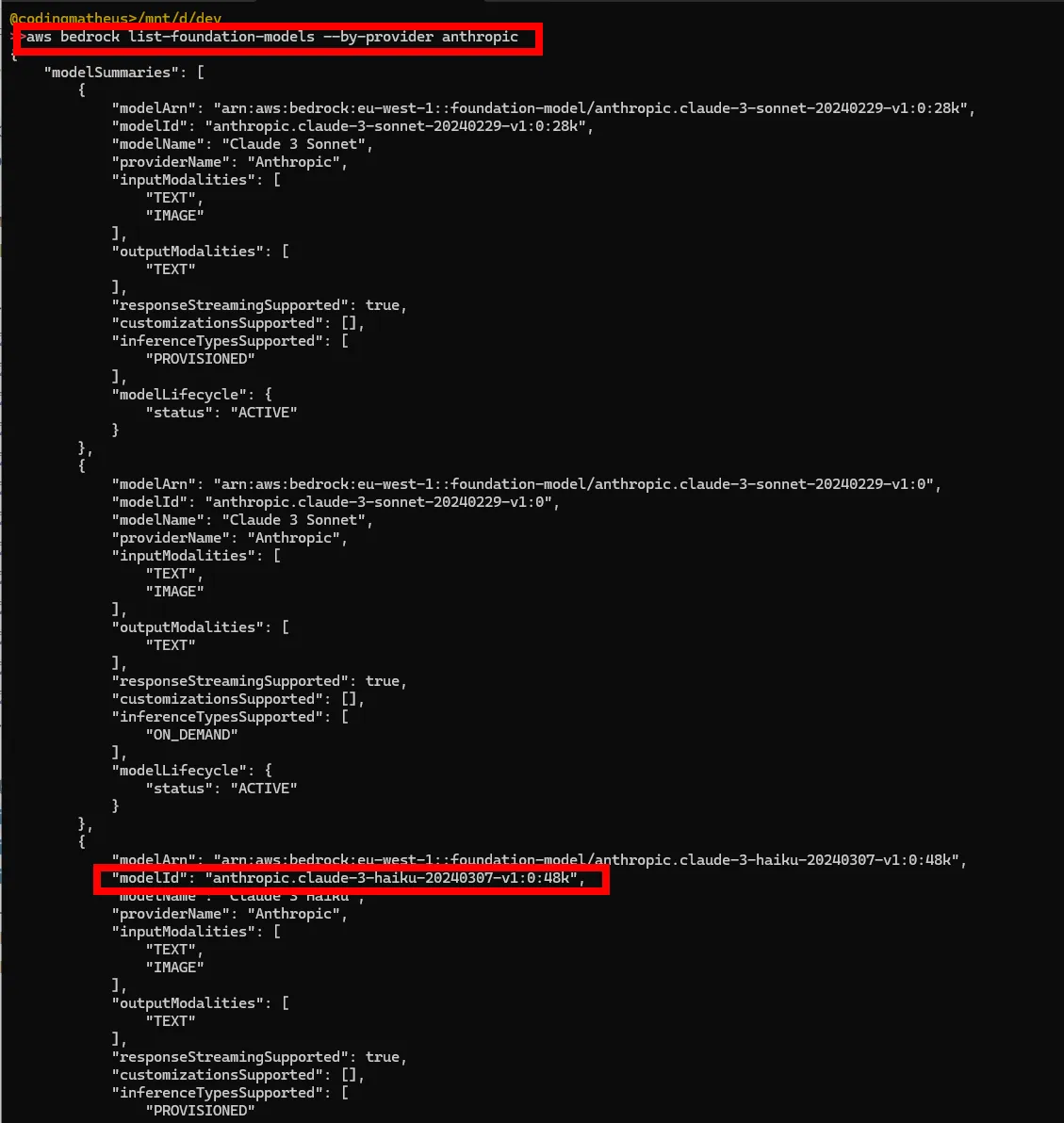

2/ AWS CLI: you can call

aws bedrock list-foundation-models

to get a list of all the foundation models alongside their details, which include the model id. You can use some filters to help trim the results. For example, to find the model id for Claude 3 Haiku faster, you could filter by provider:

aws bedrock list-foundation-models --by-provider anthropic

This is where we start the magic! So how do you actually send an image to Claude 3 via the Bedrock API? The answer is simple: convert your image from binary to a base64 string then add it to the json payload alongside all the other attributes!

And while that may sound complicated, guess what, there's a library for that! You can use the base64 library, which we imported in Step 1 and which makes this process very easy.

First we need an image. I created a data folder in my project and saved in it the image of the AWS serverless REST API diagram that I'm going to ask Claude to describe.

Then we're going to open that file using Python's built-in functions and, of course, best practices, which is why we use a with statement. It guarantees that the file is closed no matter what happens, for example if an exception is raised while reading it, which, left unchecked, could leak file handles.

We use the open() function to open our image file and pass in the mode "rb", which means we're opening it read-only ("r") in binary mode ("b"). We then call the base64 library's b64encode() function and pass it the file's contents by calling read() on the file object. One important step here: b64encode() returns bytes, so you have to call decode() on the result to get a string representation you can put into the JSON payload.

TIP! Claude 3 tries to be really helpful and will automatically resize your images if they exceed its size limits. This delays the response and creates unnecessary processing, so always resize your images ahead of time. As per Claude 3's documentation: "If your image’s long edge is more than 1568 pixels, or your image is more than ~1600 tokens, it will first be scaled down, preserving aspect ratio, until it is within size limits."
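To check a PNG against those limits before sending it, here is a sketch that reads the width and height from the file header using only the standard library. The token estimate (width × height / 750) is an assumption based on the rough formula in Anthropic's vision documentation, and the thresholds come from the quote above; other image formats would need a library such as Pillow.

```python
import struct

MAX_LONG_EDGE = 1568       # pixels, from the documentation quoted above
APPROX_TOKEN_LIMIT = 1600  # "~1600 tokens", same source

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_dimensions(data: bytes) -> tuple[int, int]:
    """Read (width, height) from the IHDR chunk at the start of a PNG file."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Bytes 16-23 hold width and height as big-endian unsigned 32-bit integers
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def will_be_downscaled(width: int, height: int) -> bool:
    """True if Claude 3 would likely resize the image before processing it."""
    estimated_tokens = width * height / 750  # rough estimate, see lead-in
    return max(width, height) > MAX_LONG_EDGE or estimated_tokens > APPROX_TOKEN_LIMIT
```

The first 24 bytes are enough: open the file in "rb" mode, pass f.read(24) to png_dimensions(), then unpack the result into will_be_downscaled().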

Great! We now have our image as a base64 string! Now we just need to figure out how to programmatically add it to our prompt.

The next step is figuring out what data and input fields are expected by the model that you're using for your generative AI application. Every model has different requirements and a different payload message structure where you send your prompt and anything else needed.

As we did in Step 5 when selecting the model, you can go to the Bedrock console and see the details for the models you want to use, where there is always an API request sample that you can copy and paste from as your starting point.

For this demo, we're using Claude 3 Haiku. The Claude 3 payload structure is consistent across all models of the family. Here is a breakdown:

- messages (required) - this is a JSON array where you can send multiple message objects. This is useful for keeping conversations going statelessly: you send a history of messages to Claude for context so it can pick up the conversation where it left off, if you're building a chatbot, for example. It's stateless because the model keeps no memory between requests, so there's nothing stored on its side to retrieve. You just keep adding to the messages array and sending the request with the series of previous messages to maintain "conversation memory".

- message objects (at least one is required) - these are the unnamed objects you send inside the messages array. The two required attributes are:

- role: This will always be either "user" or "assistant". Claude 3 expects an alternating sequence if you have multiple messages, with the first one always being "user". It is what it sounds like: messages with the "user" role represent messages that were prompted by a human, whereas "assistant" messages are responses that came back from the model and are added for context, or as part of building the conversation memory from the interactions that happened within a chatbot session.

- content: Remember that Claude 3 models support both text and images, so you have to specify the type of content you're sending to the model and then provide the value in the right format. This is an array because you can send multiple pieces of content at the same time, as in our case here: I want to send an image and a prompt, so I'm going to send one content object with type "image" and another with type "text". For the prompt, you just need to put it in an attribute called "text". As a shortcut, if you're only sending text, you can set "content" to a plain string instead of an array, and it will be treated as a single text block. If it's an image, then you set the "type" to "image" and then declare a "source", which requires three things:

- type, this is the data format type that you're using to send the data. Set it to "base64", which is the only value supported by Claude 3 currently.

- media_type: This is the image type, given as a MIME type. Claude 3 currently supports JPEG, PNG, GIF and WebP, i.e. "image/jpeg", "image/png", "image/gif" and "image/webp".

- data: the base64-encoded image data which we stored in a variable in Step 6.

- max_tokens (required) - this is the maximum number of tokens the model is allowed to generate in its output. Tokens are not exactly characters or words: they're effectively pieces of words, which can be a few characters, a whole short word, or punctuation such as a comma. As a rough rule of thumb, a token of English text averages a few characters. Each model may have different limits; Haiku currently has a limit of 4096 output tokens. So why wouldn't you just keep it simple and set this attribute to the maximum value? Because of cost. When using gen AI services, one of the most common units of cost is how many tokens you use, and you're billed for the tokens actually generated, so a sensible cap keeps an unexpectedly long response from running up your bill. This is also true with Amazon Bedrock: for Claude 3 models you pay per 1,000 input and output tokens. You can look up the exact prices on the Amazon Bedrock pricing page.

- anthropic_version (required) - this is a fixed magic string that you just have to add to every request. Currently the value you must pass is always bedrock-2023-05-31.
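Since media_type has to match the actual file, one option is to derive it from the file extension with Python's mimetypes module. A sketch with a hypothetical image_block() helper that builds the image content object described above and rejects unsupported types:

```python
import mimetypes

# The four media types listed above
SUPPORTED_MEDIA_TYPES = {"image/jpeg", "image/png", "image/gif", "image/webp"}


def image_block(path: str, base64_data: str) -> dict:
    """Build a Claude 3 image content object for an already base64-encoded file."""
    media_type, _encoding = mimetypes.guess_type(path)  # e.g. "diagram.png" -> "image/png"
    if media_type not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"unsupported image type for {path}: {media_type}")
    return {
        "type": "image",
        "source": {"type": "base64", "media_type": media_type, "data": base64_data},
    }
```

Guessing from the extension trusts the file name, so a mislabeled file would still slip through. For a demo, though, it saves you from hard-coding the type.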

In my case here, I have added the image to the payload while also sending the prompt "Explain this AWS architecture diagram.". I could do a lot better with some prompt engineering techniques to improve the output, but this will do for this demo to keep it simple.
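Put together, the request body for one image plus one prompt might look like the sketch below. build_payload() is a hypothetical helper, and the default max_tokens of 1000 is an arbitrary assumption, so pick a cap that suits your use case.

```python
def build_payload(
    image_base64: str,
    prompt: str,
    media_type: str = "image/png",
    max_tokens: int = 1000,
) -> dict:
    """Build a Claude 3 Messages payload containing one image and one text prompt."""
    return {
        "anthropic_version": "bedrock-2023-05-31",  # fixed value required by Bedrock
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",  # a single user turn
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": image_base64,
                        },
                    },
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }
```

Usage: payload = build_payload(image_data, "Explain this AWS architecture diagram.")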

Now all that's left to do is send our payload to Bedrock and parse the response we get back to capture the output. We use the bedrock runtime client that we set up in the previous steps and call the invoke_model() method.

We give it the modelId, the contentType of "application/json", and our payload converted from a Python object into a JSON string with the json.dumps() function from the json library that we imported at the top. That string is passed in as the body argument.

What we get back includes a binary stream of data, so we just use standard Python to read the full response. The response from the Bedrock runtime client is a dictionary whose "body" key holds that stream. We call its read() method to read the whole stream to the end and store the result in a variable. We then use the json library again to parse that JSON into a Python dictionary with json.loads().

Now we just need to learn how to navigate Claude 3's response payload structure. The response will contain a "content" array with the content generated by the model. You would normally only need the first item so we take the item at index 0. Much like the "content" object that we put together for the input, the output one also has a type attribute even though Claude 3 only supports text as output. So you can ignore that and just get your output which will always be stored under "text".
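The invoke-and-parse step as a sketch. ask_claude() is a hypothetical helper that accepts any bedrock-runtime client, so you can pass in the one created earlier:

```python
import json
from typing import Any


def ask_claude(client: Any, model_id: str, payload: dict) -> str:
    """Send a Claude 3 payload via a bedrock-runtime client and return the reply text."""
    response = client.invoke_model(
        modelId=model_id,
        contentType="application/json",
        body=json.dumps(payload),  # Python dict -> JSON string
    )
    # response["body"] is a stream; read() returns the raw JSON bytes
    response_body = json.loads(response["body"].read())
    # Claude 3 returns a list of content objects; the first one holds the text
    return response_body["content"][0]["text"]
```

With the client and payload from the earlier steps, print(ask_claude(bedrock_runtime, "anthropic.claude-3-haiku-20240307-v1:0", payload)) prints Claude's description. Taking the client as a parameter also lets you swap in a stub when testing without AWS.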

And that's it! We just print it to the screen or at this point you can do whatever you feel like with the LLM's output.

I hope this guide has helped you understand all the steps required to use Amazon Bedrock and Claude 3 in your Python applications. It can look like a lot on paper when laid out in a tutorial like this, but once you put it together you realize that it's much simpler than it seems at first and quite intuitive for the most part.

If you want to continue learning, I teach AI/ML and generative AI on my channels, so check out my codingmatheus YouTube channel or just find me as @codingmatheus everywhere for more lessons and tutorials.