GenAI under the hood [Part 6] - Temperature is NOT creativity

We often explain the temperature parameter for LLMs as a proxy for creativity. Is it?

Shreyas Subramanian

Amazon Employee

Published May 16, 2024

Creativity often refers to the ability to think innovatively, make novel connections, and devise unique and effective solutions. However, evaluating the creativity of large language models (LLMs) presents several challenges.

One significant hurdle is the subjective nature of creativity. While factual questions like "What is the capital of France?" have clear, objective answers, prompts that require creative thinking, such as "Describe a futuristic city powered entirely by renewable energy," or "Imagine a world where humans could communicate telepathically," do not have definitive right or wrong answers. This open-endedness makes it difficult to judge the correctness or quality of an LLM's response.

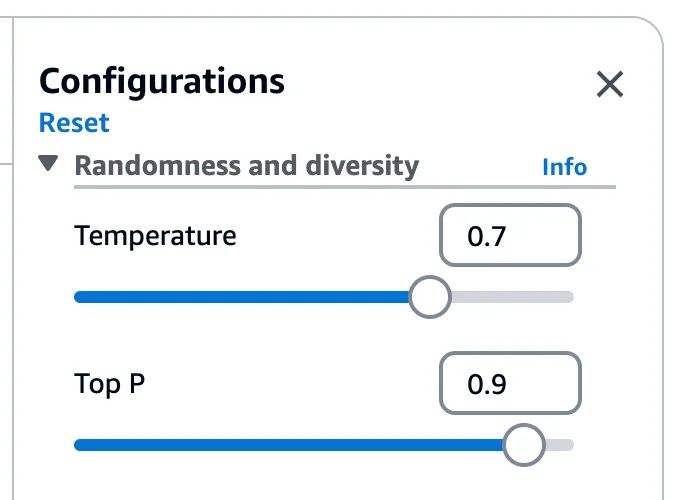

Temperature, a parameter you will encounter often in LLM text playgrounds and API calls, is frequently mentioned in the same sentence as creativity; more specifically, the diversity or creativity of the output is often attributed to the temperature parameter.

Somehow we have a picture in our heads that "heating up" the LLM makes it more creative (see the splash image, generated using the Titan Image Generation model). Here's a quick test with Claude Sonnet on Bedrock:

| Question | Answer (temp = 0) | Answer (temp = 1) |
|---|---|---|
| What is the capital of France? | The capital of France is Paris. | The capital of France is Paris. |
| Imagine a world where humans could communicate telepathically (write a paragraph 200 words or less) | In a world where telepathy is the norm, communication would take on an entirely new dimension. Imagine being able to share your thoughts, feelings, and experiences with others directly, without the need for spoken or written words. The barriers of language and cultural differences would dissolve, allowing for a deeper understanding and connection between individuals. Telepathic communication would revolutionize the way we interact socially, professionally, and even romantically. Meetings and discussions could take place without the need for physical gatherings, as ideas and concepts could be seamlessly shared and understood. Educational systems could evolve, allowing students to absorb knowledge directly from their teachers' minds, making learning more efficient and immersive. However, with such profound mental connectivity, issues of privacy and personal boundaries would need to be addressed. Societal norms and ethical guidelines would have to be established to ensure respect for individual thoughts and the ability to maintain mental privacy when desired. Despite these challenges, a telepathic world could open up new realms of human potential, fostering greater empathy, understanding, and unity among all people. | In a world where telepathy is a reality, communication takes on a whole new dimension. Imagine being able to share your thoughts, emotions, and experiences with others without the barrier of spoken or written language. A simple mental projection would allow you to convey complex ideas, intricate details, and vivid imagery directly into someone's mind. Privacy would become a luxury, as the boundaries between personal thoughts and shared consciousness blur. Intimate relationships would deepen, as partners could truly understand each other on a level that transcends words. Business negotiations and political discourse would be transformed, with no room for deception or hidden agendas. However, the ability to read minds could also breed mistrust and fear, leading to a society where mental barriers and defenses become the norm. Psychic shields and mental fortresses might arise, creating a new form of isolation in a world meant to be more connected than ever before. Ultimately, telepathy would reshape the very fabric of human interaction, forcing us to redefine the boundaries of personal space, privacy, and the depths of emotional intimacy. |

When tested with temperature 0 and 1 (the extremes), Claude Sonnet is correct in both cases and provides useful answers. Whether you think the answers to the question on telepathy are creative is up to you to decide.
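A comparison like the one in the table can be scripted. The sketch below is one possible way to do it, not the setup used for this post: it assumes the boto3 Bedrock Runtime `converse` API, AWS credentials with access to the model, and the model ID `anthropic.claude-3-sonnet-20240229-v1:0`. The post does not say which Sonnet version, region, or client was used, so all of these are assumptions, and `build_converse_request` and `ask` are hypothetical helper names.

```python
# Assumed model ID; the post only says "Claude Sonnet on Bedrock".
ASSUMED_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_converse_request(prompt, temperature, model_id=ASSUMED_MODEL_ID, max_tokens=400):
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"temperature": temperature, "maxTokens": max_tokens},
    }


def ask(prompt, temperature, region_name="us-east-1"):
    """Send one prompt to Bedrock and return the text of the reply."""
    # Imported here so the request builder can be used without boto3 installed.
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.converse(**build_converse_request(prompt, temperature))
    return response["output"]["message"]["content"][0]["text"]


# Example usage (requires AWS credentials and model access):
# for temperature in (0.0, 1.0):
#     print(temperature, ask("What is the capital of France?", temperature))
```

Running the same prompt several times at each temperature, rather than once, gives a better feel for how much the outputs actually vary.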

Temperature is a crucial hyperparameter in stochastic language models that regulates the randomness in the sampling process (introduced in Ackley, Hinton, and Sejnowski, 1985, and some other contemporary papers). The softmax function (Equation 1) applies a non-linear transformation to the output logits, converting them into a probability distribution (i.e., the probabilities sum to 1). Temperature enters as a divisor applied to the logits before the softmax. That's a nice way of saying temperature "t" is a fudge factor:

The temperature parameter

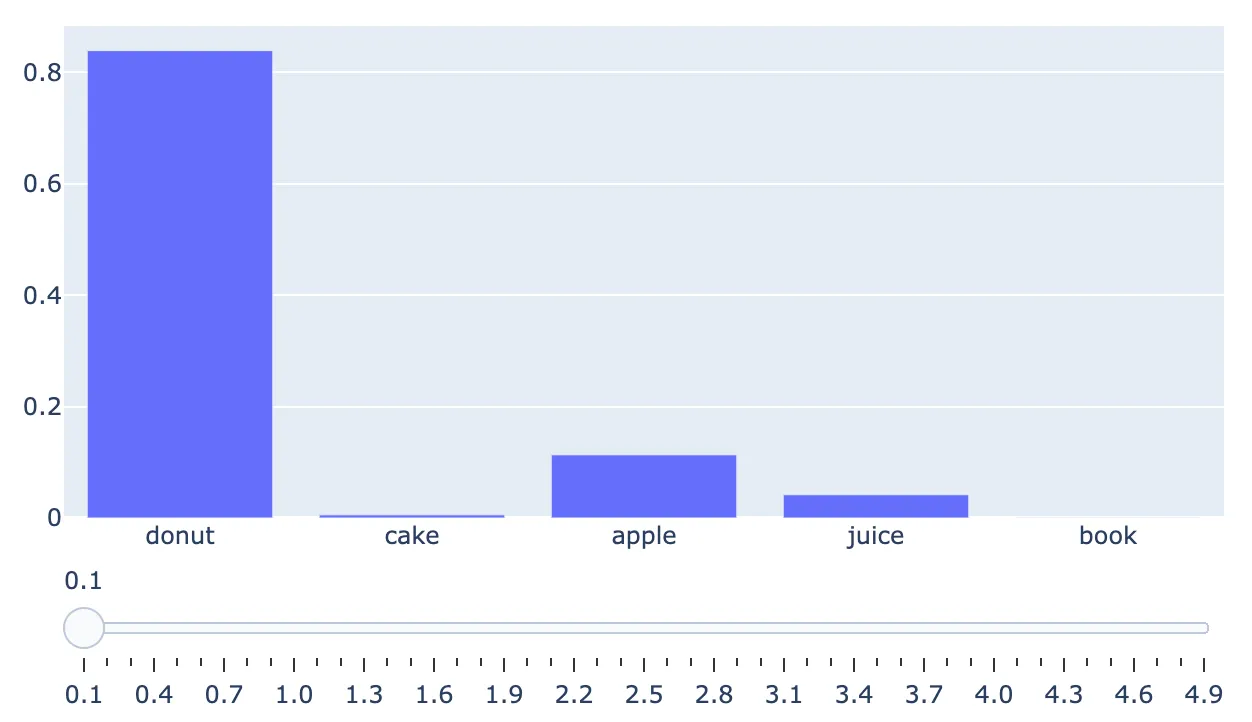

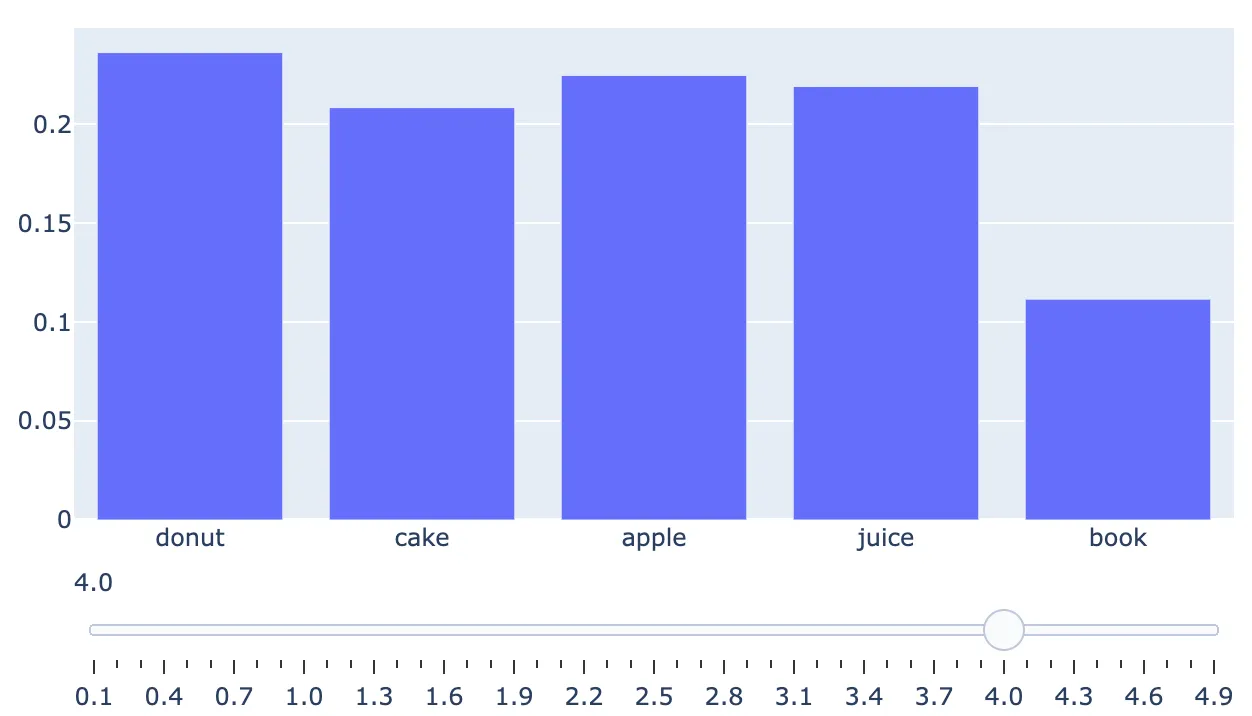
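For reference, the temperature-scaled softmax is usually written as follows. This is the standard textbook form, assuming $z_i$ denotes the logit of token $i$ and $t$ the temperature; the notation in the images above may differ.

```latex
p_i = \frac{\exp(z_i / t)}{\sum_{j} \exp(z_j / t)}
```

Setting $t = 1$ recovers the plain softmax. The formula is undefined at $t = 0$, so implementations typically treat that case as picking the token with the largest logit.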

t controls the shape of this distribution, redistributing the output probability mass. When t > 1, high probabilities are decreased and low probabilities are increased; when t < 1, the opposite happens. Higher temperatures increase entropy and perplexity, leading to more randomness and uncertainty in the generative process. Typically, values for t are in the range of [0, 2], and t = 0 corresponds to greedy sampling, i.e., always selecting the token with the highest probability. Let's take a look at a mock distribution with low (0.1) temperature:

And high (4.0) temperature:

As you can see, the probability distribution over the vocabulary changes with temperature. This reshaping happens at every decoding step, each time the sampling algorithm needs to pick the next token while generating text for your task.

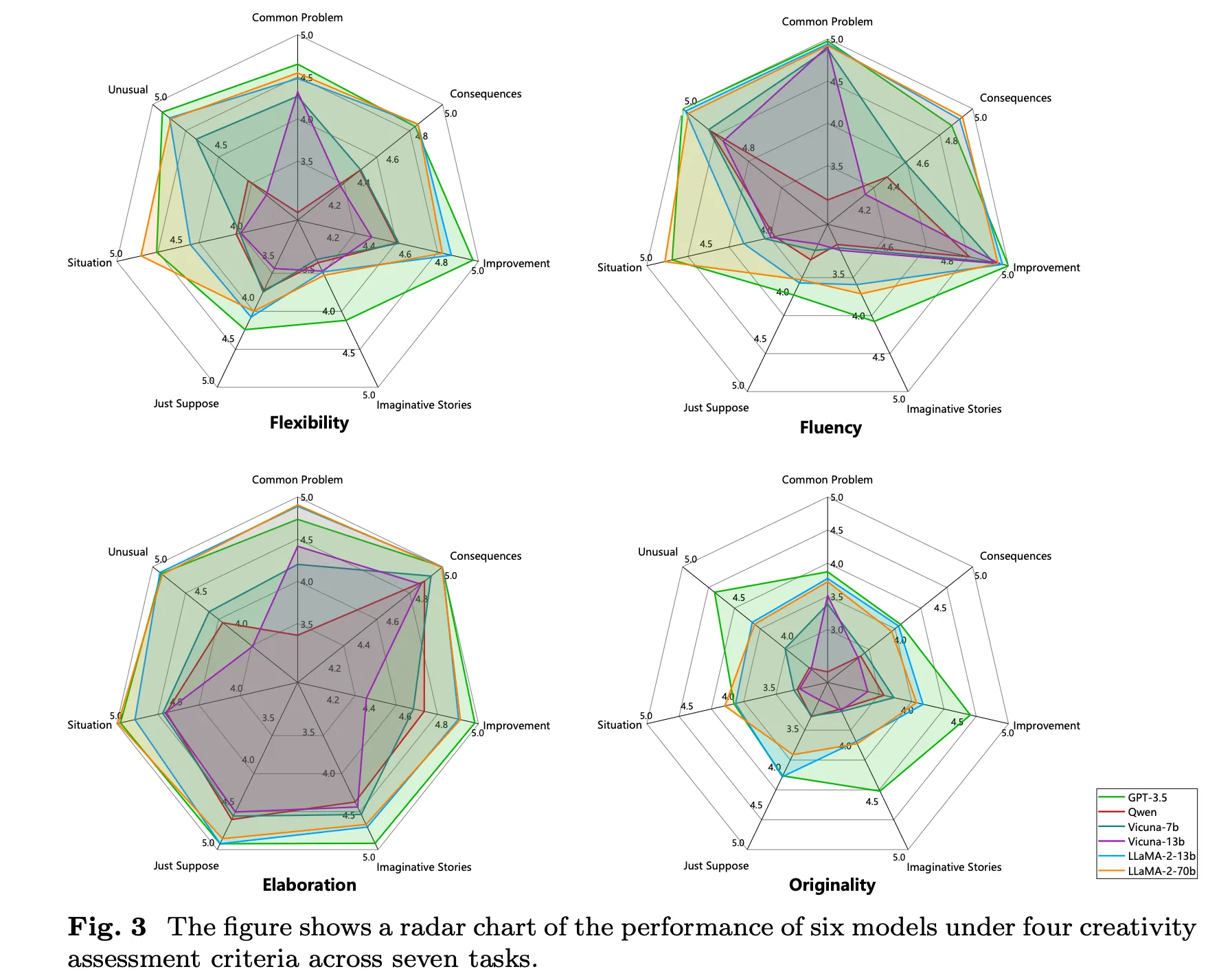
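The flattening and sharpening described above can be reproduced in a few lines of Python. This is a minimal sketch, not the code behind the figures in this post: the logits are made-up values for a hypothetical five-token vocabulary, and `softmax_with_temperature` and `entropy` are illustrative helper names.

```python
import math


def softmax_with_temperature(logits, t):
    """Convert logits to probabilities after dividing them by temperature t (t > 0)."""
    if t <= 0:
        raise ValueError("t must be positive; treat t = 0 as greedy argmax instead")
    scaled = [z / t for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy in nats; zero-probability terms contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Hypothetical logits for a five-token vocabulary (made-up values).
logits = [2.0, 1.0, 0.5, 0.0, -1.0]

for t in (0.1, 1.0, 4.0):
    probs = softmax_with_temperature(logits, t)
    rounded = [round(p, 3) for p in probs]
    print(f"t={t}: probs={rounded}, entropy={entropy(probs):.3f}")
```

At t = 0.1 nearly all of the probability mass sits on the top token, while at t = 4.0 the distribution is much flatter and its entropy is higher. The ranking of the tokens never changes, only how much mass each one receives.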

- In the paper titled "Assessing and Understanding Creativity in Large Language Models", Yunpu Zhao et al. explore the assessment and analysis of creativity in LLMs. They adapt the Torrance Tests of Creative Thinking (TTCT), a widely recognized tool for assessing human creativity, to construct a dataset of 700 questions across seven tasks. These tasks measure different aspects of creative thinking, such as fluency, flexibility, originality, and elaboration. The authors then employ various LLMs, including GPT-3.5, LLaMA-2, Vicuna, and Qwen, to answer these questions, and use GPT-4 as an evaluator to assess the creativity of the responses based on the aforementioned criteria. They conduct a series of experiments to investigate the factors influencing the creativity of LLMs: the impact of different prompt types (basic, instructive, post-instructive, and Chain of Thought prompts), the effect of assigning different roles (e.g., scientist, artist, student) to the LLMs, and the effect of collaborative interactions among multiple LLMs on their creative outputs. The authors conclude that the creativity of LLMs is significantly influenced by the model architecture, prompts, and assigned roles. Models generally excel in elaboration but struggle with originality. Instructive and Chain of Thought prompts enhance creativity, while assigning the role of a scientist leads to the highest creativity levels. Interestingly, they found that changes in temperature did not significantly affect the creativity scores across the different criteria and tasks. This suggests that the inherent architecture and training of the LLM, rather than simply increasing randomness through temperature adjustments, play a more crucial role in determining its creative capabilities. While temperature can modulate the diversity of outputs, other factors like model design, prompting strategies, and even the nature of the task itself have a more profound influence on the manifestation of creativity in LLMs.

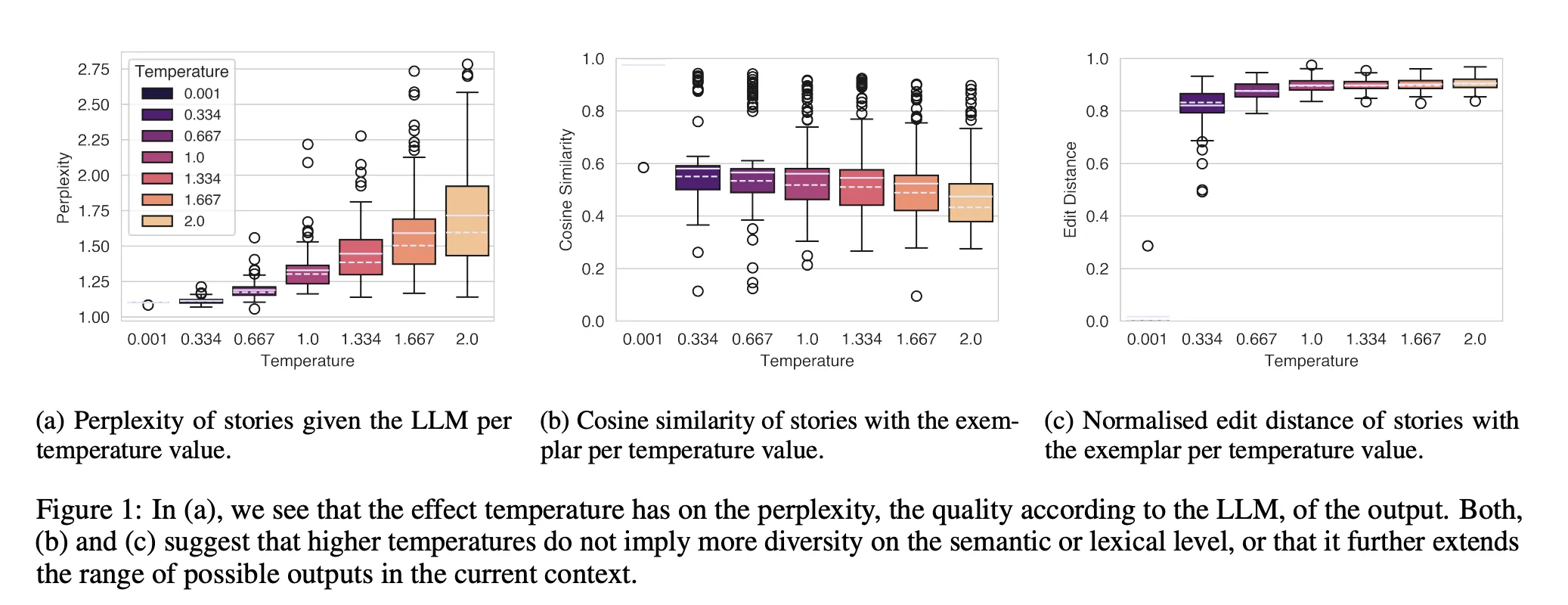

- In the paper titled "Is Temperature the Creativity Parameter of Large Language Models?", Peeperkorn et al. investigate the claim that temperature is the "creativity parameter" for large language models (LLMs) when generating creative content, specifically narratives or stories. They set up an experiment where they use the LLAMA 2-CHAT 70B language model to generate stories with different temperature values ranging from 0.001 to 2.0. They generate 100 stories for each temperature value using a fixed prompt, "Write a story", similar to our simple tests above. They perform an analysis to measure the diversity and similarity of the generated stories compared to a baseline "exemplar" story generated with greedy sampling (temperature close to 0). They also conduct a human evaluation study where participants rate the stories on four necessary conditions for creativity in narrative generation: novelty, typicality, cohesion, and coherence. The authors conclude that while higher temperatures lead to slightly more novel outputs, the influence of temperature on creativity is far more nuanced and weaker than the "creativity parameter" claim suggests. They find a weak positive correlation between temperature and novelty, and a moderate negative correlation between temperature and coherence, implying a trade-off between novelty and coherence. However, they do not observe any significant relationship between temperature and cohesion or typicality. Overall, the results suggest that temperature alone is insufficient for enabling creativity in LLMs, and the authors discuss the need for more advanced techniques, such as creativity-focused decoding strategies and better prompt design, to leverage the creative potential of LLMs more effectively.

First takeaway: temperature has very little to do with creativity. Creativity encompasses various aspects like originality, flexibility, and novelty. To accurately assess these qualities in LLMs, diverse tasks and evaluation criteria must be designed. For instance, one task could measure an LLM's ability to generate unique product ideas, with prompts like "Create a new eco-friendly transportation concept" or "Devise a novel way to recycle plastic waste." Another task could test its capacity to solve problems in unconventional ways, with prompts such as "Suggest an innovative solution to reduce traffic congestion in cities" or "Propose a creative way to promote sustainability in schools." Typically these results are scored on creativity metrics by an LLM (which can be flawed) or by humans (who can also be flawed in their subjective ranking of creative output).

Another challenge arises from the fundamental differences between LLMs and human cognition. LLMs may sometimes provide irrelevant or logically flawed responses, necessitating additional evaluation of these aspects. For example, an LLM might generate a response like "To reduce traffic congestion, we could ask everyone to stay at home and not use their cars," which could technically be judged a "creative" solution but is definitely not a practical or viable one.

Lastly, evaluating creativity requires striking a balance between accuracy and efficiency. Traditional human-based evaluation methods, while accurate, may not be practical or scalable for assessing large language models due to the time and resources required.

To address these challenges, researchers and developers must explore innovative evaluation techniques and metrics that can robustly and reliably measure the creative capabilities of LLMs. This could involve a combination of automated and human evaluation methods, as well as the development of specialized tasks and benchmarks tailored to assess different facets of creativity.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.