How I automated social media image creation with Gen AI (kind of)

Automate social media image creation with Gen AI

- React with TypeScript: For type safety and better code quality.

- React Query and Axios: For handling API requests.

- Ant Design (Antd): A component library. It's always fun to test something new, and Antd seemed pretty nice.

- AWS SAM: I decided to use SAM to define the infrastructure as code.

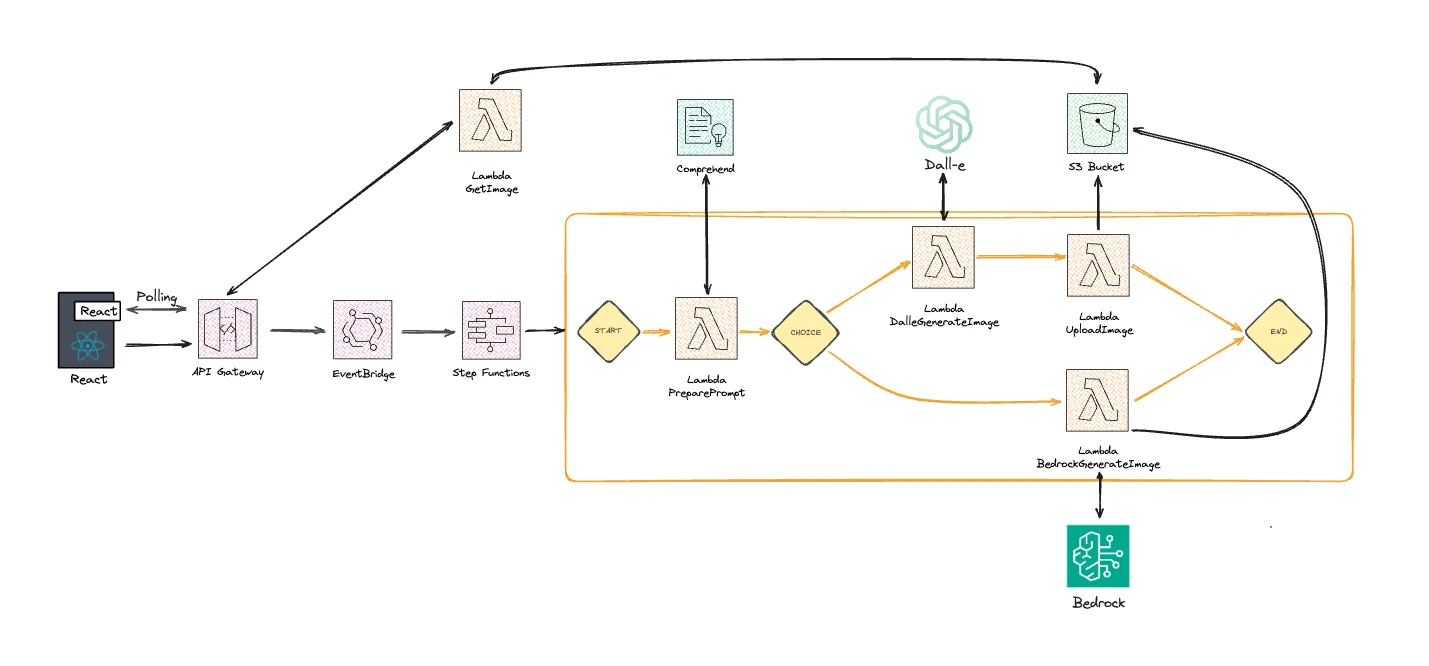

- API Gateway: Serves as the entry point for the backend, managing and routing incoming requests. It is integrated with EventBridge to decouple the application components.

- EventBridge: Picks up the events from API Gateway and forwards them to the right part of the application (the step function).

- Step Functions: Receives events from EventBridge and orchestrates the workflow that processes the request from the frontend, making it clear what is happening at each step.

- Lambda (written in Go): Acts as the individual steps within the Step Functions workflow, handling specific tasks such as preparing prompts and generating images.

- Comprehend: Analyzes the sentiment and key phrases of the post when preparing the prompt for image generation. This provides contextual understanding, which should ultimately enhance the quality of the generated image.

- Bedrock and DALL-E: Used to create images based on the analyzed social media posts. Both are called from within the Lambda functions.

- Frontend: Node and TypeScript

- Component library: Ant Design (Antd)

```shell
npm i antd
```

- Packages used: React Query, Axios

```shell
npm i @tanstack/react-query axios
```

- AWS: SAM CLI installation instructions

- Language: Go docs

- DALL-E: A developer account at OpenAI, to be able to generate and retrieve an API key.

I started by running `sam init` in a freshly created folder. For the options, I chose to start from a template, specifically the Hello World example template, and for the language I chose Go (`provided.al2023`). This generated a project with a `template.yaml` file, and inside of it the first step was to add the required infrastructure. The skeleton of the template looks like this:

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Transform: AWS::Serverless-2016-10-31

Resources:
  # The resources described below go under this key

Outputs:
```

Under `Resources`, I first added the EventBridge event bus, a rule that forwards `PreparePrompt` events coming from API Gateway to the state machine, and a role that allows EventBridge to start executions of that state machine:

```yaml
PostToImageEventBus:
  Type: AWS::Events::EventBus
  Properties:
    Name: PostToImageEventBus

StateMachineEventBridgeRule:
  Type: AWS::Events::Rule
  Properties:
    EventBusName: !Ref PostToImageEventBus
    EventPattern:
      source:
        - "api-gateway"
      detail-type:
        - "PreparePrompt"
    Targets:
      - Arn: !GetAtt PostToImageStateMachine.Arn
        Id: "PostToImageStateMachineTarget"
        RoleArn: !GetAtt EventBridgeExecutionRole.Arn
    State: "ENABLED"

EventBridgeExecutionRole:
  Type: "AWS::IAM::Role"
  Properties:
    AssumeRolePolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal:
            Service: "events.amazonaws.com"
          Action:
            - "sts:AssumeRole"
    Policies:
      - PolicyName: "EventBridgeStepFunctionsExecutionPolicy"
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Action:
                - "states:StartExecution"
              Resource: !GetAtt PostToImageStateMachine.Arn
```

I also created a file called `api.yaml` where I stored the definition of my API. The API needed two routes:

- One for kicking off the process of creating an image.

- One that we could use to poll our backend for the generated image once it had been created.

In the `template.yaml` file, I added the following to define the API and the roles it needs:

```yaml
PostToImageApi:
  Type: AWS::Serverless::HttpApi
  Properties:
    DefinitionBody:
      "Fn::Transform":
        Name: "AWS::Include"
        Parameters:
          Location: "./api.yaml"

# Permission to put events to EventBridge
HttpApiEvenbridgeRole:
  Type: "AWS::IAM::Role"
  Properties:
    AssumeRolePolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: "Allow"
          Principal:
            Service: "apigateway.amazonaws.com"
          Action:
            - "sts:AssumeRole"
    Policies:
      - PolicyName: ApiDirectWriteEventBridge
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Action:
                - events:PutEvents
              Effect: Allow
              Resource:
                - !GetAtt PostToImageEventBus.Arn

# Permission to invoke the GetImage Lambda
HttpApiLambdaRole:
  Type: "AWS::IAM::Role"
  Properties:
    AssumeRolePolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: "Allow"
          Principal:
            Service: "apigateway.amazonaws.com"
          Action:
            - "sts:AssumeRole"
    Policies:
      - PolicyName: "LambdaExecutionPolicy"
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Action:
                - "lambda:InvokeFunction"
              Resource: !GetAtt GetImageFunction.Arn
```

The `api.yaml` file defines the two routes. `/get-image` uses a Lambda proxy integration with the `GetImageFunction`, while `/generate-image` uses the `EventBridge-PutEvents` integration subtype to put the request body directly onto the event bus as a `PreparePrompt` event:

```yaml
openapi: "3.0.1"
info:
  title: "HTTP API"
paths:
  /get-image:
    get:
      parameters:
        - name: s3Key
          in: query
          description: "Path to image in S3 bucket"
          required: true
          schema:
            type: string
      responses:
        200:
          description: "Successful response"
          content:
            application/json:
              schema:
                type: object
                properties:
                  url:
                    type: string
                    description: "URL of the retrieved image"
      x-amazon-apigateway-integration:
        type: "aws_proxy"
        httpMethod: "POST"
        uri:
          Fn::Sub: arn:aws:apigateway:${AWS::Region}:lambda:path/2015-03-31/functions/${GetImageFunction.Arn}/invocations
        credentials:
          Fn::GetAtt: [HttpApiLambdaRole, Arn]
        payloadFormatVersion: "1.0"
        passthroughBehavior: "when_no_match"
  /generate-image:
    post:
      responses:
        default:
          description: "API to EventBridge"
      x-amazon-apigateway-integration:
        integrationSubtype: "EventBridge-PutEvents"
        credentials:
          Fn::GetAtt: [HttpApiEvenbridgeRole, Arn]
        requestParameters:
          Detail: "$request.body"
          DetailType: PreparePrompt
          Source: api-gateway
          EventBusName:
            Fn::GetAtt: [PostToImageEventBus, Name]
        payloadFormatVersion: "1.0"
        type: "aws_proxy"
        connectionType: "INTERNET"
x-amazon-apigateway-importexport-version: "1.0"
x-amazon-apigateway-cors:
  allowOrigins:
    - "*"
  allowHeaders:
    - "*"
  allowMethods:
    - "PUT"
    - "POST"
    - "DELETE"
    - "HEAD"
    - "GET"
```

To store the generated images, I added an S3 bucket with CORS enabled, together with a bucket policy:

```yaml
ImageUploadBucket:
  Type: AWS::S3::Bucket
  Properties:
    BucketName: !Sub "${AWS::StackName}-image-upload-bucket"
    PublicAccessBlockConfiguration:
      BlockPublicAcls: false
      BlockPublicPolicy: false
      IgnorePublicAcls: false
      RestrictPublicBuckets: false
    CorsConfiguration:
      CorsRules:
        - AllowedHeaders:
            - "*"
          AllowedMethods:
            - PUT
            - POST
            - DELETE
            - HEAD
            - GET
          AllowedOrigins:
            - "*"
          ExposedHeaders: []

# This makes the bucket available for everyone to do PUT and GET
ImageUploadBucketPolicy:
  Type: AWS::S3::BucketPolicy
  Properties:
    Bucket: !Ref ImageUploadBucket
    PolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal: "*"
          Action:
            - s3:PutObject
            - s3:GetObject
          Resource: !Sub "arn:aws:s3:::${AWS::StackName}-image-upload-bucket/*"
```

The workflow itself is defined as a state machine. After the prompt has been prepared, the `CheckBedRock` choice state looks at the `bedrock` flag: the Bedrock route generates the image and uploads it to S3 in a single step, while the DALL-E route needs a separate upload step:

```yaml
PostToImageStateMachine:
  Type: "AWS::Serverless::StateMachine"
  Properties:
    Definition:
      StartAt: PromptPreparation
      States:
        PromptPreparation:
          Type: Task
          Resource: !GetAtt PromptPreparationAPIFunction.Arn
          Next: CheckBedRock
        # Choice to see which route to take
        CheckBedRock:
          Type: Choice
          Choices:
            - Variable: "$.bedrock"
              BooleanEquals: true
              Next: BedrockImageGeneration
          Default: DalleImageGeneration
        BedrockImageGeneration:
          Type: Task
          Resource: !GetAtt BedrockImageGenerationFunction.Arn
          End: true
        DalleImageGeneration:
          Type: Task
          Resource: !GetAtt DalleImageGenerationFunction.Arn
          Next: ImageUpload
        ImageUpload:
          Type: Task
          Resource: !GetAtt ImageUploadFunction.Arn
          End: true
    Role: !GetAtt PostToImageStateMachineRole.Arn

# Gives the step function permission to invoke the required Lambda functions
# and to manage its own executions
PostToImageStateMachineRole:
  Type: "AWS::IAM::Role"
  Properties:
    AssumeRolePolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal:
            Service:
              - states.amazonaws.com
          Action:
            - "sts:AssumeRole"
    Policies:
      - PolicyName: "StateMachineExecutionPolicy"
        PolicyDocument:
          Version: "2012-10-17"
          Statement:
            - Effect: Allow
              Action:
                - "lambda:InvokeFunction"
              Resource:
                - !GetAtt PromptPreparationAPIFunction.Arn
                - !GetAtt DalleImageGenerationFunction.Arn
                - !GetAtt ImageUploadFunction.Arn
                - !GetAtt BedrockImageGenerationFunction.Arn
            - Effect: Allow
              Action:
                - "states:StartExecution"
                - "states:DescribeExecution"
                - "states:StopExecution"
              Resource: "*"
```

Finally, I added the Lambda functions:

- The prompt preparation function detects the sentiment and key phrases of a text, and therefore needed permissions for AWS Comprehend.

```yaml
PromptPreparationAPIFunction:
  Type: AWS::Serverless::Function
  Properties:
    Handler: bootstrap
    Runtime: provided.al2023
    CodeUri: prepare-prompt/
    Timeout: 30
    Policies:
      - AWSLambdaBasicExecutionRole
      - Version: "2012-10-17"
        Statement:
          - Effect: "Allow"
            Action:
              - comprehend:DetectSentiment
              - comprehend:DetectKeyPhrases
            Resource: "*"
```

- The DALL-E image generation function did not need any special permissions, since it sends its request to OpenAI, which is not an AWS service. It did, however, need an environment variable holding the API key that the Lambda uses to authorize its requests to DALL-E. The value comes from `!Ref OpenAIApiKey`, so the template also needs a parameter with that name.

```yaml
DalleImageGenerationFunction:
  Type: AWS::Serverless::Function
  Properties:
    Handler: bootstrap
    Runtime: provided.al2023
    CodeUri: generate-image-dalle/
    Timeout: 30
    Environment:
      Variables:
        OPENAI_API_KEY: !Ref OpenAIApiKey
    Policies:
      - AWSLambdaBasicExecutionRole
```

- The Bedrock image generation function needed to invoke Bedrock, and therefore needed permissions for AWS Bedrock.

```yaml
BedrockImageGenerationFunction:
  Type: AWS::Serverless::Function
  Properties:
    Handler: bootstrap
    Runtime: provided.al2023
    CodeUri: generate-image-bedrock/
    Timeout: 30
    Environment:
      Variables:
        BUCKET_NAME: !Ref ImageUploadBucket
    Policies:
      - AWSLambdaBasicExecutionRole
      - Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Action:
              - "bedrock:InvokeModel"
            Resource: "*"
```

- The image upload function did not need any special permissions, since the S3 bucket was already public.

```yaml
ImageUploadFunction:
  Type: AWS::Serverless::Function
  Properties:
    Handler: bootstrap
    Runtime: provided.al2023
    CodeUri: upload-image/
    Timeout: 30
    Environment:
      Variables:
        BUCKET_NAME: !Ref ImageUploadBucket
        REGION: !Ref AWS::Region
    Policies:
      - AWSLambdaBasicExecutionRole
```

- The last function was the one behind the `/get-image` route, integrated with API Gateway. It needed no special permissions since the S3 bucket was public, only an environment variable telling it which bucket to look in.

```yaml
GetImageFunction:
  Type: AWS::Serverless::Function
  Properties:
    Handler: bootstrap
    Runtime: provided.al2023
    CodeUri: get-image/
    Timeout: 30
    Environment:
      Variables:
        BUCKET_NAME: !Ref ImageUploadBucket
    Policies:
      - AWSLambdaBasicExecutionRole
```

With all resources defined, I built the project by running `sam build`.

The prompt preparation function works as follows:

- A Comprehend client is initialized to analyze the input text.

- Comprehend detects the sentiment of the text.

- The top sentiment is picked and converted to the corresponding emotion.

- The key phrases of the text are extracted and joined into a string.

- The prompt is prepared and passed on to the next step.

```go
package main

import (
	"context"
	"fmt"
	"strings"

	"github.com/aws/aws-lambda-go/lambda"
	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/session"
	"github.com/aws/aws-sdk-go/service/comprehend"
)

type InputEvent struct {
	Detail struct {
		Text    string `json:"text"`
		S3Key   string `json:"s3Key"`
		Bedrock bool   `json:"bedrock"`
	} `json:"detail"`
}

type OutputEvent struct {
	Prompt  string `json:"prompt"`
	S3Key   string `json:"s3Key"`
	Bedrock bool   `json:"bedrock"`
}

// getTopSentiment picks the sentiment with the highest score and converts it
// into an emotion for the prompt. A slice is used instead of a map so that
// ties are resolved in a fixed order (map iteration order is random in Go).
func getTopSentiment(scores *comprehend.SentimentScore) string {
	sentiments := []struct {
		name  string
		score float64
	}{
		{"Positive", aws.Float64Value(scores.Positive)},
		{"Negative", aws.Float64Value(scores.Negative)},
		{"Neutral", aws.Float64Value(scores.Neutral)},
		{"Mixed", aws.Float64Value(scores.Mixed)},
	}

	top := sentiments[0]
	for _, s := range sentiments[1:] {
		if s.score > top.score {
			top = s
		}
	}

	switch top.name {
	case "Positive":
		return "happy"
	case "Negative":
		return "sad"
	default: // Neutral and Mixed
		return "neutral"
	}
}

func handler(ctx context.Context, event InputEvent) (OutputEvent, error) {
	text := event.Detail.Text

	// Creating a Comprehend client, forcing eu-west-1 since Comprehend is not available in eu-north-1
	svc := comprehend.New(session.Must(session.NewSession(&aws.Config{
		Region: aws.String("eu-west-1"),
	})))

	// Detecting the sentiment of the provided text
	sentimentResult, err := svc.DetectSentimentWithContext(ctx, &comprehend.DetectSentimentInput{
		Text:         aws.String(text),
		LanguageCode: aws.String("en"),
	})
	if err != nil {
		return OutputEvent{}, fmt.Errorf("failed to detect sentiment: %w", err)
	}

	// Extracting the key phrases of the provided text
	keyPhraseResult, err := svc.DetectKeyPhrasesWithContext(ctx, &comprehend.DetectKeyPhrasesInput{
		Text:         aws.String(text),
		LanguageCode: aws.String("en"),
	})
	if err != nil {
		return OutputEvent{}, fmt.Errorf("failed to detect key phrases: %w", err)
	}

	// Start the keyword list with the emotion, then append all key phrases
	keyPhrases := []string{getTopSentiment(sentimentResult.SentimentScore)}
	for _, phrase := range keyPhraseResult.KeyPhrases {
		keyPhrases = append(keyPhrases, aws.StringValue(phrase.Text))
	}

	// Creating the prompt to be used for image generation
	summary := strings.Join(keyPhrases, " ")
	prompt := fmt.Sprintf("Generate an image based on the following key words: %s", summary)

	// Returning the output to the next step of the state machine
	return OutputEvent{Prompt: prompt, S3Key: event.Detail.S3Key, Bedrock: event.Detail.Bedrock}, nil
}

func main() {
	lambda.Start(handler)
}
```
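Comprehend's response types make this logic awkward to unit-test without calling AWS. One option, sketched here rather than taken from the project, is to keep the sentiment mapping and the prompt building in plain functions that take the four scores and the key phrases as ordinary Go values. `topEmotion` and `buildPrompt` are hypothetical names; ties are broken in the fixed order Positive, Negative, Neutral, Mixed.

```go
package main

import "strings"

// topEmotion maps Comprehend's four sentiment scores to an emotion word.
// On a tie, the sentiment listed first wins.
func topEmotion(positive, negative, neutral, mixed float64) string {
	names := []string{"Positive", "Negative", "Neutral", "Mixed"}
	scores := []float64{positive, negative, neutral, mixed}

	best := 0
	for i := 1; i < len(scores); i++ {
		if scores[i] > scores[best] {
			best = i
		}
	}

	switch names[best] {
	case "Positive":
		return "happy"
	case "Negative":
		return "sad"
	default: // Neutral and Mixed
		return "neutral"
	}
}

// buildPrompt puts the emotion first, followed by the key phrases.
func buildPrompt(emotion string, keyPhrases []string) string {
	words := append([]string{emotion}, keyPhrases...)
	return "Generate an image based on the following key words: " + strings.Join(words, " ")
}
```

The handler would then only translate the Comprehend response into these plain values, for example by passing `aws.Float64Value(scores.Positive)` and friends to `topEmotion`.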

The DALL-E image generation function does the following:

- Retrieve the OpenAI API key from the environment variables.

- Create a request body for the DALL-E API using the provided prompt.

- Send a request to the DALL-E API to generate an image.

- Read and parse the response from the DALL-E API.

- Extract the image URL from the response.

- Send the image URL and S3 key to the next function.

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"fmt"
	"io"
	"log"
	"net/http"
	"os"

	"github.com/aws/aws-lambda-go/lambda"
)

type InputEvent struct {
	Prompt string `json:"prompt"`
	S3Key  string `json:"s3Key"`
}

type OutputEvent struct {
	Url   string `json:"url"`
	S3Key string `json:"s3Key"`
}

// Type for how the request to DALL-E should be structured
type DalleRequest struct {
	Model  string `json:"model"`
	Prompt string `json:"prompt"`
	Size   string `json:"size"`
	N      int    `json:"n"`
}

// Type for how the response is structured
type ResponseObject struct {
	Data []struct {
		Url string `json:"url"`
	} `json:"data"`
}

func handler(ctx context.Context, event InputEvent) (OutputEvent, error) {
	// Grabbing our API key from the environment variable
	openaiApiKey := os.Getenv("OPENAI_API_KEY")
	if openaiApiKey == "" {
		log.Println("Error: OPENAI_API_KEY environment variable is not set")
		return OutputEvent{}, fmt.Errorf("missing OPENAI_API_KEY")
	}

	// Preparing the DALL-E request payload
	payload, err := json.Marshal(DalleRequest{
		Model:  "dall-e-3",
		Prompt: event.Prompt,
		Size:   "1024x1024",
		N:      1,
	})
	if err != nil {
		log.Println("Error marshalling data:", err)
		return OutputEvent{}, err
	}

	// Creating the request; passing ctx cancels it if the Lambda times out
	client := &http.Client{}
	req, err := http.NewRequestWithContext(ctx, http.MethodPost, "https://api.openai.com/v1/images/generations", bytes.NewReader(payload))
	if err != nil {
		log.Println("Error creating request:", err)
		return OutputEvent{}, err
	}
	req.Header.Set("Content-Type", "application/json")
	req.Header.Set("Authorization", "Bearer "+openaiApiKey)

	// Sending the request to OpenAI
	resp, err := client.Do(req)
	if err != nil {
		log.Println("Error sending request:", err)
		return OutputEvent{}, err
	}
	defer resp.Body.Close()

	// Reading all data from the response
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		log.Println("Error reading response body:", err)
		return OutputEvent{}, err
	}

	// If the response is not of status OK, an error is returned
	if resp.StatusCode != http.StatusOK {
		log.Printf("Non-OK HTTP status: %s\nResponse body: %s\n", resp.Status, string(body))
		return OutputEvent{}, fmt.Errorf("non-OK HTTP status: %s", resp.Status)
	}

	// Unmarshalling the response
	var respData ResponseObject
	if err := json.Unmarshal(body, &respData); err != nil {
		log.Println("Error unmarshalling response:", err)
		return OutputEvent{}, err
	}
	if len(respData.Data) == 0 {
		log.Println("No data received in the response")
		return OutputEvent{}, fmt.Errorf("no data in the response")
	}

	// Passing the image URL and S3 key on to the upload step
	return OutputEvent{Url: respData.Data[0].Url, S3Key: event.S3Key}, nil
}

func main() {
	lambda.Start(handler)
}
```

The Bedrock image generation function does the following:

- Read the S3 bucket name from an environment variable and load the AWS configuration.

- Prepare the payload that wraps the text prompt into the parameters required for the image generation task, such as the image dimensions.

- Invoke the Titan image generation model with the prepared payload.

- The Titan model processes the prompt and returns a base64-encoded image.

- Decode the base64-encoded image into a byte array.

- Upload the image to S3.

```go
package main

import (
	"bytes"
	"context"
	"encoding/base64"
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"os"

	"github.com/aws/aws-lambda-go/lambda"
	"github.com/aws/aws-sdk-go-v2/config"
	"github.com/aws/aws-sdk-go-v2/service/bedrockruntime"
	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/session"
	"github.com/aws/aws-sdk-go/service/s3"
)

type InputEvent struct {
	Prompt string `json:"prompt"`
	S3Key  string `json:"s3Key"`
}

// Titan-specific types for requests towards Bedrock
type TitanInputTextToImageInput struct {
	TaskType              string                      `json:"taskType"`
	ImageGenerationConfig TitanInputTextToImageConfig `json:"imageGenerationConfig"`
	TextToImageParams     TitanInputTextToImageParams `json:"textToImageParams"`
}

type TitanInputTextToImageParams struct {
	Text         string `json:"text"`
	NegativeText string `json:"negativeText,omitempty"`
}

type TitanInputTextToImageConfig struct {
	NumberOfImages int     `json:"numberOfImages,omitempty"`
	Height         int     `json:"height,omitempty"`
	Width          int     `json:"width,omitempty"`
	Scale          float64 `json:"cfgScale,omitempty"`
	Seed           int     `json:"seed,omitempty"`
}

type TitanInputTextToImageOutput struct {
	Images []string `json:"images"`
	Error  string   `json:"error"`
}

func handler(ctx context.Context, event InputEvent) error {
	// Grabbing the name of the bucket from the environment variable
	bucketName := os.Getenv("BUCKET_NAME")
	if bucketName == "" {
		log.Println("Error: BUCKET_NAME environment variable is not set")
		return fmt.Errorf("missing BUCKET_NAME")
	}

	// Preparing the config for the Bedrock runtime, forcing us-east-1 since it is not available in eu-north-1
	cfg, err := config.LoadDefaultConfig(ctx, config.WithRegion("us-east-1"))
	if err != nil {
		return fmt.Errorf("unable to load AWS config: %w", err)
	}
	runtime := bedrockruntime.NewFromConfig(cfg)

	// Preparing the request payload for Bedrock
	payload, err := json.Marshal(TitanInputTextToImageInput{
		TaskType:          "TEXT_IMAGE",
		TextToImageParams: TitanInputTextToImageParams{Text: event.Prompt},
		ImageGenerationConfig: TitanInputTextToImageConfig{
			NumberOfImages: 1,
			Scale:          8.0,
			Height:         1024,
			Width:          1024,
		},
	})
	if err != nil {
		return fmt.Errorf("unable to marshal body: %w", err)
	}

	// Sending the request to Bedrock
	resp, err := runtime.InvokeModel(ctx, &bedrockruntime.InvokeModelInput{
		Accept:      aws.String("*/*"),
		ContentType: aws.String("application/json"),
		ModelId:     aws.String("amazon.titan-image-generator-v1"),
		Body:        payload,
	})
	if err != nil {
		return fmt.Errorf("error from Bedrock: %w", err)
	}

	var output TitanInputTextToImageOutput
	if err := json.Unmarshal(resp.Body, &output); err != nil {
		return fmt.Errorf("unable to unmarshal response from Bedrock: %w", err)
	}
	// Guarding against an empty result instead of panicking on Images[0]
	if output.Error != "" || len(output.Images) == 0 {
		return fmt.Errorf("no image returned from Bedrock: %q", output.Error)
	}

	// Decoding base64 to be able to upload the image to S3
	decoded, err := base64.StdEncoding.DecodeString(output.Images[0])
	if err != nil {
		return fmt.Errorf("unable to decode image: %w", err)
	}

	// Creating an S3 client (the region comes from the Lambda environment)
	s3Client := s3.New(session.Must(session.NewSession()))

	// Uploading the image to S3
	_, err = s3Client.PutObjectWithContext(ctx, &s3.PutObjectInput{
		Bucket:      aws.String(bucketName),
		Key:         aws.String(event.S3Key),
		Body:        bytes.NewReader(decoded),
		ContentType: aws.String(http.DetectContentType(decoded)),
	})
	if err != nil {
		log.Println("Error uploading image to S3:", err)
		return err
	}

	log.Println("Successfully uploaded image to S3:", event.S3Key)
	return nil
}

func main() {
	lambda.Start(handler)
}
```
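To see exactly what the Bedrock function sends, the request types can be marshalled on their own. This small sketch reuses the struct shapes from the handler (the output type is left out); `titanPayload` is a hypothetical helper, and the expected JSON in the test follows from the struct tags and `omitempty`, not from a captured request.

```go
package main

import "encoding/json"

type TitanInputTextToImageParams struct {
	Text         string `json:"text"`
	NegativeText string `json:"negativeText,omitempty"`
}

type TitanInputTextToImageConfig struct {
	NumberOfImages int     `json:"numberOfImages,omitempty"`
	Height         int     `json:"height,omitempty"`
	Width          int     `json:"width,omitempty"`
	Scale          float64 `json:"cfgScale,omitempty"`
	Seed           int     `json:"seed,omitempty"`
}

type TitanInputTextToImageInput struct {
	TaskType              string                      `json:"taskType"`
	ImageGenerationConfig TitanInputTextToImageConfig `json:"imageGenerationConfig"`
	TextToImageParams     TitanInputTextToImageParams `json:"textToImageParams"`
}

// titanPayload builds the same request body as the handler for a given prompt.
// Zero-valued fields tagged omitempty (seed, negativeText) are left out.
func titanPayload(prompt string) ([]byte, error) {
	return json.Marshal(TitanInputTextToImageInput{
		TaskType:          "TEXT_IMAGE",
		TextToImageParams: TitanInputTextToImageParams{Text: prompt},
		ImageGenerationConfig: TitanInputTextToImageConfig{
			NumberOfImages: 1,
			Scale:          8.0,
			Height:         1024,
			Width:          1024,
		},
	})
}
```

Because `Seed` is omitted when zero, Titan picks a seed itself; setting it to a fixed non-zero value would be one way to get repeatable images while tuning prompts.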

The image upload function, used on the DALL-E route, does the following:

- Retrieve the bucket name and region from environment variables.

- Download the image from the provided URL.

- Read the image data from the HTTP response.

- Initialize an S3 client.

- Upload the image data to the specified S3 bucket using the provided S3 key.

```go
package main

import (
	"bytes"
	"context"
	"fmt"
	"io"
	"log"
	"net/http"
	"os"

	"github.com/aws/aws-lambda-go/lambda"
	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/session"
	"github.com/aws/aws-sdk-go/service/s3"
)

type Event struct {
	Url   string `json:"url"`
	S3Key string `json:"s3Key"`
}

func handler(ctx context.Context, event Event) error {
	// Grabbing the bucket name and region from the environment variables
	bucketName := os.Getenv("BUCKET_NAME")
	if bucketName == "" {
		log.Println("Error: BUCKET_NAME environment variable is not set")
		return fmt.Errorf("missing BUCKET_NAME")
	}
	region := os.Getenv("REGION")
	if region == "" {
		log.Println("Error: REGION environment variable is not set")
		return fmt.Errorf("missing REGION")
	}

	// Downloading the image from the provided URL; ctx cancels the download if the Lambda times out
	req, err := http.NewRequestWithContext(ctx, http.MethodGet, event.Url, nil)
	if err != nil {
		log.Println("Error creating download request:", err)
		return err
	}
	imageResp, err := http.DefaultClient.Do(req)
	if err != nil {
		log.Println("Error downloading image:", err)
		return err
	}
	defer imageResp.Body.Close()

	if imageResp.StatusCode != http.StatusOK {
		log.Printf("Error: received non-OK HTTP status when downloading image: %s\n", imageResp.Status)
		return fmt.Errorf("non-OK HTTP status when downloading image: %s", imageResp.Status)
	}

	imageData, err := io.ReadAll(imageResp.Body)
	if err != nil {
		log.Println("Error reading image data:", err)
		return err
	}

	// Creating an S3 client in the configured region
	sesh := session.Must(session.NewSession(&aws.Config{Region: aws.String(region)}))
	s3Client := s3.New(sesh)

	// Uploading the image to S3
	_, err = s3Client.PutObjectWithContext(ctx, &s3.PutObjectInput{
		Bucket:      aws.String(bucketName),
		Key:         aws.String(event.S3Key),
		Body:        bytes.NewReader(imageData),
		ContentType: aws.String(http.DetectContentType(imageData)),
	})
	if err != nil {
		log.Println("Error uploading image to S3:", err)
		return err
	}

	log.Println("Successfully uploaded image to S3:", event.S3Key)
	return nil
}

func main() {
	lambda.Start(handler)
}
```

The function behind `/get-image` does the following:

- Retrieve the bucket name from the environment variables.

- Extract the `s3Key` query parameter from the request.

- Initialize an S3 client.

- Check if the object exists in the S3 bucket using the `s3Key`.

- If the object exists, return a response with the object's URL.

- If the object does not exist or there is an error, return an error message.

```go
package main

import (
	"context"
	"encoding/json"
	"fmt"
	"log"
	"os"

	"github.com/aws/aws-lambda-go/events"
	"github.com/aws/aws-lambda-go/lambda"
	"github.com/aws/aws-sdk-go/aws"
	"github.com/aws/aws-sdk-go/aws/session"
	"github.com/aws/aws-sdk-go/service/s3"
)

func handler(ctx context.Context, request events.APIGatewayProxyRequest) (events.APIGatewayProxyResponse, error) {
	// Grabbing the name of the bucket from the environment variable
	bucketName := os.Getenv("BUCKET_NAME")
	if bucketName == "" {
		log.Println("Error: BUCKET_NAME environment variable is not set")
		// A missing setting is a server-side problem, so 500 rather than 400
		return events.APIGatewayProxyResponse{
			StatusCode: 500,
			Body:       "missing BUCKET_NAME",
		}, nil
	}

	// Extracting the S3 key from the query string
	s3Key, exists := request.QueryStringParameters["s3Key"]
	if !exists || s3Key == "" {
		return events.APIGatewayProxyResponse{
			StatusCode: 400,
			Body:       "Missing query parameter 's3Key'",
		}, nil
	}

	// Creating a connection to S3
	s3Client := s3.New(session.Must(session.NewSession()))

	// Checking if the image exists. Any error is treated as "not there yet";
	// note that S3 answers 403 rather than 404 for missing keys when the caller
	// lacks s3:ListBucket.
	_, err := s3Client.HeadObjectWithContext(ctx, &s3.HeadObjectInput{
		Bucket: aws.String(bucketName),
		Key:    aws.String(s3Key),
	})
	if err != nil {
		log.Printf("Object %q not available yet: %v", s3Key, err)
		return events.APIGatewayProxyResponse{
			StatusCode: 404,
			Body:       "Object does not exist",
		}, nil
	}

	// If it does exist, return its public URL. AWS_REGION is set by the Lambda runtime.
	body, _ := json.Marshal(map[string]string{
		"url": fmt.Sprintf("https://%s.s3.%s.amazonaws.com/%s", bucketName, os.Getenv("AWS_REGION"), s3Key),
	})
	return events.APIGatewayProxyResponse{
		StatusCode: 200,
		Headers:    map[string]string{"Content-Type": "application/json"},
		Body:       string(body),
	}, nil
}

func main() {
	lambda.Start(handler)
}
```
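The handler interpolates the S3 key into the URL as-is, which works for keys like `image/testing` but not for keys containing spaces or characters such as `?`. A small helper that escapes each path segment, and takes the region as a parameter rather than fixing it in the format string, is sketched below; `objectURL` is a hypothetical name and not part of the project.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// objectURL builds a virtual-hosted-style S3 URL, escaping each segment of the
// key while keeping "/" as the separator between segments.
func objectURL(bucket, region, key string) string {
	segments := strings.Split(key, "/")
	for i, s := range segments {
		segments[i] = url.PathEscape(s)
	}
	return fmt.Sprintf("https://%s.s3.%s.amazonaws.com/%s", bucket, region, strings.Join(segments, "/"))
}
```

Inside the Lambda, the call would look like `objectURL(bucketName, os.Getenv("AWS_REGION"), s3Key)`.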

For the frontend, I ran `npx create-react-app my-app --template typescript` in a new folder. I then created a folder named `components`, in which I added another folder named `mainContent` containing a file named `index.tsx`. Since the focus of this project was not on the frontend, I decided to keep all my content in a single component, which looked like this:

```tsx
import React from "react";
import { Button, Flex, Layout, Spin, Image, Switch, Typography } from "antd";
import TextArea from "antd/es/input/TextArea";
import { useMutation, useQuery } from "@tanstack/react-query";
import { getImage, sendPost } from "../../utils/api";

const { Paragraph, Text } = Typography;
const { Header, Content } = Layout;

const headerStyle: React.CSSProperties = {
  textAlign: "center",
  color: "#fff",
  height: 64,
  paddingInline: 48,
  lineHeight: "64px",
  backgroundColor: "#00415a",
};

const contentStyle: React.CSSProperties = {
  textAlign: "center",
  minHeight: 120,
  lineHeight: "120px",
  color: "#fff",
  backgroundColor: "#00719c",
};

const layoutStyle: React.CSSProperties = {
  borderRadius: 8,
  overflow: "hidden",
  width: "calc(50% - 8px)",
  maxWidth: "calc(50% - 8px)",
  marginTop: "10vh",
  height: "100%",
};

const textAreaStyle: React.CSSProperties = {
  width: "80%",
  height: "80%",
};

const buttonStyle: React.CSSProperties = {
  width: "80%",
  height: "80%",
  marginBottom: 16,
  backgroundColor: "#009bd6",
};

const paragraphStyle: React.CSSProperties = {
  margin: 10,
};

const textStyle: React.CSSProperties = {
  color: "white",
};

export default function MainContent() {
  const [post, setPost] = React.useState("");
  const [image, setImage] = React.useState("");
  const [s3Key, setS3Key] = React.useState("");
  const [refetch, setRefetch] = React.useState(false);
  const [loading, setLoading] = React.useState(false);
  const [bedrock, setBedrock] = React.useState(false);

  // A function to trigger the generation of an image
  const { mutate } = useMutation({
    mutationFn: () => {
      // A new key per request, so polling cannot pick up an earlier image
      const key = `image/${Date.now()}`;
      setS3Key(key);
      setImage("");
      setLoading(true);
      setRefetch(true);
      return sendPost("/generate-image", { text: post, s3Key: key, bedrock });
    },
  });

  // Polls the /get-image endpoint every 3 seconds once an image has been requested.
  // When status 200 is returned, polling stops and the image is displayed.
  useQuery({
    queryKey: ["image", s3Key],
    refetchInterval: 3000,
    refetchIntervalInBackground: true,
    enabled: refetch && s3Key !== "",
    queryFn: async () => {
      const imageData = await getImage("/get-image", { s3Key });
      if (imageData.status === 200) {
        setRefetch(false);
        setImage(imageData.data);
        setLoading(false);
      }
      return imageData.data;
    },
  });

  const handlePostChange = (event: React.ChangeEvent<HTMLTextAreaElement>) => {
    setPost(event.target.value);
  };

  const handleBedrockChange = (checked: boolean) => {
    setBedrock(checked);
  };

  return (
    <Spin spinning={loading}>
      <Flex justify="center">
        <Layout style={layoutStyle}>
          <Header style={headerStyle}>Epic Post To Image POC</Header>
          <Content style={contentStyle}>
            <Paragraph style={paragraphStyle}>
              <Text strong style={textStyle}>
                Write a post as you would for a social media platform. Click
                generate to create an image for your post.
              </Text>
            </Paragraph>
            <TextArea
              style={textAreaStyle}
              rows={4}
              placeholder="Write here"
              onChange={handlePostChange}
            />
            <Paragraph style={paragraphStyle}>
              <Text strong style={textStyle}>
                Use Bedrock? (Toggle to use Bedrock)
              </Text>
              <Switch onChange={handleBedrockChange} />
            </Paragraph>
            {
              // If the image exists it will be shown
              image && (
                <Image width={"80%"} style={{ marginTop: "10px" }} src={image} />
              )
            }
            <Button type="primary" style={buttonStyle} onClick={() => mutate()}>
              Generate
            </Button>
          </Content>
        </Layout>
      </Flex>
    </Spin>
  );
}
```

For the API requests, I created a file named `api.ts` inside a folder called `utils`. The functions for the requests looked like this:

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

import axios from "axios";

const API_URL = process.env.REACT_APP_API_URL;

export async function sendPost(

url: string,

data: { text: string; s3Key: string; bedrock: boolean }

): Promise<{ status: number; data: string }> {

try {

const response = await axios({

method: "post",

url: `${API_URL}${url}`,

data,

});

return {

status: response.status,

data: response.data,

};

} catch (error: any) {

return {

status: error.response?.status || error.status,

data: error.response,

};

}

}

export async function getImage(

url: string,

params: { s3Key: string }

): Promise<{ status: number; data: string }> {

try {

const response = await axios({

method: "get",

url: `${API_URL}${url}`,

params,

});

return {

status: response.status,

data: response.data.url,

};

} catch (error: any) {

return {

status: error.response?.status || error.status,

data: error.response,

};

}

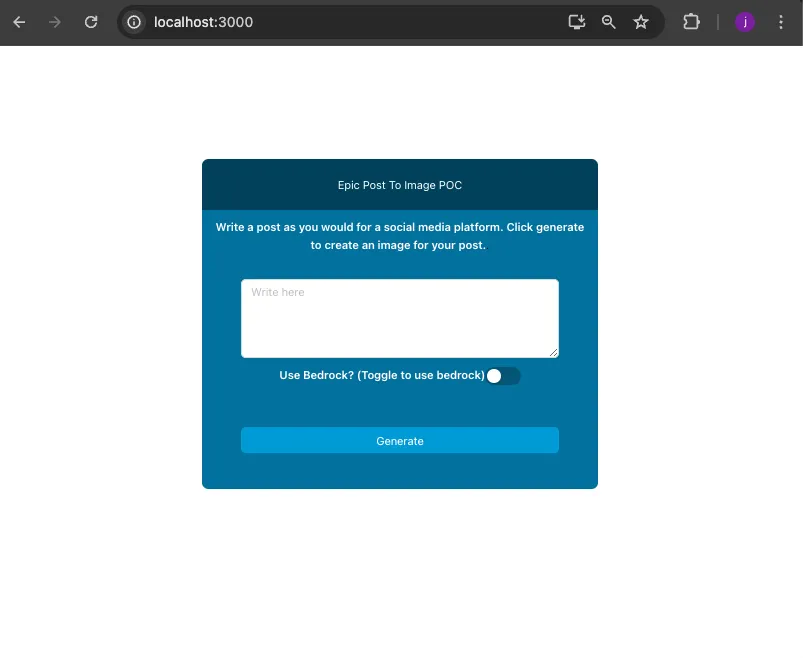

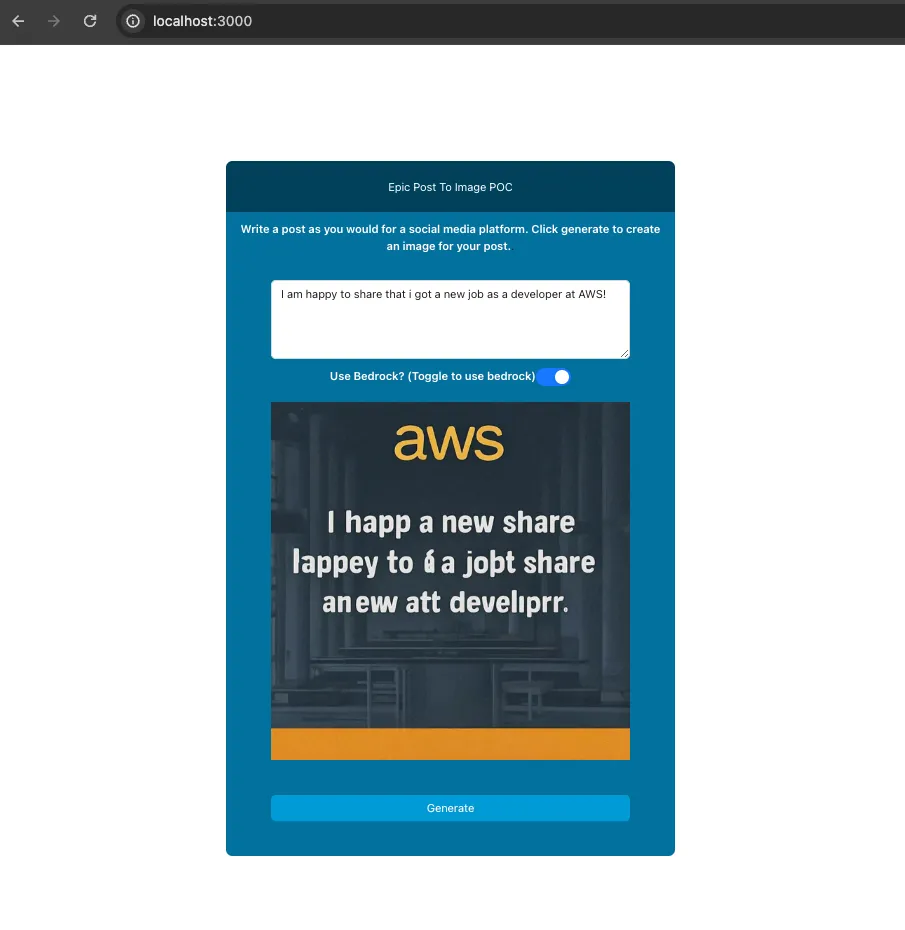

}- Display a layout with a header and content section.

- Allow the user to write a post in a text area.

- Providing a switch for the user to choose whether to use the Bedrock or DALL-E by default.

- When the "Generate" button is clicked, send the post data to the backend service to generate an image.

- Constantly poll for the generated image from the backend and display it once available.

- When the user requests for the image a loader is shown until the image is retrieved.

- Learn more about React Query

- Learn more about Antd

- Learn more about Comprehend

- Learn more about Bedrock

- Learn more about DALL-E

- My frontend github repository for this project: github

- My AWS github repository for this project: github