Generative AI Serverless - RAG using Bedrock Knowledge Base, Zero Setup, Single Document, Lambda and API!

RAG using Bedrock Knowledge Base single-document chat, Lambda, and API with the Anthropic Claude 3 Sonnet model

Published Jun 7, 2024

Generative AI: has it captured your imagination the way it has captured mine?

Generative AI is indeed fascinating! The advancements in foundation models have opened up incredible possibilities. Who would have imagined that technology would evolve to the point where you can generate content summaries from transcripts, have chatbots that can answer questions on any subject without requiring any coding on your part, or even create custom images based solely on your imagination by simply providing a prompt to a Generative AI service and foundation model? It's truly remarkable to witness the power and potential of Generative AI unfold.

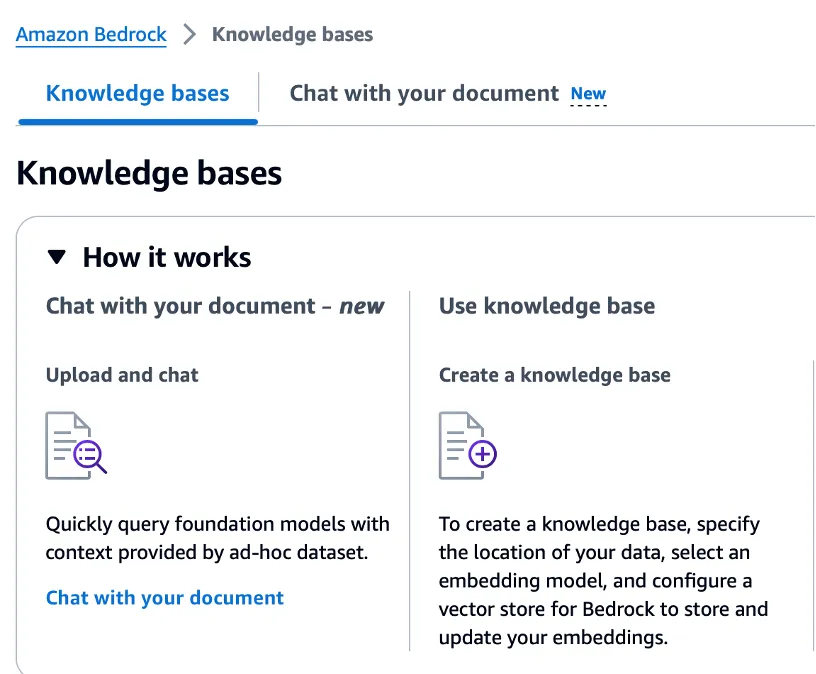

'Chat with your document' is the latest feature Amazon has added to Amazon Bedrock Knowledge Bases, an already feature-rich part of its GenAI and RAG offerings.

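RAG, which stands for Retrieval Augmented Generation, is becoming increasingly popular in the world of Generative AI. It lets organizations overcome limitations of LLMs, such as training data that is out of date or lacks their private information. RAG does this by retrieving relevant context from the organization's own data and passing it to the model together with the prompt.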
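The following toy Python sketch makes the retrieve-then-generate idea concrete. It is not how Bedrock works internally. It assumes a naive word-overlap retriever in place of real vector search, and it stops at the step of building the augmented prompt that would be sent to a model. The chunk texts and function names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased set of words, used by the toy retriever below."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Rank chunks by how many question words they share (a stand-in for vector search)."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q & tokenize(c)), reverse=True)
    return ranked[:top_k]


def build_augmented_prompt(question: str, context: list[str]) -> str:
    """Place the retrieved context in front of the question so the model answers from it."""
    context_block = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Hypothetical snippets standing in for chunks of a credit card brochure.
    chunks = [
        "The Travel card has no foreign transaction fee.",
        "The Cashback card earns 2% on groceries.",
        "Customer service is available 24/7.",
    ]
    question = "Does the Travel card charge a foreign transaction fee?"
    print(build_augmented_prompt(question, retrieve(question, chunks, top_k=1)))
```

With a managed service such as a Bedrock Knowledge Base, the retrieval and prompt-augmentation steps happen for you. That is why the rest of this solution needs no retriever code.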

Amazon Bedrock is a fully managed service that offers a choice of many foundation models through a single API, such as Anthropic Claude, AI21 Labs Jurassic-2, Stability AI's Stable Diffusion, Amazon Titan, and others.

I will use the recently released Anthropic Claude 3 Sonnet foundation model. I will invoke it first through the Bedrock Knowledge Base page in the AWS Console, and then through a Lambda function exposed as an API.

As of May 2024, this is the only model AWS supports for the single-document knowledge base, or 'Chat with your document', feature.

There are many use cases where the 'Chat with your document' feature can increase productivity. A few examples:

- A technical support team extracts information from a user manual to resolve customer questions quickly.
- An HR team answers questions based on policy documents.
- A developer looks up details about a specific function in technical documentation.
- A call center team addresses customer inquiries quickly by chatting with product documentation.

Let's look at our use case:

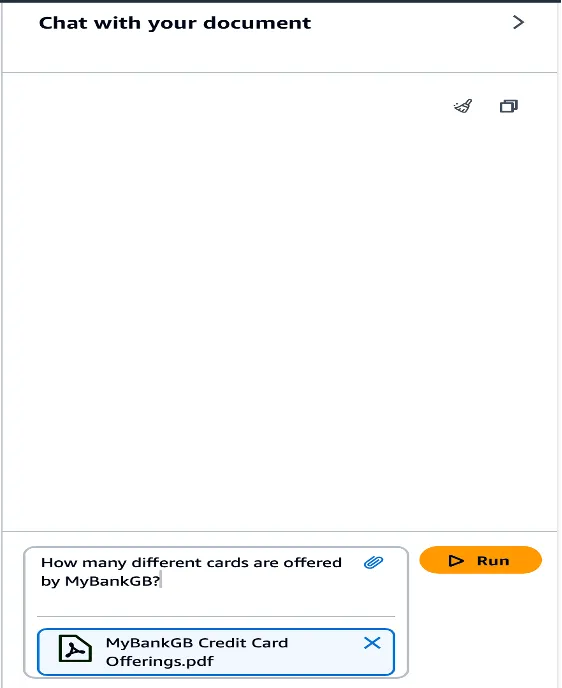

- MyBankGB, a fictitious bank, offers various credit cards to consumers. The document "MyBankGB Credit Card Offerings.pdf" contains detailed information about the features of every credit card the bank offers.

- MyBankGB is interested in implementing a Generative AI solution using the "Chat with your document" function of Amazon Bedrock Knowledge Base. This solution will enable the call center team to quickly access information about the card features and efficiently address customer inquiries.

- The solution needs to be API-based so that it can be invoked via different applications.

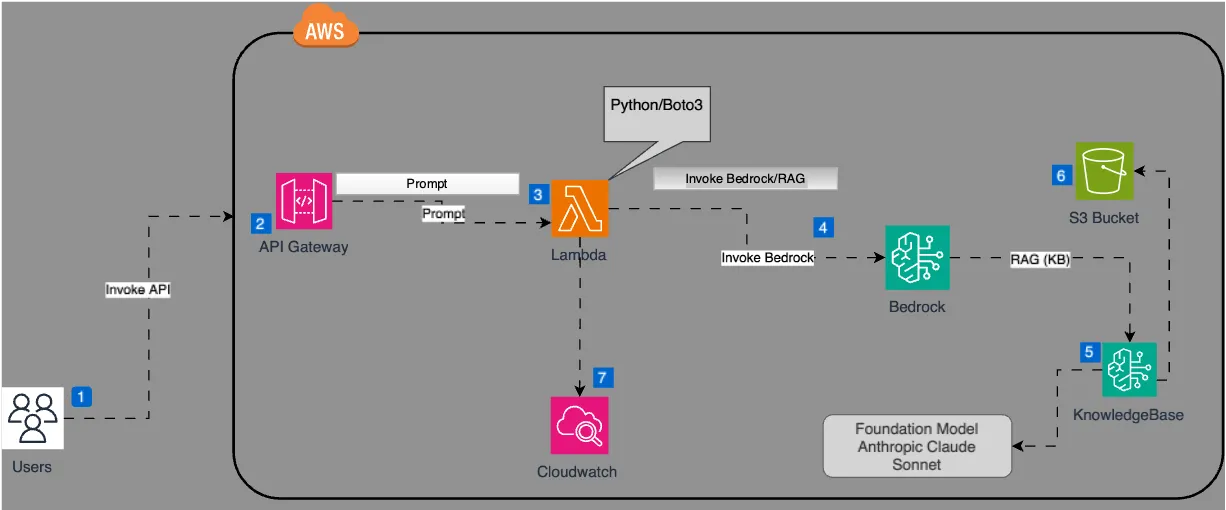

Here is the architecture diagram for our use case.

Let's walk through the steps. First, we create a single-document knowledge base in Bedrock and use it from the AWS Console. Then we create a Lambda function to invoke it through an API.

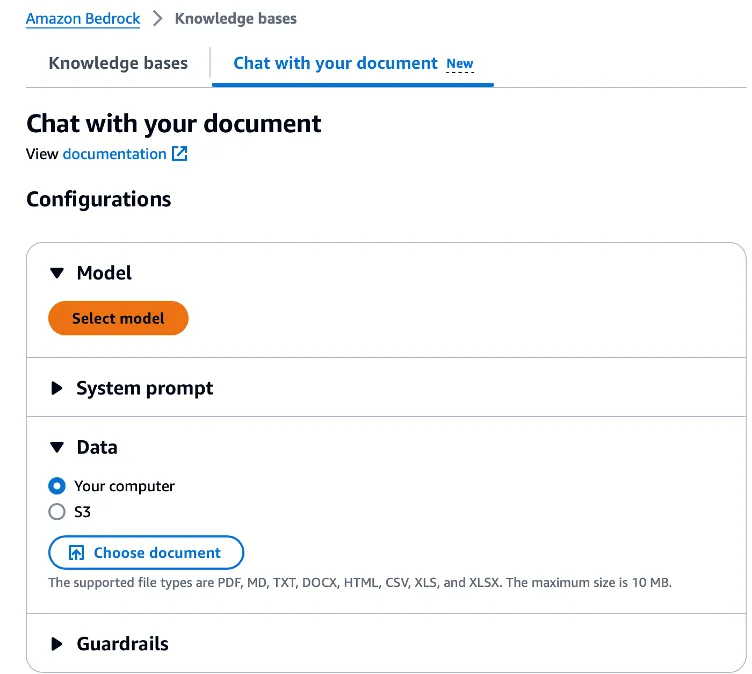

Review Amazon Bedrock 'Chat with your document'

'Chat with your document' is a new feature. You can use it from the AWS Console. You can also call it with the AWS SDK, for example from a Lambda function behind an API.
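The SDK route can be sketched with Boto3's `bedrock-agent-runtime` client and its `retrieve_and_generate` operation. The `EXTERNAL_SOURCES` type tells Bedrock to answer from the supplied document instead of from a pre-built vector store. This is a minimal sketch, not the author's exact code. It assumes Claude 3 Sonnet in `us-east-1` and a document already in S3. `build_request` and `chat_with_document` are hypothetical helper names.

```python
"""Sketch: 'Chat with your document' through the Bedrock Agent Runtime API."""

# Assumption: Claude 3 Sonnet in us-east-1; adjust the region/model ID for your account.
MODEL_ARN = (
    "arn:aws:bedrock:us-east-1::foundation-model/"
    "anthropic.claude-3-sonnet-20240229-v1:0"
)


def build_request(prompt: str, document_s3_uri: str, model_arn: str = MODEL_ARN) -> dict:
    """Keyword arguments for retrieve_and_generate with a single S3 document."""
    return {
        "input": {"text": prompt},
        "retrieveAndGenerateConfiguration": {
            # EXTERNAL_SOURCES: answer from the document passed here, no knowledge base ID.
            "type": "EXTERNAL_SOURCES",
            "externalSourcesConfiguration": {
                "modelArn": model_arn,
                "sources": [
                    {"sourceType": "S3", "s3Location": {"uri": document_s3_uri}}
                ],
            },
        },
    }


def chat_with_document(prompt: str, document_s3_uri: str, client=None) -> str:
    """Send the prompt and return the generated answer text."""
    if client is None:
        import boto3  # imported lazily so build_request works without boto3 installed

        client = boto3.client("bedrock-agent-runtime")
    response = client.retrieve_and_generate(**build_request(prompt, document_s3_uri))
    return response["output"]["text"]
```

Usage would look like `chat_with_document("What is the annual fee of the Travel card?", "s3://my-rag-bucket/mybankgb-credit-card-offerings.pdf")`. The bucket and key are placeholders. The optional `client` parameter lets the call be tested with a stub instead of a live AWS connection.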

For Data, you can either upload a file from your computer or point to a file stored in an S3 bucket by its S3 location.
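Both console options have SDK counterparts in the `sources` list of the `retrieve_and_generate` request. An S3 document is referenced by URI. A local file can be sent inline as bytes with the `BYTE_CONTENT` source type. This is a sketch under the assumption that the document is a PDF. The helper names are hypothetical, and any service-side size limits for inline content are not checked here.

```python
def s3_source(uri: str) -> dict:
    """Source entry pointing Bedrock at a document already stored in S3."""
    if not uri.startswith("s3://"):
        raise ValueError("expected an s3:// URI")
    return {"sourceType": "S3", "s3Location": {"uri": uri}}


def byte_source(identifier: str, data: bytes, content_type: str = "application/pdf") -> dict:
    """Source entry that sends a local file's bytes inline (the SDK analogue of 'upload')."""
    if not data:
        raise ValueError("document is empty")
    return {
        "sourceType": "BYTE_CONTENT",
        "byteContent": {
            "identifier": identifier,  # a name for the document, e.g. the file name
            "contentType": content_type,
            "data": data,
        },
    }
```

For a local file, you would call something like `byte_source("MyBankGB Credit Card Offerings.pdf", pathlib.Path("MyBankGB Credit Card Offerings.pdf").read_bytes())`. Either result goes into `externalSourcesConfiguration["sources"]`.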

Select a model. As of May 2024, Anthropic Claude 3 Sonnet is the only supported model.
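In code, the model is identified by its ARN rather than by its console name. On-demand foundation model ARNs have no account ID. This sketch builds the ARN for Claude 3 Sonnet and falls back to the `AWS_REGION` environment variable, which the Lambda runtime sets. The `us-east-1` default is an assumption.

```python
import os

# Claude 3 Sonnet's Bedrock model ID.
SONNET_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def foundation_model_arn(model_id: str, region: str = "") -> str:
    """ARN of an on-demand foundation model (note the empty account field '::')."""
    region = region or os.environ.get("AWS_REGION", "us-east-1")
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


if __name__ == "__main__":
    print(foundation_model_arn(SONNET_MODEL_ID, "us-east-1"))
```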

Request Model Access

Before you can use the model, you must request access to it on the Model access page of the Amazon Bedrock console.

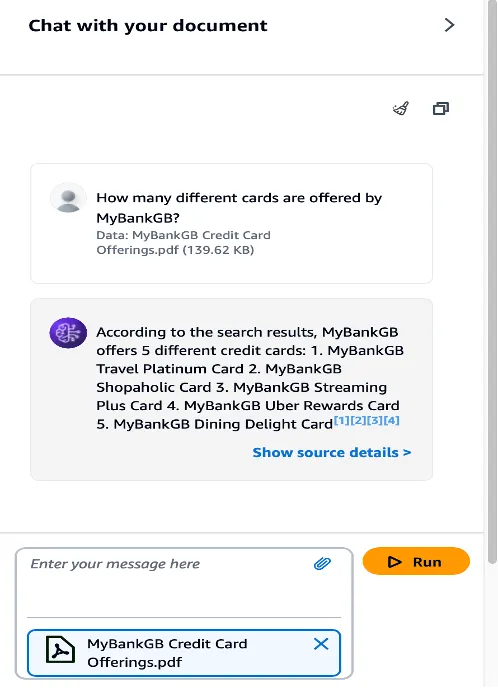

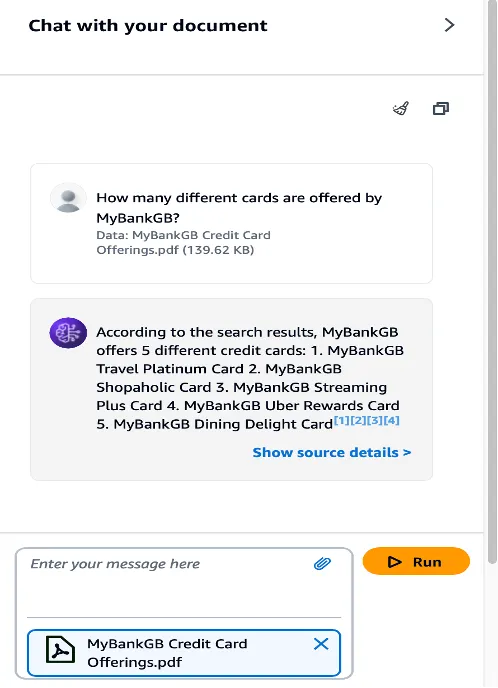

Chat with your document using the AWS Console

Let’s chat with the document and review the responses.

Review the response

Let's review more prompts and responses!

As you can see above, all answers are grounded in the document uploaded to the S3 bucket. This is where RAG makes generative AI responses more accurate and reliable and reduces hallucination.

However, our business use case calls for an API-based solution, so we will extend it with a Lambda function and an API that users or applications can invoke.

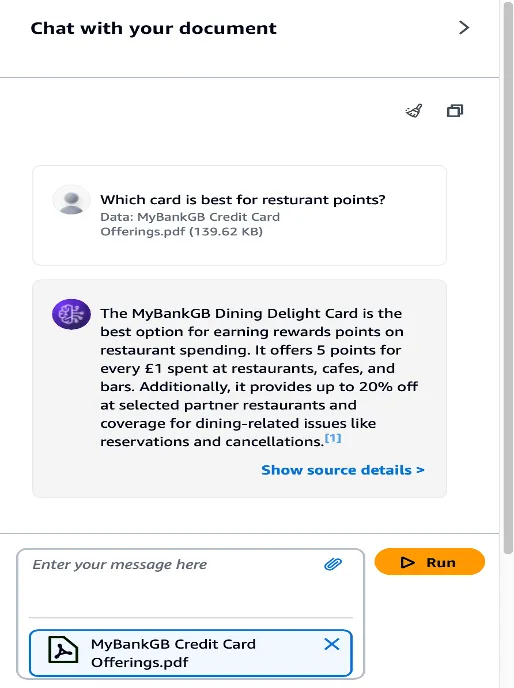

Create a SAM template

I will create a SAM template that defines the Lambda function. The function contains the code that invokes the Bedrock API with the required parameters and a prompt for RAG. The Lambda function can be created without a SAM template. However, I prefer an Infrastructure as Code approach because it makes cloud resources easy to recreate. Here is the SAM template for the Lambda function.
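SAM templates can also be written in JSON. The sketch below builds a minimal template as a Python dict and prints it as JSON. It is not the author's template. It assumes a Python 3.12 handler at `src/app.py` and an API Gateway `POST` route. The following are hypothetical placeholders: the logical IDs, the `/chat` path, and the `my-rag-bucket` bucket. The IAM actions shown are an assumption about what the function needs; narrow `Resource` for production use.

```python
import json


def sam_template(function_name: str = "RagChatFunction", api_path: str = "/chat") -> dict:
    """Minimal SAM template (JSON form) for a Python Lambda behind an API Gateway POST route."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Transform": "AWS::Serverless-2016-10-31",
        "Resources": {
            function_name: {
                "Type": "AWS::Serverless::Function",
                "Properties": {
                    "CodeUri": "src/",
                    "Handler": "app.lambda_handler",
                    "Runtime": "python3.12",
                    "Timeout": 60,  # model calls can exceed the 3-second default
                    "Policies": [
                        {
                            "Version": "2012-10-17",
                            "Statement": [
                                {
                                    "Effect": "Allow",
                                    "Action": ["bedrock:RetrieveAndGenerate", "bedrock:InvokeModel"],
                                    "Resource": "*",
                                },
                                {
                                    "Effect": "Allow",
                                    "Action": ["s3:GetObject"],
                                    "Resource": "arn:aws:s3:::my-rag-bucket/*",
                                },
                            ],
                        }
                    ],
                    "Events": {
                        "ChatApi": {
                            "Type": "Api",  # creates the implicit ServerlessRestApi
                            "Properties": {"Path": api_path, "Method": "post"},
                        }
                    },
                },
            }
        },
        "Outputs": {
            "ChatApiUrl": {
                "Value": {
                    "Fn::Sub": "https://${ServerlessRestApi}.execute-api.${AWS::Region}"
                    ".amazonaws.com/Prod" + api_path
                }
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(sam_template(), indent=2))
```

You could redirect the output to `template.json` and pass it to the SAM CLI with `-t template.json`. Most SAM projects keep the equivalent YAML in `template.yaml`.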

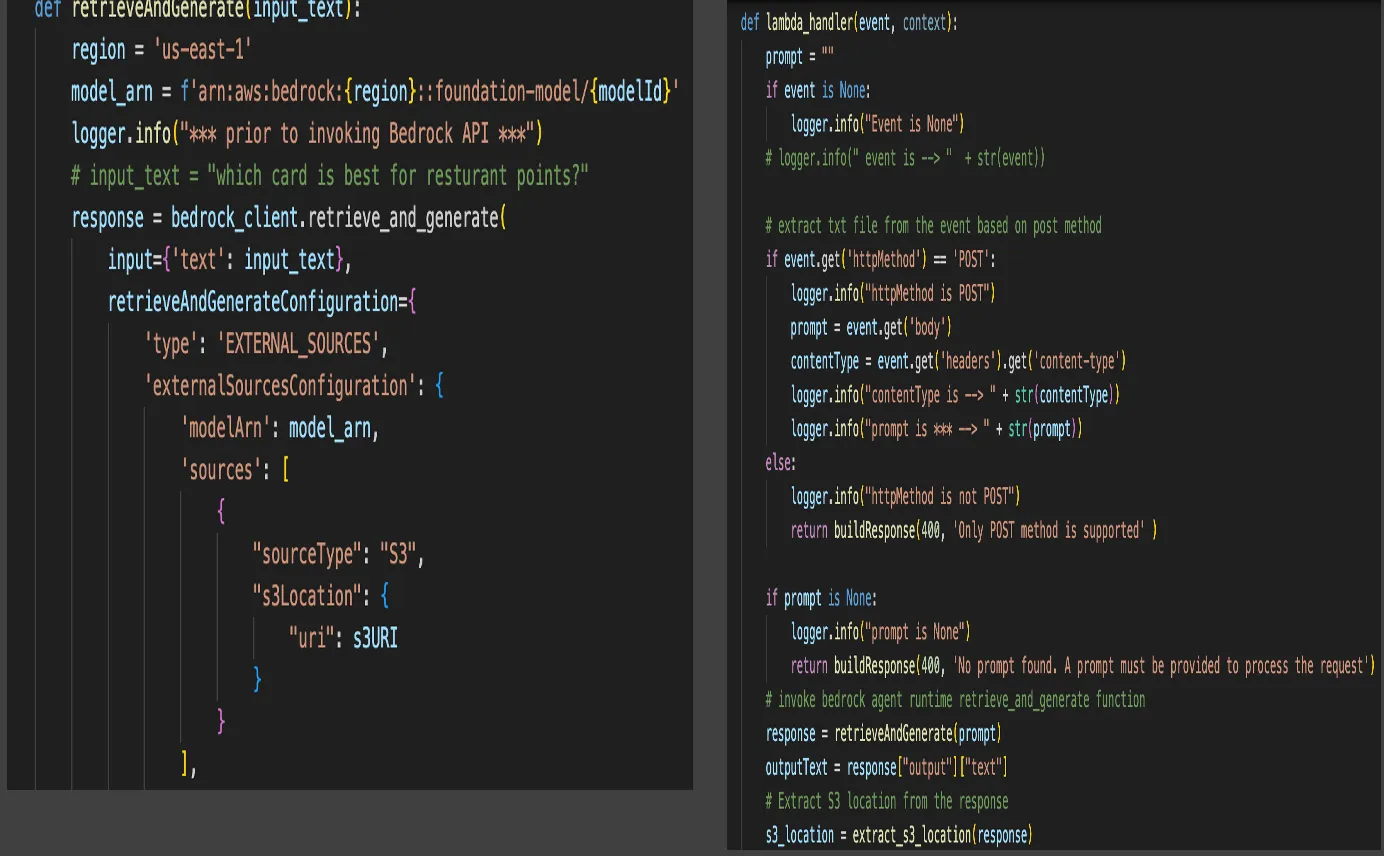

Create a Lambda Function

The Lambda function is the core of this automated solution. It contains the code that fulfills the business requirement: an API for a RAG-based generative AI solution. The function accepts a prompt and forwards it to the Bedrock API, which generates a response using the single-document knowledge base and the Anthropic Claude 3 Sonnet foundation model. Now, let's look at the code behind it.
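The following Python handler illustrates the Lambda function under stated assumptions. It is not the author's code. It assumes an API Gateway REST API with Lambda proxy integration and a JSON request body such as `{"prompt": "..."}`. The document URI and model ARN come from hypothetical `DOCUMENT_S3_URI` and `MODEL_ARN` environment variables, and the defaults are placeholders.

```python
import json
import os

# Hypothetical configuration, normally set as Lambda environment variables.
DOCUMENT_URI = os.environ.get(
    "DOCUMENT_S3_URI", "s3://my-rag-bucket/mybankgb-credit-card-offerings.pdf"
)
MODEL_ARN = os.environ.get(
    "MODEL_ARN",
    "arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-sonnet-20240229-v1:0",
)

_client = None


def _bedrock_client():
    """Create the client once per execution environment and reuse it across invocations."""
    global _client
    if _client is None:
        import boto3

        _client = boto3.client("bedrock-agent-runtime")
    return _client


def _response(status: int, body: dict) -> dict:
    """Response shape expected by API Gateway's Lambda proxy integration."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def lambda_handler(event, context, client=None):
    # Parse {"prompt": "..."} from the request body; reject anything else with a 400.
    try:
        prompt = json.loads(event.get("body") or "{}").get("prompt", "").strip()
    except (json.JSONDecodeError, AttributeError):
        return _response(400, {"error": "body must be a JSON object with a string 'prompt'"})
    if not prompt:
        return _response(400, {"error": "missing 'prompt'"})

    client = client or _bedrock_client()
    result = client.retrieve_and_generate(
        input={"text": prompt},
        retrieveAndGenerateConfiguration={
            "type": "EXTERNAL_SOURCES",
            "externalSourcesConfiguration": {
                "modelArn": MODEL_ARN,
                "sources": [{"sourceType": "S3", "s3Location": {"uri": DOCUMENT_URI}}],
            },
        },
    )
    return _response(200, {"answer": result["output"]["text"]})
```

Lambda calls the handler with only `event` and `context`. The extra `client` parameter exists so the handler can be exercised with a stub in tests.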

Build the function locally using AWS SAM

Next, build and validate the function locally with AWS SAM before deploying it to the AWS Cloud. The SAM commands used are:

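- `sam build`: builds the function and its dependencies into the `.aws-sam` folder

- `sam local invoke`: runs the function locally in a Docker container with a test event

- `sam deploy`: deploys the application to AWS through CloudFormation (`--guided` walks through the settings on the first deployment)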
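`sam local invoke` needs an event file that imitates an API Gateway request. This Python sketch writes a trimmed proxy event containing only the fields a handler expecting a JSON `prompt` body would read. The `/chat` path is a hypothetical placeholder. `sam local generate-event apigateway aws-proxy` can produce a complete sample event instead.

```python
import json


def api_gateway_event(prompt: str, path: str = "/chat") -> dict:
    """Trimmed API Gateway proxy event with a JSON body carrying the prompt."""
    return {
        "httpMethod": "POST",
        "path": path,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"prompt": prompt}),  # proxy events carry the body as a string
        "isBase64Encoded": False,
    }


if __name__ == "__main__":
    print(json.dumps(api_gateway_event("What rewards does the Travel card offer?"), indent=2))
```

A possible local run is `python make_event.py > events/event.json` followed by `sam local invoke RagChatFunction -e events/event.json`. Here `make_event.py` and `RagChatFunction` stand in for your script name and the function's logical ID in your template.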

Validate the GenAI Model response using a prompt

Prompt engineering is an essential component of any Generative AI solution. It is both art and science, as crafting an effective prompt is crucial for obtaining the desired response from the foundation model. Often, it requires multiple attempts and adjustments to the prompt to achieve the desired outcome from the Generative AI model.
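Iterating on a prompt is easier when the instructions live in one place. This sketch wraps the caller's question in call-center instructions before it is sent as the `input` text. The instruction wording and the `build_prompt` name are hypothetical and only illustrate the kind of constraints you might iterate on. They are not the prompt used in this solution.

```python
# Hypothetical instructions for the MyBankGB call-center use case; tune them iteratively.
INSTRUCTIONS = (
    "You are assisting a MyBankGB call center agent. "
    "Answer only from the credit card document. "
    "If the document does not contain the answer, reply exactly: "
    "'I could not find this in the credit card document.' "
    "Keep the answer under {max_sentences} sentences."
)


def build_prompt(question: str, max_sentences: int = 3) -> str:
    """Combine the fixed instructions with the agent's question."""
    question = " ".join(question.split())  # collapse stray whitespace and newlines
    if not question:
        raise ValueError("question must not be empty")
    return INSTRUCTIONS.format(max_sentences=max_sentences) + "\n\nQuestion: " + question
```

Keeping the instructions in a single constant means each prompt experiment is a one-line change that you can compare side by side against earlier responses.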

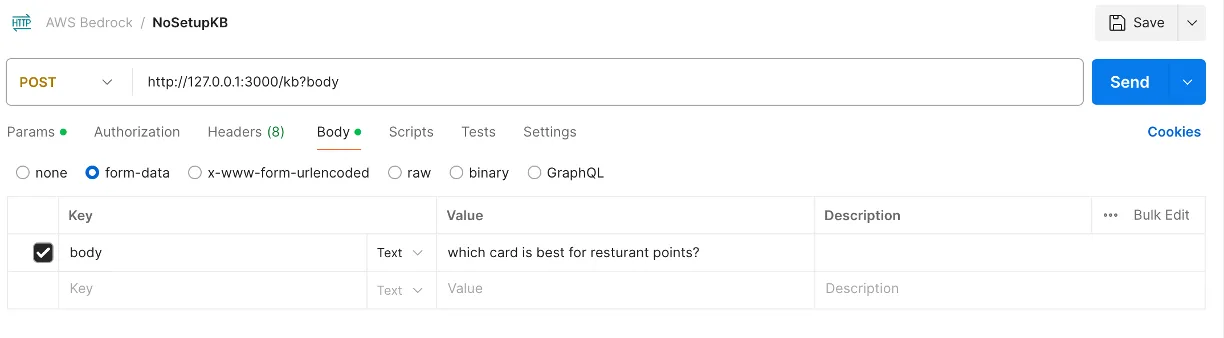

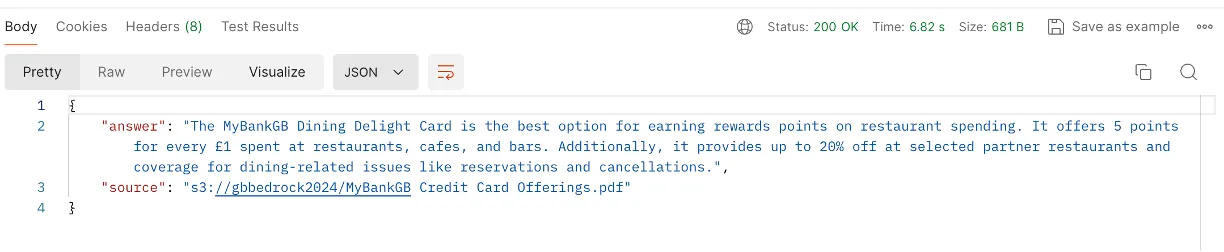

Because the solution is deployed behind Amazon API Gateway, it exposes an API endpoint after deployment. I'll use Postman to pass the prompt in the request and review the response. Optionally, the response can also be saved to an Amazon S3 bucket for later review.
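The Postman call can also be scripted. This sketch uses only the standard library to send the same JSON `POST`. It also includes a helper that stores a prompt and its response in S3 through a Boto3 S3 client's `put_object`. The following are assumptions: the endpoint placeholder, the `{"prompt": ...}` body shape, the `responses/` key prefix, and the helper names.

```python
import json
import urllib.request
from datetime import datetime, timezone


def build_api_request(endpoint: str, prompt: str) -> urllib.request.Request:
    """POST request equivalent to the Postman call: a JSON body with a 'prompt' field."""
    return urllib.request.Request(
        endpoint,
        data=json.dumps({"prompt": prompt}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_api(endpoint: str, prompt: str, timeout: float = 60.0) -> dict:
    """Call the deployed API and decode its JSON response."""
    with urllib.request.urlopen(build_api_request(endpoint, prompt), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def save_response_to_s3(s3_client, bucket: str, prompt: str, response: dict) -> str:
    """Store the prompt and response as JSON under a timestamped key; return the key."""
    key = "responses/" + datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ") + ".json"
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps({"prompt": prompt, "response": response}).encode("utf-8"),
        ContentType="application/json",
    )
    return key
```

For example, `ask_api("https://<api-id>.execute-api.<region>.amazonaws.com/Prod/chat", "Which card has no annual fee?")`, where the URL is the endpoint printed after deployment. The result could then be passed to `save_response_to_s3(boto3.client("s3"), "my-review-bucket", prompt, result)`, with a bucket name of your choosing.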

Based on the prompt, the requested information is returned, and the source citation is included in the response.
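In the SDK, citations come back in the `citations` field of the `retrieve_and_generate` response. Each citation lists `retrievedReferences` with the excerpt text and its location. This sketch flattens a response into the answer plus its cited excerpts, for example to return them from the API. It reads fields defensively because not every source type reports an S3 URI. The function name and output shape are hypothetical.

```python
def extract_answer_and_sources(response: dict) -> dict:
    """Flatten a retrieve_and_generate response into the answer and the cited excerpts."""
    sources = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            location = ref.get("location", {})
            sources.append(
                {
                    "excerpt": ref.get("content", {}).get("text", ""),
                    # S3 documents report their URI here; other source types may not.
                    "uri": location.get("s3Location", {}).get("uri"),
                }
            )
    return {"answer": response.get("output", {}).get("text", ""), "sources": sources}
```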

With these steps, we have built a serverless GenAI solution that implements a single-document knowledge base using Amazon Bedrock, Lambda, and API Gateway. Python and Boto3 were used to invoke the Bedrock API with Anthropic Claude 3 Sonnet.

As GenAI solutions keep improving, they will change how we work and bring real benefits to many industries. This solution shows how AI can solve real-world problems and create new opportunities for innovation.

Thanks for reading!

Click here to watch the YouTube video for this solution.

𝒢𝒾𝓇𝒾𝓈𝒽 ℬ𝒽𝒶𝓉𝒾𝒶

𝘈𝘞𝘚 𝘊𝘦𝘳𝘵𝘪𝘧𝘪𝘦𝘥 𝘚𝘰𝘭𝘶𝘵𝘪𝘰𝘯 𝘈𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵 & 𝘋𝘦𝘷𝘦𝘭𝘰𝘱𝘦𝘳 𝘈𝘴𝘴𝘰𝘤𝘪𝘢𝘵𝘦

𝘊𝘭𝘰𝘶𝘥 𝘛𝘦𝘤𝘩𝘯𝘰𝘭𝘰𝘨𝘺 𝘌𝘯𝘵𝘩𝘶𝘴𝘪𝘢𝘴𝘵