How I Built a Video Chat App with Almost Zero Code Writing

Building a GenAI-based app that summarizes videos and makes them conversational, with me writing almost no code.

Ebrahim (EB) Khiyami

Amazon Employee

Published Jun 7, 2024

Last Modified Jun 13, 2024

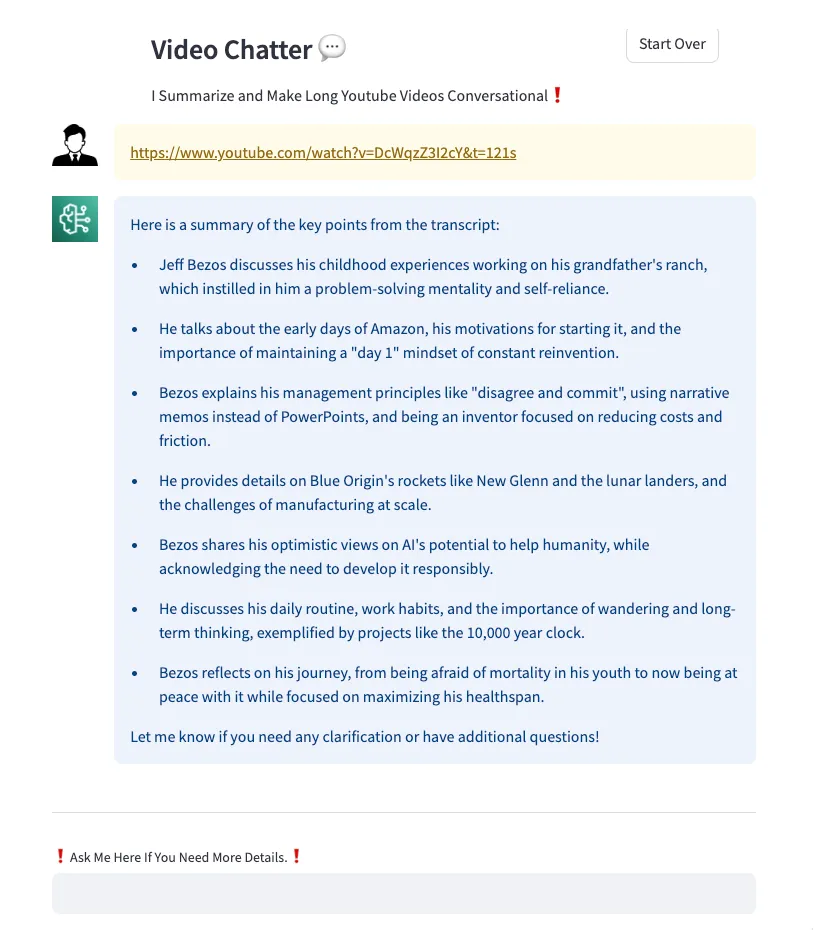

In this blog post, I will show how I built a simple app that summarizes long YouTube videos and makes them conversational. I consume a lot of YouTube videos for educational, entertainment, and work purposes. Sometimes I don’t have time to watch the whole video, but I really need to know the key parts mentioned in the video so that I can expand on specific parts that interest me.

You can try it here.

For example, I used this app to get a summary of the 1.5-hour keynote presented by Matt Wood, VP of AI at AWS, at the LA Summit in less than a minute, and then I could ask questions about the key points from the talk that interested me.

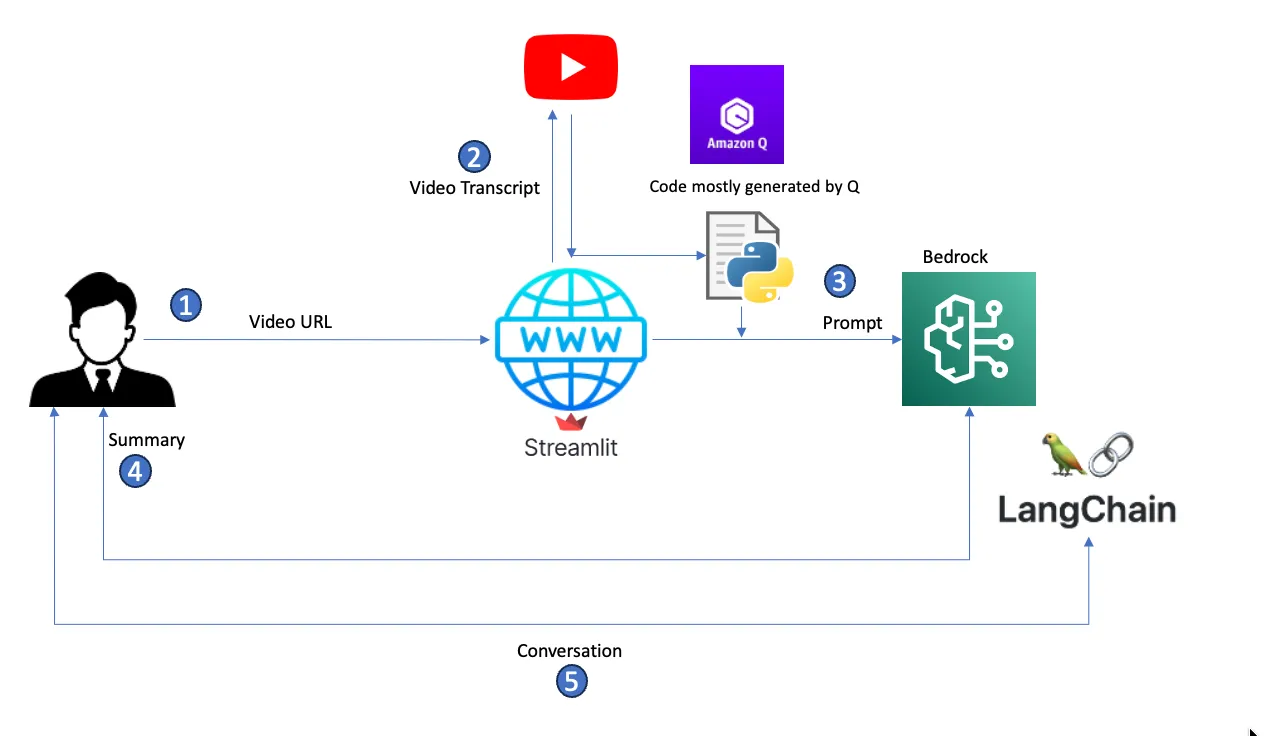

- A user enters a YouTube video URL to summarize.

- The Streamlit app takes the URL, parses it to get the video ID, and calls the YouTube API to get the video transcript.

- The app builds a prompt from the transcript and passes it to Bedrock for summarization using a predefined model.

- Bedrock summarizes the transcript based on the generated prompt and returns the summary to the user.

- If users have follow-up questions, the app builds a conversation memory using LangChain and answers them based on content from the original transcript.

If you’re not familiar with the AWS Gen AI stack, I recommend you study it and come back. I built a good foundation of these components before I decided to get my hands dirty. Here is a good start.

- Set up access to Amazon Bedrock in the AWS region us-east-1 and set up model access for Anthropic Claude 3. I’m using this LLM because it has a context window of 200k tokens, which allows me to fit transcripts of videos up to 3 hours long in the prompt without the complexity of adding a knowledge base. Check here for details.

- Set up the Amazon Q Developer extension with your favorite code IDE. Mine is VS Code. I used this video.

- Get familiar with the Streamlit framework for deploying your Python application. It literally took a minute to publish mine.
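To make the context-window point above concrete, here is a rough sketch of a check that could run before a transcript is sent to the model. The 4-characters-per-token ratio and the reserved headroom are assumptions chosen for illustration, not measured values, and the function names are hypothetical. A real tokenizer would give different counts.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # Claude 3 context window mentioned above
CHARS_PER_TOKEN = 4              # assumption: rough average for English text
RESERVED_TOKENS = 4_000          # assumption: headroom for instructions and the reply


def estimate_tokens(text: str) -> int:
    """Rough token estimate from character count (not a real tokenizer)."""
    return len(text) // CHARS_PER_TOKEN + 1


def fits_in_context(transcript: str) -> bool:
    """True if the transcript likely fits in the prompt with headroom to spare."""
    return estimate_tokens(transcript) <= CONTEXT_WINDOW_TOKENS - RESERVED_TOKENS
```

If the check fails, the app could warn the user instead of sending a prompt the model would reject.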

As a solution architect, it’s really important for me to understand how things work together and how changing pieces or using different practices may impact the solution as a whole. I have a good understanding of this part for Gen AI applications. However, writing the code to make things work is not my area of expertise, so as part of my learning process, I wanted to test how much Amazon Q could reduce the effort of writing the actual code for the app. With several iterations and the many examples other folks have shared online, it should not be that hard.

The code has three components:

- bedrock.py: The code module that deals with the LLM, creates Bedrock runtime, summarizes, and deals with chat history using LangChain.

- utilities.py: A module that retrieves video transcripts and builds a prompt from them.

- app.py: The main app that interacts with users. It takes the video URL, returns the summary, allows chat, and deals with user sessions.

[1] Bedrock Module

I asked Amazon Q this question:

The generated example worked fine after minor adjustments to run inside the Streamlit environment rather than the plain Python environment the generated code originally targeted. Here is what it looks like.
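The following is a minimal sketch of what a module like this might contain, not the exact code from the app. It calls Bedrock directly through boto3 and keeps the chat history in a plain list instead of using LangChain's memory classes, which keeps the example short. The model ID is an assumption (the post only says "Anthropic Claude 3"), and the helper names `make_client`, `build_body`, and `chat` are made up for illustration.

```python
import json

# Assumption: the Sonnet variant of Claude 3; swap in the model you enabled.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def make_client(region="us-east-1"):
    """Create a Bedrock runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers below work without it

    return boto3.client("bedrock-runtime", region_name=region)


def build_body(messages, max_tokens=1024):
    """Serialize a request in the Anthropic Messages format used on Bedrock."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "messages": messages,
        }
    )


def chat(client, history, user_text):
    """Send user_text along with the prior turns and record both new turns."""
    history.append({"role": "user", "content": user_text})
    response = client.invoke_model(modelId=MODEL_ID, body=build_body(history))
    payload = json.loads(response["body"].read())
    reply = payload["content"][0]["text"]
    history.append({"role": "assistant", "content": reply})
    return reply
```

With this shape, the first call would carry the summarization prompt that contains the transcript, and every follow-up question reuses the same `history` list, so the model still sees the transcript when it answers.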

[2] Utility Functions

I asked Q to build three functions:

- Get the YouTube ID from a YouTube URL

- Get the transcript of a video based on its ID

- Generate a prompt from the transcript

After a few adjustments, it looks like the following:
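Below is a minimal sketch of the first and third helpers, not the app's actual code. It assumes the transcript arrives as a list of segments that each carry a `text` field. Fetching those segments from YouTube is left out here. The function names and the prompt wording are illustrative choices.

```python
from urllib.parse import urlparse, parse_qs

YOUTUBE_HOSTS = {"youtube.com", "www.youtube.com", "m.youtube.com"}


def get_video_id(url: str):
    """Return the video ID from common YouTube URL shapes, or None."""
    parsed = urlparse(url.strip())
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links keep the ID in the path: https://youtu.be/<id>
        return parsed.path.lstrip("/").split("/")[0] or None
    if host in YOUTUBE_HOSTS:
        if parsed.path == "/watch":
            return parse_qs(parsed.query).get("v", [None])[0]
        for prefix in ("/embed/", "/shorts/", "/live/"):
            if parsed.path.startswith(prefix):
                return parsed.path[len(prefix):].split("/")[0] or None
    return None


def transcript_to_text(segments):
    """Join transcript segments into one string, skipping empty ones."""
    return " ".join(s["text"].strip() for s in segments if s["text"].strip())


def build_prompt(transcript_text: str) -> str:
    """Wrap the transcript in a summarization instruction (wording is illustrative)."""
    return (
        "Summarize the key points of the following video transcript "
        "as a short bulleted list.\n\n"
        f"<transcript>\n{transcript_text}\n</transcript>"
    )
```

Note that `get_video_id` expects a full URL with a scheme. Input such as `youtu.be/abc` without `https://` returns `None`.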

[3] Streamlit App

The third part is app.py, the main Streamlit app that lets users enter a video URL to summarize and then chat with the video. A lot of the code in this file has to do with user sessions, UI, and how you personally like to present things in your app. The main function to look at is handle_input(), which detects the user input (whether it’s a URL to summarize or a follow-up question), returns output (a summary or an answer in a chat), and resets user states accordingly.

The easiest way to get this running and shared is by using Streamlit. These guys make it really easy to deploy Gen AI apps quickly. I used the deployment guide here to deploy my app.
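The branching that handle_input() performs can be sketched roughly as below. This is an illustration under assumptions, not the app's real function: the signature is invented, `state` stands in for a dict-like session store such as Streamlit's `st.session_state`, and `summarize` and `answer` are hypothetical callables wrapping the Bedrock and utility modules.

```python
def handle_input(user_input, state, summarize, answer):
    """Route one user message and update the session state in place.

    state: dict-like session store (for example st.session_state).
    summarize(url) -> (transcript, summary)
    answer(transcript, history, question) -> str
    """
    text = user_input.strip()
    if text.lower().startswith(("http://", "https://")):
        # New video: reset the conversation so earlier context does not leak in.
        transcript, summary = summarize(text)
        state["transcript"] = transcript
        state["history"] = [("assistant", summary)]
        return summary
    if not state.get("transcript"):
        # A question arrived before any video was summarized.
        return "Please enter a YouTube URL first."
    reply = answer(state["transcript"], state["history"], text)
    state["history"] += [("user", text), ("assistant", reply)]
    return reply
```

Keeping the routing free of UI calls like this makes it easy to try out with a plain dict before wiring it into the Streamlit widgets.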

I was really impressed by how easily I could build this app. It took me a few weeks because I only get to work on it for an hour or two every week, but without the AI assistance, it would have taken way longer. In this blog post, I showed you how to use a combination of Amazon Q, Amazon Bedrock, and Streamlit to build a simple app that summarizes YouTube videos and makes them conversational. I also showed how you can use Amazon Q to quickly generate working code for your POCs. The total time it took me to build this was about 5-6 hours, including this blog post (which of course uses AI as well).

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.