How do you choose the foundation model for your Generative AI App — like your car?

Choosing the right foundation model for your Generative AI app is like picking the perfect car - focus on features, price, and user experience, not the specific model. Learn how to make the best foundation model choices in this article.

Stefan

Amazon Employee

Published Jun 7, 2024

Last Modified Jun 11, 2024

As the world of generative AI continues to evolve at a rapid pace, with new models and capabilities emerging seemingly every week, it can be tempting to always chase the latest and greatest. However, when it comes to building practical generative AI applications, a more thoughtful approach is often required. Much like how car buyers are more interested in features like entertainment systems and fuel efficiency than the technical details of the engine, users of generative AI applications care more about the overall functionality, cost, and user experience than the specific foundation model being used under the hood. In this blog post, we’ll explore why it’s important to look beyond just the raw performance of foundation models and focus on engineering a well-architected, cost-effective generative AI solution that truly meets the needs of your customers.

Before we dive into the specifics of choosing the right foundation model, let’s explore an analogy that may provide some useful insights — the world of cars. Now, I’ll admit that I’m no automotive expert, but I find that car analogies can be surprisingly effective when it comes to understanding complex technology decisions. When you think about a car, you likely don’t focus on the intricate details of the engine, transmission, or other internal components. Instead, you’re more interested in the overall product — the shape, the ability to get you from point A to B comfortably and efficiently, and the status or impression it conveys. The underlying engineering is important, of course, but it’s not necessarily what drives most consumers’ purchasing decisions. This parallels the way many users approach generative AI applications. They care more about the functionality, cost, and user experience than the specific foundation model powering the system under the hood. By keeping this user-centric perspective in mind, we can better understand how to approach the challenge of choosing the right foundation model for our own generative AI projects.

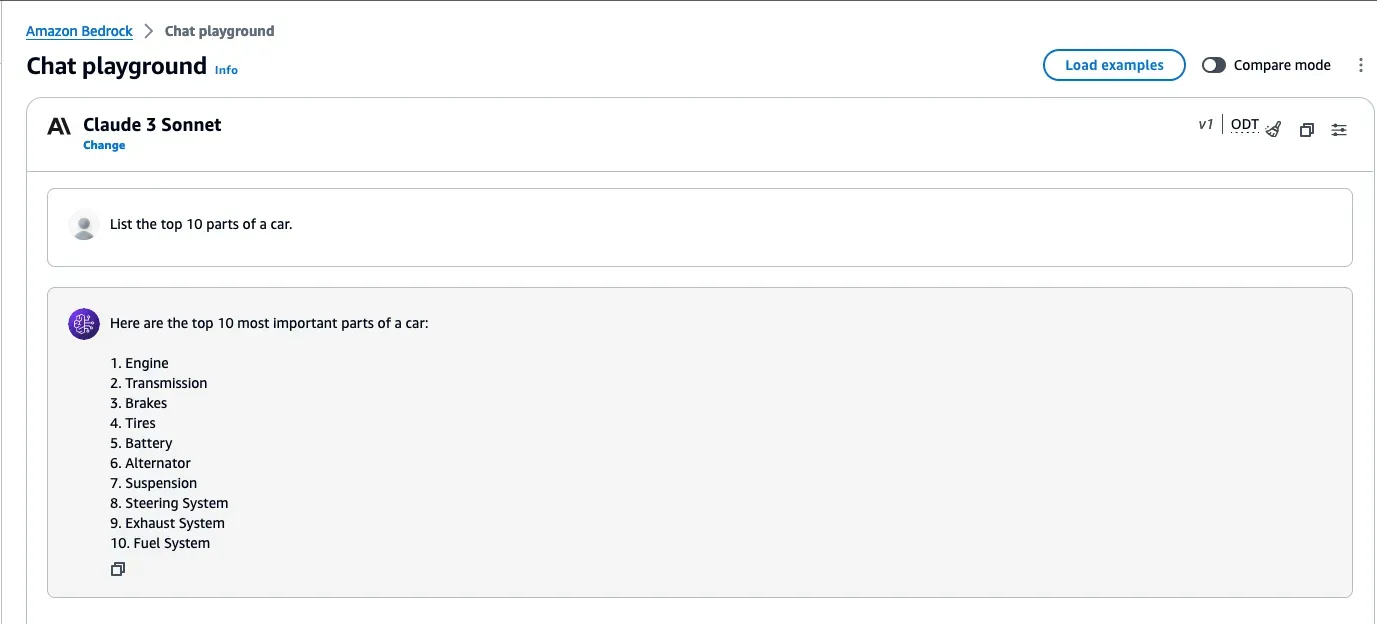

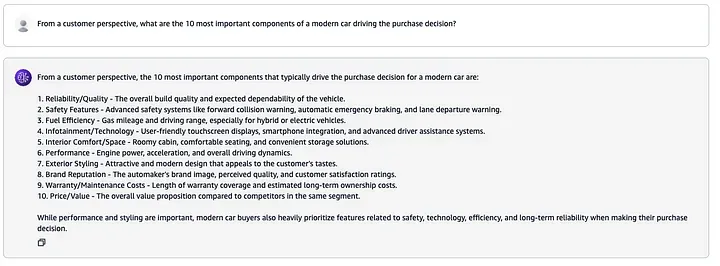

As I’m not an expert in cars, I asked a large language model (LLM), in this case Anthropic’s Claude 3 Sonnet, to tell me more about cars (see image). I accessed the model via Amazon Bedrock. It came up with a list of parts and subsystems like the engine, brakes, and other technical components, which is an engineering-focused approach. However, from my personal experience, the majority of people are less interested in those intricate details and more focused on the overall product.

So why do we do this analysis? Well, the point here is that the engine and other crucial systems like the brakes are not top of mind for consumers and buyers of cars. How is that even possible, as those are crucial for the core functionality of cars? They are commodities. Customers can safely assume that the engine and brakes of modern cars are good and fulfill their purpose. There is little differentiation here, if we exclude the small niche of motor enthusiasts.

This is a trend that has long been identified by the car industry, leading to platform approaches where the platform is providing the commodity functionality, and the specifics of different car models (which can be as thin as a nice-looking exterior or interior) are built on top of it. Gregor Hohpe talks in more detail about platform strategies in his book “Platform Strategy”, which is a highly recommended read.

Before we go back to our topic of foundation models and generative AI, let’s explore cars a little bit more. From what we’ve discussed so far, we can see that just focusing on the engine doesn’t make too much sense. Both from the customer perspective, as we’ve already seen, and from the engineering perspective. There is little value in having a strong engine without equally good brakes and a chassis that enables the driver to utilize the power of the engine. The list goes on. In the end, a car needs a well-engineered overall system in the first place.

Another observation on cars is that there are many different cars built for different purposes. Not all have the strongest engines. Why is that? Well, if we go back to the customer’s view on cars, often the strongest engine is just too expensive for the intended use, burns too much fuel, and may not be necessary for the most suitable car for a particular purpose. Parking a car for a quick shopping tour in a city comes to mind, although for me that is only a second-hand experience.

Indeed, I think looking at cars can tell us a thing or two about Generative AI. In the end, users are not very interested in the model itself.

OK, enough about cars for the moment. Let’s get back to Generative AI. Technologists, the large and growing number of Generative AI experts, and society as a whole discuss Foundation Models a lot, speculating about which one is the strongest and debating benchmarks. All of this is of little interest to consumers of Generative AI applications. For them, it is important that the application provides the required functionality, at an affordable price, and with the necessary security and data privacy. Which specific model is used within the application is less interesting, as are any hidden capabilities of that model that the supported use case does not utilize. Sound familiar? Yes, it is like the engine in a car, isn’t it?

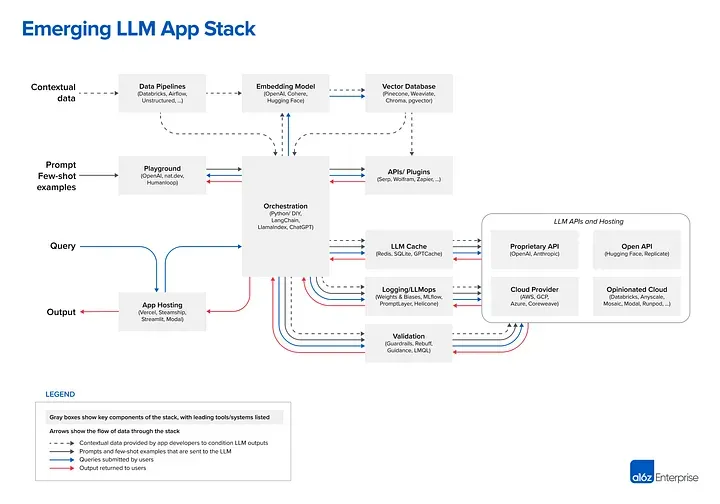

And indeed, just as a car is not just the engine, a Generative AI application is not just a model. It needs a solid overall architecture. To illustrate this, I use the Generative AI blueprint architecture provided by Andreessen Horowitz in their blog post.

Here I only want to highlight that the Andreessen Horowitz architecture contains many different components besides models (LLMs in the diagram). I don’t want to dive into further aspects of the architecture, but it clearly shows that the architecture of a Generative AI application is much more than just the models. Those other components are equally important. The architecture and engineering of the application as a whole are crucial for the customer of the application. And these are not new concepts: they are standard components and patterns that we already used before the rise of Generative AI.

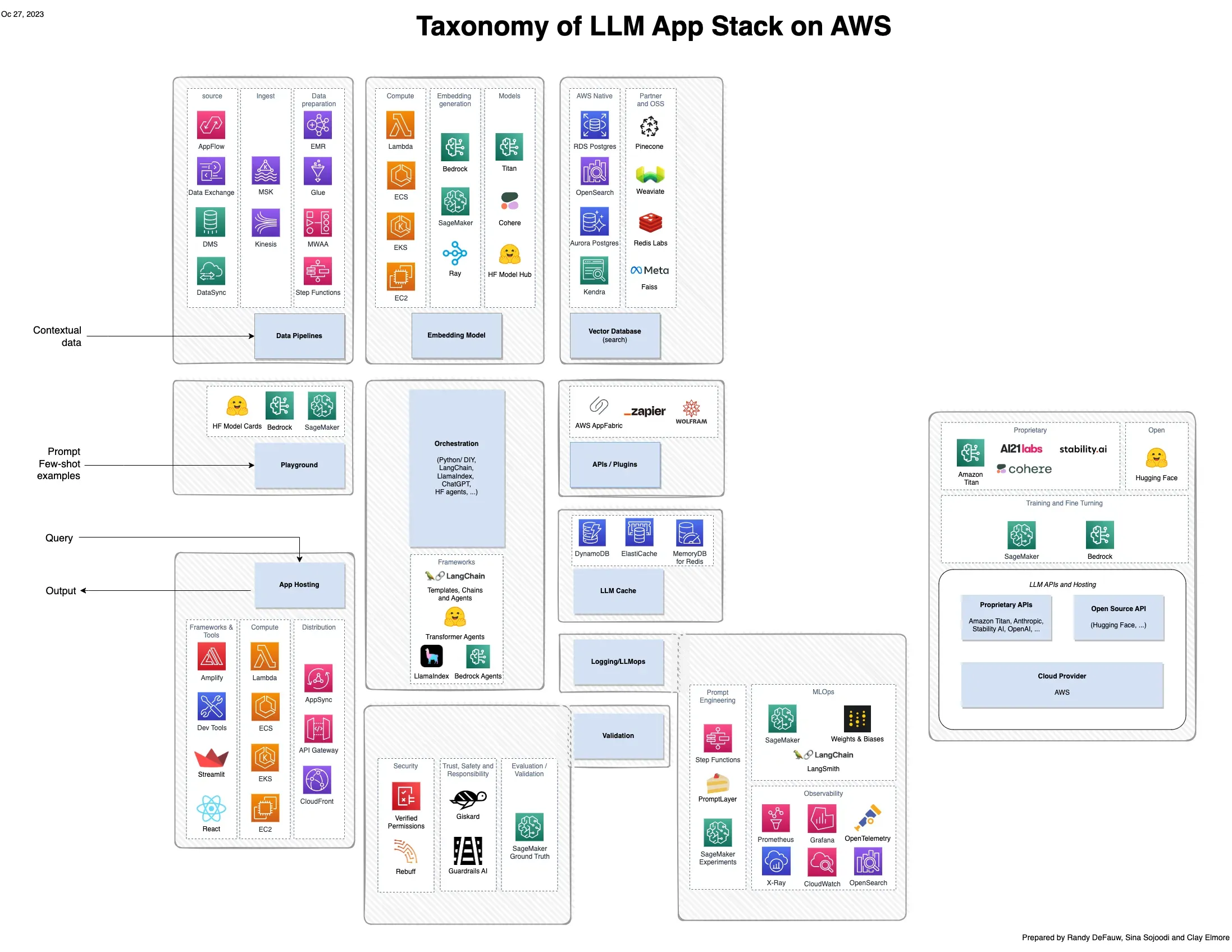

Colleagues of mine have made the effort to map those to services provided by AWS. The result is a lot of services composing the overall architecture, with just 2 out of maybe 30ish being LLM-specific (source: AWS Community Blog by Randy DeFauw).

What does this mean for us? If you’re building a Generative AI application, don’t forget what you have learned and achieved already. Using a good overall architecture and building in a well-architected way remains crucial. Don’t put all your priority on choosing the LLM, just as you don’t choose your car by the engine alone.

Always aim for the strongest and then I’m good. End of story. Well, hmm, no. While it makes good sense to choose a strong model when you explore a use case for feasibility, you need to think about which model is the right one at the latest once you have established that your use case can be implemented on the back of Generative AI. The reasons for that are manifold.

First of all, as we see from the car analogy, cost is a major driver for customers. Choosing a cost-effective model for your use case often makes the difference between a red and a green business case. As cost is a proxy for sustainability, a cheaper model is usually the more sustainable choice as well. In essence, you need to search for the “Goldilocks” fit between your use case and the model you choose: the model which is just good enough for the use case at hand. Otherwise, you pay for more model capability than the use case actually requires, and the additional cost doesn’t create any value on your customer side.
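The “Goldilocks” search can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the model names, quality scores, and prices below are invented, and `quality_score` stands for whatever pass rate you measure with your own test cases for your own use case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str                    # hypothetical model name
    quality_score: float         # share of your own test cases passed, 0.0 to 1.0
    cost_per_1k_requests: float  # hypothetical cost in USD


def pick_goldilocks(options, required_quality):
    """Return the cheapest model whose measured quality meets the bar."""
    good_enough = [m for m in options if m.quality_score >= required_quality]
    if not good_enough:
        raise ValueError("no candidate meets the required quality")
    return min(good_enough, key=lambda m: m.cost_per_1k_requests)


# Invented numbers, for illustration only.
candidates = [
    ModelOption("small-model", 0.86, 0.40),
    ModelOption("medium-model", 0.93, 3.00),
    ModelOption("large-model", 0.97, 15.00),
]

choice = pick_goldilocks(candidates, required_quality=0.90)
print(choice.name)
```

With a required quality of 0.90, the sketch skips the strongest model and picks the cheaper one that already clears the bar. The important design choice is that the bar comes from the use case, not from a public benchmark.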

Going back to the car analogy, it’s like driving a sports car with a very strong engine to the grocery store, held back by speed limits all the way, and then having to limit your shopping because the trunk is way too small for your family groceries. Doesn’t make sense, does it?

Yes and no. Obviously there is a lot of progress in the field of Generative AI. Literally every week there are new models and model versions which provide astonishing results, with capabilities like multi-modality, improved reasoning, and many more. But two observations come into play.

First, it takes time to absorb the new capabilities of the models, understand possible use cases, and then engineer (possibly on both the model and application side) a solution for an additional use case. Second, capabilities that are top-notch today tend to turn into commodities across model providers in less and less time. We started with cycles of 1–2 years, and now see new best models every month.

If we take these observations into account, we see that a lot of the capabilities we can use commercially today are indeed already kind of commoditized. We also need to keep in mind that Generative AI is not a product in itself. As such, it doesn’t directly serve a customer need. It can, however, be part of a solution to a customer need.

So it makes sense to step back a little from the Generative AI hype and start again by thinking about customers and their needs. Working backwards from those, we as technologists can provide fantastic solutions, also using this exciting new tool of Generative AI, and figure out all the beautiful things we can do with it.

No, actually not. In at least two aspects the car analogy falls short, at least to my limited knowledge; as you remember, I am anything but a car expert.

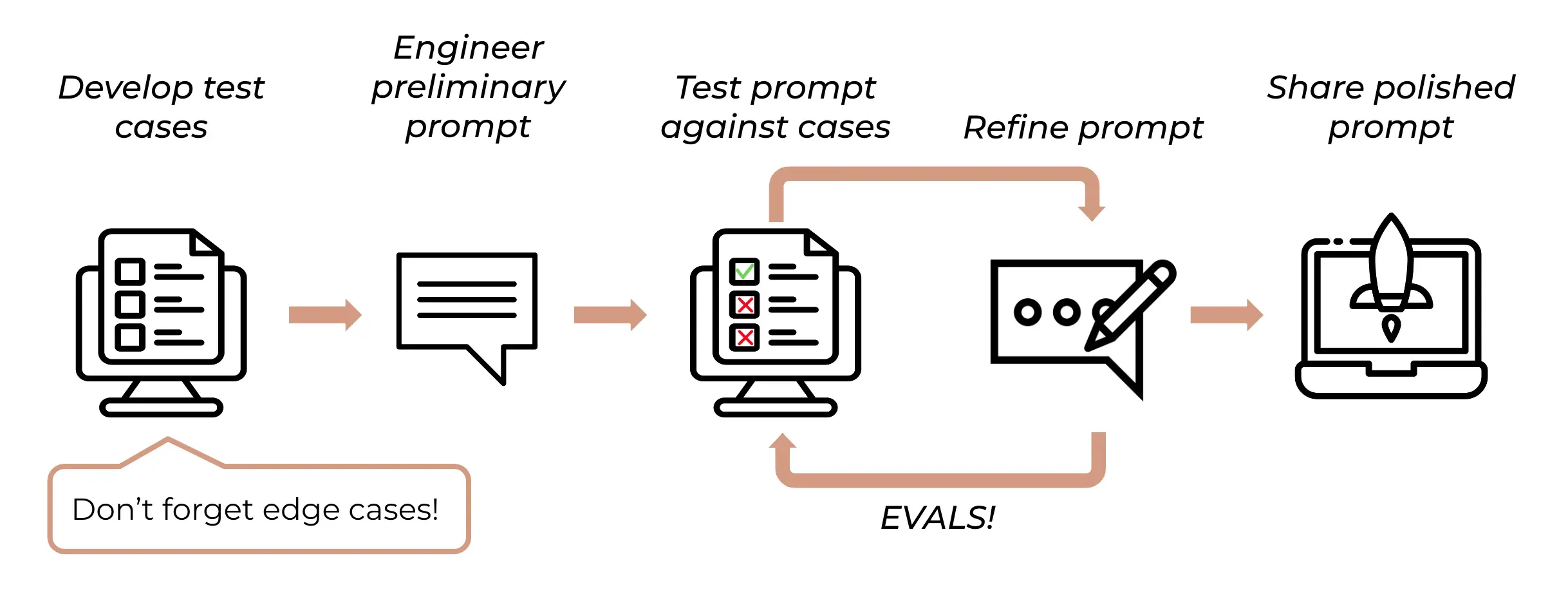

Let’s start with the first one. We live in a time where, as mentioned above, we see multiple new models and model versions every week, with increasing performance and decreasing cost. This is different from cars. The evolution of engines (leaving the disruption by electric cars aside for a moment) is much, much slower. A new engine might get added to the car manufacturer’s platform, but no mainstream car would get its engine replaced in favor of a new, more performant one. But cars are hardware, while Generative AI applications are software. In software we can do updates, and we need to, as the progress in Generative AI is so fast. We need to be prepared to swap the model we use in our application for another, more performant and cheaper one, to keep our customers happy and stay competitive on price. Here it is key that we have an evolvable architecture for our application. Services like Amazon Bedrock, which provides managed access to a number of different models via an API, help a lot here. They enable you to adopt different models or newer versions with minimal effort. This got even easier with APIs that abstract from model specifics where suitable, like the new Converse API. But be careful: to really make use of those technical capabilities, you need to have not just your architecture prepared, but also your processes. How do you know that the new model, or just a new model version, will work for your customers in production? You need a test-driven approach, like we all follow in software engineering, don’t we? Anthropic talks about this in their documentation, which you can read here: https://docs.anthropic.com/en/docs/prompt-engineering#the-prompt-development-lifecycle.
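To make the idea of a swappable model plus a test-driven switch a bit more concrete, here is a minimal Python sketch. It assumes the request and response shape of the Bedrock Converse API as exposed by boto3 (`client.converse(...)`). The `FakeBedrockClient`, the test case, and the keyword check are my own simplifications so that the example runs offline; they are not part of any AWS SDK. The model IDs are only examples, so check which ones are available in your account and region.

```python
def ask(client, model_id, prompt):
    """Send one user prompt via the Converse API shape and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 256, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]


def passes_regression(client, model_id, test_cases):
    """True only if every answer contains its expected keyword (a simplistic check)."""
    return all(
        expected.lower() in ask(client, model_id, prompt).lower()
        for prompt, expected in test_cases
    )


class FakeBedrockClient:
    """Offline stand-in (assumption for this demo) mimicking the Converse response shape."""

    def __init__(self, canned_answers):
        self.canned_answers = canned_answers  # maps model_id to a fixed reply

    def converse(self, modelId, messages, inferenceConfig=None):
        text = self.canned_answers[modelId]
        return {"output": {"message": {"role": "assistant", "content": [{"text": text}]}}}


TEST_CASES = [("What is the capital of France?", "Paris")]

CURRENT_MODEL = "anthropic.claude-3-sonnet-20240229-v1:0"
CANDIDATE_MODEL = "anthropic.claude-3-haiku-20240307-v1:0"

client = FakeBedrockClient({
    CURRENT_MODEL: "The capital of France is Paris.",
    CANDIDATE_MODEL: "Paris.",
})

# Switch only if the candidate passes the same tests as the current model.
if passes_regression(client, CANDIDATE_MODEL, TEST_CASES):
    active_model = CANDIDATE_MODEL
else:
    active_model = CURRENT_MODEL
print(active_model)
```

Against the real service you would pass `boto3.client("bedrock-runtime")` instead of the fake client. Because the model ID is the only thing that changes between calls, switching models becomes a configuration change that is guarded by your own test cases. A real test suite would of course need far more cases and a better check than keyword matching.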

The second difference between cars and Generative AI applications, at least in the analogy I have chosen, is that a Generative AI application can make use of multiple models. Hybrid cars could be an example of cars having multiple engines, but in general cars only have a very limited number of engines, usually just one, because those only provide one functionality: to move the car. In contrast, a Generative AI application can serve different use cases. An easy example is a social media post generator. A social media post could consist of an image and a text. For sure I can take one very powerful model with multi-modal capabilities to provide both, but maybe it’s more cost-effective to use specific models for generating the text and the image. If we look deeper into the different functionality of a Generative AI application, we might come up with an even larger number of use cases, each of which could be best served by a different model. Essentially, we can search for the Goldilocks of each of those. Rather than using one big, very costly foundation model for every use case, we can compose a solution from smaller, cheaper ones. Here again it pays off if you have the ability to do so easily, for example if you consume models through a service like Amazon Bedrock.
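The social media post example could be sketched like this in Python. Everything here is hypothetical: the model identifiers are placeholders, and `fake_invoke` only echoes which model would be called, so the routing logic can be run without any model access.

```python
from typing import Callable, Dict, Tuple

# Hypothetical model identifiers and prompt templates, one entry per part of the post.
TASKS: Dict[str, Tuple[str, str]] = {
    "text": ("small-text-model", "Write a short caption about {topic}."),
    "image": ("image-generation-model", "Create an image showing {topic}."),
}


def generate_post(topic: str, invoke: Callable[[str, str], str]) -> Dict[str, str]:
    """Build a post by sending each part to the model chosen for that part."""
    return {
        part: invoke(model_id, template.format(topic=topic))
        for part, (model_id, template) in TASKS.items()
    }


def fake_invoke(model_id: str, prompt: str) -> str:
    """Offline stand-in for a real model call; it only echoes the routing decision."""
    return f"[{model_id}] {prompt}"


post = generate_post("electric cars", fake_invoke)
print(post["text"])
print(post["image"])
```

Keeping the mapping from use case to model in one place means each part of the application can get its own “Goldilocks” model, and each can be replaced independently later.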

And now have a safe ride and go build your Generative AI applications!

This blog post has been created with the help of Anthropic’s Claude 3 models (Haiku and Sonnet).

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.