Streamlining Large Language Model Interactions with Amazon Bedrock Converse API

Amazon Bedrock's Converse API provides a consistent interface for invoking various large language models, eliminating the need for complex helper functions. Code examples show how much simpler it is than the previous approach, which required a separate integration for each model. A demo uses Claude 3 to describe images, showing how easily this unified API puts the capabilities of large models to work.

Haowen Huang

Amazon Employee

Published Jun 11, 2024

Amazon Bedrock has introduced the Converse API, a game-changing tool that streamlines interactions with its AI models by providing a consistent interface. The API lets developers invoke different Amazon Bedrock models without accounting for model-specific parameters or implementations. Because the experience is the same across models, developers can write code once and use it with the various models available on Amazon Bedrock, in the AWS Regions where the service is offered.
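To make the "write once" idea concrete, here is a minimal sketch. It assumes a boto3 `bedrock-runtime` client from a boto3 release recent enough to include the `converse` method. Every model receives the same request shape, and only `modelId` changes. The helper names `ask` and `ask_many` are hypothetical, chosen for illustration.

```python
from typing import Any


def ask(client: Any, model_id: str, prompt: str, max_tokens: int = 512) -> str:
    """Send one user turn to a Converse-compatible model and return its text reply.

    `client` is assumed to be a boto3 "bedrock-runtime" client, or any object
    exposing a compatible `converse` method.
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        # Inference parameters use the same names for every model.
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.5},
    )
    # The reply sits at the same path in the response, whichever provider served it.
    return response["output"]["message"]["content"][0]["text"]


def ask_many(client: Any, model_ids: list[str], prompt: str) -> dict[str, str]:
    """Ask several models the same question with identical request code."""
    return {model_id: ask(client, model_id, prompt) for model_id in model_ids}
```

For example, with credentials configured and model access granted, `ask_many(boto3.client("bedrock-runtime", region_name="us-east-1"), ["anthropic.claude-3-haiku-20240307-v1:0", "mistral.mistral-large-2402-v1:0"], "What is Amazon Bedrock?")` would query models from two providers without any provider-specific branches. The model IDs and Region are assumptions; check which models your account can use in your Region.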

The models and model features that the Converse API supports are listed at the following link. Check it regularly, as more models will become available through the Converse API:

To help developers quickly understand the new Converse API, I'll start with a code example from before the Converse API was released. Then I'll show sample Converse API code taken directly from the official AWS website, so you can see how significantly the Converse API streamlines AI model interactions. Finally, I'll highlight the vision feature of Claude 3, demonstrating how easily you can use the Converse API to unleash the potential of large language models on Amazon Bedrock.

In the past, developers had to write complex helper functions to unify the input and output formats across different AI models. For example, at an Amazon Bedrock development workshop in Hong Kong, a 116-line helper function was required to call various foundation models in a consistent way. I’ll show the code below.

The code is a Python function that invokes language models from several providers (Anthropic, Mistral, AI21, Amazon, Cohere, and Meta) through Amazon Bedrock. The `invoke_model` function takes input parameters such as the prompt, model name, temperature, top-k, top-p, and stop sequences, and returns the text generated by the specified model. It uses the `boto3` library to call Amazon Bedrock and builds the request body according to each provider's API requirements. The code also includes a `main` function that sets up the Amazon Bedrock Runtime client, specifies a model and prompt, calls `invoke_model` to generate the output text, and prints the result.
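As a rough sketch of this pattern (not the workshop's actual code), the following assumes the native request and response formats that Amazon Bedrock documented in 2024 for these providers' text models: Anthropic Messages, Mistral, AI21 Jurassic-2, Amazon Titan Text, Cohere Command, and Meta Llama. The names `build_native_body`, `extract_text`, and `invoke_native` are hypothetical. Even with only three parameters, each provider needs its own request branch and its own response-parsing branch.

```python
import json
from typing import Any


def build_native_body(provider: str, prompt: str, max_tokens: int = 512,
                      temperature: float = 0.5, top_p: float = 0.9) -> dict:
    """Build a provider-specific request body (hypothetical helper).

    The same three settings need different names and nesting per provider.
    """
    if provider == "anthropic":
        return {"anthropic_version": "bedrock-2023-05-31", "max_tokens": max_tokens,
                "messages": [{"role": "user", "content": prompt}],
                "temperature": temperature, "top_p": top_p}
    if provider == "mistral":
        # Mistral instruct models expect their own prompt template.
        return {"prompt": f"<s>[INST] {prompt} [/INST]", "max_tokens": max_tokens,
                "temperature": temperature, "top_p": top_p}
    if provider == "ai21":
        return {"prompt": prompt, "maxTokens": max_tokens,
                "temperature": temperature, "topP": top_p}
    if provider == "amazon":
        return {"inputText": prompt,
                "textGenerationConfig": {"maxTokenCount": max_tokens,
                                         "temperature": temperature, "topP": top_p}}
    if provider == "cohere":
        return {"prompt": prompt, "max_tokens": max_tokens,
                "temperature": temperature, "p": top_p}
    if provider == "meta":
        # Llama's chat template is omitted here for brevity.
        return {"prompt": prompt, "max_gen_len": max_tokens,
                "temperature": temperature, "top_p": top_p}
    raise ValueError(f"Unsupported provider: {provider}")


def extract_text(provider: str, body: dict) -> str:
    """Pull the generated text out of a provider-specific response body."""
    if provider == "anthropic":
        return body["content"][0]["text"]
    if provider == "mistral":
        return body["outputs"][0]["text"]
    if provider == "ai21":
        return body["completions"][0]["data"]["text"]
    if provider == "amazon":
        return body["results"][0]["outputText"]
    if provider == "cohere":
        return body["generations"][0]["text"]
    if provider == "meta":
        return body["generation"]
    raise ValueError(f"Unsupported provider: {provider}")


def invoke_native(client: Any, model_id: str, prompt: str, **params: Any) -> str:
    """Call InvokeModel with a native body; `client` is a bedrock-runtime client."""
    # Assumes on-demand model IDs of the form "<provider>.<model>",
    # e.g. "cohere.command-text-v14".
    provider = model_id.split(".")[0]
    body = build_native_body(provider, prompt, **params)
    response = client.invoke_model(modelId=model_id, body=json.dumps(body))
    # The response body is a stream of JSON bytes.
    return extract_text(provider, json.loads(response["body"].read()))
```

Top-k and stop sequences are left out to keep the sketch short. Each would add yet another set of provider-specific names and restrictions, which is how such helpers grow past a hundred lines.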

Those 116 lines implement the unified interface for only these six providers. As the number of large models that need to be unified increases, the amount of code will keep growing.

For the details of this 116-line code sample, which invokes different language models through one function, refer to the following link:

The following code snippet from the official AWS website demonstrates how simple it is to call the Converse API operation with a model on Amazon Bedrock.
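Here is a minimal sketch in the spirit of that sample, not a copy of it. It assumes a `bedrock-runtime` client with the `converse` method and a model such as Claude 3 Sonnet that accepts system prompts and Anthropic's `top_k` field. Not every model accepts system prompts, so check the supported-features table. Common inference parameters go in `inferenceConfig`, while model-specific ones go in `additionalModelRequestFields`, and every response reports `usage` and `stopReason` in the same shape. The names `generate_conversation` and `chat` and the parameter values are illustrative assumptions.

```python
import logging
from typing import Any

logger = logging.getLogger(__name__)


def generate_conversation(client: Any, model_id: str,
                          system_prompts: list[dict], messages: list[dict]) -> dict:
    """Send the conversation so far to a model through the Converse API."""
    response = client.converse(
        modelId=model_id,
        messages=messages,
        system=system_prompts,
        # Common inference parameters share one name across models.
        inferenceConfig={"temperature": 0.5},
        # Model-specific parameters (here Anthropic's top_k) go in a separate field.
        additionalModelRequestFields={"top_k": 200},
    )
    usage = response["usage"]
    logger.info("Input tokens: %s, output tokens: %s, total tokens: %s",
                usage["inputTokens"], usage["outputTokens"], usage["totalTokens"])
    logger.info("Stop reason: %s", response["stopReason"])
    return response


def chat(client: Any, model_id: str, system_text: str, user_turns: list[str]) -> list[dict]:
    """Run a multi-turn conversation and return the full message history."""
    system_prompts = [{"text": system_text}]
    messages: list[dict] = []
    for turn in user_turns:
        messages.append({"role": "user", "content": [{"text": turn}]})
        response = generate_conversation(client, model_id, system_prompts, messages)
        # Keep the assistant reply in the history so the next turn has context.
        messages.append(response["output"]["message"])
    return messages
```

With a real client, `chat(boto3.client("bedrock-runtime"), "anthropic.claude-3-sonnet-20240229-v1:0", "You are a concise assistant.", ["Name one landmark in Hong Kong.", "Tell me more about it."])` would run a two-turn conversation. Call `logging.basicConfig(level=logging.INFO)` first to see the token usage.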

For the full code, you can refer to the following link:

To demonstrate how to fully utilize the Converse API, here’s an example that sends both text and an image to the Claude 3 Sonnet model using the converse() method. The code reads in an image file, creates the message payload with the text prompt and image bytes, and then prints out the model's description of the scene.

To test it with different images, simply update the input file path. This shows how the Converse API supports multimodal applications.

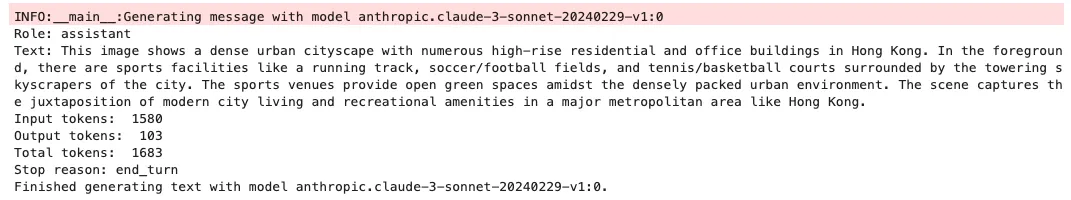

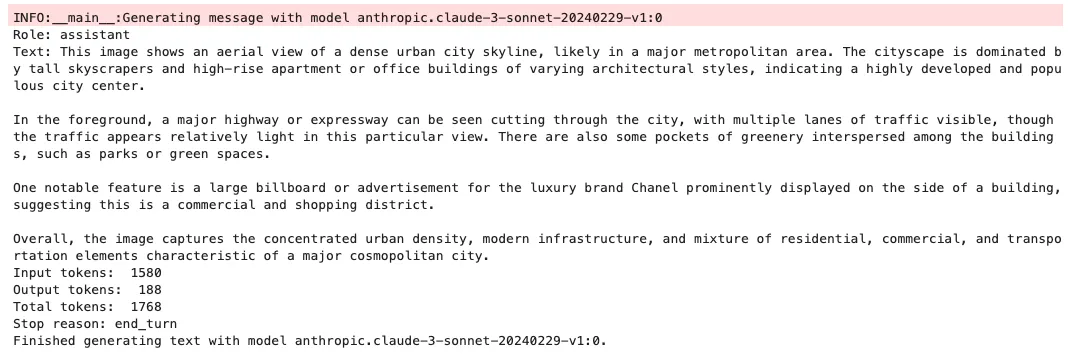

The two images are pictures taken from my window in the beautiful city of Hong Kong while writing this blog post. They show the street view of Causeway Bay in Hong Kong, as displayed below:

For the code, I wrote a generate_conversation_with_image() function and modified the main() function, both based on the AWS sample code mentioned previously. The details are as follows:
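Here is a minimal sketch of such a function. It is not the author's exact code, and the name `describe_image` is hypothetical. It assumes a `bedrock-runtime` client with the `converse` method and a vision-capable model such as Claude 3 Sonnet. The image goes into the same `content` list as the text prompt, as an `image` block with a `format` and raw `bytes`. boto3 takes care of base64 encoding on the wire.

```python
from pathlib import Path
from typing import Any

# Image formats accepted by Converse image content blocks.
SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def describe_image(client: Any, model_id: str, input_text: str, input_image: str) -> str:
    """Send a text prompt plus one image in a single user message; return the reply text."""
    image_path = Path(input_image)
    # Derive the format from the file extension, mapping ".jpg" to "jpeg".
    image_format = image_path.suffix.lower().lstrip(".")
    if image_format == "jpg":
        image_format = "jpeg"
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"Unsupported image format: {image_format!r}")

    message = {
        "role": "user",
        "content": [
            {"text": input_text},
            # Raw bytes: boto3 base64-encodes them when sending the request.
            {"image": {"format": image_format,
                       "source": {"bytes": image_path.read_bytes()}}},
        ],
    }
    response = client.converse(modelId=model_id, messages=[message])
    return response["output"]["message"]["content"][0]["text"]
```

Switching images then means changing only the path argument. For example, `describe_image(client, "anthropic.claude-3-sonnet-20240229-v1:0", "Describe the scene in this image.", "image-2.png")`.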

For image #1, I obtained the following result from the model:

For your convenience, I have copied the model’s output here:

For image #2, I simply changed the “input_image” path in the code to the new image's path. With image #2 as the input to the Claude 3 Sonnet model, I obtained the following result:

For your convenience, I have copied the model’s output here:

Amazon Bedrock's new Converse API simplifies interactions with large language models by providing a consistent interface, eliminating the need for model-specific implementations. Previously, developers had to write complex helper functions, more than a hundred lines of code in the workshop example, to unify input and output formats across models. The Converse API lets you invoke different models through the same API in the AWS Regions where it is available, significantly reducing code complexity. The code examples show how much simpler the Converse API is than the previous approach, which required a separate integration for each model provider. The demo uses Claude 3 through the Converse API to describe images, showing how easily it puts the capabilities of large language models to work. Overall, the Converse API streamlines the use of different large models on Amazon Bedrock and reduces development effort through a consistent interface.

Note: The cover image for this blog post was generated using the SDXL 1.0 model on Amazon Bedrock. The prompt given was as follows:

“a developer sitting in the cafe, comic, graphic illustration, comic art, graphic novel art, vibrant, highly detailed, colored, 2d minimalistic”

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.