XRAI Glass - Let's Build a Startup Showcase!

Learn how XRAI Glass is making the world a better place, one word at a time, with Amazon Translate and Transcribe.

Also, let us know if your startup is using tech to make the world a better place!

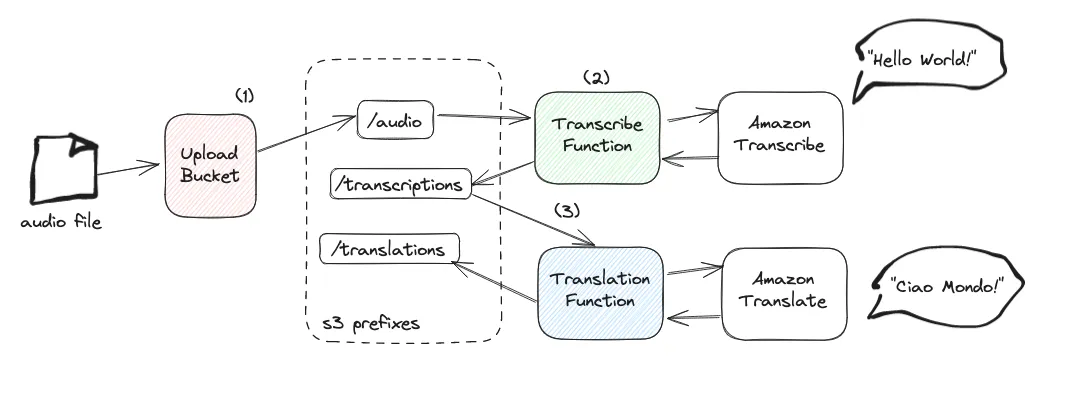

- When an audio file is uploaded to an Amazon Simple Storage Service (S3) bucket under the prefix `/audio`, we trigger an AWS Lambda function that uses Transcribe to get text out of the audio file. This function writes the transcription to the same bucket under the prefix `/transcriptions`.
- In turn, when a file is created under `/transcriptions`, another Lambda function is triggered to perform a translation job. For simplicity we'll assume that the audio has been recorded in English and we want to translate it into Italian. This Lambda function writes the translation results to `/translations`.

If one of your functions were to write its output back under `/audio`, you'd end up with an infinite loop! To guard against that, make sure you throttle the invocation of your functions and consider adding kill-switches to all your choreographers. A common strategy is to give each choreographer a deny-list of S3 paths. If one of these paths shows up in the event that triggered the function, the function can throttle itself or ignore the event, send the event to a dead-letter queue, and alert the relevant notification systems. Others prefer to keep separate buckets for every stage: just beware of your AWS account limits and request a service quota increase if you need more buckets.

- Install the AWS CDK CLI

  If you haven't already installed the AWS CDK, install it using npm:

  ```bash
  npm install -g aws-cdk
  ```

- Create a New CDK Project

  Create a new directory for your CDK project and initialize it with the following commands:

  ```bash
  mkdir transcribe-translate
  cd transcribe-translate
  cdk init app --language typescript
  ```

- Add the required AWS CDK dependencies

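  The stack in this post imports everything from `aws-cdk-lib` and `constructs` (CDK v2) rather than from the scoped `@aws-cdk/*` v1 packages. `cdk init` normally adds both to the project already; if they are missing, install them:

  ```bash
  npm install aws-cdk-lib constructs
  ```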

Edit the `lib/transcribe-translate-stack.ts` file to define the resources:

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as s3 from 'aws-cdk-lib/aws-s3';
import * as lambda from 'aws-cdk-lib/aws-lambda';
import * as s3n from 'aws-cdk-lib/aws-s3-notifications';
import { NodejsFunction } from 'aws-cdk-lib/aws-lambda-nodejs';
import * as iam from 'aws-cdk-lib/aws-iam';
import * as path from 'path';

export class TranscribeTranslateStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // Create the S3 bucket
    const bucket = new s3.Bucket(this, 'UploadBucket', {
      removalPolicy: cdk.RemovalPolicy.DESTROY, // this is OK only for dev
      autoDeleteObjects: true, // this is OK only for dev
    });

    // Create the Transcribe Lambda function
    const transcribeFunction = new NodejsFunction(this, 'TranscribeFunction', {
      runtime: lambda.Runtime.NODEJS_18_X,
      entry: path.join(__dirname, '../lambda/transcribe.mjs'),
      handler: 'handler',
      environment: {
        BUCKET_NAME: bucket.bucketName,
      },
      timeout: cdk.Duration.seconds(900),
    });

    // Transcribe accesses the media file on behalf of the caller,
    // so allow reads on the audio prefix as well
    bucket.grantRead(transcribeFunction, 'audio/*');

    // Grant read/write permissions on S3 objects for the transcribe function
    bucket.grantReadWrite(transcribeFunction, 'transcriptions/*');

    // Additional permissions for Transcribe
    transcribeFunction.addToRolePolicy(new iam.PolicyStatement({
      actions: ['transcribe:StartTranscriptionJob', 'transcribe:GetTranscriptionJob'],
      resources: ['*'],
    }));

    // Add S3 event notification to trigger Transcribe function
    bucket.addEventNotification(
      s3.EventType.OBJECT_CREATED,
      new s3n.LambdaDestination(transcribeFunction),
      { prefix: 'audio/' },
    );

    // Create the Translate Lambda function
    const translateFunction = new NodejsFunction(this, 'TranslateFunction', {
      runtime: lambda.Runtime.NODEJS_18_X,
      entry: path.join(__dirname, '../lambda/translate.mjs'),
      handler: 'handler',
      environment: {
        BUCKET_NAME: bucket.bucketName,
      },
      timeout: cdk.Duration.seconds(900),
    });

    // Grant read/write permissions on specific S3 objects for the translate function
    bucket.grantRead(translateFunction, 'transcriptions/*');
    bucket.grantReadWrite(translateFunction, 'translations/*');

    // Additional permissions for Translate
    translateFunction.addToRolePolicy(new iam.PolicyStatement({
      actions: ['translate:TranslateText'],
      resources: ['*'],
    }));

    // Add S3 event notification to trigger Translate function
    bucket.addEventNotification(
      s3.EventType.OBJECT_CREATED,
      new s3n.LambdaDestination(translateFunction),
      { prefix: 'transcriptions/' },
    );
  }
}
```
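To see why this layout does not loop on itself, it can help to model the two notification filters as plain prefix matches. The sketch below is illustrative only: `triggers` and `stageFor` are hypothetical names, not part of the CDK or S3 APIs, and it assumes each filter simply matches the beginning of the object key.

```typescript
// Hypothetical model of the two S3 notification filters defined in the stack.
type Stage = 'transcribe' | 'translate';

const triggers: Array<{ prefix: string; stage: Stage }> = [
  { prefix: 'audio/', stage: 'transcribe' },
  { prefix: 'transcriptions/', stage: 'translate' },
];

// Returns the stage an object key would trigger, or null when no filter matches.
function stageFor(key: string): Stage | null {
  for (const trigger of triggers) {
    // A prefix filter matches when the key begins with the prefix.
    if (key.indexOf(trigger.prefix) === 0) {
      return trigger.stage;
    }
  }
  return null;
}

console.log(stageFor('audio/interview.mp3'));                    // transcribe
console.log(stageFor('transcriptions/interview.mp3.json'));      // translate
console.log(stageFor('translations/interview.mp3.json.it.txt')); // null
```

Because nothing listens on `translations/`, the chain stops there. It would only become a loop if a stage wrote under a prefix that an earlier stage listens on.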

`lambda/transcribe.mjs`

```javascript
import { TranscribeClient, StartTranscriptionJobCommand } from '@aws-sdk/client-transcribe';

const transcribeClient = new TranscribeClient();

export const handler = async (event) => {
  const bucket = process.env.BUCKET_NAME;
  // S3 event keys are URL-encoded, with "+" standing in for spaces
  const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));

  const transcribeParams = {
    // Job names must be unique, hence the timestamp
    TranscriptionJobName: `TranscribeJob-${Date.now()}`,
    LanguageCode: 'en-US',
    Media: {
      MediaFileUri: `s3://${bucket}/${key}`
    },
    // Write the result to the same bucket, under transcriptions/
    OutputBucketName: bucket,
    OutputKey: `transcriptions/${key.split("/").pop()}.json`
  };

  try {
    await transcribeClient.send(new StartTranscriptionJobCommand(transcribeParams));
    console.log('Transcription job started');
  } catch (err) {
    console.error('Error starting transcription job', err);
    return;
  }
};
```
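Two details of this handler are easy to trip over: S3 event notifications deliver object keys URL-encoded (with `+` for spaces), and Transcribe job names accept only letters, digits, `.`, `_` and `-`, up to 200 characters. The helpers below are a minimal sketch of how both could be handled. `decodeS3Key` and `jobNameFor` are hypothetical names introduced here for illustration, not part of the AWS SDK.

```typescript
// Decode an object key as it appears in an S3 event notification.
function decodeS3Key(rawKey: string): string {
  return decodeURIComponent(rawKey.replace(/\+/g, ' '));
}

// Build a traceable job name from the file name plus a timestamp,
// replacing characters that job names do not accept.
function jobNameFor(key: string, timestamp: number): string {
  const prefix = 'TranscribeJob-';
  const suffix = `-${timestamp}`;
  const fileName = key.split('/').pop() || 'audio';
  const safeName = fileName
    .replace(/[^0-9a-zA-Z._-]/g, '_')
    .slice(0, 200 - prefix.length - suffix.length); // keep the whole name within 200 chars
  return `${prefix}${safeName}${suffix}`;
}

const decodedKey = decodeS3Key('audio/my+file%281%29.mp3');
console.log(decodedKey);                            // audio/my file(1).mp3
console.log(jobNameFor(decodedKey, 1700000000000)); // TranscribeJob-my_file_1_.mp3-1700000000000
```

Including the file name in the job name is a design choice rather than a requirement: it makes jobs easier to match to uploads in the Transcribe console, while the timestamp keeps names unique across repeated uploads of the same file.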

When the job completes, Transcribe writes its result under `/transcriptions`. This will trigger the next phase in the choreography.

`lambda/translate.mjs`

```javascript
import { S3Client, GetObjectCommand, PutObjectCommand } from '@aws-sdk/client-s3';
import { TranslateClient, TranslateTextCommand } from '@aws-sdk/client-translate';

const s3Client = new S3Client();
const translateClient = new TranslateClient();

export const handler = async (event) => {
  const bucket = process.env.BUCKET_NAME;
  // S3 event keys are URL-encoded, with "+" standing in for spaces
  const key = decodeURIComponent(event.Records[0].s3.object.key.replace(/\+/g, ' '));

  // Get the transcription text from S3
  let transcriptionData;
  try {
    transcriptionData = await s3Client.send(new GetObjectCommand({
      Bucket: bucket,
      Key: key
    }));
  } catch (err) {
    console.error('Error getting transcription from S3', err);
    return;
  }

  // The Transcribe output is a JSON document; join all transcripts into one string
  const { results } = JSON.parse(await streamToString(transcriptionData.Body));
  const transcriptionText = results.transcripts.reduce(
    (acc, curr) => acc + curr.transcript, ''
  );
  console.log('Transcription text:', transcriptionText);

  // Translate the transcription
  const translateParams = {
    SourceLanguageCode: 'en',
    TargetLanguageCode: 'it',
    Text: transcriptionText
  };
  console.log(translateParams);

  let translation;
  try {
    translation = await translateClient.send(new TranslateTextCommand(translateParams));
    console.log('Translation:', translation.TranslatedText);
  } catch (err) {
    console.error('Error translating text', err);
    return;
  }

  // Save the translation result to S3
  const translationResult = {
    originalText: transcriptionText,
    translatedText: translation.TranslatedText
  };

  try {
    await s3Client.send(new PutObjectCommand({
      Bucket: bucket,
      Key: `translations/${key.split("/").pop()}.it.txt`,
      Body: translationResult.translatedText,
      ContentType: 'text/plain'
    }));
  } catch (err) {
    console.error('Error saving translation to S3', err);
    return;
  }

  console.log('Translation saved');
};

// Helper function to convert a stream to a string
const streamToString = (stream) => {
  return new Promise((resolve, reject) => {
    const chunks = [];
    stream.on('data', (chunk) => chunks.push(chunk));
    stream.on('error', reject);
    stream.on('end', () => resolve(Buffer.concat(chunks).toString('utf8')));
  });
};
```
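The deny-list kill-switch described earlier could sit at the top of either handler. The following is a minimal sketch of the "ignore the event" branch only. `S3EventLike`, `checkDenyList` and `translateDenyList` are hypothetical names, and throttling, dead-letter queues and alerting are left out.

```typescript
// Subset of the S3 event shape that the guard needs.
interface S3EventLike {
  Records: Array<{ s3: { object: { key: string } } }>;
}

type GuardDecision = 'process' | 'ignore';

// Hypothetical guard: returns 'ignore' when any record's key sits under a denied prefix.
function checkDenyList(event: S3EventLike, deniedPrefixes: string[]): GuardDecision {
  for (const record of event.Records) {
    // Keys in S3 events are URL-encoded, with "+" for spaces.
    const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '));
    const denied = deniedPrefixes.some((prefix) => key.indexOf(prefix) === 0);
    if (denied) {
      return 'ignore';
    }
  }
  return 'process';
}

// Assumption: the translate function should never react to its own output
// prefix, nor to raw audio uploads.
const translateDenyList = ['translations/', 'audio/'];

const sampleEvent: S3EventLike = {
  Records: [{ s3: { object: { key: 'translations/file.mp3.json.it.txt' } } }],
};
console.log(checkDenyList(sampleEvent, translateDenyList)); // ignore
```

A handler would call `checkDenyList` before doing any work and return early on `'ignore'`, ideally logging the event so that a misconfigured trigger is noticed rather than silently dropped.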

1

2

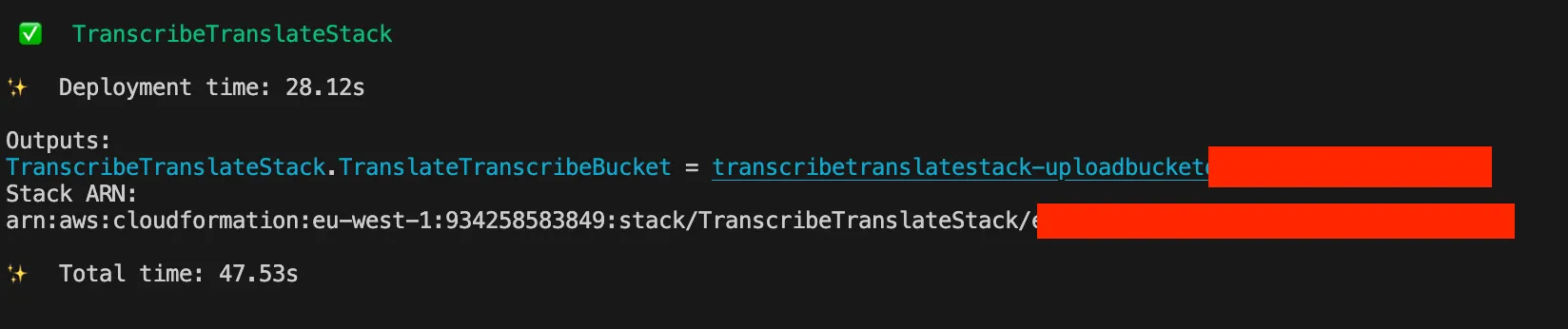

cdk bootstrap # if you haven't bootstrapped in this region before

cdk deploy

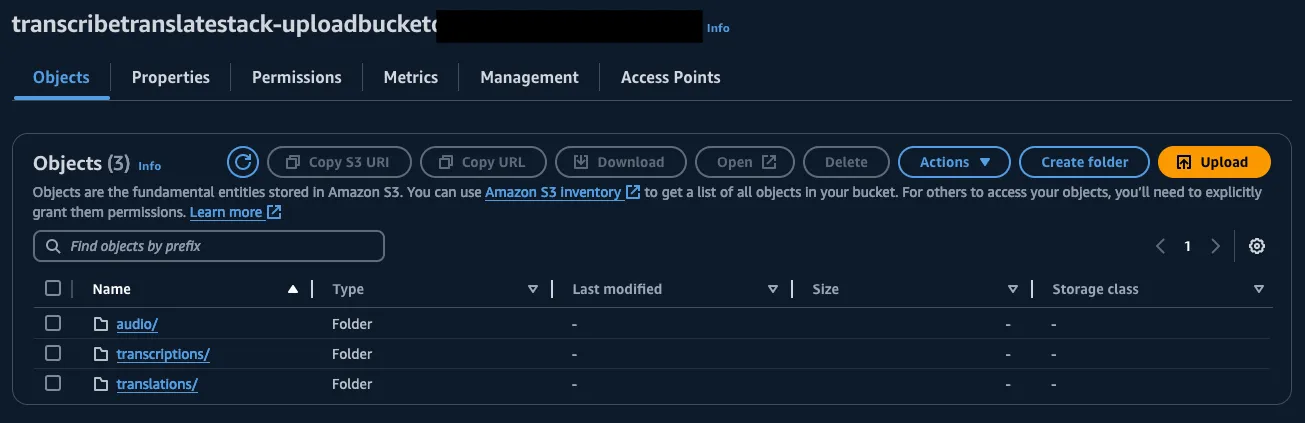

To try it out, upload an audio file to the bucket under `audio/`. You can use the CLI:

```bash
aws s3 cp ./path/to/your/audio/file s3://your-bucket-here/audio/file.mp3
```

The file is now stored under `audio/`. After a while, the `transcriptions/` and `translations/` prefixes should also be available, holding the choreography results for each stage.
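To know which objects to look for after an upload, the key derivation used by the two handlers in this post can be replayed locally. This is only a sketch of that naming logic: `fileNameOf`, `transcriptionKeyFor` and `translationKeyFor` are hypothetical helper names, and it assumes the handlers are deployed unchanged.

```typescript
// Last path segment of an object key.
function fileNameOf(key: string): string {
  const parts = key.split('/');
  return parts[parts.length - 1];
}

// Mirrors the OutputKey built by the transcribe handler.
function transcriptionKeyFor(audioKey: string): string {
  return `transcriptions/${fileNameOf(audioKey)}.json`;
}

// Mirrors the Key built by the translate handler.
function translationKeyFor(transcriptionKey: string): string {
  return `translations/${fileNameOf(transcriptionKey)}.it.txt`;
}

const transcriptionKey = transcriptionKeyFor('audio/file.mp3');
const translationKey = translationKeyFor(transcriptionKey);
console.log(transcriptionKey); // transcriptions/file.mp3.json
console.log(translationKey);   // translations/file.mp3.json.it.txt
```

Each stage appends its own suffix to the previous file name, so the final object name keeps the full history of the file (`file.mp3.json.it.txt`).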

To remove the deployed resources when you're done:

```bash
cdk destroy
```

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.