Prod-One-Box on serverless

Releasing with confidence and reducing blast radius with AWS Lambda traffic shifting and a prod-one-box

Automating gradual production releases

Wiring it all together using CDK

Defining AWS Lambda traffic shifting

Deployment to the one-box frontend

Delaying the deployment to one Lambda by 10 minutes

Defining the order of the deployment

Implementing session stickiness in Amazon CloudFront

A Lambda@Edge viewerRequest function

A Lambda@Edge originRequest function

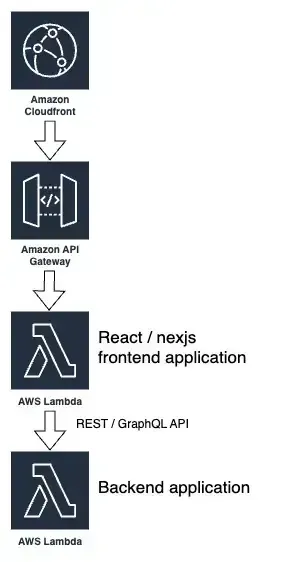

- A web frontend, built with any modern JavaScript framework such as React or Next.js, running on AWS Lambda behind a CloudFront distribution. An HTTP API Gateway exposes the Lambda function through an endpoint that CloudFront uses as its origin.

- A stateless REST / GraphQL backend offering an API to the frontend, exposed via a REST API Gateway and potentially behind a CloudFront distribution (which could be the same one as before, with the API endpoints mapped under a specific path, typically /api).

- State, if any, stored in a persistence layer, using any database engine. This piece of the architecture is not relevant for the purpose of this article, but it highlights the fact that the other two layers are stateless.

- Differences in data between the production environment and the test environments. Some scenarios may therefore be reproducible only in production.

- Configuration differences between environments. API keys, endpoints of 3rd party services, digital certificates, and other similar configurations are often exclusive to the production environment.

- User navigation patterns. Websites exposed to the public are subject to an unbounded and unpredictable set of user navigation patterns, which in general cannot be reproduced accurately in test environments.

- Adding new endpoints or operations to your service. The old frontend would not use them, but their existence will not make the frontend fail.

- Adding new fields to existing outgoing messages. The old frontend does not read them, so as long as it safely ignores unexpected response fields, it will not fail.

- Adding optional fields to existing incoming messages. The old frontend would not send them, but if they are optional and have reasonable default values, the backend will deal with them with no errors.
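The two message-level rules above can be illustrated with a minimal sketch. The message shapes (`CreateOrderRequest`, `OrderView`) and the `giftWrap` field are hypothetical and only serve the illustration; they are not part of the architecture described in this article. The backend fills in a default for a new optional field, and the old frontend copies only the response fields it knows about.

```typescript
// Hypothetical incoming message: `giftWrap` is a newly added optional field
// that an old frontend never sends.
interface CreateOrderRequest {
  productId: string;
  quantity: number;
  giftWrap?: boolean;
}

interface NormalizedOrder {
  productId: string;
  quantity: number;
  giftWrap: boolean;
}

// Backend side: apply a reasonable default when the optional field is absent.
function normalizeRequest(body: CreateOrderRequest): NormalizedOrder {
  return {
    productId: body.productId,
    quantity: body.quantity,
    giftWrap: body.giftWrap ?? false,
  };
}

// Hypothetical view of the response as the old frontend understands it.
interface OrderView {
  id: string;
  status: string;
}

// Old frontend side: read only the known fields and ignore everything else,
// so new fields added by the backend do not break parsing.
function parseOrderResponse(json: string): OrderView {
  const raw = JSON.parse(json) as Record<string, unknown>;
  const id = raw["id"];
  const status = raw["status"];
  if (typeof id !== "string" || typeof status !== "string") {
    throw new Error("response is missing required fields");
  }
  return { id, status };
}
```

A frontend that instead validated responses against a closed schema and rejected unknown fields would not be safe under the second rule.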

- Ability to control the order in which things are deployed. The frontend needs to wait until the backend is fully rolled out.

- Session stickiness. The solution needs to be able to consistently send a given user's traffic to a specific partition of our frontend architecture.

- Timers and traffic increments. The system needs to be able to specify delays before specific parts of the release are done.

- Alarms and canaries. The solution needs to be able to detect problems with the ongoing release and automate the abort and rollback process accordingly.

- Lambda traffic shifting for the backend. AWS Lambda provides the ability to shift traffic gradually between versions, using predefined AWS CodeDeploy deployment configurations. For example, you can choose to send traffic to the new version in increments of 10% every minute, which implies that the backend Lambda code is fully deployed in about 10 minutes.

- Dual API Gateway+Lambda for the frontend.

- CloudFront session stickiness implemented using Lambda@Edge or CloudFront Functions.

- Alarms and canaries
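To make the arithmetic of the linear traffic-shifting example concrete, here is a small sketch that lists the cumulative share of traffic on the new version after each increment. The function name `linearShiftPercentages` is hypothetical, and the sketch models only the percentages; it does not call AWS CodeDeploy and makes no claim about the exact timing of each step.

```typescript
// Cumulative percentage of traffic served by the new version after each
// increment of a linear shift. Integer arithmetic avoids rounding drift.
function linearShiftPercentages(incrementPercent: number): number[] {
  if (!Number.isInteger(incrementPercent) || incrementPercent < 1 || incrementPercent > 100) {
    throw new RangeError("incrementPercent must be an integer between 1 and 100");
  }
  const percentages: number[] = [];
  for (let current = incrementPercent; current < 100; current += incrementPercent) {
    percentages.push(current);
  }
  percentages.push(100); // the final step always moves all remaining traffic
  return percentages;
}

// Increments of 10% give ten steps: 10, 20, ..., 100.
const tenPercentSteps = linearShiftPercentages(10);
console.log(tenPercentSteps.length, tenPercentSteps.join(","));
```

With one increment per minute, ten steps correspond to the roughly 10 minutes mentioned above.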

- One-box Lambda and API Gateway.

- Custom resource that takes 10 minutes to deploy.

- Regular Lambda and API Gateway.

X-Req-Partition) with the partition value. In order to make this decision consistent for a given user, so that requests from this user session always receive the same partition, different techniques can be implemented:

- Setting a cookie on the first request of the session that would be honored in subsequent requests.

- Using the User-Agent header to calculate a pseudo-random hash that is consistent across requests, taking this hash modulo 100, and assigning the one-box partition if the value is smaller than 20 (if I want 20% of the traffic assigned to the one-box partition).
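The two techniques above can be combined in a viewer request function along the following lines. This is a minimal sketch under several assumptions: the cookie name `partition`, the partition values `one-box` and `regular`, and the FNV-1a style hash are all illustrative choices that the article does not prescribe. The event types are trimmed-down local declarations of the Lambda@Edge event shape, and the sketch only reads the cookie; issuing it on the first response is not shown.

```typescript
// Minimal local model of the parts of a Lambda@Edge viewer request event used here.
interface ViewerHeader { key?: string; value: string }
type ViewerHeaders = { [name: string]: ViewerHeader[] | undefined };
interface ViewerRequest { uri: string; headers: ViewerHeaders }
interface ViewerRequestEvent { Records: Array<{ cf: { request: ViewerRequest } }> }

type Partition = "one-box" | "regular"; // hypothetical partition values

const PARTITION_HEADER = "X-Req-Partition";
const PARTITION_COOKIE = "partition"; // hypothetical cookie name

// FNV-1a style 32-bit hash over UTF-16 code units: deterministic, so the
// same User-Agent string always yields the same value.
function hashString(input: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0;
}

// Return the value of a named cookie, if the request carries it.
function readCookie(headers: ViewerHeaders, name: string): string | undefined {
  for (const header of headers["cookie"] ?? []) {
    for (const pair of header.value.split(";")) {
      const [key, ...rest] = pair.trim().split("=");
      if (key === name) return rest.join("=");
    }
  }
  return undefined;
}

// Honor an existing cookie; otherwise hash the User-Agent modulo 100 and
// assign the one-box partition when the result is below the threshold.
function choosePartition(request: ViewerRequest, oneBoxPercent = 20): Partition {
  const fromCookie = readCookie(request.headers, PARTITION_COOKIE);
  if (fromCookie === "one-box" || fromCookie === "regular") return fromCookie;
  const userAgent = request.headers["user-agent"]?.[0]?.value ?? "";
  return hashString(userAgent) % 100 < oneBoxPercent ? "one-box" : "regular";
}

// Attach the chosen partition to the request as the X-Req-Partition header.
function tagRequest(request: ViewerRequest, oneBoxPercent = 20): ViewerRequest {
  const partition = choosePartition(request, oneBoxPercent);
  request.headers[PARTITION_HEADER.toLowerCase()] = [{ key: PARTITION_HEADER, value: partition }];
  return request;
}

// Entry point; in a real deployment this would be exported as the Lambda handler.
const handler = async (event: ViewerRequestEvent): Promise<ViewerRequest> => {
  const record = event.Records[0];
  if (!record) throw new Error("event contains no records");
  return tagRequest(record.cf.request);
};
```

Note that hashing the User-Agent keeps a given browser on one partition but does not split traffic exactly 80/20, since popular User-Agent strings all land on the same side.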

X-Req-Partition request header and honors it. Our CloudFront distribution will be configured with the regular frontend API Gateway as origin; this function will change the Host header of the origin request to the domain name of the one-box API Gateway if the X-Req-Partition header says so.

X-Req-Partition header. Therefore, this function adds the value X-Req-Partition to the Vary response header from our origin. Additionally, I can configure the cache behaviour of the CloudFront distribution to include this header in the cache key, and I can also add it to the HTTP response for debugging purposes (by observing the traffic with the browser network inspector, I can see whether a specific user is being served by the one-box or the regular frontend).

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.
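The origin-side behaviour described above (honoring the X-Req-Partition header and extending the Vary response header) could look roughly like the following sketch. The domain `onebox.example.com` and the partition value `one-box` are hypothetical placeholders, and the types are trimmed-down local declarations of the Lambda@Edge event shape. Besides the Host header, the sketch also updates the custom origin's domain name, which is an assumption about how the switch would be wired rather than something stated in the text.

```typescript
// Minimal local model of the Lambda@Edge origin request and response headers.
interface OriginHeader { key?: string; value: string }
type OriginHeaders = { [name: string]: OriginHeader[] | undefined };
interface OriginRequest {
  uri: string;
  headers: OriginHeaders;
  origin?: { custom?: { domainName: string } };
}

const ONE_BOX_DOMAIN = "onebox.example.com"; // hypothetical one-box API Gateway domain

// Origin request side: point the request at the one-box API Gateway when the
// X-Req-Partition header asks for it; otherwise leave the request untouched.
function routeToPartition(request: OriginRequest): OriginRequest {
  const partition = request.headers["x-req-partition"]?.[0]?.value;
  if (partition === "one-box") {
    request.headers["host"] = [{ key: "Host", value: ONE_BOX_DOMAIN }];
    if (request.origin?.custom) {
      // Assumption: the origin domain is switched together with the Host header.
      request.origin.custom.domainName = ONE_BOX_DOMAIN;
    }
  }
  return request;
}

// Response side: add X-Req-Partition to the Vary header without duplicating it
// and without dropping values the origin already set.
function addPartitionToVary(headers: OriginHeaders): void {
  const existing = headers["vary"]?.[0]?.value ?? "";
  const values = existing
    .split(",")
    .map((value) => value.trim())
    .filter((value) => value.length > 0);
  if (!values.some((value) => value.toLowerCase() === "x-req-partition")) {
    values.push("X-Req-Partition");
  }
  headers["vary"] = [{ key: "Vary", value: values.join(", ") }];
}
```

Both functions mutate the objects they receive, which matches how Lambda@Edge handlers typically modify the event's request or response before returning it.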