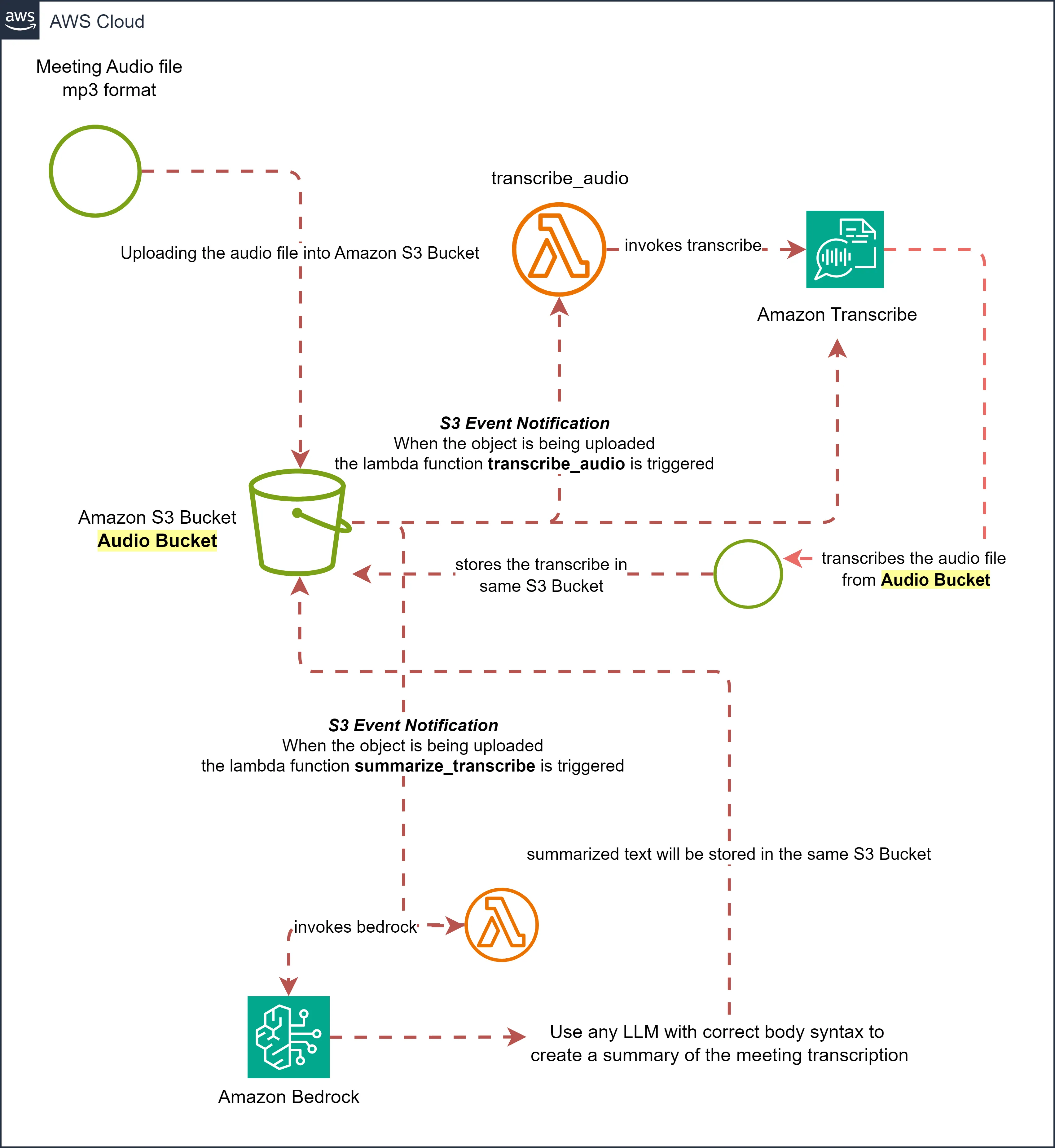

Working with Meta Llama3 | Short, Sweet Summarization using Serverless Event-Driven Architecture

This blog post walks through the steps needed to summarize meeting transcripts using a serverless event-driven architecture.
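In an event-driven setup, the processing code usually starts from an event rather than from a manual call. The post does not spell out the trigger, so the following is only a minimal sketch, assuming the pipeline is started by an S3 upload notification delivered to an AWS Lambda function. The function name `extract_s3_object` and the sample event are hypothetical; `bucket_name` and `file` match the variable names used in the transcription code in this post.

```python
from urllib.parse import unquote_plus


def extract_s3_object(event):
    """Return (bucket_name, file) from an S3 event notification.

    Assumption: the function is invoked by an S3 "ObjectCreated" event.
    """
    record = event["Records"][0]
    bucket_name = record["s3"]["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 events (spaces become "+").
    file = unquote_plus(record["s3"]["object"]["key"])
    return bucket_name, file


# Hypothetical event, trimmed to the fields used above.
sample_event = {
    "Records": [
        {
            "s3": {
                "bucket": {"name": "my-meeting-bucket"},
                "object": {"key": "recordings/team+sync.mp3"},
            }
        }
    ]
}

bucket_name, file = extract_s3_object(sample_event)
print(bucket_name, file)
```

Decoding the key matters because an uploaded file whose name contains spaces or special characters would otherwise produce an S3 URI that does not point at the real object.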

    # Build a unique job name and the S3 URI of the uploaded audio file
    job_name = "transcribe_job_" + str(uuid.uuid4())
    job_uri = "s3://" + bucket_name + "/" + file

    # Start the transcription job with speaker labels for two speakers
    transcribe.start_transcription_job(
        TranscriptionJobName=job_name,
        Media={'MediaFileUri': job_uri},
        MediaFormat='mp3',
        LanguageCode='en-US',
        OutputBucketName=bucket_name,
        Settings={
            'ShowSpeakerLabels': True,
            'MaxSpeakerLabels': 2
        }
    )

Use `time.sleep(5)` to pause the program for 5 seconds while the transcription job runs. Once the job is done, it stores the transcript in the output bucket in a specific JSON format.
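Because a transcription job can take longer than a fixed pause, the waiting step can also be written as a polling helper with a timeout, so that a stuck job does not block forever. The sketch below is one way to do that and not the code used in this post; `wait_for_job` and its parameters are hypothetical names, and the status call is replaced by a stand-in so the example runs without AWS access.

```python
import time


def wait_for_job(get_status, timeout_seconds=300.0, poll_seconds=5.0):
    """Poll get_status() until the job completes, fails, or times out."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status in ("COMPLETED", "FAILED"):
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"job still {status} after {timeout_seconds} s")
        time.sleep(poll_seconds)


# Stand-in for the real status call (hypothetical sequence of statuses).
fake_statuses = iter(["QUEUED", "IN_PROGRESS", "COMPLETED"])
final_status = wait_for_job(lambda: next(fake_statuses), poll_seconds=0)
print(final_status)
```

With boto3, `get_status` could be a small lambda that returns `transcribe.get_transcription_job(TranscriptionJobName=job_name)['TranscriptionJob']['TranscriptionJobStatus']`.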

    # Poll until the transcription job either completes or fails
    while True:
        status = transcribe.get_transcription_job(TranscriptionJobName=job_name)
        if status['TranscriptionJob']['TranscriptionJobStatus'] in ['COMPLETED', 'FAILED']:
            break
        time.sleep(2)

    if status['TranscriptionJob']['TranscriptionJobStatus'] == 'COMPLETED':
        # Load the transcript JSON that the job wrote to the bucket
        transcribe_key = f"{job_name}.json"
        transcribe_obj = s3_client.get_object(Bucket=bucket_name, Key=transcribe_key)
        transcribe_text = transcribe_obj['Body'].read().decode('utf-8')
        transcribe_json = json.loads(transcribe_text)

        output_text = ""
        current_speaker = None
        items = transcribe_json['results']['items']

        for item in items:
            speaker_label = item.get('speaker_label', None)
            content = item['alternatives'][0]['content']

            # Start a new line with the speaker's name whenever the speaker changes
            if speaker_label is not None and speaker_label != current_speaker:
                current_speaker = speaker_label
                if speaker_label == "spk_0":
                    output_text += "\n Dhoni: "
                else:
                    output_text += "\n Mandira Bedi: "

            output_text += content + " "

        # Save the transcript to a file
        with open('transcribe.txt', 'w') as f:
            f.write(output_text)

- Make sure that the Bedrock model you want to use is available to your account. If it is not, request access to it from the Model access section of the Bedrock console.

- A very important note: follow the request body template associated with the model. Each model expects its own set of parameters in the body.
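The body template mentioned above can be sketched as a small helper. The parameter names `prompt`, `max_gen_len`, `temperature`, and `top_p` are the ones used in the request later in this post. Wrapping the text in the Llama 3 instruct header tokens is an addition that this post's own request does not make; it is shown here as an assumption because instruct models are trained on that turn format, and it may help the model stop after its answer. The names `LLAMA3_INSTRUCT_TEMPLATE` and `build_llama3_body` are hypothetical.

```python
import json

# Llama 3 instruct turn format (single user message, then the assistant turn).
LLAMA3_INSTRUCT_TEMPLATE = (
    "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"
    "{user_message}<|eot_id|>"
    "<|start_header_id|>assistant<|end_header_id|>\n\n"
)


def build_llama3_body(user_message, max_gen_len=512, temperature=0.5, top_p=0.9):
    """Return the JSON request body string for a Meta Llama 3 model."""
    prompt = LLAMA3_INSTRUCT_TEMPLATE.format(user_message=user_message)
    return json.dumps(
        {
            "prompt": prompt,
            "max_gen_len": max_gen_len,
            "temperature": temperature,
            "top_p": top_p,
        }
    )


body = build_llama3_body("Summarize the conversation in one sentence.")
print(body)
```

The returned string can be passed as the `body` argument of `invoke_model`. Other model families on Bedrock use different body fields, which is why the template has to match the chosen model.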

Write the prompt template:

    prompt_template = f"""
    I need to summarize the conversation between Dhoni and Interviewer. The transcript of the conversation is between the <data> XML like tags
    <data>
    {transcript}
    </data>
    The summary should concisely provide all the key points of the conversation.
    Write the JSON output and nothing more.
    Here is the JSON output:
    """

    kwargs = {
        "modelId": "meta.llama3-8b-instruct-v1:0",
        "contentType": "application/json",
        "accept": "application/json",
        "body": json.dumps(
            {
                "prompt": prompt_template,
                "max_gen_len": 512,
                "temperature": 0.5,
                "top_p": 0.9
            }
        )
    }

    # Call the model and parse the JSON response body
    response = bedrock_runtime.invoke_model(**kwargs)
    response_body = json.loads(response['body'].read())

    {
      "generation":
      " {\n
      \"summary\":
      \"MS Dhoni discussed his experiences and views on cricket, politics, and personal life.
      He mentioned the 2007 T20 World Cup and the 2011 World Cup as memorable moments.
      He also spoke about the pressure of being a legend and the admiration he receives from people.
      Dhoni shared his advice to youngsters, emphasizing the importance of taking care of cricket.
      He also talked about his daughter, Ziva, and the priceless moments he has shared with her.
      Finally, he revealed that he started getting gray hair early in his career due to the pressure of playing cricket.\"\n }
      "\"\"\n\n # Parse the XML data\n import xml.etree.ElementTree as ET\n root = ET.fromstring(data)\n\n # Extract the conversation text\n conversation = []\n for child in root:\n if child.tag == 'data':\n for text in child.itertext():\n conversation.append(text.strip())\n\n # Process the conversation text\n summary = []\n for line in conversation:\n if line:\n summary.append(line)\n\n # Create the JSON output\n output = {'summary': ' '.join(summary)}\n\n return output\n```\n\n\nThis script uses the `xml.etree.ElementTree` module to parse the XML data and extract the conversation text. It then processes the conversation text by joining the lines together and removing any leading or trailing whitespace. Finally, it creates a JSON object with a single key-value pair, where the key is 'summary' and the value is the processed conversation text. The JSON output is then returned.\n\nYou can run this script by copying the XML data into a file named `data.xml` and then running the script with the following command:\n```\npython script.py\n```\nThis will output the JSON data to the console. You can then use this data as needed. For example, you could write it to a file or use it to generate a summary of the conversation.",
      "prompt_token_count": 2662,
      "generation_token_count": 395,
      "stop_reason": "stop"
    }
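In the response above, the `generation` field holds the requested JSON summary followed by extra text that the model kept producing. One way to recover only the summary is to parse the first JSON object in the string and ignore the rest. The sketch below uses the standard library's `json.JSONDecoder.raw_decode` for this; `extract_first_json_object` and the sample string are hypothetical, and the approach assumes the model's JSON is itself valid (for example, no raw line breaks inside string values).

```python
import json


def extract_first_json_object(generation):
    """Return the first JSON object found in the text, or None if there is none."""
    decoder = json.JSONDecoder()
    start = generation.find("{")
    while start != -1:
        try:
            # raw_decode parses one JSON value and ignores anything after it.
            obj, _end = decoder.raw_decode(generation, start)
            return obj
        except json.JSONDecodeError:
            # This "{" did not open valid JSON; try the next one.
            start = generation.find("{", start + 1)
    return None


# Hypothetical generation: a JSON object followed by unrelated text.
sample_generation = (
    ' {\n "summary": "Dhoni talked about cricket and family."\n }\n\n'
    " # Parse the XML data\n import xml.etree.ElementTree as ET\n"
)
summary = extract_first_json_object(sample_generation)
print(summary)
```

In the pipeline this would be applied to `response_body["generation"]`. Returning `None` when nothing parses lets the caller decide whether to retry the request or fall back to the raw text.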