Build a Karaoke App with Interactive Audio Effects using the Switchboard SDK and Amazon IVS

Add interactive audio effects to your Amazon IVS live streams with this step-by-step guide.

Part 1 - Creating a real-time streaming app with Amazon IVS and the Switchboard SDK

Overview of the Android Audio APIs

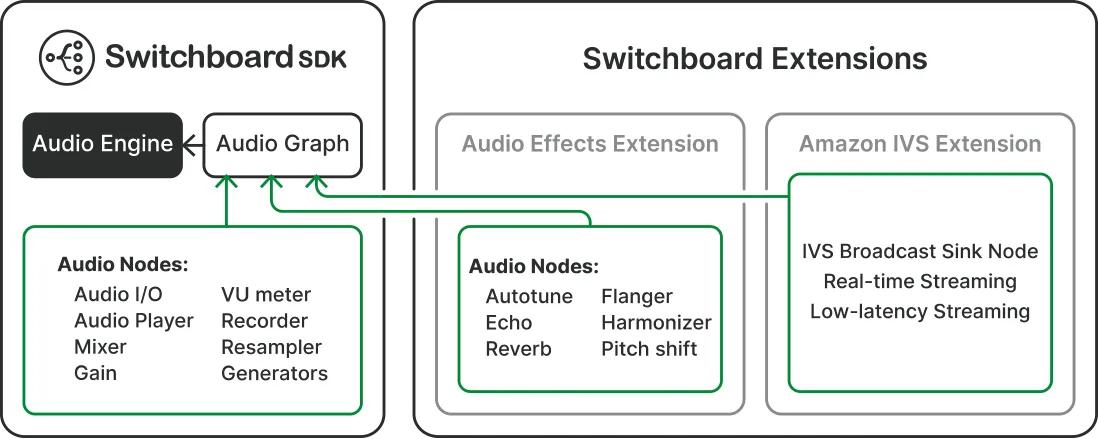

Switchboard SDK building blocks

Adding Switchboard SDK and Amazon IVS to your Android Project

Creating a Karaoke App with Amazon IVS and the Switchboard SDK

Part 2 - Importing Audio Effects through Switchboard Extensions

Loading and applying voice changing effects

- How to create a real-time streaming experience with Amazon IVS

- How to integrate the Switchboard SDK Extensions into your application

- How to test and apply voice changing effects from Switchboard SDK

- How to live stream your new voice to an audience using Amazon IVS

- Part 1 - Creating a real-time streaming app with Amazon IVS and the Switchboard SDK

- Part 2 - Importing and applying voice changing effects

- Part 3 - Testing your newfound voice

Two core Android audio APIs are AudioTrack and AudioRecord. AudioTrack is used for playing back audio data. It is part of the Android multimedia framework and lets developers play audio data from various sources, including memory or a file. AudioRecord is the counterpart of AudioTrack for recording audio; it is typically used in applications that need to capture audio input from the device's microphone.

- AudioGraphInputNode: The input node of the audio graph.

- AudioPlayerNode: A node that reads in and plays an audio file.

- MixerNode / SplitterNode: A node that mixes / splits audio streams.

- GainNode: A node that changes the gain of an audio signal.

- NoiseFilterNode: A node that filters noise from the audio signal.

- IVSBroadcastSinkNode: The audio that is streamed into this node will be sent directly into the Stage (stream).

- RecorderNode: A node that records audio and saves it to a file.

- VUMeterNode: A node that analyzes the audio signal and reports its audio level.
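To make the node-and-connection idea concrete, here is a minimal, SDK-independent sketch of an audio graph as a data structure. The names SketchNode and SketchGraph are hypothetical and exist only for illustration; they are not Switchboard SDK classes, and the real AudioGraph may behave differently (for example, when a node is connected before it has been added).

```kotlin
// Hypothetical model of a node graph; not the Switchboard SDK API.
data class SketchNode(val name: String)

class SketchGraph {
    private val nodes = mutableSetOf<SketchNode>()
    private val edges = mutableListOf<Pair<SketchNode, SketchNode>>()

    fun addNode(node: SketchNode) {
        nodes.add(node)
    }

    // Returns false when either endpoint has not been added to the graph.
    fun connect(from: SketchNode, to: SketchNode): Boolean {
        if (from !in nodes || to !in nodes) return false
        edges.add(from to to)
        return true
    }

    // Names of the nodes that feed directly into the given node.
    fun inputsOf(node: SketchNode): List<String> =
        edges.filter { it.second == node }.map { it.first.name }
}

fun main() {
    val mic = SketchNode("input")
    val player = SketchNode("player")
    val mixer = SketchNode("mixer")
    val graph = SketchGraph()
    listOf(mic, player, mixer).forEach(graph::addNode)
    graph.connect(mic, mixer)
    graph.connect(player, mixer)
    println(graph.inputsOf(mixer)) // [input, player]
}
```

The sketch only tracks topology. In a real graph each node would also process audio buffers on every render cycle.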

build.gradle:

dependencies {
    implementation(files("SwitchboardSDK.aar"))
    implementation(files("SwitchboardAmazonIVS-realtime.aar"))
    implementation("com.amazonaws:ivs-broadcast:1.13.4:stages@aar")
}

clientID and clientSecret values or you can use the evaluation license provided below for testing purposes.1

2

SwitchboardSDK.initialize("ivs-karaoke-sample-app",

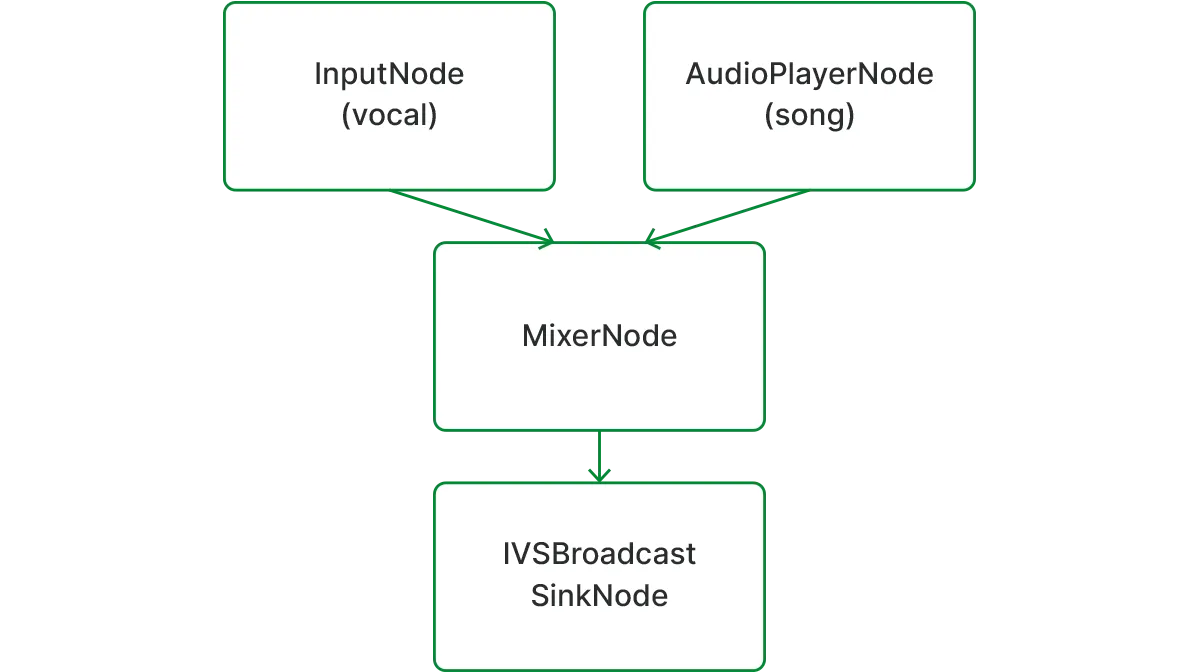

"33ecdd620e70252048be5b211c128fea5e78a546")AudioPlayerNode: plays the loaded audio file.AudioGraphInputNode: provides the microphone signal.MixerNode: mixes the backing track and the microphone signal.IVSBroadcastSinkNode: audio sent into this node is published to the created Stage.

class KaraokeAppAudioEngine(val context: Context) {

    // STEP 1
    val audioGraph = AudioGraph()

    // STEP 2
    val audioPlayerNode = AudioPlayerNode()

    // STEP 3
    val mixerNode = MixerNode()

    // STEP 4
    val ivsSinkNode: IVSBroadcastSinkNode
    var audioDevice: AudioDevice? = null

    init {
        // audioDevice is still null here; it is initialized in STEP 20
        ivsSinkNode = IVSBroadcastSinkNode(audioDevice!!)

        // STEP 5
        audioGraph.addNode(audioPlayerNode)
        audioGraph.addNode(mixerNode)
        audioGraph.addNode(ivsSinkNode)

        // STEP 6
        // The input node is available by default, we don't need to create one
        audioGraph.connect(audioGraph.inputNode, mixerNode)
        audioGraph.connect(audioPlayerNode, mixerNode)
        audioGraph.connect(mixerNode, ivsSinkNode)
    }
}

- STEP 1: declare the audio graph. The audio graph routes the audio data between the different nodes.

- STEP 2: declare the audio player node, which handles loading the audio file and exposes playback controls.

- STEP 3: declare the mixer node, which combines the audio from the audio player node with the audio from the input node (the microphone signal).

- STEP 4: declare the IVSBroadcastSinkNode. The audio data sent to this node is published to the Stage. The AudioDevice, which is an interface for a custom audio source, is initialized in a later step.

- STEP 5: add the audio nodes to the audio graph.

- STEP 6: wire up the audio nodes. We connect the input node (microphone signal) and the audio player node to the mixer node, and the mixer node to the IVSBroadcastSinkNode. The mixer node mixes the two signals and sends the result to the stream.

For more detailed information please visit the official documentation.
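Conceptually, mixing two signals means adding them sample by sample. The following is a minimal sketch of that idea, assuming float samples in the range [-1, 1] and simple clamping to avoid overflow. How MixerNode mixes internally is not described in this post, so treat the function mixBuffers as a hypothetical illustration rather than the SDK's implementation.

```kotlin
// Illustrative only: sums two equally long mono buffers and clamps to [-1, 1].
fun mixBuffers(voice: FloatArray, backingTrack: FloatArray): FloatArray {
    require(voice.size == backingTrack.size) { "Buffers must have the same length" }
    return FloatArray(voice.size) { i ->
        (voice[i] + backingTrack[i]).coerceIn(-1.0f, 1.0f)
    }
}

fun main() {
    val voice = floatArrayOf(0.25f, -0.5f, 0.75f)
    val track = floatArrayOf(0.25f, -0.25f, 0.5f)
    println(mixBuffers(voice, track).joinToString()) // 0.5, -0.75, 1.0
}
```

The last sample shows why a gain stage before the mixer can be useful: 0.75 + 0.5 exceeds full scale and is clamped to 1.0.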

class KaraokeAppAudioEngine(val context: Context) {

    val audioGraph = AudioGraph()
    val audioPlayerNode = AudioPlayerNode()
    val mixerNode = MixerNode()
    val ivsSinkNode: IVSBroadcastSinkNode
    var audioDevice: AudioDevice? = null

    // STEP 7
    val audioEngine = AudioEngine(
        context = context,
        microphoneEnabled = true,
        performanceMode = PerformanceMode.LOW_LATENCY,
        micInputPreset = MicInputPreset.VoicePerformance
    )

    init {
        ivsSinkNode = IVSBroadcastSinkNode(audioDevice!!)

        audioGraph.addNode(audioPlayerNode)
        audioGraph.addNode(mixerNode)
        audioGraph.addNode(ivsSinkNode)

        // The input node is available by default, we don't need to create one
        audioGraph.connect(audioGraph.inputNode, mixerNode)
        audioGraph.connect(audioPlayerNode, mixerNode)
        audioGraph.connect(mixerNode, ivsSinkNode)
    }
}

- STEP 7: define and initialize the audio engine.

  - Set microphoneEnabled = true to enable the microphone.

  - Set performanceMode = PerformanceMode.LOW_LATENCY to achieve the lowest latency possible.

  - Set micInputPreset = MicInputPreset.VoicePerformance to make sure that the capture path minimizes latency and coupling with the playback path. The capture path refers to the microphone recording process, while the playback path involves audio output through speakers or headphones. This preset optimizes both paths for synchronized and clear audio performance.
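One way to reason about latency is the duration of a single audio buffer: a buffer of N frames at sample rate R covers N / R seconds. The sketch below only does that arithmetic. The frame counts are example values chosen for illustration, not values reported by the Switchboard SDK or by any particular device.

```kotlin
// Duration of one audio buffer in milliseconds.
fun bufferDurationMs(framesPerBuffer: Int, sampleRate: Int): Double {
    require(framesPerBuffer > 0 && sampleRate > 0) { "Arguments must be positive" }
    return framesPerBuffer * 1000.0 / sampleRate
}

fun main() {
    // Example values only; real devices report their own buffer sizes.
    println(bufferDurationMs(192, 48_000)) // 4.0
    println(bufferDurationMs(960, 48_000)) // 20.0
}
```

Smaller buffers shorten the delay between singing and hearing the result, at the cost of more frequent audio callbacks.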

The IVSBroadcastSinkNode makes it easier for our application to route the processed audio to Amazon IVS. To set up the IVSBroadcastSinkNode, we need to initialize the AudioDevice and create a Stage with a Stage.Strategy. Let’s have a closer look at how the IVSBroadcastSinkNode communicates with the AudioDevice.

The Amazon IVS Broadcast SDK provides local devices such as built-in microphones via DeviceDiscovery for simple use cases, when we only need to publish the unprocessed microphone signal to the stream. To publish processed audio instead, we create a custom audio input source by calling createAudioInputSource on a DeviceDiscovery instance.

audioDevice = deviceDiscovery.createAudioInputSource(1, sampleRate, AudioDevice.Format.INT16)

The AudioDevice returned by createAudioInputSource can receive Linear PCM data generated by any audio source through the appendBuffer method. Linear PCM (Pulse-Code Modulation) is a format for storing uncompressed digital audio.

public abstract int appendBuffer (ByteBuffer buffer, long count, long presentationTimeUs)
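With AudioDevice.Format.INT16, each sample is a signed 16-bit integer. As a rough sketch of what producing such data can look like, the function below converts float samples into a direct ByteBuffer of 16-bit PCM. The function name floatsToPcm16 is hypothetical, and the little-endian byte order is an assumption of this sketch; check the Amazon IVS documentation for the byte order the SDK expects.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder

// Converts float samples in [-1, 1] to signed 16-bit PCM.
// Little-endian byte order is an assumption of this sketch.
fun floatsToPcm16(samples: FloatArray): ByteBuffer {
    val buffer = ByteBuffer.allocateDirect(samples.size * 2).order(ByteOrder.LITTLE_ENDIAN)
    for (sample in samples) {
        val clamped = sample.coerceIn(-1.0f, 1.0f)
        buffer.putShort((clamped * 32767.0f).toInt().toShort())
    }
    buffer.flip() // make the written bytes readable from position 0
    return buffer
}

fun main() {
    val pcm = floatsToPcm16(floatArrayOf(0.0f, 1.0f, -1.0f))
    println(pcm.remaining()) // 6 (three samples, two bytes each)
    println(pcm.getShort(2)) // 32767
}
```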

The following code shows how the IVSBroadcastSinkNode interacts with the created virtual audio device:

class IVSBroadcastSinkNode(private val audioDevice: AudioDevice) : AudioSinkNode {

    companion object {
        private const val MAX_NUMBER_OF_CHANNELS = 2
        private const val AUDIO_FORMAT_NR_OF_BYTES = 2
    }

    private var presentationTimeUs: Double = 0.0

    val buffer = ByteBuffer.allocateDirect(
        SwitchboardSDK.getMaxNumberOfFrames() * MAX_NUMBER_OF_CHANNELS * AUDIO_FORMAT_NR_OF_BYTES
    )

    // STEP 8
    fun writeCaptureData(data: ByteArray?, numberOfSamples: Long) {
        buffer.put(data!!)

        // STEP 9
        val success = audioDevice.appendBuffer(buffer, data.size.toLong(), presentationTimeUs.toLong())
        if (success < 0) {
            Log.e("AmazonIVS", "Error appending to audio device buffer")
        }

        // sampleRate is the sample rate of the stream; its declaration is not shown in this excerpt.
        // Floating-point division keeps fractional microseconds from being truncated.
        presentationTimeUs += numberOfSamples * 1_000_000.0 / sampleRate
        buffer.clear()
    }
}

- STEP 8: the AudioGraph passes the audio data to the IVSBroadcastSinkNode through the writeCaptureData function.

- STEP 9: the audio data is forwarded to the AudioDevice, from where it is broadcast to the Stage.
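The presentation timestamp passed to appendBuffer advances by the duration of each buffer. The sketch below isolates that bookkeeping, together with the buffer-size arithmetic for 16-bit interleaved audio. PresentationClock and pcm16BufferSizeBytes are hypothetical helpers written for this illustration; they are not part of the Switchboard SDK or the Amazon IVS SDK.

```kotlin
// Hypothetical helper that tracks the presentation time of a PCM stream.
class PresentationClock(private val sampleRate: Int) {
    private var elapsedUs = 0.0

    // Returns the timestamp for the current buffer, then advances the clock.
    fun next(numberOfFrames: Long): Long {
        val timestampUs = elapsedUs.toLong()
        elapsedUs += numberOfFrames * 1_000_000.0 / sampleRate
        return timestampUs
    }
}

// Bytes needed for one buffer of interleaved 16-bit samples.
fun pcm16BufferSizeBytes(frames: Int, channels: Int): Int = frames * channels * 2

fun main() {
    val clock = PresentationClock(48_000)
    println(clock.next(480)) // 0
    println(clock.next(480)) // 10000
    println(pcm16BufferSizeBytes(480, 2)) // 1920
}
```

Accumulating the elapsed time as a Double avoids the drift that per-buffer integer division would introduce at sample rates such as 44100 Hz.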

class KaraokeAppAudioEngine(val context: Context) {

    val audioGraph = AudioGraph()
    val audioPlayerNode = AudioPlayerNode()
    val mixerNode = MixerNode()
    val ivsSinkNode: IVSBroadcastSinkNode

    val audioEngine = AudioEngine(
        context = context,
        microphoneEnabled = true,
        performanceMode = PerformanceMode.LOW_LATENCY,
        micInputPreset = MicInputPreset.VoicePerformance
    )

    // STEP 10
    var audioDevice: AudioDevice? = null

    // STEP 11
    var deviceDiscovery = DeviceDiscovery(context)

    // STEP 12
    var publishStreams: ArrayList<LocalStageStream> = ArrayList()

    // STEP 13
    private var stage: Stage? = null

    // STEP 14
    private val stageStrategy = object : Stage.Strategy {

        // STEP 15
        override fun stageStreamsToPublishForParticipant(
            stage: Stage,
            participantInfo: ParticipantInfo
        ): List<LocalStageStream> {
            return publishStreams
        }

        // STEP 16
        override fun shouldPublishFromParticipant(
            stage: Stage,
            participantInfo: ParticipantInfo
        ): Boolean {
            return true
        }

        // STEP 17
        override fun shouldSubscribeToParticipant(
            stage: Stage,
            participantInfo: ParticipantInfo
        ): Stage.SubscribeType {
            return Stage.SubscribeType.AUDIO_ONLY
        }
    }

    init {
        // STEP 18
        stage = Stage(context, "MY TOKEN", stageStrategy)

        // STEP 19
        val sampleRate = when (audioEngine.sampleRate) {
            8000 -> BroadcastConfiguration.AudioSampleRate.RATE_8000
            16000 -> BroadcastConfiguration.AudioSampleRate.RATE_16000
            22050 -> BroadcastConfiguration.AudioSampleRate.RATE_22050
            44100 -> BroadcastConfiguration.AudioSampleRate.RATE_44100
            48000 -> BroadcastConfiguration.AudioSampleRate.RATE_48000
            else -> BroadcastConfiguration.AudioSampleRate.RATE_44100
        }

        // STEP 20
        audioDevice = deviceDiscovery.createAudioInputSource(1, sampleRate, AudioDevice.Format.INT16)

        // STEP 21
        val audioLocalStageStream = AudioLocalStageStream(audioDevice!!)

        // STEP 22
        publishStreams.add(audioLocalStageStream)

        ivsSinkNode = IVSBroadcastSinkNode(audioDevice!!)

        audioGraph.addNode(audioPlayerNode)
        audioGraph.addNode(mixerNode)
        audioGraph.addNode(ivsSinkNode)

        // The input node is available by default, we don't need to create one
        audioGraph.connect(audioGraph.inputNode, mixerNode)
        audioGraph.connect(audioPlayerNode, mixerNode)
        audioGraph.connect(mixerNode, ivsSinkNode)
    }
}

- STEP 10: declare an instance of the AudioDevice. Audio input sources must conform to this interface. We will initialize it in STEP 20.

- STEP 11: declare an instance of DeviceDiscovery. We use this class to create a custom audio input source in STEP 20.

- STEP 12: declare a list of LocalStageStream. The class represents the local audio stream, and it is used in Stage.Strategy to indicate to the SDK what stream to publish.

- STEP 13: declare an instance of Stage. This is the main interface to interact with the created session.

- STEP 14: define a Stage.Strategy. The Stage.Strategy interface provides a way for the host application to communicate the desired state of the stage to the SDK. Three functions need to be implemented: shouldSubscribeToParticipant, shouldPublishFromParticipant, and stageStreamsToPublishForParticipant.

- STEP 15: choosing streams to publish. When publishing, this is used to determine what audio and video streams should be published.

- STEP 16: publishing. Once connected to the stage, the SDK queries the host application to see if a particular participant should publish. This is invoked only on local participants that have permission to publish based on the provided token.

- STEP 17: subscribing to participants. When a remote participant joins the stage, the SDK queries the host application about the desired subscription state for that participant. In our application we only care about the audio.

- STEP 18: create an instance of a Stage by passing the required parameters. The Stage class is the main point of interaction between the host application and the SDK. It represents the stage itself and is used to join and leave the stage. Creating and joining a stage requires a valid, unexpired token string from the control plane (represented as token). Please note that we also have to create a Stage using the Amazon IVS console, and generate the needed participant tokens. We can also create a participant token programmatically using the AWS SDK for JavaScript.

- STEP 19: define the sample rate of the broadcast, based on the sample rate the audio engine is running at.

- STEP 20: create a custom audio input source, since we intend to generate and feed PCM audio data to the SDK manually. We pass the number of audio channels, sampling rate and sample format as parameters.

- STEP 21: create an AudioLocalStageStream instance, which represents the local audio stream.

- STEP 22: add the created AudioLocalStageStream instance to the publishStreams list.
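STEP 19 maps an integer sample rate to an enum value and falls back to 44.1 kHz for anything it does not recognize. The sketch below shows the same lookup-with-fallback pattern in isolation. SketchSampleRate is a hypothetical stand-in for BroadcastConfiguration.AudioSampleRate so that the example runs without the Amazon IVS SDK.

```kotlin
// Stand-in for the SDK's sample-rate enum; hypothetical, for illustration only.
enum class SketchSampleRate(val hz: Int) {
    RATE_8000(8_000), RATE_16000(16_000), RATE_22050(22_050),
    RATE_44100(44_100), RATE_48000(48_000)
}

// Looks up the matching enum entry and falls back to 44.1 kHz, as STEP 19 does.
fun toSketchSampleRate(engineSampleRate: Int): SketchSampleRate =
    SketchSampleRate.values().firstOrNull { it.hz == engineSampleRate }
        ?: SketchSampleRate.RATE_44100

fun main() {
    println(toSketchSampleRate(48_000)) // RATE_48000
    println(toSketchSampleRate(96_000)) // RATE_44100
}
```

Note that the fallback silently declares a rate that differs from the engine's actual rate, so it may be worth logging when the fallback branch is taken.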

class KaraokeAppAudioEngine(val context: Context) {

    // STEP 23
    fun startAudioEngine() {
        audioEngine.start(audioGraph)
    }

    // STEP 24
    fun stopAudioEngine() {
        audioEngine.stop()
    }

    // STEP 25
    fun play() {
        audioPlayerNode.play()
    }

    // STEP 26
    fun pause() {
        audioPlayerNode.pause()
    }

    // STEP 27
    fun isPlayingMusic() = audioPlayerNode.isPlaying

    // STEP 28
    fun loadAudioFile(context: Context, assetName: String) {
        audioPlayerNode.load(
            AssetLoader.load(context, assetName),
            Codec.createFromFileName(assetName)
        )
    }

    // STEP 29
    fun startStream() {
        stage?.join()
    }

    // STEP 30
    fun stopStream() {
        stage?.leave()
    }
}

- STEP 23: start the audio graph via the audio engine. The audio engine manages device-level audio input / output and channels the audio stream through the audio graph. This is usually called when the application is initialized.

- STEP 24: stop the audio engine.

- STEP 25: start playing the backing track.

- STEP 26: pause the backing track.

- STEP 27: check whether the audio player is playing.

- STEP 28: load an audio file located in the assets folder.

- STEP 29: start streaming the audio by joining the created stage.

- STEP 30: stop the stream by leaving the stage.

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <Button
        android:id="@+id/music_playback_button"
        android:layout_width="200dp"
        android:layout_height="wrap_content"
        android:text="Play music"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintRight_toRightOf="parent"
        app:layout_constraintBottom_toBottomOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

    <Button
        android:id="@+id/start_button"
        android:layout_width="200dp"
        android:layout_height="wrap_content"
        android:layout_gravity="center_horizontal"
        android:layout_marginTop="@dimen/general_margin"
        app:layout_constraintLeft_toLeftOf="@id/music_playback_button"
        app:layout_constraintRight_toRightOf="@id/music_playback_button"
        app:layout_constraintTop_toBottomOf="@id/music_playback_button"
        android:text="Start Streaming" />

</androidx.constraintlayout.widget.ConstraintLayout>

class KaraokeAppActivity : AppCompatActivity() {

    private lateinit var appAudioEngine: KaraokeAppAudioEngine
    private lateinit var binding: ActivityKaraokeBinding
    private var isStreaming = false

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        binding = ActivityKaraokeBinding.inflate(layoutInflater)
        // Attach the inflated layout to the activity
        setContentView(binding.root)

        appAudioEngine = KaraokeAppAudioEngine(this)
        appAudioEngine.loadAudioFile(this, "audioFile.mp3")

        binding.musicPlaybackButton.setOnClickListener {
            if (!appAudioEngine.isPlayingMusic()) {
                appAudioEngine.play()
                binding.musicPlaybackButton.text = "Pause music"
            } else {
                appAudioEngine.pause()
                binding.musicPlaybackButton.text = "Play music"
            }
        }

        binding.startButton.setOnClickListener {
            if (!isStreaming) {
                binding.startButton.text = "Stop Streaming"
                appAudioEngine.startStream()
                isStreaming = true
            } else {
                binding.startButton.text = "Start Streaming"
                appAudioEngine.stopStream()
                isStreaming = false
            }
        }

        appAudioEngine.startAudioEngine()
    }

    override fun onDestroy() {
        super.onDestroy()
        appAudioEngine.stopAudioEngine()
    }
}

dependencies {
    implementation(files("SwitchboardSuperpowered.aar"))
}

SuperpoweredExtension.initialize("ExampleLicenseKey-WillExpire-OnNextUpdate")

class KaraokeAppAudioEngine(val context: Context) {

    // STEP 31
    val autotuneNode = AutomaticVocalPitchCorrectionNode()
    val reverbNode = ReverbNode()
    val flangerNode = FlangerNode()

    init {
        // STEP 32
        audioGraph.addNode(reverbNode)
        audioGraph.addNode(flangerNode)
        audioGraph.addNode(autotuneNode)

        // STEP 33
        audioGraph.connect(audioGraph.inputNode, autotuneNode)
        audioGraph.connect(autotuneNode, reverbNode)
        audioGraph.connect(reverbNode, flangerNode)
        audioGraph.connect(flangerNode, mixerNode)
        audioGraph.connect(audioPlayerNode, mixerNode)
        audioGraph.connect(mixerNode, ivsSinkNode)
    }
}

- STEP 31: Declaring the audio effect nodes.

- STEP 32: Adding the nodes to the audio graph.

- STEP 33: Connecting the nodes in the audio graph. The audio effect nodes are processor nodes, described in section 1.3.2. These processor nodes accept audio input, apply the designated effect during processing, and then output the enhanced audio.
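The series connection in STEP 33 can be pictured as a chain of functions, where each processor receives the output of the previous one and a disabled processor passes its input through unchanged. The sketch below models that behaviour with plain Kotlin. SketchEffect and processChain are hypothetical names, the pass-through behaviour of a disabled node is an assumption, and the toy transforms stand in for real effects such as reverb or flanger.

```kotlin
// Hypothetical processor: a named per-sample transformation that can be bypassed.
class SketchEffect(
    val name: String,
    var isEnabled: Boolean = true,
    private val transform: (Float) -> Float
) {
    fun process(input: FloatArray): FloatArray =
        if (isEnabled) FloatArray(input.size) { transform(input[it]) } else input
}

// Runs the buffer through every effect in order, like a series connection.
fun processChain(effects: List<SketchEffect>, input: FloatArray): FloatArray =
    effects.fold(input) { buffer, effect -> effect.process(buffer) }

fun main() {
    val halve = SketchEffect("halve") { it * 0.5f }
    val invert = SketchEffect("invert") { -it }
    val chain = listOf(halve, invert)
    println(processChain(chain, floatArrayOf(1.0f, -0.5f)).joinToString()) // -0.5, 0.25
    invert.isEnabled = false
    println(processChain(chain, floatArrayOf(1.0f, -0.5f)).joinToString()) // 0.5, -0.25
}
```

Because the effects run in series, their order matters: pitch correction before reverb, as in STEP 33, corrects the dry voice rather than the reverberated signal.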

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <Button
        android:id="@+id/music_playback_button"
        android:layout_width="200dp"
        android:layout_height="wrap_content"
        android:layout_marginTop="250dp"
        android:text="Play music"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintRight_toRightOf="parent"
        app:layout_constraintTop_toTopOf="parent" />

    <Button
        android:id="@+id/start_button"
        android:layout_width="200dp"
        android:layout_height="wrap_content"
        android:layout_gravity="center_horizontal"
        android:layout_marginTop="20dp"
        android:text="Start Streaming"
        app:layout_constraintLeft_toLeftOf="@id/music_playback_button"
        app:layout_constraintRight_toRightOf="@id/music_playback_button"
        app:layout_constraintTop_toBottomOf="@id/music_playback_button" />

    <TextView
        android:id="@+id/effects_label"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_marginTop="20dp"
        android:paddingLeft="10dp"
        android:text="Voice Effects"
        android:textSize="18sp"
        android:textStyle="bold"
        app:layout_constraintLeft_toLeftOf="parent"
        app:layout_constraintTop_toBottomOf="@id/start_button" />

    <LinearLayout
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_marginTop="15dp"
        android:gravity="center"
        android:orientation="horizontal"
        android:paddingHorizontal="15dp"
        android:paddingRight="5dp"
        app:layout_constraintTop_toBottomOf="@id/effects_label">

        <Button
            android:id="@+id/autotune_button"
            android:layout_width="100dp"
            android:layout_height="30dp"
            android:layout_weight="1"
            android:backgroundTint="#D3D3D3"
            android:insetTop="0dp"
            android:textStyle="bold"
            android:insetBottom="0dp"
            android:text="Auto Tune"
            android:textColor="@color/black"
            android:textSize="12sp" />

        <Button
            android:id="@+id/reverb_button"
            android:layout_width="100dp"
            android:layout_height="30dp"
            android:layout_marginLeft="5dp"
            android:textStyle="bold"
            android:layout_weight="1"
            android:backgroundTint="#D3D3D3"
            android:insetTop="0dp"
            android:insetBottom="0dp"
            android:text="Reverb"
            android:textColor="@color/black"
            android:textSize="12sp" />

        <Button
            android:id="@+id/flanager_button"
            android:layout_width="100dp"
            android:layout_height="30dp"
            android:layout_marginLeft="5dp"
            android:layout_weight="1"
            android:backgroundTint="#D3D3D3"
            android:insetTop="0dp"
            android:insetBottom="0dp"
            android:text="Flanger"
            android:textColor="@color/black"
            android:textStyle="bold"
            android:textSize="12sp" />

    </LinearLayout>

</androidx.constraintlayout.widget.ConstraintLayout>

class KaraokeAppActivity : AppCompatActivity() {

    private lateinit var appAudioEngine: KaraokeAppAudioEngine
    private lateinit var binding: ActivityKaraokeBinding
    private var isStreaming = false

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        binding = ActivityKaraokeBinding.inflate(layoutInflater)
        // Attach the inflated layout to the activity
        setContentView(binding.root)

        appAudioEngine = KaraokeAppAudioEngine(this)
        appAudioEngine.loadAudioFile(this, "audioFile.mp3")

        binding.musicPlaybackButton.setOnClickListener {
            if (!appAudioEngine.isPlayingMusic()) {
                appAudioEngine.play()
                binding.musicPlaybackButton.text = "Pause music"
            } else {
                appAudioEngine.pause()
                binding.musicPlaybackButton.text = "Play music"
            }
        }

        binding.startButton.setOnClickListener {
            if (!isStreaming) {
                binding.startButton.text = "Stop Streaming"
                appAudioEngine.startStream()
                isStreaming = true
            } else {
                binding.startButton.text = "Start Streaming"
                appAudioEngine.stopStream()
                isStreaming = false
            }
        }

        // STEP 34
        binding.autotuneButton.setOnClickListener {
            appAudioEngine.autotuneNode.isEnabled = !appAudioEngine.autotuneNode.isEnabled
        }

        binding.reverbButton.setOnClickListener {
            appAudioEngine.reverbNode.isEnabled = !appAudioEngine.reverbNode.isEnabled
        }

        binding.flanagerButton.setOnClickListener {
            appAudioEngine.flangerNode.isEnabled = !appAudioEngine.flangerNode.isEnabled
        }

        appAudioEngine.startAudioEngine()
    }

    override fun onDestroy() {
        super.onDestroy()
        appAudioEngine.stopAudioEngine()
    }
}

- STEP 34: Associate the audio effects toggle buttons with their respective effects. Pressing the button activates the effect, and pressing it again deactivates it.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.