Image Text Validation using Amazon Rekognition and Bedrock

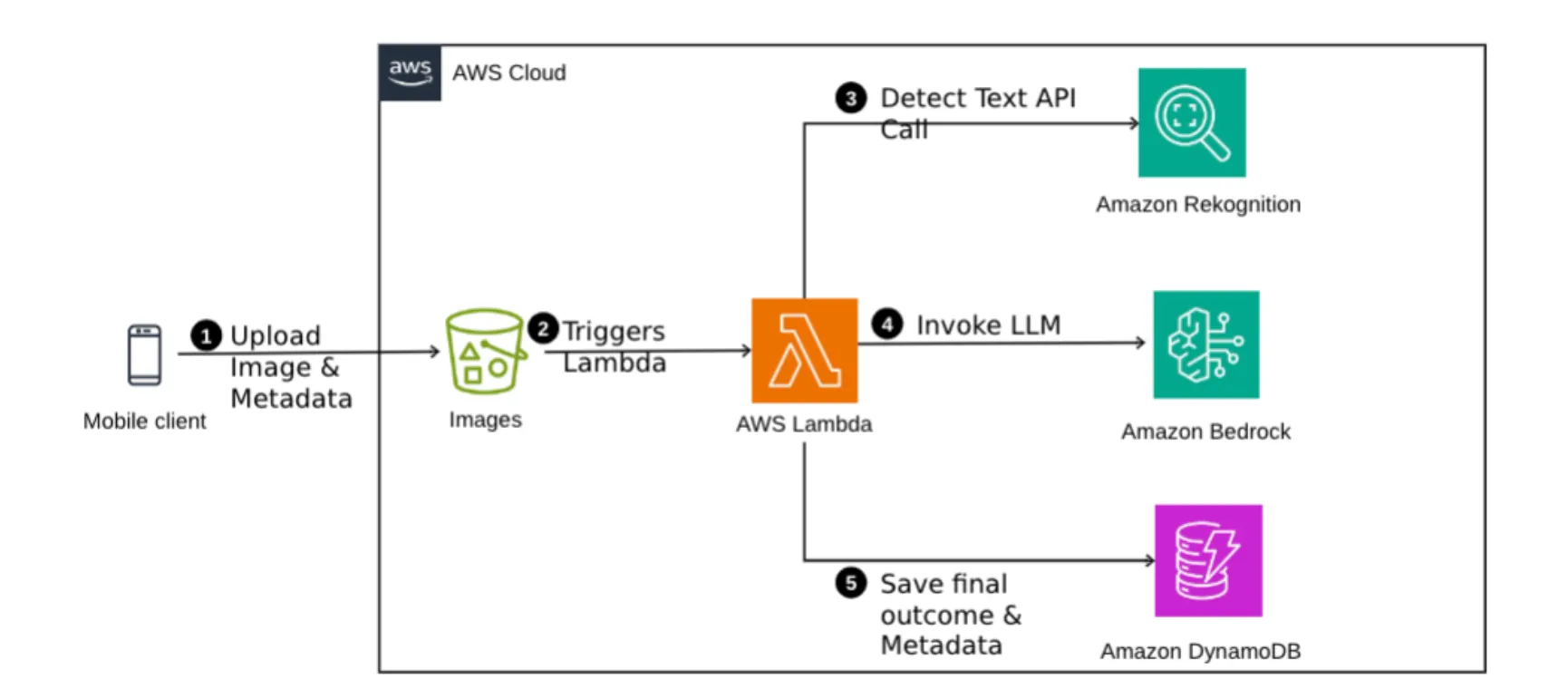

This post describes a serverless solution that validates text found in images, built with Amazon Rekognition and Amazon Bedrock. The flow is as follows:

- A mobile client app uploads the image, along with metadata (restaurant name, driver ID, driver name, and the timestamp when the picture was taken), to an S3 bucket.

- The picture upload triggers an event, which in turn invokes a Lambda function.

- The Lambda function initiates the orchestration flow, first invoking the detectText API in Amazon Rekognition.

- The API response contains the text, in this case the restaurant's hours of operation.

- Next, the Lambda function invokes an LLM, passing it the text extracted by Rekognition and the metadata containing the time when the picture was taken.

- The LLM processes the information provided in the prompt and responds with whether the restaurant is open or closed, along with its reasoning.

- Finally, the LLM response, along with the metadata, is stored in an Amazon DynamoDB table for analysis.
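The steps above can be sketched as a single orchestration function. This is a minimal sketch under stated assumptions, not the code from the original solution: `process_upload`, `extract_lines`, the `judge` callable, the table name, and the item attribute names are all hypothetical, and it assumes the metadata is attached to the image as S3 user-defined object metadata. The clients are passed in, so the same function can run against boto3 clients in Lambda or against stand-ins in a test.

```python
import urllib.parse


def extract_lines(detect_text_response):
    """Return the LINE-level strings from a Rekognition DetectText response."""
    return [
        detection["DetectedText"]
        for detection in detect_text_response.get("TextDetections", [])
        if detection.get("Type") == "LINE"
    ]


def process_upload(event, s3, rekognition, judge, dynamodb, table_name):
    """Run the flow for the first record of an S3 upload event.

    `judge` is a hypothetical callable (text, metadata) -> str that wraps
    the LLM call; `table_name` and the attribute names are illustrative.
    """
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    # Object keys arrive URL-encoded in S3 event notifications.
    key = urllib.parse.unquote_plus(record["object"]["key"])

    # Assumption: the app attaches metadata as S3 user-defined metadata.
    metadata = s3.head_object(Bucket=bucket, Key=key).get("Metadata", {})

    # Ask Rekognition to read the text directly from the uploaded object.
    response = rekognition.detect_text(
        Image={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    hours_text = " ".join(extract_lines(response))

    # Hand the extracted text and the metadata to the LLM wrapper.
    llm_response = judge(hours_text, metadata)

    # Store metadata first so the fixed attributes below cannot be overwritten.
    item = {name: {"S": str(value)} for name, value in metadata.items()}
    item["image_key"] = {"S": key}
    item["extracted_text"] = {"S": hours_text}
    item["llm_response"] = {"S": llm_response}
    dynamodb.put_item(TableName=table_name, Item=item)
    return item
```

In a Lambda handler, the clients would typically come from `boto3.client("s3")`, `boto3.client("rekognition")`, and `boto3.client("dynamodb")`. Keeping only the `LINE` detections avoids repeating each word, since Rekognition returns both lines and their individual words.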

See the amazon-bedrock-samples git repository for the accompanying sample.

Human: Current day is MONDAY and time is 8:00 AM. Take into account the current day and time and, based on the following text, tell if the restaurant is Closed or Not Closed. Show your reasoning and respond with Closed or Not Closed. <text>Hours of Operation MON-FRI 10AM - 8PM SAT-SUN 10AM - 1PM</text>

A: The current day is Monday and the current time is 8:00 AM. Since it is Monday and the restaurant is open 10AM - 8PM on weekdays, the restaurant is closed at the current time of 8:00 AM.
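A prompt of this shape could be assembled from the extracted text and the capture time roughly as follows. This is a sketch, not the original implementation: `format_clock`, `build_prompt`, and `ask_model` are hypothetical names, the model ID is left as a parameter because the post does not name a model, and the call uses the Bedrock Converse API, which carries the roles in the message structure, so the `Human:` and `Assistant:` markers are omitted here.

```python
from datetime import datetime

DAYS = ["MONDAY", "TUESDAY", "WEDNESDAY", "THURSDAY",
        "FRIDAY", "SATURDAY", "SUNDAY"]


def format_clock(moment):
    """Format a datetime as a 12-hour clock string such as '8:00 AM'."""
    hour = moment.hour % 12 or 12  # 0 and 12 both display as 12
    suffix = "AM" if moment.hour < 12 else "PM"
    return f"{hour}:{moment.minute:02d} {suffix}"


def build_prompt(hours_text, taken_at):
    """Combine the capture time and the extracted text into one prompt."""
    day = DAYS[taken_at.weekday()]  # weekday() returns 0 for Monday
    return (
        f"Current day is {day} and time is {format_clock(taken_at)}. "
        "Take into account the current day and time and, based on the "
        "following text, tell if the restaurant is Closed or Not Closed. "
        "Show your reasoning and respond with Closed or Not Closed. "
        f"<text>{hours_text}</text>"
    )


def ask_model(bedrock_runtime, model_id, prompt):
    """Send the prompt through the Converse API and return the reply text.

    `bedrock_runtime` is expected to be boto3.client("bedrock-runtime");
    `model_id` is whichever model the account has access to (an assumption).
    """
    response = bedrock_runtime.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 300, "temperature": 0},
    )
    return response["output"]["message"]["content"][0]["text"]
```

Calling `build_prompt` with the hours text from the example and `datetime(2024, 1, 1, 8, 0)`, which falls on a Monday, yields a prompt that opens with "Current day is MONDAY and time is 8:00 AM." A temperature of 0 is chosen here only to make the verdict more repeatable.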