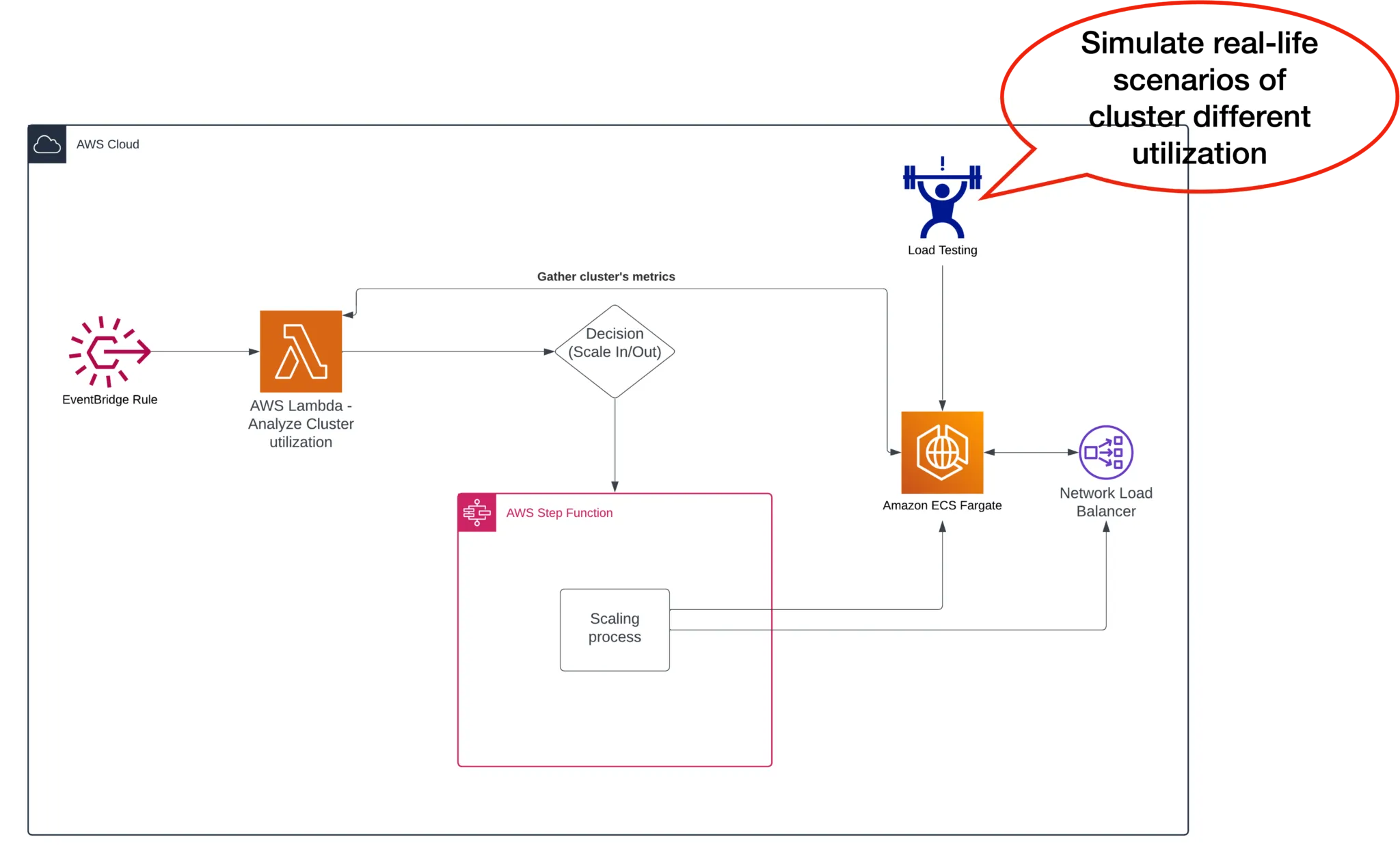

Autoscaling solution for an Amazon ECS cluster

Explore an autoscaling solution for Amazon ECS: strategies and best practices for adapting a cluster seamlessly to varying workloads.

What is challenging when it comes to autoscaling?

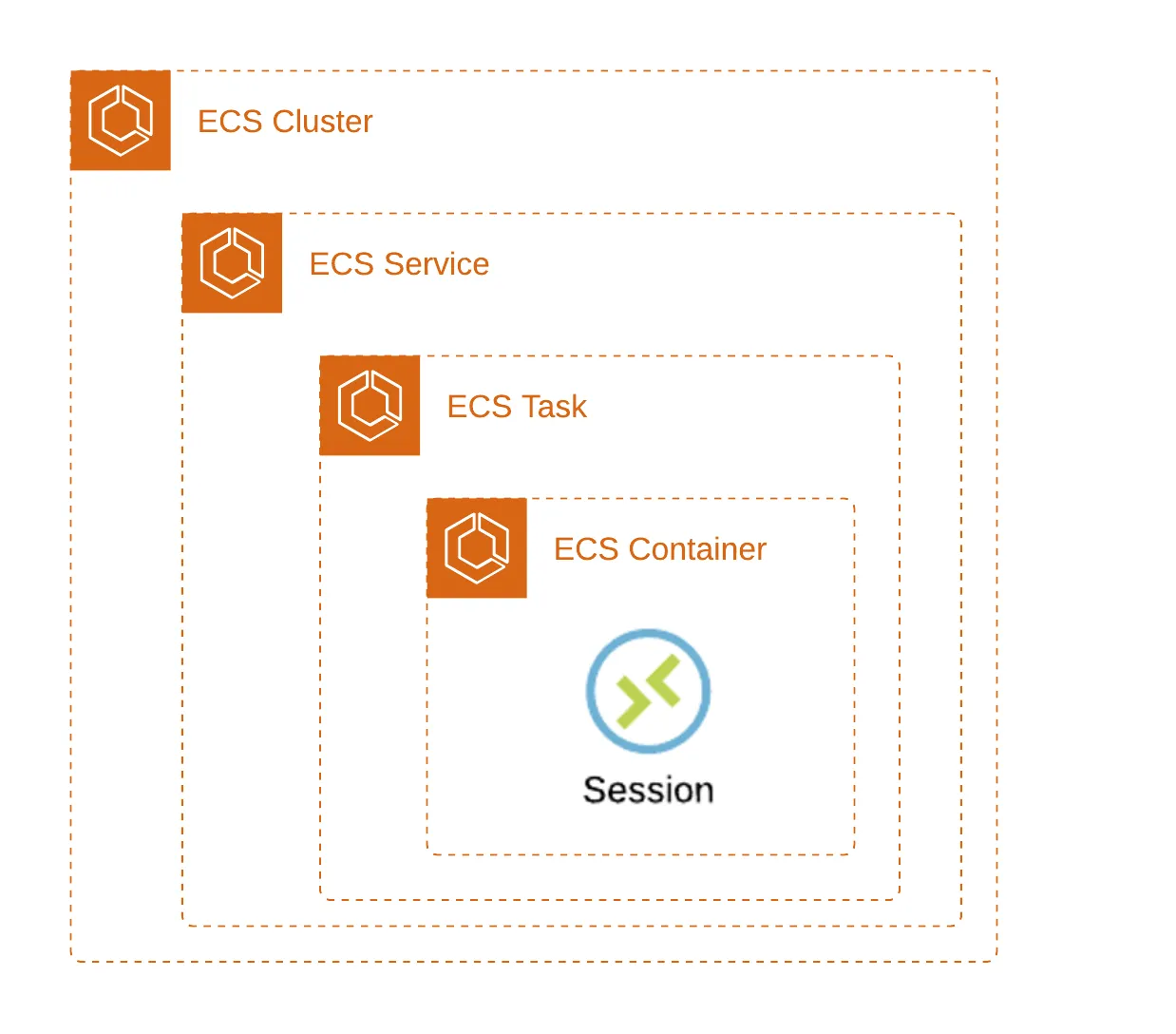

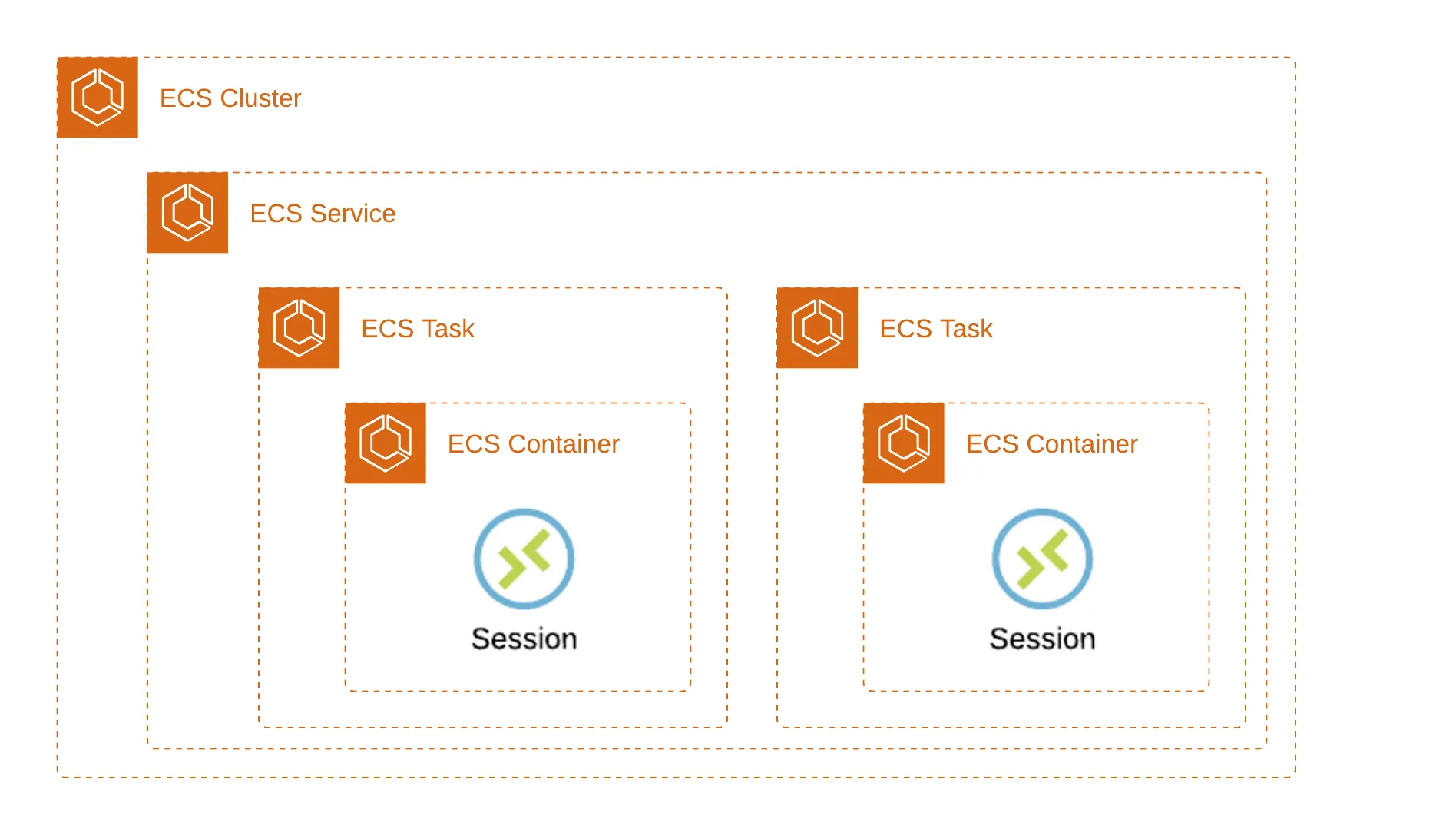

ECS Fargate cluster under the hood

So… how do we autoscale this beast?

Let’s collect the requirements for the autoscaling solution

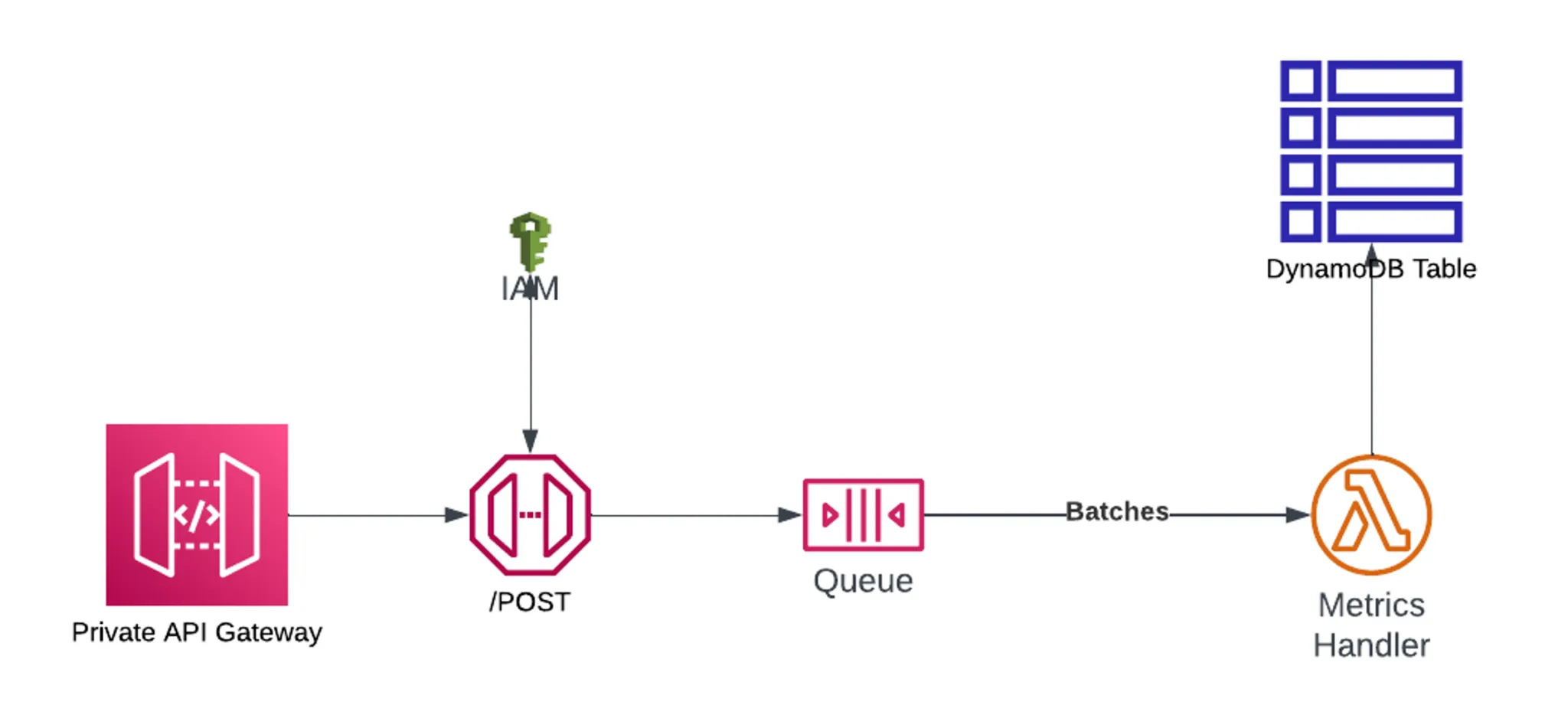

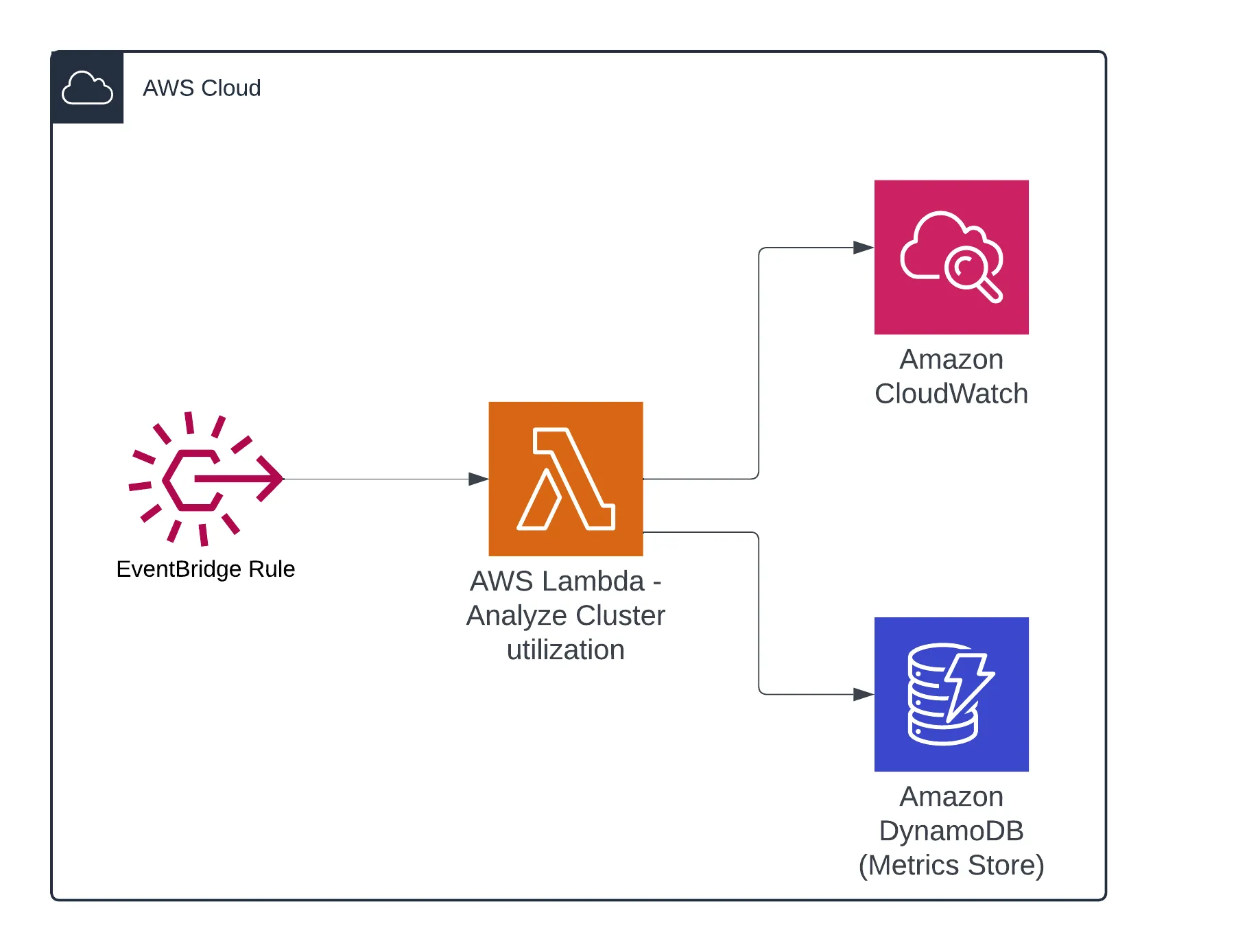

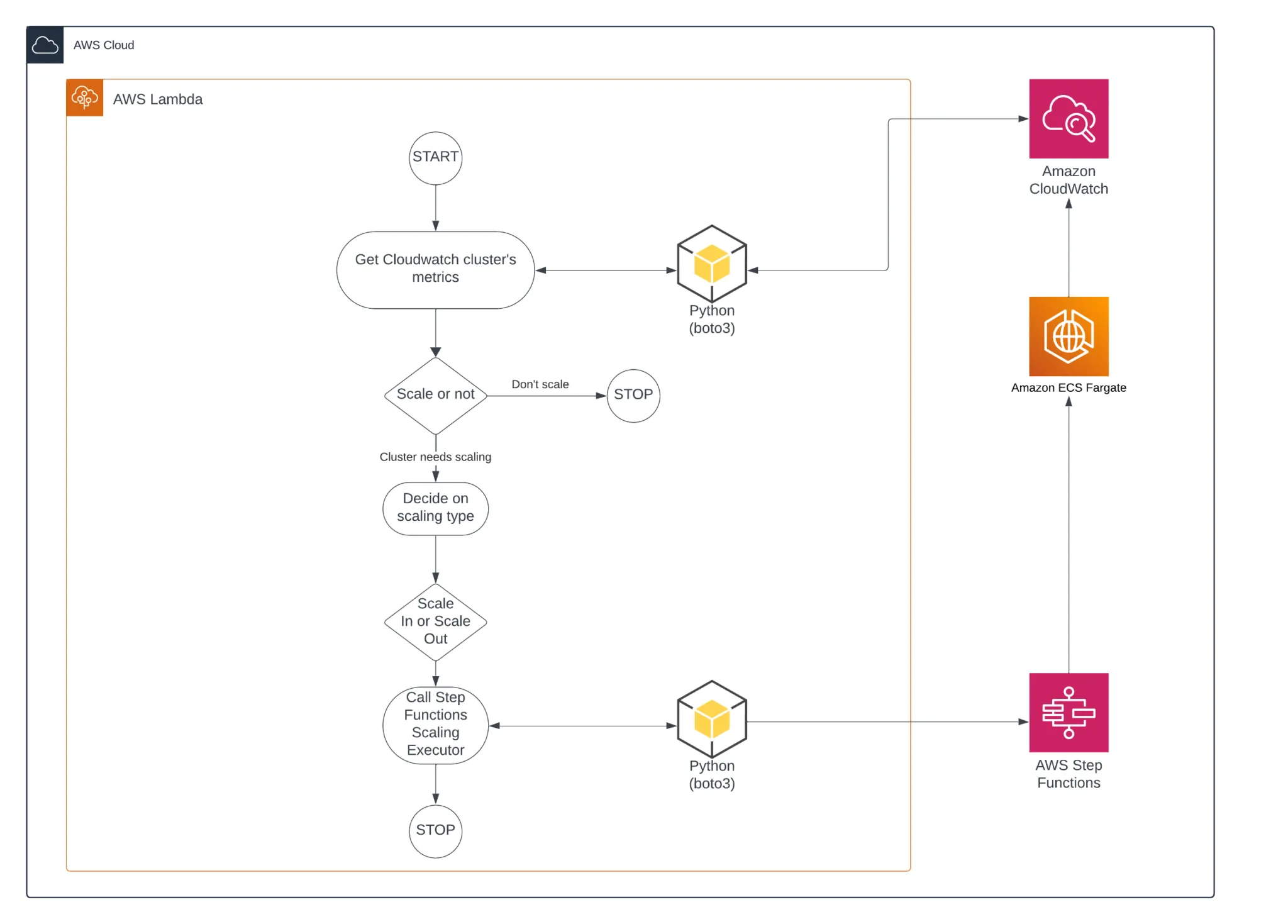

Collecting information (metrics) about the sessions active in a specific task

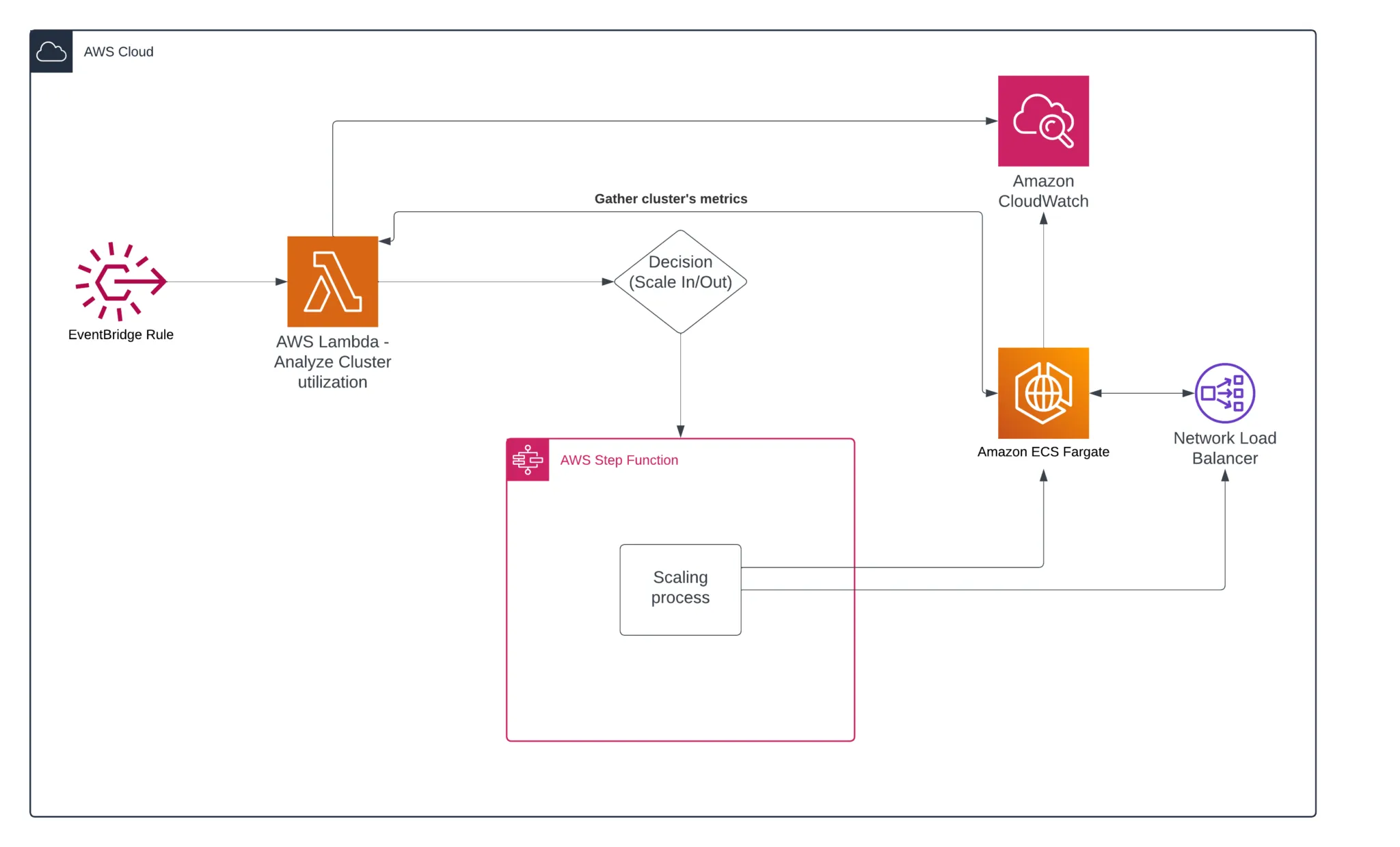

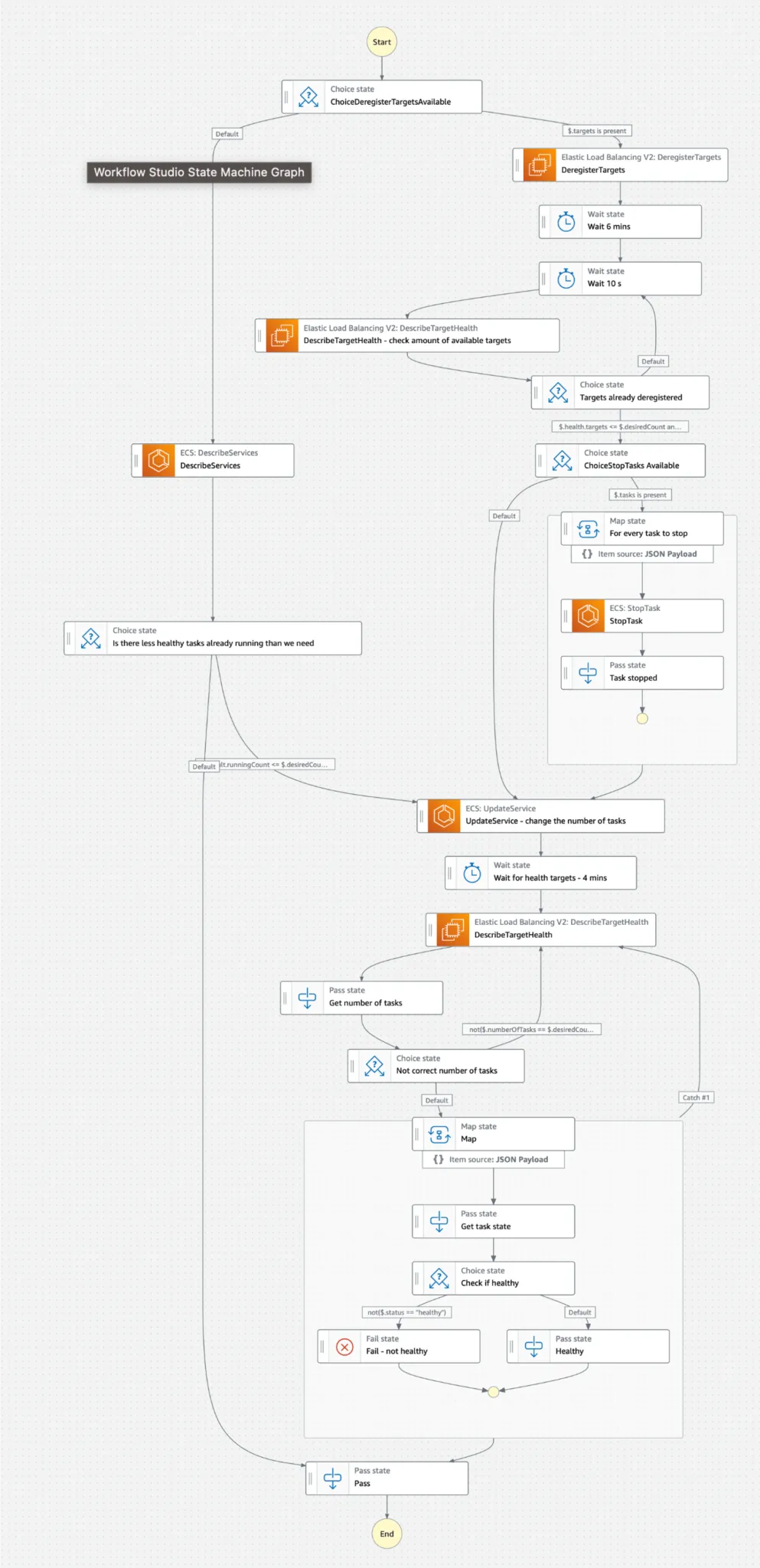

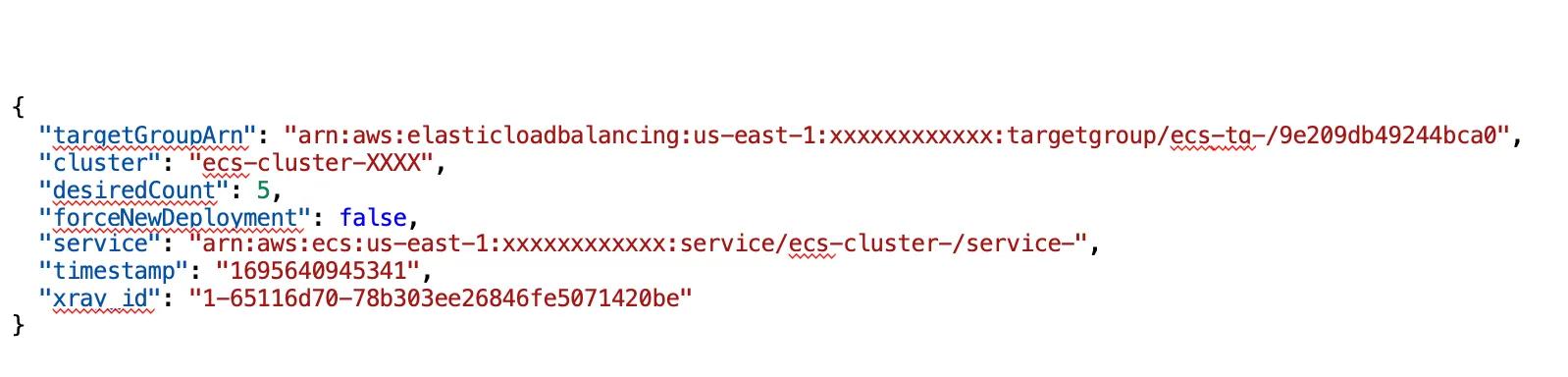
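Once every task reports how many sessions it is currently serving, the scaling decision can be made per task instead of per service. The sketch below only illustrates that idea under hypothetical assumptions that are not taken from the original solution: a fixed session capacity per task (`capacity_per_task`), a lower bound of `min_tasks`, and a rule that only tasks with zero active sessions are safe to remove. The function name `plan_scale_in` is also hypothetical.

```python
import math
from typing import Dict, List


def plan_scale_in(sessions_per_task: Dict[str, int], capacity_per_task: int,
                  min_tasks: int = 1) -> List[str]:
    """Return the ARNs of tasks that can be removed (hypothetical rule set).

    Assumptions (illustrative only):
    - each task can serve at most `capacity_per_task` sessions,
    - the cluster never shrinks below `min_tasks`,
    - only tasks with zero active sessions may be removed.
    """
    if capacity_per_task <= 0:
        raise ValueError("capacity_per_task must be positive")

    total_sessions = sum(sessions_per_task.values())
    # Smallest number of tasks that can still hold all active sessions.
    needed = max(min_tasks, math.ceil(total_sessions / capacity_per_task))
    surplus = len(sessions_per_task) - needed
    if surplus <= 0:
        return []

    # Only idle tasks are candidates; sorted for a deterministic choice.
    idle = sorted(arn for arn, n in sessions_per_task.items() if n == 0)
    return idle[:surplus]
```

Because only idle tasks are picked, a single evaluation may remove fewer tasks than the computed surplus; the remaining tasks become candidates on a later evaluation once their sessions end.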

AWS Step Functions as an autoscaling executor

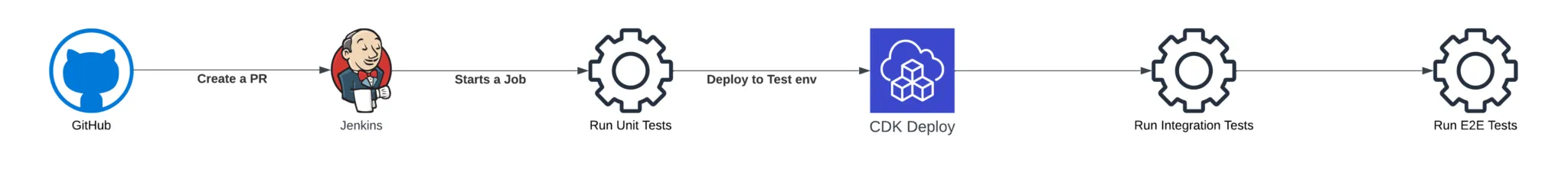

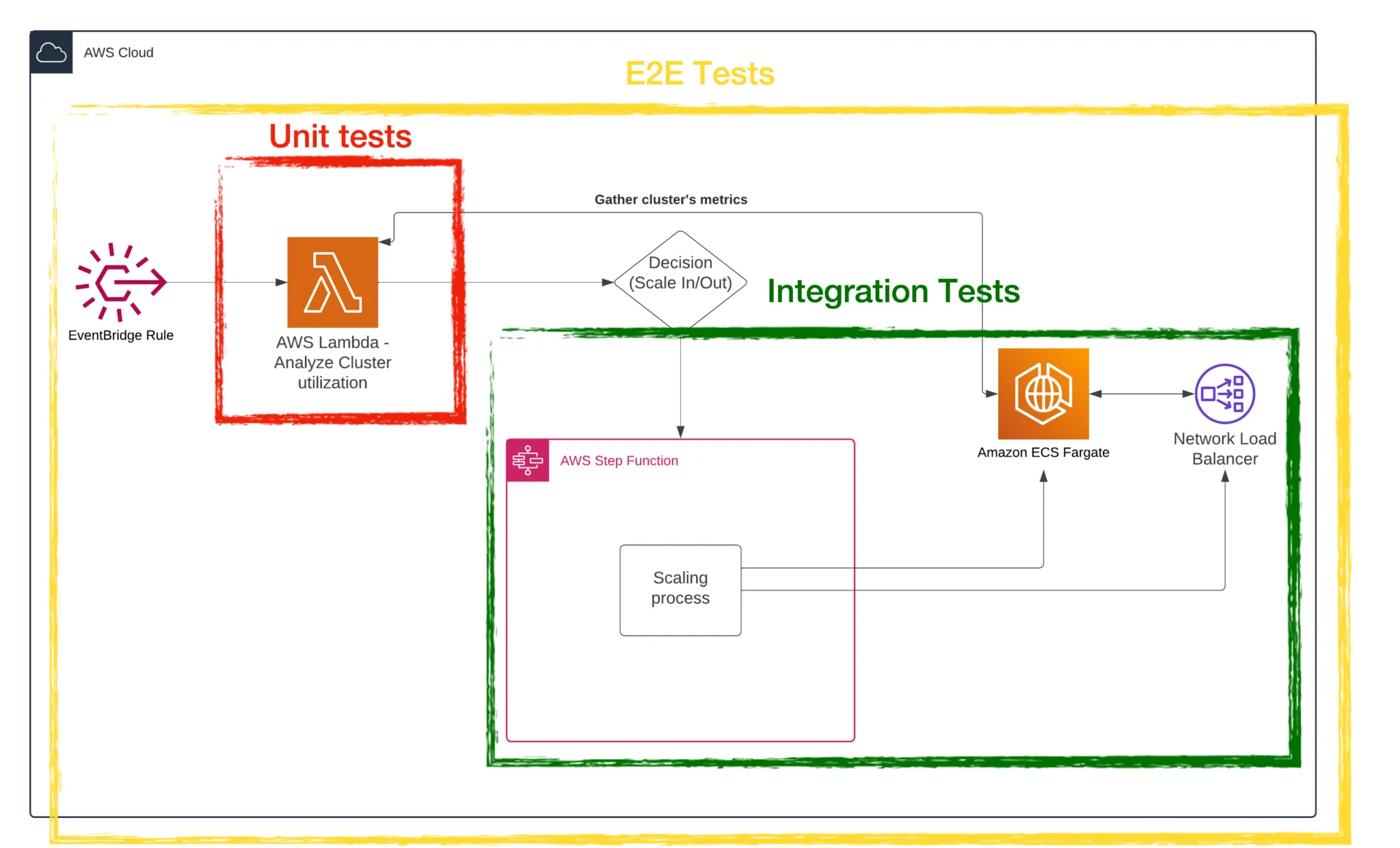

Assignment of infrastructure elements to test types

Unit tests of the Lambda function

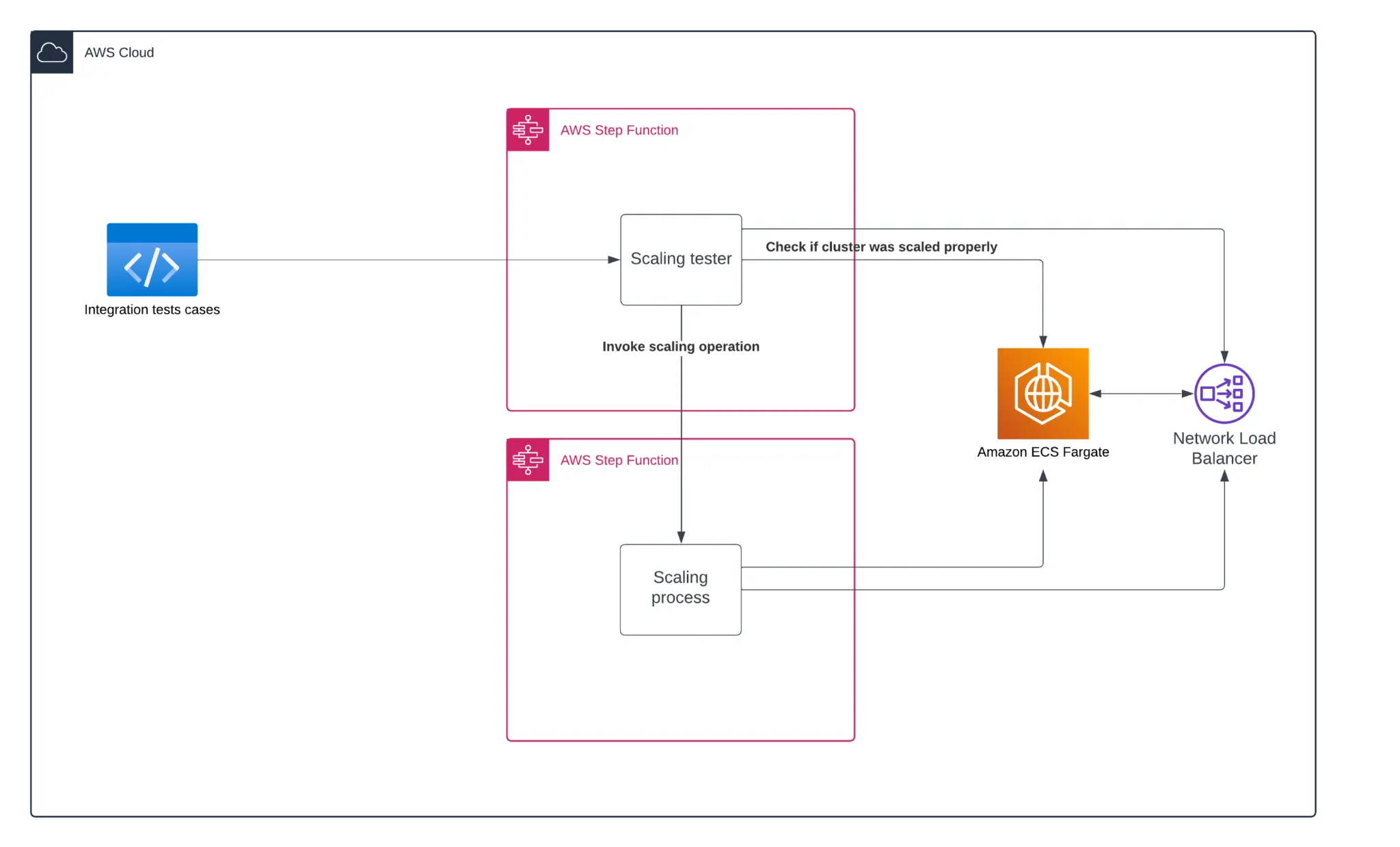

Integration tests of the autoscaling infrastructure

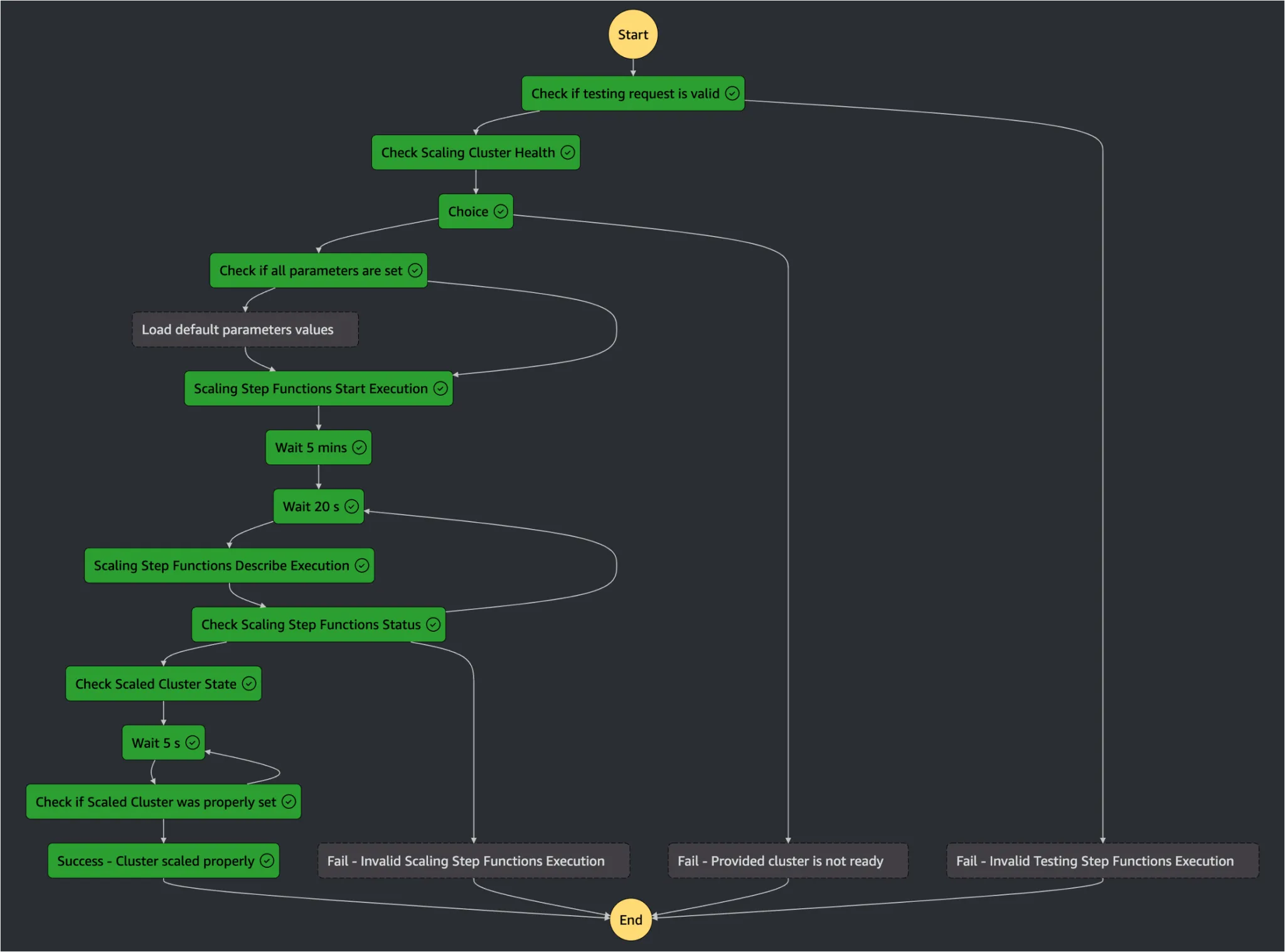

E2E tests of the autoscaling infrastructure

CI/CD and the autoscaling solution

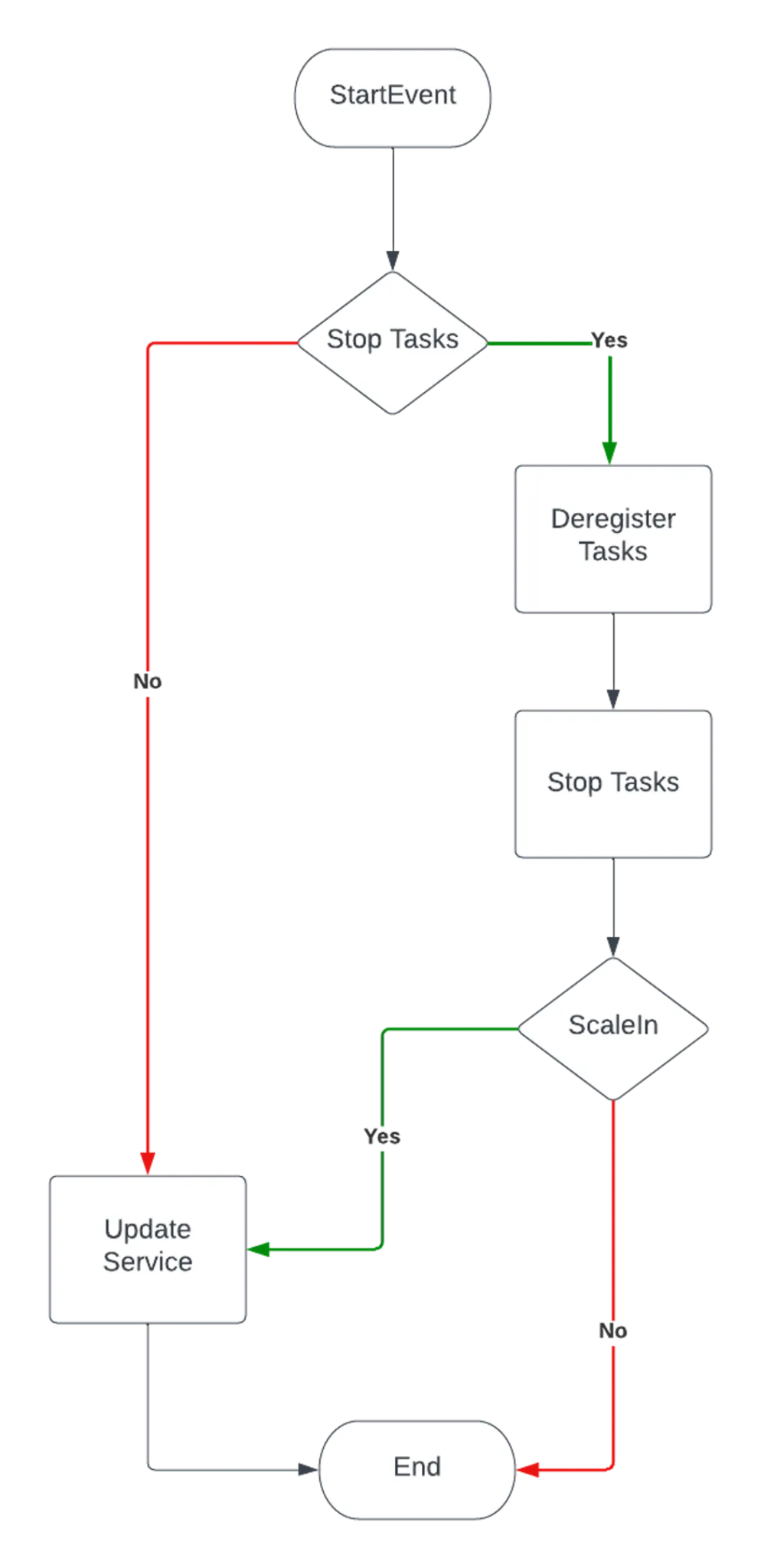

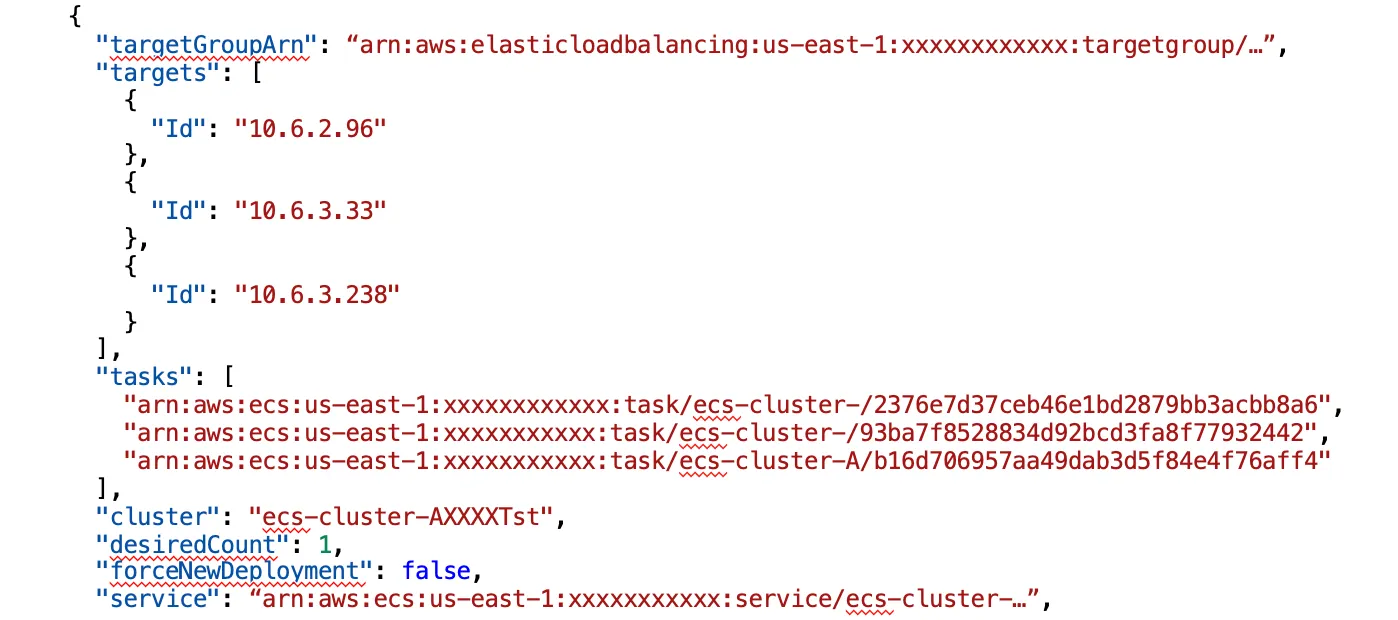

- Deregister the specific task from the load balancer’s targets,

- Wait until it has been deregistered from the load balancer,

- Stop this task in the ECS service,

- Remove it from the ECS service.
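The four scale-in steps above can be sketched in Python with boto3-style clients. This is a minimal sketch, not the original implementation: `TaskTarget` and `scale_in_task` are hypothetical names, the target group is assumed to use IP targets (as Fargate tasks in `awsvpc` mode do), and “remove it from the service” is assumed to mean lowering the service’s `desiredCount`. The clients are passed in (for example `boto3.client("elbv2")` and `boto3.client("ecs")`) so the function can be exercised with fakes.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class TaskTarget:
    """Hypothetical description of one Fargate task behind a target group."""
    task_arn: str
    ip: str    # assumption: IP target type, so the task registers by private IP
    port: int


def scale_in_task(elbv2: Any, ecs: Any, cluster: str, service: str,
                  target_group_arn: str, task: TaskTarget) -> int:
    """Remove one specific task from the service; return the new desired count.

    `elbv2` and `ecs` are expected to behave like boto3.client("elbv2")
    and boto3.client("ecs").
    """
    targets = [{"Id": task.ip, "Port": task.port}]

    # 1. Deregister the task from the load balancer's target group.
    elbv2.deregister_targets(TargetGroupArn=target_group_arn, Targets=targets)

    # 2. Block until the target is reported as deregistered
    #    (i.e. after connection draining has finished).
    elbv2.get_waiter("target_deregistered").wait(
        TargetGroupArn=target_group_arn, Targets=targets)

    # 3. Stop exactly this task.
    ecs.stop_task(cluster=cluster, task=task.task_arn,
                  reason="autoscaling: scale-in")

    # 4. Assumption: "remove it from the service" = lower desiredCount by one,
    #    so the service scheduler does not keep a replacement running.
    service_desc = ecs.describe_services(cluster=cluster, services=[service])
    current = service_desc["services"][0]["desiredCount"]
    new_count = max(current - 1, 0)
    ecs.update_service(cluster=cluster, service=service, desiredCount=new_count)
    return new_count
```

Since the ECS service scheduler replaces stopped tasks whenever the running count falls below `desiredCount`, a replacement task may start in the short window between steps 3 and 4.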

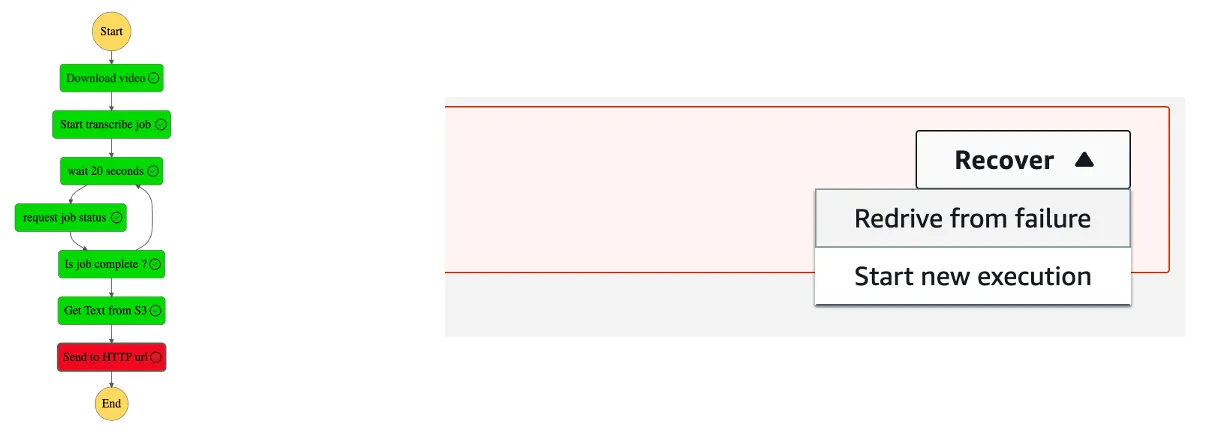

Everything Fails All the Time

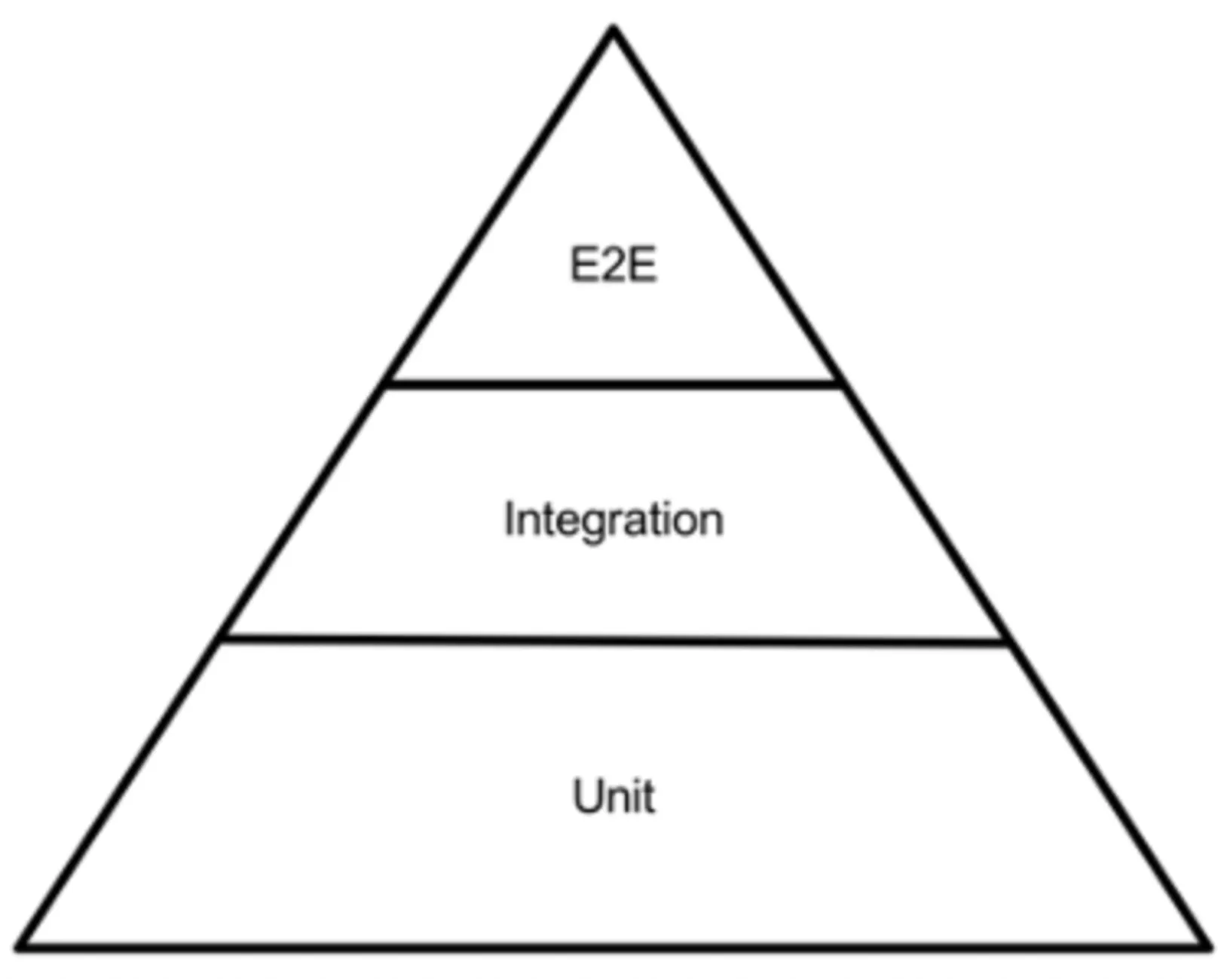

- Unit tests, which test the smallest possible fragments of the software (especially the code) under simulated conditions,

- Integration tests, which check how individual infrastructure elements behave when interacting with one another,

- E2E tests, which check the behaviour of the entire service under conditions as close to real life as possible.
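As an illustration of a unit test under simulated conditions, the sketch below tests a small helper of the scaling Lambda function against a `unittest.mock.Mock` that stands in for the ECS client, so no AWS call is made. The helper `desired_count` and the test class are hypothetical names chosen for this example.

```python
import unittest
from unittest.mock import Mock


def desired_count(ecs, cluster: str, service: str) -> int:
    """Hypothetical Lambda helper: read the service's current desiredCount."""
    resp = ecs.describe_services(cluster=cluster, services=[service])
    return resp["services"][0]["desiredCount"]


class DesiredCountTest(unittest.TestCase):
    def test_reads_desired_count_from_simulated_ecs(self):
        # Simulated ECS client: returns a canned response, never calls AWS.
        ecs = Mock()
        ecs.describe_services.return_value = {"services": [{"desiredCount": 4}]}

        self.assertEqual(desired_count(ecs, "demo-cluster", "demo-service"), 4)
        # The helper must ask for exactly the service it was given.
        ecs.describe_services.assert_called_once_with(
            cluster="demo-cluster", services=["demo-service"])
```

Such a file can be run with `python -m unittest`; the same pattern (inject the client, replace it with a mock) applies to every function that talks to AWS.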

An E2E test run goes through the following steps:

- Disabling the cluster state analyzer,

- Preparing the cluster by launching the appropriate number of tasks,

- Creating a test scenario (e.g. scale the cluster down to 1 task),

- Sending the scenario to the Testing Step Function, which tests the cluster’s behaviour,

- Waiting for the result.
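The E2E procedure above could be driven by a small script like the following. It is a hedged sketch with several hypothetical parts: it assumes the cluster state analyzer is triggered by an EventBridge rule (so it can be disabled with `disable_rule`), and the scenario format and the name `run_e2e_scenario` are invented for illustration. The client calls themselves (`disable_rule`, `update_service`, the `services_stable` waiter, `start_execution`, `describe_execution`) are existing boto3 operations.

```python
import json
import time
from typing import Any, Dict


def run_e2e_scenario(events: Any, ecs: Any, sfn: Any, *, analyzer_rule: str,
                     cluster: str, service: str, initial_tasks: int,
                     scenario: Dict[str, Any], state_machine_arn: str,
                     poll_seconds: float = 10.0,
                     timeout_seconds: float = 900.0) -> str:
    """Run one E2E scenario and return the final Step Functions status.

    Clients are expected to behave like boto3.client("events"),
    boto3.client("ecs") and boto3.client("stepfunctions").
    """
    # 1. Disable the cluster state analyzer.
    #    ASSUMPTION: the analyzer is triggered by an EventBridge rule.
    events.disable_rule(Name=analyzer_rule)

    # 2. Prepare the cluster: launch the required number of tasks and
    #    wait until the service is stable.
    ecs.update_service(cluster=cluster, service=service,
                       desiredCount=initial_tasks)
    ecs.get_waiter("services_stable").wait(cluster=cluster, services=[service])

    # 3-4. Send the scenario (hypothetical format, e.g.
    #      {"action": "scale_to", "tasks": 1}) to the Testing Step Function.
    execution_arn = sfn.start_execution(
        stateMachineArn=state_machine_arn,
        input=json.dumps(scenario))["executionArn"]

    # 5. Poll until the execution is no longer RUNNING, or give up.
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = sfn.describe_execution(executionArn=execution_arn)["status"]
        if status != "RUNNING":
            return status  # e.g. "SUCCEEDED", "FAILED", "TIMED_OUT", "ABORTED"
        if time.monotonic() >= deadline:
            raise TimeoutError(
                f"{execution_arn} still RUNNING after {timeout_seconds}s")
        time.sleep(poll_seconds)
```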