Diagrams to CDK/Terraform using Claude 3 on Amazon Bedrock

Use AI to generate infrastructure as code from architecture diagrams and images

Update #1: Updated content to incorporate feedback.

- An AWS account ready with CDK bootstrap.

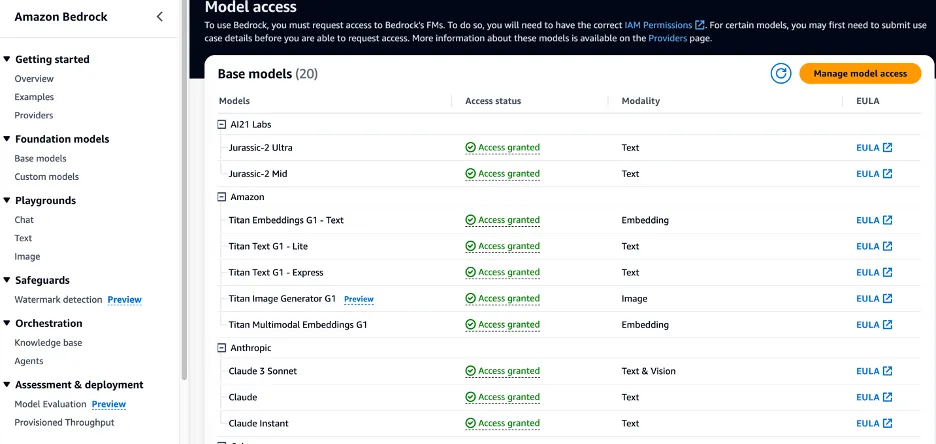

- Amazon Bedrock with model access enabled for Claude 3 Sonnet.

Create `claude_vision.py` and copy the code below.

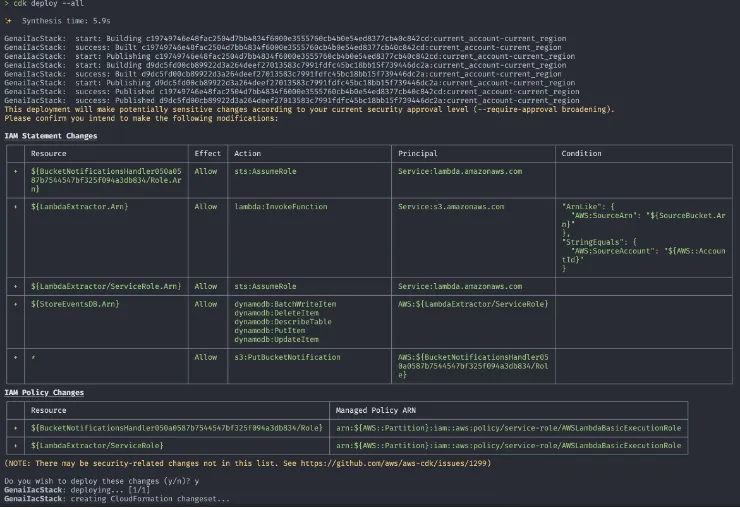
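For orientation, a script like `claude_vision.py` could look roughly like the following. This is a minimal sketch, not the code from this post. It assumes the boto3 `bedrock-runtime` client, the Anthropic Messages request format on Bedrock, and the model ID `anthropic.claude-3-sonnet-20240229-v1:0`, which you should confirm for your region. The function names, the default region, and the PNG media type are hypothetical choices made for illustration.

```python
import base64
import json

# Assumed model ID for Claude 3 Sonnet on Bedrock; confirm it for your account and region.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_request(image_bytes, prompt, media_type="image/png", max_tokens=4096):
    """Build a Messages API request body holding one image and one text prompt."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "image",
                        "source": {
                            "type": "base64",
                            "media_type": media_type,
                            "data": encoded,
                        },
                    },
                    {"type": "text", "text": prompt},
                ],
            }
        ],
    }


def diagram_to_code(image_path, prompt, region="us-east-1"):
    """Send a diagram image to Claude 3 Sonnet and return the text of its reply."""
    import boto3  # imported lazily so build_request can be used without AWS set up

    with open(image_path, "rb") as f:
        body = build_request(f.read(), prompt)

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(modelId=MODEL_ID, body=json.dumps(body))
    payload = json.loads(response["body"].read())
    # The reply is a list of content blocks; the first one carries the generated text.
    return payload["content"][0]["text"]
```

With AWS credentials configured, a call such as `diagram_to_code("architecture.png", "Generate AWS CDK code for this architecture diagram.")` would return the model's reply as a string, where the file name and prompt are placeholders. Keeping the request building separate from the network call makes the payload easy to inspect before spending tokens on it.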

Create a `lambda` folder at the root and add a sample `hello.js` file with the sample code below.

Run `cdk synth` and `cdk deploy --all`. Voila!

- Run `cdk destroy`.

- Disable Amazon Bedrock model access.

- Seamless Automation with AI-Powered Assistance: Using AI to read diagrams and generate code streamlines the development process, even when the output is initial boilerplate rather than fully structured code. As AI models continue to improve, they are likely to generate more sophisticated and structured code, promising greater efficiency gains in the future.

- Accessibility for Non-Programmers: Diagram-to-code tools empower team members without extensive programming backgrounds to contribute to infrastructure development. By providing a user-friendly interface for creating diagrams and generating code, these tools democratize the process, enabling more team members to participate effectively in infrastructure-as-code initiatives.

- Accelerated Prototyping and Iteration: The ability to quickly generate boilerplate code from diagrams accelerates prototyping and iteration cycles. Teams can rapidly translate architectural designs into functional code, enabling faster feedback loops and more agile development practices.

- Facilitated Learning and Skill Development: For individuals looking to enhance their coding skills, diagram-to-code tools provide a valuable learning resource. By observing the generated code and its relationship to the architectural diagrams, team members can gain insights into coding principles and practices, fostering skill development over time.

Next up: a Streamlit-based web UI that provides a friendly, interactive way to use this solution, for those who hate CLIs.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.