Live Streaming from Unity - Real-Time Playback (Part 7)

Let's add live stream *playback* in a game built with Unity!

🐉 Here Be Dragons! 🐉: The method in this post relies on undocumented functionality to obtain the URL used for subscribing to real-time playback with Amazon IVS. It is likely to change (or stop working) in the future, so be warned!

We'll use the same WebRTC package that we used for broadcasting for playback, and we'll also require a stage token for playback, so if you've not yet read part 2 in this series, now would be a great time to do that. The main difference for playback is that we'll need to render the incoming frames to the UI, and we'll need to modify the URL that we use to connect based on the contents of the stage token. We'll also need to know the participantId of the stream that we'd like to subscribe to, so we'll need a way to obtain that. Let's start by getting that participantId.

Only parts of the WebRTCPlayback script are shown below, but you can refer to the final script as a reference.

Every participant in a stage has a participantId, and we can use the Amazon IVS integration with EventBridge to get notified when another participant has joined the stage. For this, I've created an AWS Lambda function that is triggered by an EventBridge rule filtered to look for events with a detail-type of IVS Stage Update and an event_name of Participant Published or Participant Unpublished. This rule triggers the UnityParticipantUpdated function, and here is the SAM YAML used to create the rule.

```yaml
EventRule1:
  Type: AWS::Events::Rule
  Properties:
    Description: >-
      Rule to send a custom chat event when a stage participant joins or leaves the unity demo stage.
    EventBusName: default
    EventPattern:
      source:
        - aws.ivs
      detail-type:
        - IVS Stage Update
      detail:
        event_name:
          - Participant Published
          - Participant Unpublished
    Name: unity-demo-stage-participant-update
    State: ENABLED
    Targets:
      - Arn:
          Fn::GetAtt:
            - "UnityParticipantUpdated"
            - "Arn"
        Id: "UnityParticipantUpdateTarget"
```

The UnityParticipantUpdated function is also defined in YAML. This function needs two environment variables: UNITY_CHAT_ARN, which we'll need to send a message to the game via the WebSocket connection, and UNITY_STAGE_ARN, which makes sure that we're only notifying the message bus when a participant has joined or left the specific Amazon IVS stage that we're interested in.

```yaml
UnityParticipantUpdated:
  Type: 'AWS::Serverless::Function'
  Properties:
    Environment:
      Variables:
        UNITY_CHAT_ARN: '[YOUR CHAT ARN]'
        UNITY_STAGE_ARN: '[YOUR STAGE ARN]'
    Handler: index.unityParticipantUpdated
    Layers:
      - !Ref IvsChatLambdaRefLayer
    CodeUri: lambda/
```
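To make the rule's filter more concrete, here is a rough JavaScript sketch of how a pattern like the EventPattern in the rule selects events. It is a simplified illustration written for this post, not EventBridge's actual matching logic: the matchesPattern() helper is hypothetical, and it only handles exact-value lists and nested objects.

```javascript
// Simplified illustration of exact-value pattern matching (NOT the real
// EventBridge implementation). A pattern leaf is an array of allowed values;
// nested objects in the pattern are matched recursively against the event.
function matchesPattern(pattern, event) {
  return Object.entries(pattern).every(([key, expected]) => {
    const actual = event == null ? undefined : event[key];
    if (Array.isArray(expected)) {
      // Leaf: the event value must equal one of the listed values.
      return expected.includes(actual);
    }
    // Nested object: recurse into the matching part of the event.
    return (
      typeof actual === "object" &&
      actual !== null &&
      matchesPattern(expected, actual)
    );
  });
}

// Same shape as the EventPattern in the SAM template.
const pattern = {
  source: ["aws.ivs"],
  "detail-type": ["IVS Stage Update"],
  detail: {
    event_name: ["Participant Published", "Participant Unpublished"],
  },
};

// Two trimmed-down sample events (only the fields the pattern looks at).
const published = {
  source: "aws.ivs",
  "detail-type": "IVS Stage Update",
  detail: { event_name: "Participant Published" },
};
const otherEvent = {
  source: "aws.ivs",
  "detail-type": "IVS Stage Update",
  detail: { event_name: "Participant Joined" },
};

console.log(matchesPattern(pattern, published)); // true
console.log(matchesPattern(pattern, otherEvent)); // false
```

Because the rule only lists the two publish-related event names, other stage updates never reach the Lambda function.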

When a participant publishes to or unpublishes from the stage, the function receives an event like this:

```json
{
  "version": "0",
  "id": "12345678-1a23-4567-a1bc-1a2b34567890",
  "detail-type": "IVS Stage Update",
  "source": "aws.ivs",
  "account": "123456789012",
  "time": "2020-06-23T20:12:36Z",
  "region": "us-west-2",
  "resources": [
    "[YOUR STAGE ARN]"
  ],
  "detail": {
    "session_id": "st-...",
    "event_name": "Participant Published",
    "user_id": "[Your User Id]",
    "participant_id": "xYz1c2d3e4f"
  }
}
```
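As a quick way to see which fields of that event matter to the game, here is a minimal sketch that reduces an event to the participant ID and a published flag, and ignores events for other stages. The summarizeStageEvent() helper and the ARNs are hypothetical placeholders, not part of the demo code.

```javascript
// Hypothetical helper: pull out the fields the game cares about from an
// "IVS Stage Update" event, or return null if the event is for another stage.
function summarizeStageEvent(event, stageArn) {
  const resources = Array.isArray(event.resources) ? event.resources : [];
  if (!resources.includes(stageArn)) {
    return null;
  }
  const detail = event.detail ?? {};
  return {
    participantId: detail.participant_id,
    userId: detail.user_id,
    published: detail.event_name === "Participant Published",
  };
}

// Placeholder ARNs, for illustration only.
const stageArn = "arn:aws:ivs:us-west-2:123456789012:stage/EXAMPLE";
const otherStageArn = "arn:aws:ivs:us-west-2:123456789012:stage/OTHER";

const sampleEvent = {
  "detail-type": "IVS Stage Update",
  source: "aws.ivs",
  resources: [stageArn],
  detail: {
    session_id: "st-...",
    event_name: "Participant Published",
    user_id: "example-user",
    participant_id: "xYz1c2d3e4f",
  },
};

console.log(summarizeStageEvent(sampleEvent, stageArn));
console.log(summarizeStageEvent(sampleEvent, otherStageArn)); // null
```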

The Lambda function uses the SendEvent method of the IvschatClient to send a custom event with the name STAGE_PARTICIPANT_UPDATED to the chat room.

import { IvschatClient, SendEventCommand } from "@aws-sdk/client-ivschat";

const ivsChatClient = new IvschatClient();

export const unityParticipantUpdated = async (event) => {

if (event.resources.findIndex((e) => e === process.env.UNITY_STAGE_ARN) > -1) {

const sendEventInput = {

roomIdentifier: process.env.UNITY_CHAT_ARN,

eventName: 'STAGE_PARTICIPANT_UPDATED',

attributes: {

event: JSON.stringify(event.detail),

},

};

const sendEventRequest = new SendEventCommand(sendEventInput);

await ivsChatClient.send(sendEventRequest);

}

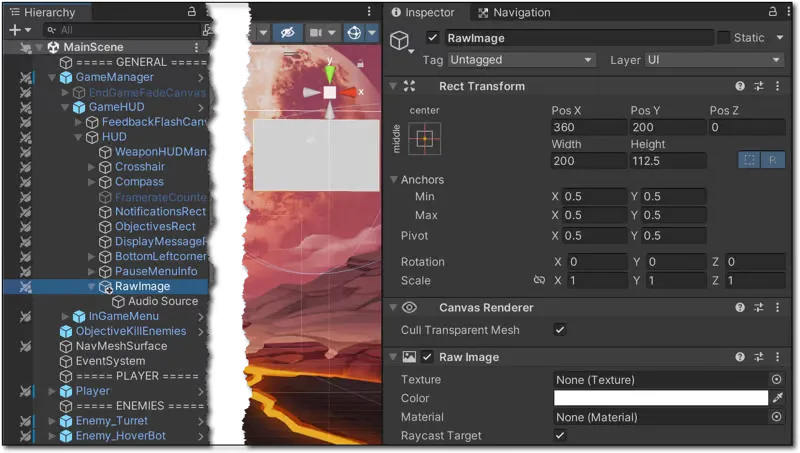

};Raw Image in the FPS demo game's HUD that we'll ultimately use to render the live stream. We'll add a child Audio Source as well for the live stream audio playback.

Next, we'll attach a script called WebRTCPlayback to the Raw Image to handle listening for participants and rendering the video. We listen for chat messages in the WebRTCPlayback script. When we receive the publish event that our AWS Lambda function sends, we'll establish the peerConnection and connect the live stream for playback. If the event is an 'unpublish' event, we'll clear the render texture and dispose of the peerConnection.

```csharp
websocket.OnMessage += (bytes) =>
{
    var msgString = System.Text.Encoding.UTF8.GetString(bytes);
    Debug.Log("Chat Message Received! " + msgString);
    ChatMessage chatMsg = ChatMessage.CreateFromJSON(msgString);
    Debug.Log(chatMsg);
    if (chatMsg.Type == "EVENT" && chatMsg.EventName == "STAGE_PARTICIPANT_UPDATED")
    {
        if (chatMsg.Attributes.particpantUpdatedEvent.event_name == "Participant Published")
        {
            // A participant started publishing: subscribe to their stream.
            //receiveImage.gameObject.SetActive(true);
            participantId = chatMsg.Attributes.particpantUpdatedEvent.participant_id;
            Debug.Log("Participant ID: " + participantId);
            EstablishPeerConnection();
            StartCoroutine(DoWHIP());
        }
        else
        {
            // The participant stopped publishing: clear the video and tear down the connection.
            receiveImage.texture = null;
            if (peerConnection != null)
            {
                peerConnection.Close();
                peerConnection.Dispose();
                peerConnection = null;
            }
        }
    }
};
```
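One detail that is easy to miss: the Lambda function stores the event detail as a JSON string inside a chat attribute, so the receiving side has to parse twice, once for the chat message and once for the attribute. Here is a minimal JavaScript sketch of that two-step parse. The envelope fields (Type, EventName, Attributes) are taken from the handler above and the event attribute key from the Lambda function; the parseParticipantUpdate() helper and the sample message are made up for illustration.

```javascript
// Hypothetical helper: parse a raw chat WebSocket message and return
// { participantId, published }, or null if it is not a
// STAGE_PARTICIPANT_UPDATED event.
function parseParticipantUpdate(rawMessage) {
  const msg = JSON.parse(rawMessage);
  if (msg.Type !== "EVENT" || msg.EventName !== "STAGE_PARTICIPANT_UPDATED") {
    return null;
  }
  const rawDetail = msg.Attributes ? msg.Attributes.event : undefined;
  if (typeof rawDetail !== "string") {
    return null;
  }
  // Second parse: the attribute value is itself a JSON string.
  const detail = JSON.parse(rawDetail);
  return {
    participantId: detail.participant_id,
    published: detail.event_name === "Participant Published",
  };
}

// Sample message built by hand for this sketch.
const sampleMessage = JSON.stringify({
  Type: "EVENT",
  EventName: "STAGE_PARTICIPANT_UPDATED",
  Attributes: {
    event: JSON.stringify({
      event_name: "Participant Published",
      participant_id: "xYz1c2d3e4f",
    }),
  },
});

console.log(parseParticipantUpdate(sampleMessage));
console.log(parseParticipantUpdate(JSON.stringify({ Type: "MESSAGE" }))); // null
```

In the Unity script, the ChatMessage class (not shown in this post) plays the same role when it exposes the nested event on its Attributes.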

To connect, we'll extract the whip_url from the token and use that to get our SDP. Let's create a class to model the stage token.

```csharp
[System.Serializable]
public class StageJwt
{
    public string whip_url;
    public string[] active_participants;

    public static StageJwt CreateFromJSON(string jsonString)
    {
        return JsonUtility.FromJson<StageJwt>(jsonString);
    }
}
```

The token is decoded and parsed in the GetStageToken() function.

```csharp
// decode and parse token to get `whip_url`
var parts = participantToken.token.Split('.');
if (parts.Length > 2)
{
    // JWT segments are base64url encoded, so map them back to the standard
    // base64 alphabet and restore the padding before decoding.
    var decode = parts[1].Replace('-', '+').Replace('_', '/');
    var padLength = 4 - decode.Length % 4;
    if (padLength < 4)
    {
        decode += new string('=', padLength);
    }
    var bytes = System.Convert.FromBase64String(decode);
    var userInfo = System.Text.Encoding.UTF8.GetString(bytes);
    StageJwt stageJwt = StageJwt.CreateFromJSON(userInfo);
    whipUrl = stageJwt.whip_url;
}
```

We'll declare a field in the WebRTCPlayback script for the RawImage that we'll use to render the video.

```csharp
RawImage receiveImage;
```

The EstablishPeerConnection() function renders the live stream to the RawImage by setting the texture of the receiveImage every time a new frame is received.

```csharp
void EstablishPeerConnection()
{
    peerConnection = new RTCPeerConnection();
    // One transceiver per media kind that we expect to receive.
    peerConnection.AddTransceiver(TrackKind.Audio);
    peerConnection.AddTransceiver(TrackKind.Video);
    peerConnection.OnIceConnectionChange = state => { Debug.Log(state); };
    Debug.Log("Adding Listeners");
    peerConnection.OnTrack = (RTCTrackEvent e) =>
    {
        Debug.Log("Remote OnTrack Called:");
        if (e.Track is VideoStreamTrack videoTrack)
        {
            // Render each incoming frame to the RawImage.
            videoTrack.OnVideoReceived += tex =>
            {
                Debug.Log("Video Recvd");
                receiveImage.texture = tex;
            };
        }
        if (e.Track is AudioStreamTrack audioTrack)
        {
            // Route the incoming audio to the child Audio Source.
            Debug.Log("Audio Recvd");
            receiveAudio.SetTrack(audioTrack);
            receiveAudio.loop = true;
            receiveAudio.Play();
        }
    };
}
```

In DoWHIP(), we use the participantId and the whipUrl from the stage token to construct the URL used to obtain the SDP.

```csharp
IEnumerator DoWHIP()
{
    // Fetch the stage token (this is also where whipUrl gets set).
    Task getStageTokenTask = GetStageToken();
    yield return new WaitUntil(() => getStageTokenTask.IsCompleted);
    Debug.Log(participantToken.token);
    Debug.Log(participantToken.participantId);

    // Create the local SDP offer.
    var offer = peerConnection.CreateOffer();
    yield return offer;
    var offerDesc = offer.Desc;
    var opLocal = peerConnection.SetLocalDescription(ref offerDesc);
    yield return opLocal;

    // Remove the a=extmap lines from the offer before sending it.
    var filteredSdp = "";
    foreach (string sdpLine in offer.Desc.sdp.Split("\r\n"))
    {
        if (!sdpLine.StartsWith("a=extmap"))
        {
            filteredSdp += sdpLine + "\r\n";
        }
    }
    Debug.Log("Join?");

    // POST the offer to the subscribe endpoint for the remote participant.
    using (UnityWebRequest publishRequest =
        new UnityWebRequest(whipUrl + "/subscribe/" + participantId))
    {
        publishRequest.uploadHandler = new UploadHandlerRaw(System.Text.Encoding.ASCII.GetBytes(filteredSdp));
        publishRequest.downloadHandler = new DownloadHandlerBuffer();
        publishRequest.method = UnityWebRequest.kHttpVerbPOST;
        publishRequest.SetRequestHeader("Content-Type", "application/sdp");
        publishRequest.SetRequestHeader("Authorization", "Bearer " + participantToken.token);
        yield return publishRequest.SendWebRequest();
        if (publishRequest.result != UnityWebRequest.Result.Success)
        {
            Debug.Log(publishRequest.error);
        }
        else
        {
            // The response body is the SDP answer.
            var answer = new RTCSessionDescription { type = RTCSdpType.Answer, sdp = publishRequest.downloadHandler.text };
            var opLocalRemote = peerConnection.SetRemoteDescription(ref answer);
            yield return opLocalRemote;
            if (opLocalRemote.IsError)
            {
                Debug.Log(opLocalRemote.Error);
            }
        }
    }
}
```
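If you'd like to sanity check the non-WebRTC parts of this flow outside of Unity, the three pure steps (decode the token payload, build the subscribe URL, filter the SDP) can be sketched in a few lines of Node.js. This is only a sketch under assumptions: the helper names are hypothetical, the token is a fake, unsigned one built inside the example, and the whip_url value is a placeholder. As noted at the top of the post, the whip_url claim itself is undocumented and may change.

```javascript
// Decode the payload (middle segment) of a JWT into an object.
function decodeJwtPayload(token) {
  const parts = token.split(".");
  if (parts.length < 3) {
    throw new Error("not a JWT");
  }
  // base64url -> base64; Node's decoder tolerates the missing padding.
  const base64 = parts[1].replace(/-/g, "+").replace(/_/g, "/");
  return JSON.parse(Buffer.from(base64, "base64").toString("utf8"));
}

// Same URL shape as in DoWHIP(): <whip_url>/subscribe/<participantId>.
function buildSubscribeUrl(whipUrl, participantId) {
  return whipUrl + "/subscribe/" + participantId;
}

// Drop the a=extmap lines from an SDP, keeping CRLF line endings.
function stripExtmapLines(sdp) {
  return sdp
    .split("\r\n")
    .filter((line) => !line.startsWith("a=extmap"))
    .join("\r\n");
}

// Build a fake, unsigned token just for this demo (placeholder values).
function toBase64Url(text) {
  return Buffer.from(text, "utf8")
    .toString("base64")
    .replace(/\+/g, "-")
    .replace(/\//g, "_")
    .replace(/=+$/, "");
}
const fakeToken = [
  toBase64Url(JSON.stringify({ alg: "none" })),
  toBase64Url(
    JSON.stringify({ whip_url: "https://example.invalid/v1", active_participants: [] })
  ),
  "signature",
].join(".");

const sampleSdp =
  "v=0\r\n" +
  "a=extmap:1 urn:ietf:params:rtp-hdrext:ssrc-audio-level\r\n" +
  "m=audio 9 UDP/TLS/RTP/SAVPF 111\r\n";

const decodedWhipUrl = decodeJwtPayload(fakeToken).whip_url;
console.log(buildSubscribeUrl(decodedWhipUrl, "xYz1c2d3e4f"));
// https://example.invalid/v1/subscribe/xYz1c2d3e4f
console.log(JSON.stringify(stripExtmapLines(sampleSdp)));
```

Keeping these steps as small, side-effect-free functions makes it easier to spot when a change to the token contents breaks playback, which is a real possibility given the undocumented claim.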

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.