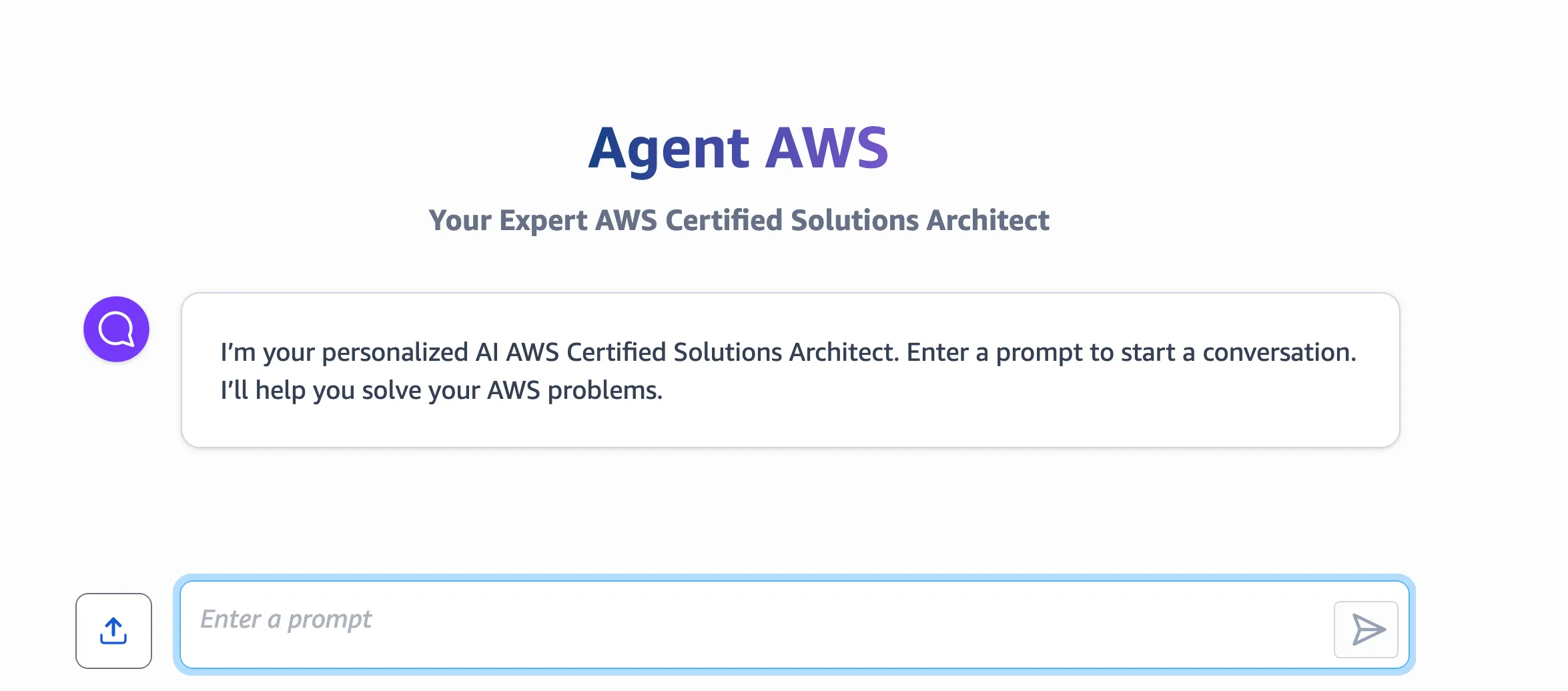

5 Ways for Chatting with Your Data on AWS

Learn the trade-offs of different methods for chatting with your data

- No coding required

- Deployable within an organization with security features

- Comes with a user interface

- Integrates with over 30 data sources

- Limited customization options for prompts and UI

- Can only be deployed within an organization
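Amazon Q can also be called from code through its `chat_sync` API. As a rough sketch of reading what that call returns, the helper below assumes the response is a dictionary with a `systemMessage` string and a `sourceAttributions` list whose entries carry `title` and `url`. The function name `format_q_response` and the sample values are hypothetical and used only for illustration.

```python
def format_q_response(response):
    """Render a chat_sync-style response as the answer plus numbered sources."""
    lines = [response.get("systemMessage", "")]
    sources = response.get("sourceAttributions", [])
    for number, source in enumerate(sources, start=1):
        title = source.get("title", "untitled")
        url = source.get("url", "")
        # rstrip() drops the trailing space when a source has no URL.
        lines.append(f"[{number}] {title} {url}".rstrip())
    return "\n".join(lines)


# Hand-written stand-in for a real response (hypothetical values).
sample = {
    "systemMessage": "Amazon RDS is a managed relational database service.",
    "sourceAttributions": [
        {"title": "RDS User Guide", "url": "https://example.com/rds"},
    ],
}

print(format_q_response(sample))
```

Keeping the formatting in a pure function means it can be checked against a saved response without calling the service.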

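```python
import boto3

# Assumption: `amazon_q` is a boto3 client for Amazon Q Business;
# the original snippet does not show how it was created.
amazon_q = boto3.client("qbusiness")

# The "xxx" values are placeholders for your own application ID, user ID, and message.
response = amazon_q.chat_sync(
    applicationId="xxx",
    userId="xxx",
    userMessage="xxx",
)
```

- Highly customizable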

- Can be integrated into existing workflows

- Leverages the power of LangChain

- Code-heavy approach

- Requires maintenance and updates

- Learning curve for using LangChain

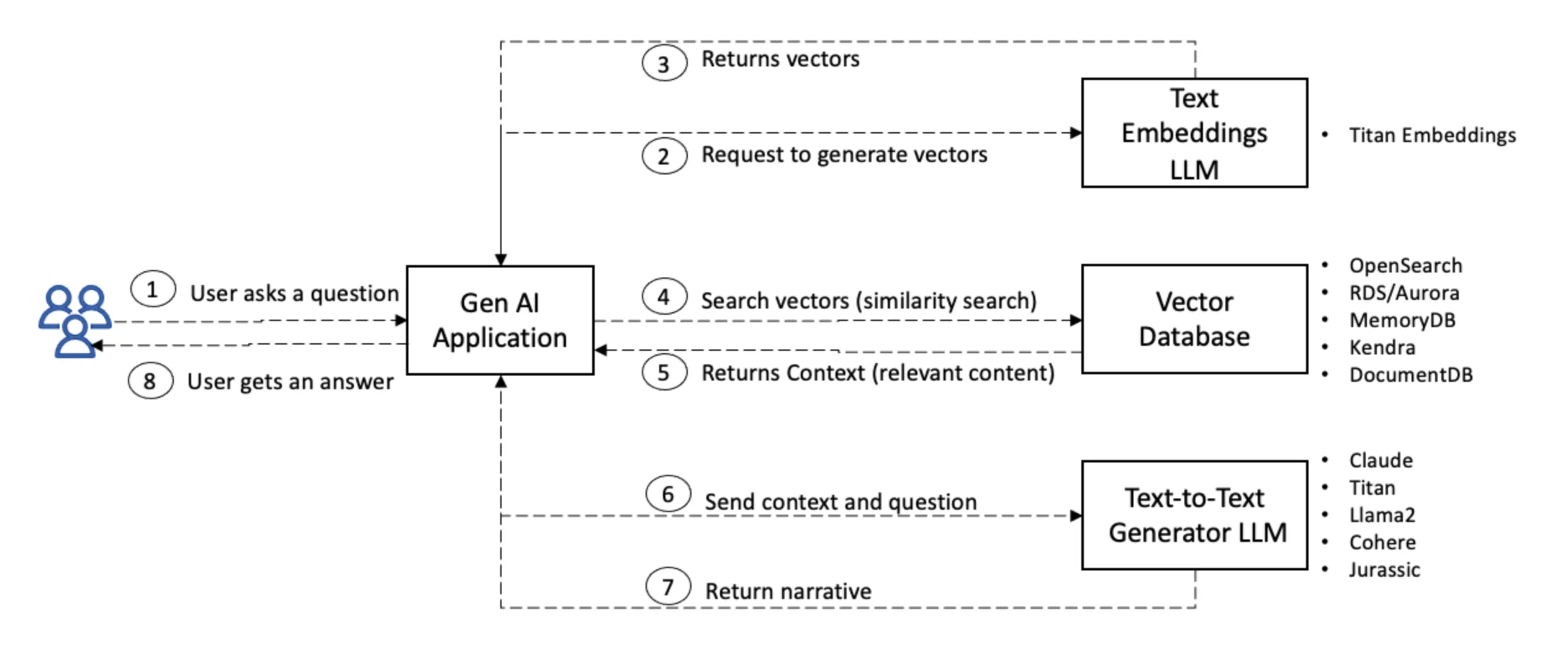

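```python
from langchain_community.embeddings import BedrockEmbeddings
from langchain_community.vectorstores import FAISS

# `bedrock_runtime` (a boto3 "bedrock-runtime" client) and `call_claude`
# are assumed to be defined elsewhere; they are not shown in this snippet.


def rag_with_bedrock(query):
    # Embedding model for the query; it should match the one used to build the index.
    embeddings = BedrockEmbeddings(
        client=bedrock_runtime,
        model_id="amazon.titan-embed-text-v1",
    )
    pdf_loc = "well_arch.pdf"  # not used in this function
    local_vector_store = FAISS.load_local("local_index", embeddings)

    # Retrieve the chunks most similar to the query and concatenate them.
    docs = local_vector_store.similarity_search(query)
    context = ""
    for doc in docs:
        context += doc.page_content

    prompt = f"""Use the following pieces of context to answer the question at the end.

{context}

Question: {query}

Answer:"""

    return call_claude(prompt)


query = "What can you tell me about Amazon RDS?"
print(query)
print(rag_with_bedrock(query))
```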
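The `call_claude` helper used above is not shown in the post. A minimal sketch of what it might look like follows, assuming the Claude v2 text-completion request format on Bedrock (a `prompt` wrapped in `Human:`/`Assistant:` turns plus `max_tokens_to_sample`). Unlike the one-argument call above, this version takes the client as an explicit parameter so it can run offline. `build_claude_body` and `StubClient` are hypothetical names introduced for illustration.

```python
import io
import json


def build_claude_body(prompt, max_tokens=500):
    """Wrap a prompt in the Human/Assistant format of Claude's text-completion API."""
    return json.dumps(
        {
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": max_tokens,
        }
    )


def call_claude(prompt, client, model_id="anthropic.claude-v2:1"):
    """Send the prompt through a bedrock-runtime client and return the completion text."""
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_body(prompt),
        accept="application/json",
        contentType="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["completion"]


class StubClient:
    """Hypothetical stand-in so the sketch runs without AWS credentials."""

    def invoke_model(self, **kwargs):
        payload = {"completion": " Amazon RDS is a managed database service."}
        return {"body": io.BytesIO(json.dumps(payload).encode("utf-8"))}


print(call_claude("What is Amazon RDS?", client=StubClient()))
```

With real credentials, passing `boto3.client("bedrock-runtime")` in place of `StubClient()` should exercise the same code path. `functools.partial(call_claude, client=bedrock_runtime)` would recover the one-argument form.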

- Use Case: Ideal for AI-powered search applications that require semantic and personalized searches.

- Advantages:

  - Optimal price-performance for search workloads

  - Offers both serverless and managed cluster options

- Use Case: When you need to co-locate vector search capabilities with relational data.

- Advantages:

  - Integrates vector search with relational databases

  - Supports serverless operations via Aurora

  - Keeps traditional application data and vector embeddings in the same database, which enables better governance and faster deployment with a minimal learning curve

- Accessible via console or API

- Fast document retrieval

- Manages the vector database for you

- Carries a minimum ongoing cost, because a database instance has to be kept running
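Whichever vector store sits underneath, the core operation is the same: find the stored embeddings closest to the query embedding. The toy sketch below illustrates that idea with cosine similarity over a small in-memory dictionary. It is not how OpenSearch, Aurora, or a managed knowledge base implement search (they may use other distance metrics and approximate indexes). The three-dimensional vectors are made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in scored[:k]]


# Made-up embeddings; real ones have hundreds or thousands of dimensions.
index = {
    "rds": [0.9, 0.1, 0.0],
    "ec2": [0.1, 0.9, 0.0],
    "s3": [0.0, 0.2, 0.9],
}

print(top_k([1.0, 0.2, 0.0], index, k=2))  # ['rds', 'ec2']
```

This brute-force scan compares the query against every stored vector, which is the work a managed vector database takes off your hands at scale.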

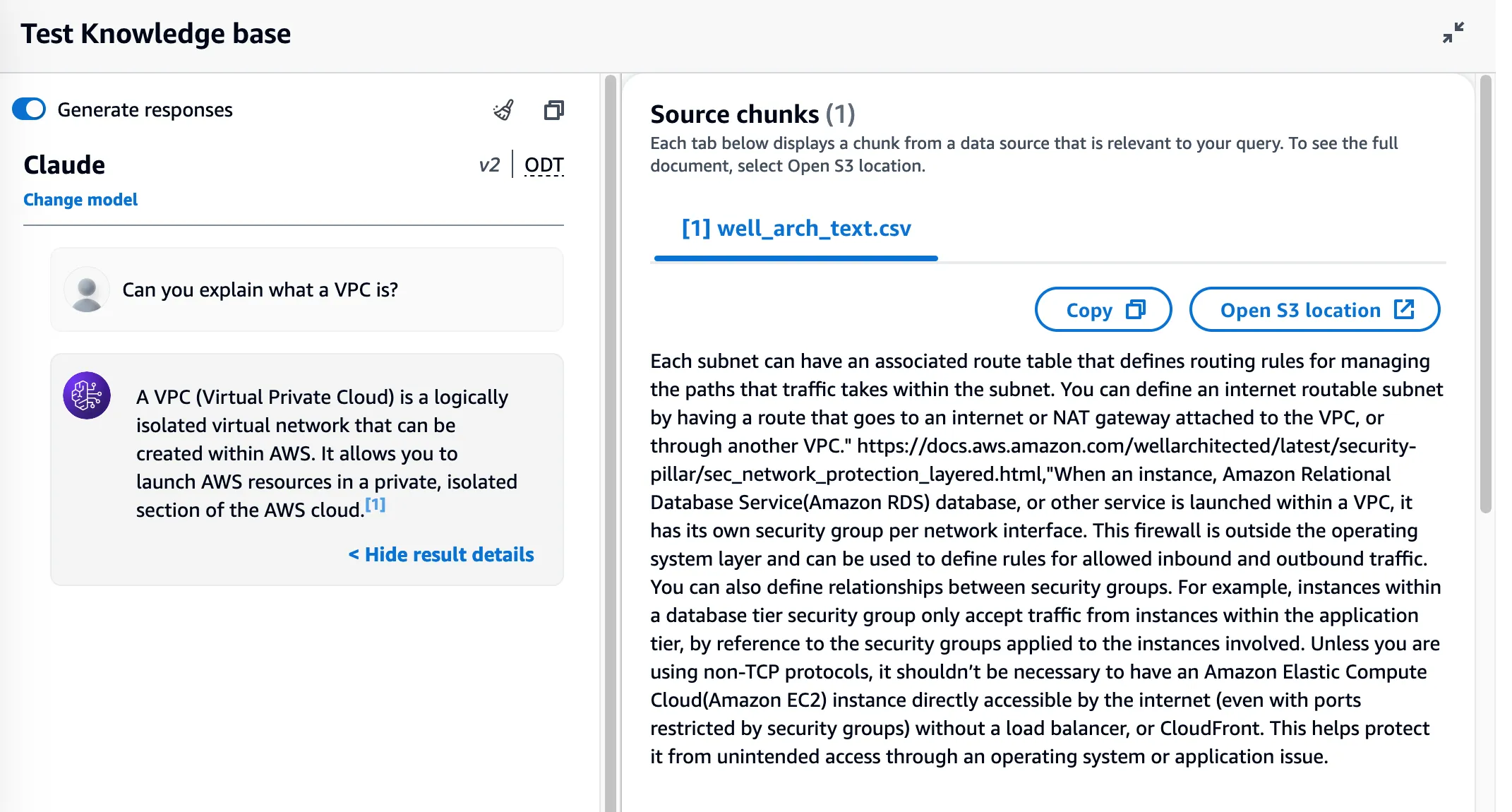

```python
import boto3

KB_ID = "TODO"
QUERY = "What can you tell me about Amazon EC2?"
REGION = "us-west-2"
MODEL = "anthropic.claude-v2:1"

# Setup bedrock
bedrock_agent_runtime = boto3.client(
    service_name="bedrock-agent-runtime",
    region_name=REGION,
)

text_response = bedrock_agent_runtime.retrieve_and_generate(
    input={"text": QUERY},
    retrieveAndGenerateConfiguration={
        "type": "KNOWLEDGE_BASE",
        "knowledgeBaseConfiguration": {
            "knowledgeBaseId": KB_ID,
            "modelArn": MODEL,
        },
    },
)

print(f"Output:\n{text_response['output']['text']}\n")

for citation in text_response["citations"]:
    print(f"Citation:\n{citation}\n")
```
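Printing each citation in full, as above, produces a lot of nested output. As a sketch of pulling out just the source documents, the helper below assumes each citation carries a `retrievedReferences` list whose entries have a `location.s3Location.uri` field. `citation_sources` is a hypothetical name and the sample response is hand-written.

```python
def citation_sources(response):
    """Collect the unique S3 URIs referenced by a retrieve_and_generate-style response."""
    uris = []
    for citation in response.get("citations", []):
        for reference in citation.get("retrievedReferences", []):
            uri = reference.get("location", {}).get("s3Location", {}).get("uri")
            # Skip references without an S3 location and avoid duplicates.
            if uri and uri not in uris:
                uris.append(uri)
    return uris


# Hand-written stand-in for a real response (hypothetical values).
sample_response = {
    "output": {"text": "Amazon EC2 provides resizable compute capacity."},
    "citations": [
        {
            "retrievedReferences": [
                {"location": {"type": "S3", "s3Location": {"uri": "s3://my-bucket/ec2.pdf"}}},
                {"location": {"type": "S3", "s3Location": {"uri": "s3://my-bucket/ec2.pdf"}}},
            ]
        },
        {"retrievedReferences": []},
    ],
}

print(citation_sources(sample_response))
```

Using `.get` with defaults keeps the helper from raising if a citation has no references or a reference is not stored in S3.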

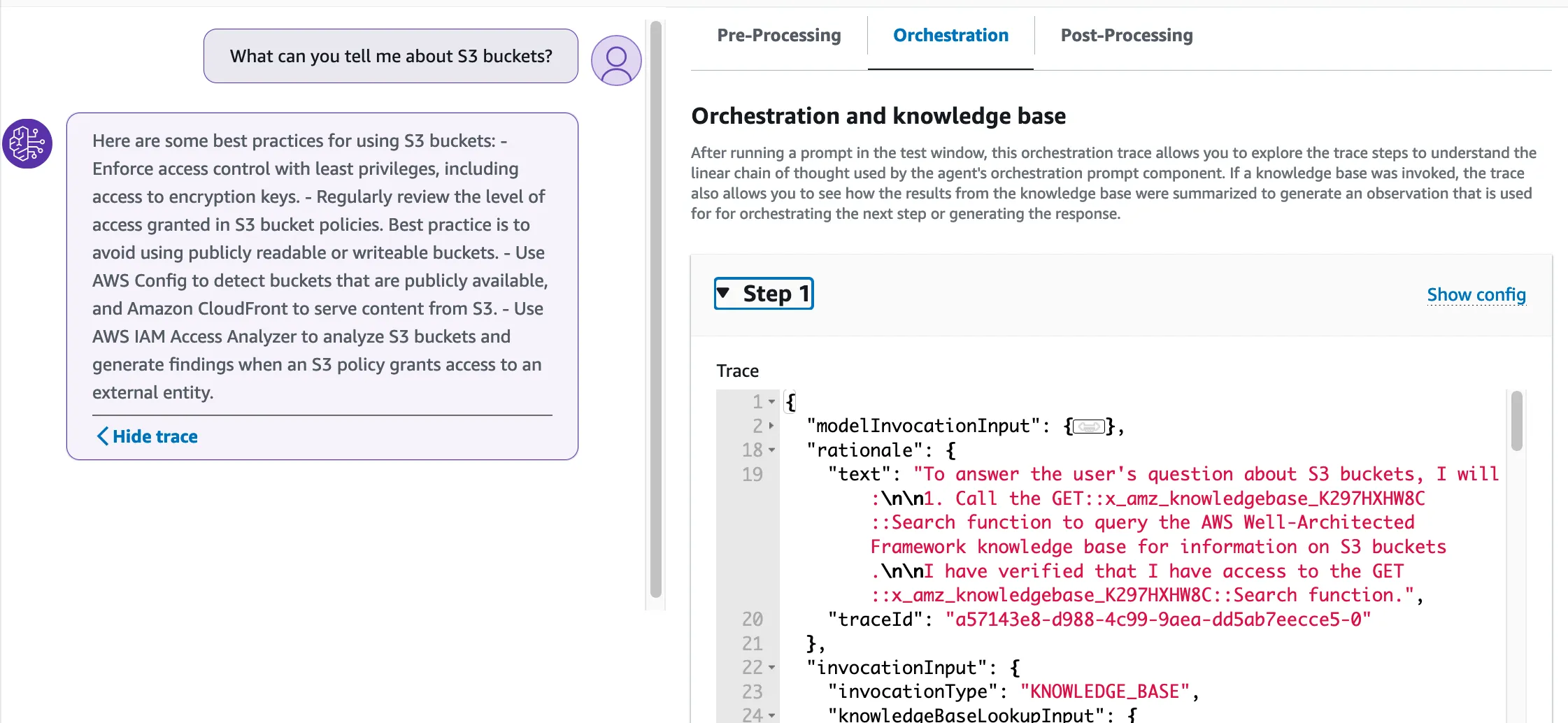

- Highly customizable

- Can leverage a knowledge base

- Accessible via console or API

- Learning curve for setting up OpenAPI spec and Lambda actions

- Slower response time
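Calling an agent through the API returns a stream of events rather than a single string, and each call needs a session ID. The agent example in this post relies on two helpers that are not shown, `generate_random_15digit` and `process_stream`. The sketch below is one guess at what they could look like, assuming each event is a dictionary holding either a `chunk` with raw `bytes` or a `trace` entry. The return value of `process_stream` is an addition made here so the function is easy to check.

```python
import random


def generate_random_15digit():
    """Return a random integer with exactly 15 digits, usable as a session ID."""
    return random.randint(10**14, 10**15 - 1)


def process_stream(event):
    """Print one event from the agent's completion stream and return any answer text."""
    if "chunk" in event:
        # Answer text arrives as UTF-8 bytes.
        text = event["chunk"]["bytes"].decode("utf-8")
        print(text, end="")
        return text
    if "trace" in event:
        # Trace events appear when enableTrace=True and describe the agent's steps.
        print(f"[trace] {event['trace']}")
    return ""


# Hand-written events standing in for a real stream (hypothetical values).
events = [
    {"trace": {"step": "example"}},
    {"chunk": {"bytes": b"Amazon EC2 is "}},
    {"chunk": {"bytes": b"a compute service."}},
]
answer = "".join(process_stream(event) for event in events)
print()
print(len(str(generate_random_15digit())))
```

Joining the returned pieces reassembles the full answer, which is useful when the response needs to be stored rather than only printed.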

```python
def run_agent():
    response = bedrock_agent_runtime.invoke_agent(
        sessionState={
            "sessionAttributes": {},
            "promptSessionAttributes": {},
        },
        agentId=AGENT_ID,
        agentAliasId="TSTALIASID",
        sessionId=str(generate_random_15digit()),
        endSession=False,
        enableTrace=True,
        inputText=QUERY,
    )

    print(response)

    results = response.get("completion")

    for stream in results:
        process_stream(stream)
```

- Can use LangChain for advanced querying

- Kendra handles data ingestion and storage

- High setup cost due to Kendra's enterprise focus

- Best suited for existing Kendra users

```python
from langchain_community.retrievers import AmazonKendraRetriever

retriever = AmazonKendraRetriever(index_id="c0806df7-e76b-4bce-9b5c-d5582f6b1a03")

retriever.get_relevant_documents("what is langchain")
```

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.