GenAI under the hood [Part 1] - Tokenizers and why you should care

This is the first post in the "GenAI under the hood" series, diving into a basic concept: tokenizers! Read on to learn what they are, how you can create one for your own data, and how tokens connect to cost and performance.

Sentences > sub-words > token-IDs

- Reversibility and Losslessness: BPE can convert tokens back into the original text without any loss of information.

- Flexibility: BPE can handle arbitrary text, even if it's not part of the tokenizer's training data.

- Compression: BPE compresses the text, resulting in a token sequence that is typically shorter than the original byte representation.

- Subword Representation: BPE attempts to represent common subwords as individual tokens, allowing the model to better understand grammar and generalize to unseen text.
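To make these four properties concrete, here is a minimal sketch of byte-level BPE in plain Python. It is a toy, not the implementation any production tokenizer uses: the function names (`train_bpe`, `apply_merge`, `encode`, `decode`) and the tiny corpus in the usage note are assumptions made for illustration, and real tokenizers add pre-tokenization rules and a fixed ID vocabulary on top of this idea.

```python
from collections import Counter


def apply_merge(tokens, pair):
    """Replace every adjacent occurrence of `pair` with one merged token."""
    merged = []
    i = 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges; return (merges, tokenized training text)."""
    # Start from raw UTF-8 bytes so that any string can be represented.
    tokens = [bytes([b]) for b in text.encode("utf-8")]
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter(zip(tokens, tokens[1:]))
        if not pair_counts:
            break
        best_pair, count = pair_counts.most_common(1)[0]
        if count < 2:
            break  # no pair repeats, so another merge would not compress anything
        merges.append(best_pair)
        tokens = apply_merge(tokens, best_pair)
    return merges, tokens


def encode(text, merges):
    """Tokenize new text by replaying the learned merges in training order."""
    tokens = [bytes([b]) for b in text.encode("utf-8")]
    for pair in merges:
        tokens = apply_merge(tokens, pair)
    return tokens


def decode(tokens):
    """Join the byte tokens and decode them back into the original string."""
    return b"".join(tokens).decode("utf-8")
```

For example, training on `"low lower lowest low low"` yields fewer tokens than the text has bytes (compression), `decode` returns the exact input (losslessness), and text never seen in training, emoji included, still round-trips because unmerged bytes fall back to single-byte tokens (flexibility).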

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

text = "This is an example sentence."
tokens = tokenizer.encode(text)
print(tokens)  # Output: a list of token IDs that starts with 101 ([CLS]) and ends with 102 ([SEP])
decoded_text = tokenizer.decode(tokens)
# bert-base-uncased is a WordPiece tokenizer that lowercases its input and adds special tokens,
# so the round trip is not an exact copy of the original string.
print(decoded_text)  # Output: "[CLS] this is an example sentence. [SEP]"

- Performance Optimization: Different tokenization strategies can affect model size, training time, and inference speed, all of which are critical factors in real-world applications.

- Multilingual Support: If your application needs to handle multiple languages, tokenization becomes even more critical, as different languages have unique characteristics and challenges.

- Domain-Specific Optimization: In specialized domains like medicine or law, where terminology and jargon play a crucial role, tailored tokenization techniques can improve the model's understanding and generation capabilities.

- Interpretability and Explainability: By understanding tokenization, you can gain insights into the inner workings of language models, aiding in debugging, model analysis, and ensuring trustworthiness.

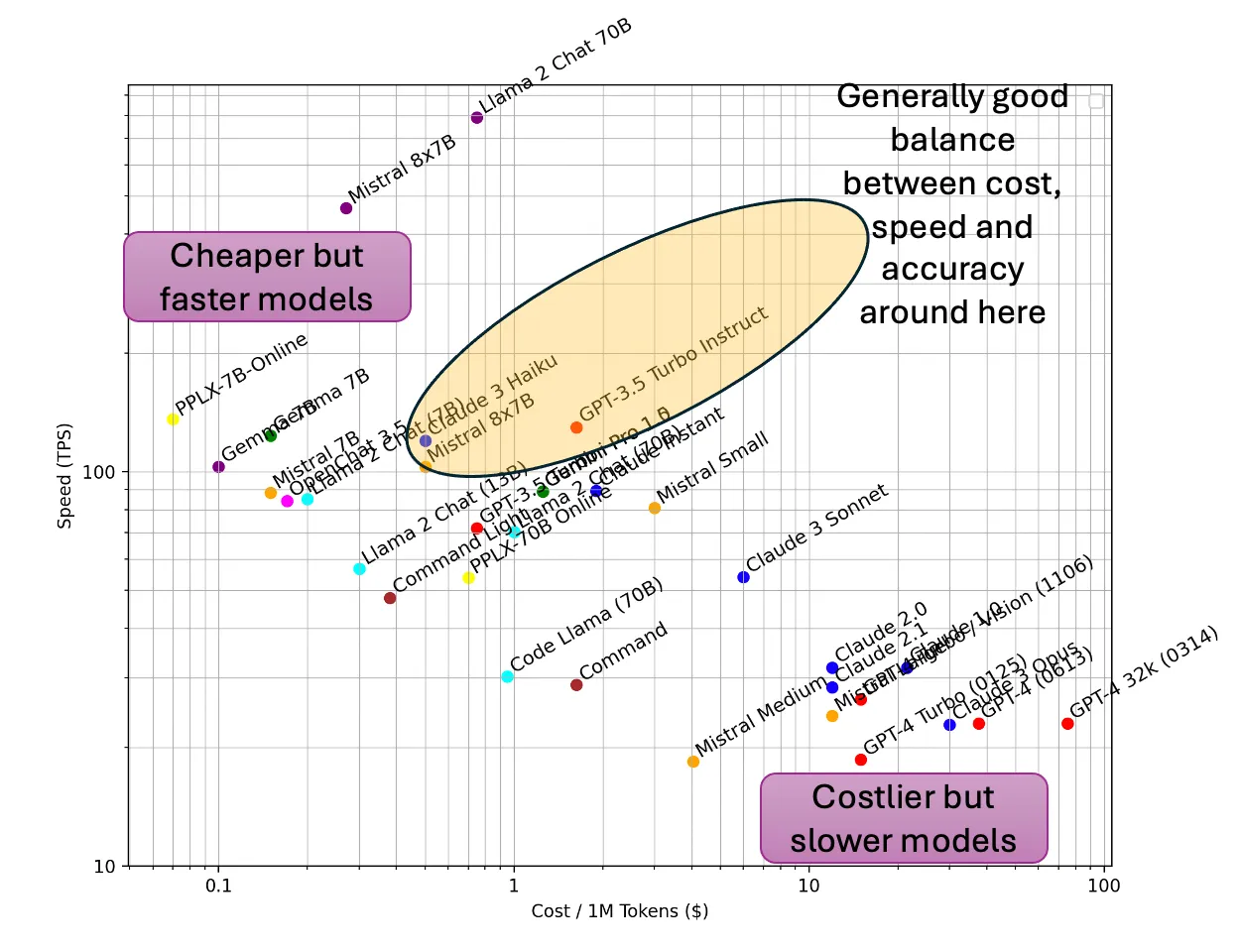

- Cost Optimization: Optimizing tokenization can potentially reduce computational resources and lead to significant cost savings, especially in large-scale deployments.
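One reason the multilingual point matters: byte-level tokenizers start from UTF-8 bytes, and many scripts need more bytes per character than English does. The sketch below measures only that starting point. The helper name `utf8_bytes_per_char` is made up for this post, and the number of tokens a real tokenizer ends up with depends on the merges it learned.

```python
def utf8_bytes_per_char(text):
    """Average number of UTF-8 bytes needed per character of `text`."""
    if not text:
        return 0.0
    return len(text.encode("utf-8")) / len(text)


# ASCII letters take one byte each; Japanese hiragana take three bytes each.
english_ratio = utf8_bytes_per_char("hello")
japanese_ratio = utf8_bytes_per_char("こんにちは")
```

A higher ratio means a byte-level tokenizer has more raw units to merge before it reaches word-sized tokens, which is part of why the same sentence can cost more tokens in some languages than in others.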

- Why can't language models spell words correctly? Tokenization.

- Why can't they perform simple string processing tasks like reversing a string? Tokenization.

- Why do they struggle with non-English languages like Japanese? Tokenization.

- Why are they bad at simple arithmetic? Tokenization.

- Why did GPT-2 have trouble coding in Python? Tokenization.

- Why do LLM-based agent runs sometimes break? Tokenization.

- Why do critical numbers, code, or keywords sometimes get mangled when LLMs process or extract them? Tokenization.

- Why do models fail to understand complex logical operations or reasoning? Tokenization.

- Why do language models sometimes generate biased or offensive content? Tokenization.

- Why do models have difficulty with domain-specific jargon or technical terminology? Tokenization.

- Why do language models struggle with tasks that require spatial or visual reasoning? Tokenization.

- Why do models sometimes fail to maintain consistency or coherence in generated text? Tokenization.

- Why do language models have trouble understanding ambiguous or idiomatic language? Tokenization.

- Why do models sometimes generate text that violates grammatical or stylistic rules? Tokenization.
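The spelling and arithmetic items in this list are the easiest to see in code. Below is a rough sketch using a greedy longest-match tokenizer over a hypothetical ten-entry vocabulary. Both `TOY_VOCAB` and `greedy_tokenize` are invented for illustration; real BPE applies learned merges rather than longest-match lookup, but the effect on what the model "sees" is similar.

```python
# Hypothetical toy vocabulary; real vocabularies hold tens of thousands of entries.
TOY_VOCAB = {
    piece: idx
    for idx, piece in enumerate(
        ["<unk>", "straw", "berry", "123", "45", "1", "2", "3", "4", "5"]
    )
}


def greedy_tokenize(text, vocab):
    """Split `text` into the longest vocabulary pieces, scanning left to right."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a vocabulary entry matches.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab:
                tokens.append(piece)
                i = end
                break
        else:
            # No piece matched at this position: emit an unknown token and move on.
            tokens.append("<unk>")
            i += 1
    return tokens


def to_ids(tokens, vocab):
    """Map token strings to the integer IDs a model actually receives."""
    return [vocab[token] for token in tokens]


word_tokens = greedy_tokenize("strawberry", TOY_VOCAB)  # ['straw', 'berry']
word_ids = to_ids(word_tokens, TOY_VOCAB)               # [1, 2]
number_a = greedy_tokenize("12345", TOY_VOCAB)          # ['123', '45']
number_b = greedy_tokenize("2345", TOY_VOCAB)           # ['2', '3', '45']
```

The model receives `[1, 2]` for "strawberry", with no direct view of the individual letters, and two numbers that differ by one leading digit are chunked in completely different ways. That is the mechanism behind the spelling, string-reversal, and arithmetic complaints.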

- "Just bought some $AAPL stocks. Feeling optimistic!"

- "Earnings report for $NFLX is out! Expecting a surge in prices."

- "Discussing potential mergers in the tech industry. $MSFT $GOOGL $AAPL"

- "Great analysis on $TSLA by @elonmusk. Exciting times ahead!"

- "Watching closely: $AMZN's impact on the e-commerce sector."

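from datasets import load_dataset

# Load all splits as a DatasetDict; the "train" split is selected later via raw_datasets["train"].
# (The second positional argument of load_dataset is a configuration name, not a split.)
raw_datasets = load_dataset("StephanAkkerman/stock-market-tweets-data")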

def get_training_corpus():
    # Yield the tweets in batches of 1,000 so the whole corpus is never held in one list.
    dataset = raw_datasets["train"]
    for start_idx in range(0, len(dataset), 1000):
        samples = dataset[start_idx : start_idx + 1000]
        yield samples["text"]

training_corpus = get_training_corpus()

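from transformers import AutoTokenizer

old_tokenizer = AutoTokenizer.from_pretrained("gpt2")

# Reuse GPT-2's tokenization algorithm, but learn a new vocabulary (target size 64,000) from the tweets.
new_tokenizer = old_tokenizer.train_new_from_iterator(training_corpus, 64000)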

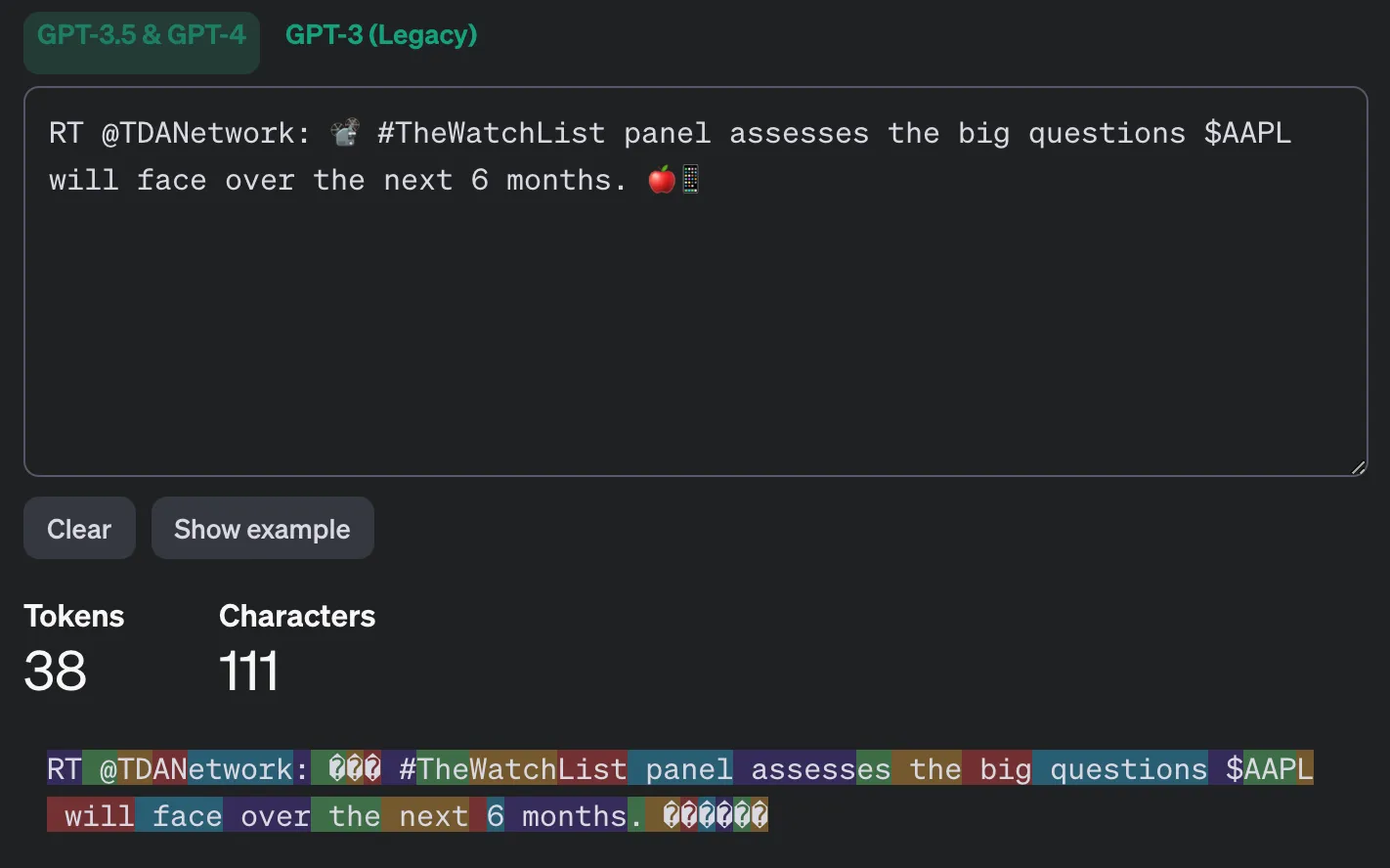

example_tweet = "RT @TDANetwork: 📽️ #TheWatchList panel assesses the big questions $AAPL will face over the next 6 months. 🍎📱"

| Old tokenizer tokens | New (trained) tokenizer tokens |
|---|---|
| RT | RT |
| @ | @ |
| TD, AN, etwork | TDANetwork |
| : | : |
| # | # |
| The, Watch, List | TheWatchList |
| panel | panel |
| assess, es | assess, es |
| the | the |
| big | big |
| questions | questions |
| $, AAP, L | $AAPL |
| will | will |
| face | face |
| over | over |
| the | the |
| next | next |
| 6 | 6 |
| months | months |
| ?????? | 🍎📱 |
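A per-tweet table like the one above can be summarized across a whole dataset by counting tokens under each tokenizer. The helper below is a sketch with a hypothetical name (`compare_token_counts`). It takes plain callables so that it runs without downloading a model; the stand-in tokenizers in the example (character split and whitespace split) are placeholders, not the GPT-2 or retrained tokenizers.

```python
def compare_token_counts(texts, tokenize_old, tokenize_new):
    """Count total tokens under two tokenizers and report the relative reduction."""
    texts = list(texts)  # materialize once so both tokenizers see the same inputs
    old_total = sum(len(tokenize_old(text)) for text in texts)
    new_total = sum(len(tokenize_new(text)) for text in texts)
    reduction = 1 - new_total / old_total if old_total else 0.0
    return {"old_tokens": old_total, "new_tokens": new_total, "reduction": reduction}


# Stand-in tokenizers for demonstration only: characters versus whitespace-separated words.
sample_tweets = ["$AAPL up", "$TSLA down"]
summary = compare_token_counts(sample_tweets, list, str.split)
```

With the objects from this post, the same call would be `compare_token_counts(tweets, old_tokenizer.tokenize, new_tokenizer.tokenize)`, where `tweets` is any list of strings you choose to evaluate on.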

- Prompt Engineering: Carefully crafting prompts to minimize unnecessary tokens can reduce the overall token count without sacrificing performance.

- Tokenization Strategies: Exploring alternative tokenization techniques, such as SentencePiece or WordPiece, may yield better compression and reduce token counts.

- Token Recycling: Instead of generating text from scratch, reusing or recycling tokens from previous generations can reduce the overall token count and associated costs.

- Model Compression: Techniques like quantization, pruning, and distillation can reduce the model size, leading to more efficient token processing and lower inference costs.
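To get a feel for how token count translates into spend, here is a back-of-the-envelope sketch. The function names and every number in it are placeholders: the price is not any provider's actual rate, and real pricing usually differs between input and output tokens.

```python
def estimate_cost(num_tokens, price_per_1k_tokens):
    """Cost of processing `num_tokens` at a flat price per 1,000 tokens."""
    return num_tokens / 1000 * price_per_1k_tokens


def monthly_savings(requests_per_month, tokens_before, tokens_after, price_per_1k_tokens):
    """Savings from lowering the average tokens per request, at constant traffic."""
    before = estimate_cost(requests_per_month * tokens_before, price_per_1k_tokens)
    after = estimate_cost(requests_per_month * tokens_after, price_per_1k_tokens)
    return before - after


# Placeholder scenario: 1M requests per month, 400 -> 300 tokens each, 0.5 currency units per 1K tokens.
savings = monthly_savings(1_000_000, 400, 300, 0.5)
```

Because cost scales linearly with tokens in this simple model, a 25% reduction in tokens per request gives a 25% reduction in spend, whichever of the strategies above produced it.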

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.