Mapping embeddings: from meaning to vectors and back

My adventures with RAGmap 🗺️🔍 and RAGxplorer 🦙🦺 with a light introduction to embeddings, vector databases, dimensionality reduction and advanced retrieval mechanisms.

"What I cannot create, I do not understand" ―Richard Feynman

"If you can't explain it simply, you don't understand it well enough." ―Albert Einstein

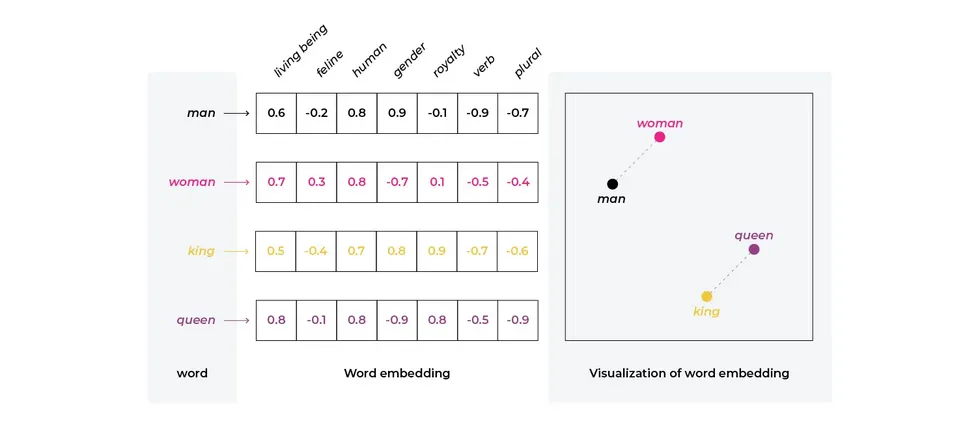

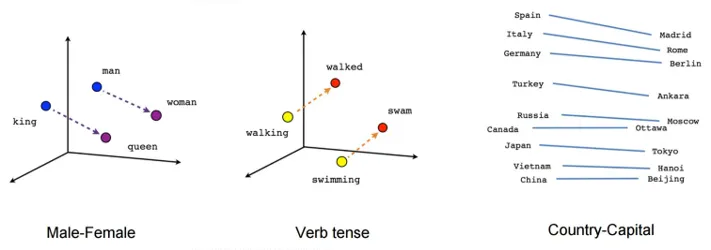

"Embeddings are numerical representations of real-world objects that machine learning (ML) and artificial intelligence (AI) systems use to understand complex knowledge domains like humans do." (AWS)

"Embeddings are vectorial representations of text that capture the semantic meaning of paragraphs through their position in a high dimensional vector space." (Mistral AI)

"Embeddings are vectors that represent real-world objects, like words, images, or videos, in a form that machine learning models can easily process." (Cloudflare)

"learnt vector representations of pieces of data" (Sebastian Bruch)

```python
"""
Retrieves information about the embedding models
available in Amazon Bedrock
"""

import json

import boto3

bedrock = boto3.client("bedrock")

response = bedrock.list_foundation_models(
    byOutputModality="EMBEDDING",
    byInferenceType="ON_DEMAND"
)

print(json.dumps(response['modelSummaries'], indent=4))
```

🧐 Interested in training your own embedding model? Check out this article from Hugging Face on how to Train and Fine-Tune Sentence Transformers Models.

```json
[
    {
        "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-embed-g1-text-02",
        "modelId": "amazon.titan-embed-g1-text-02",
        "modelName": "Titan Text Embeddings v2",
        "providerName": "Amazon",
        "inputModalities": [
            "TEXT"
        ],
        "outputModalities": [
            "EMBEDDING"
        ],
        "customizationsSupported": [],
        "inferenceTypesSupported": [
            "ON_DEMAND"
        ],
        "modelLifecycle": {
            "status": "ACTIVE"
        }
    },
    {
        "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-embed-text-v1",
        "modelId": "amazon.titan-embed-text-v1",
        "modelName": "Titan Embeddings G1 - Text",
        "providerName": "Amazon",
        "inputModalities": [
            "TEXT"
        ],
        "outputModalities": [
            "EMBEDDING"
        ],
        "responseStreamingSupported": false,
        "customizationsSupported": [],
        "inferenceTypesSupported": [
            "ON_DEMAND"
        ],
        "modelLifecycle": {
            "status": "ACTIVE"
        }
    },
    {
        "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-embed-image-v1",
        "modelId": "amazon.titan-embed-image-v1",
        "modelName": "Titan Multimodal Embeddings G1",
        "providerName": "Amazon",
        "inputModalities": [
            "TEXT",
            "IMAGE"
        ],
        "outputModalities": [
            "EMBEDDING"
        ],
        "customizationsSupported": [],
        "inferenceTypesSupported": [
            "ON_DEMAND"
        ],
        "modelLifecycle": {
            "status": "ACTIVE"
        }
    },
    {
        "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/cohere.embed-english-v3",
        "modelId": "cohere.embed-english-v3",
        "modelName": "Embed English",
        "providerName": "Cohere",
        "inputModalities": [
            "TEXT"
        ],
        "outputModalities": [
            "EMBEDDING"
        ],
        "responseStreamingSupported": false,
        "customizationsSupported": [],
        "inferenceTypesSupported": [
            "ON_DEMAND"
        ],
        "modelLifecycle": {
            "status": "ACTIVE"
        }
    },
    {
        "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/cohere.embed-multilingual-v3",
        "modelId": "cohere.embed-multilingual-v3",
        "modelName": "Embed Multilingual",
        "providerName": "Cohere",
        "inputModalities": [
            "TEXT"
        ],
        "outputModalities": [
            "EMBEDDING"
        ],
        "responseStreamingSupported": false,
        "customizationsSupported": [],
        "inferenceTypesSupported": [
            "ON_DEMAND"
        ],
        "modelLifecycle": {
            "status": "ACTIVE"
        }
    }
]
```

🤔 Did you know? Long before embeddings became a household name, data was often encoded as hand-crafted feature vectors. These too were meant to represent real-world objects and concepts.

love.

```python
"""
Sends love to Amazon Titan for Embeddings ❤️
and gets numbers in return 🔢
"""

import json

import boto3

bedrock_runtime = boto3.client("bedrock-runtime")

response = bedrock_runtime.invoke_model(
    modelId="amazon.titan-embed-text-v1",
    body="{\"inputText\": \"love\"}"
)

body = json.loads(response.get('body').read())
print(body['embedding'])
```

`love` is a great choice for two reasons:

- First, most models will represent `love` with a single token. In the case of Amazon Titan for Embeddings, you can check that this is the case by printing the `inputTextTokenCount` attribute of the model output (in English, 1 token ~= 4 chars). So what we get is a pure, unadulterated representation of the word.

- Second, `love` is one of the most complex and layered words there is. One has to wonder, looking at the giant vector below, where exactly are we hiding those layers and how can we peel that lovely onion. Where is the actual `love`?

"Love loves to love love." ―James Joyce

🔮 The usual way to judge how similar two vectors are is to call a distance function. We won't have to worry about this though, since the vector database we're going to use has built-in support for common distance functions, viz. Squared Euclidean (L2 Squared) `l2`, Inner Product `ip` and Cosine similarity `cosine`. If you'd like to change this value (`hnsw:space` defaults to `l2`), please refer to Chroma > Usage Guide > Using collections > Changing the distance function.
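To make the three metrics a bit more concrete, here is a minimal pure-Python sketch of how they are commonly defined. The function names are purely illustrative (they are not part of Chroma's API), and the `1 - ...` form used for `ip` and `cosine` is an assumption based on how Chroma's documentation describes them, so double-check it against the version you are running.

```python
import math


def l2_squared(a, b):
    """Squared Euclidean distance: sum of squared coordinate differences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def inner_product_distance(a, b):
    """Inner-product distance, assumed here to be 1 minus the dot product."""
    return 1.0 - sum(x * y for x, y in zip(a, b))


def cosine_distance(a, b):
    """Cosine distance: 1 minus the cosine of the angle between a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Two orthogonal unit vectors
u, v = [1.0, 0.0], [0.0, 1.0]
print(l2_squared(u, v))              # 2.0
print(inner_product_distance(u, v))  # 1.0
print(cosine_distance(u, v))         # 1.0
```

Note that cosine distance ignores vector length, which is why it is a popular choice when only the direction of an embedding matters.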

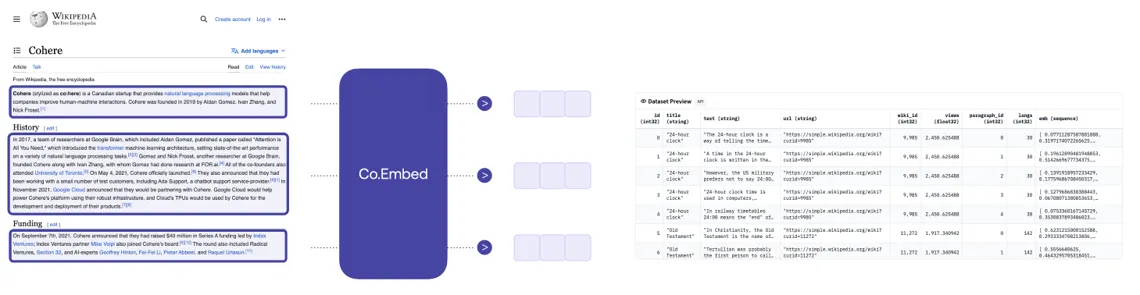

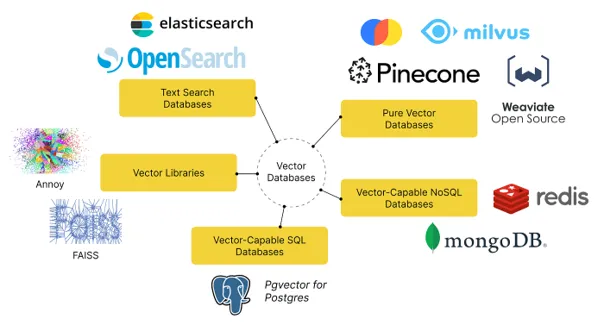

"The most important piece of the preprocessing pipeline, from a systems standpoint, is the vector database." ―Andreessen Horowitz

"Every database will become a vector database, sooner or later." ―John Hwang

- Partner solutions

- Others?

<10MB) documents chunked into bits of fixed size, this will do just fine.

```python
"""
Creates a Chroma collection and indexes some sample sentences
using Amazon Bedrock.

☝️⚠️ As of this writing, Chroma only supports Titan models:
https://github.com/chroma-core/chroma/pull/1675

If you want to use Cohere Embed models, install Chroma by running the command:
> pip install git+https://github.com/JGalego/chroma@bedrock-cohere-embed
"""

import boto3
import chromadb

from chromadb.utils.embedding_functions import AmazonBedrockEmbeddingFunction

# Create embedding function
session = boto3.Session()
bedrock_ef = AmazonBedrockEmbeddingFunction(
    session=session,
    model_name="cohere.embed-multilingual-v3"
)

# Create collection
client = chromadb.Client()
collection = client.create_collection(
    "demo",
    embedding_function=bedrock_ef
)

# Index data samples
collection.add(
    documents=["Hello World", "Olá Mundo"],
    metadatas=[{'language': "en"}, {'language': "pt"}],
    ids=["hello_world", "ola_mundo"]
)

print(collection.peek())
```

love?

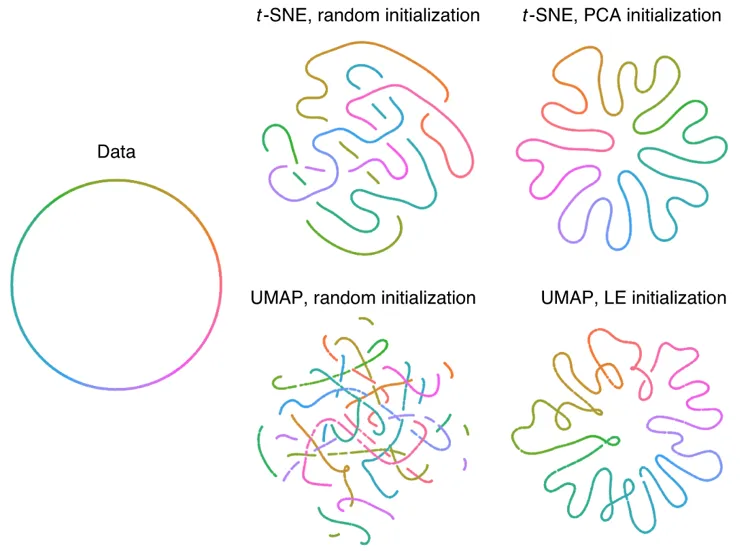

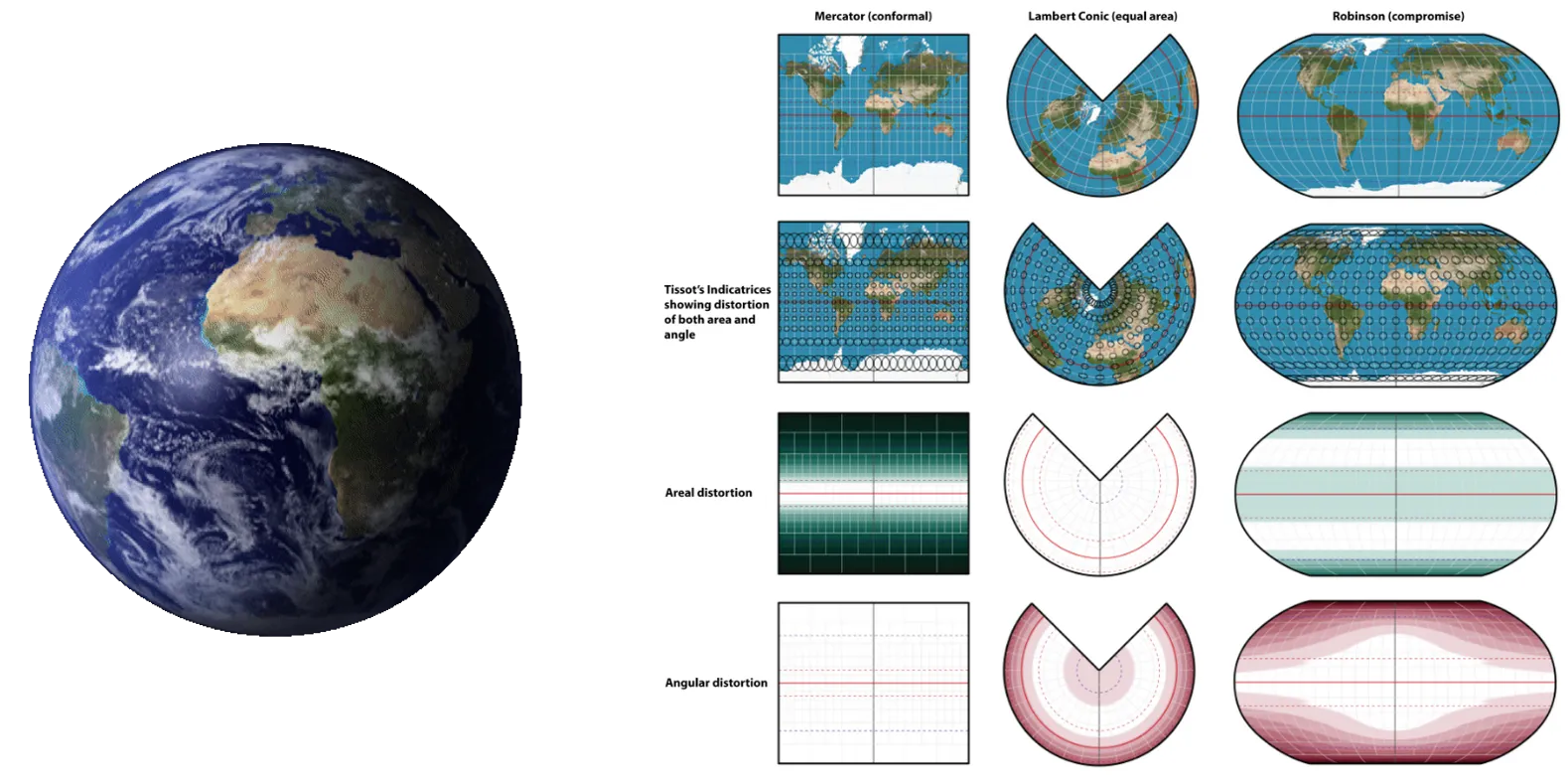

- global vs local - local methods preserve the local characteristics of the data, while global methods provide a "holistic" view of the data.

- linear vs non-linear - in general, non-linear methods can handle very nuanced data and are usually more powerful, while linear methods are more robust to noise.

- parametric vs non-parametric - parametric techniques generate a mapping function that can be used to transform new data, while non-parametric methods are entirely "data-driven", meaning that new (test) data cannot be directly transformed with the mapping learnt on the training data.

- deterministic vs stochastic - given the same data, deterministic methods will produce the same mapping, while the output of a stochastic method will vary depending on the way you seed it.

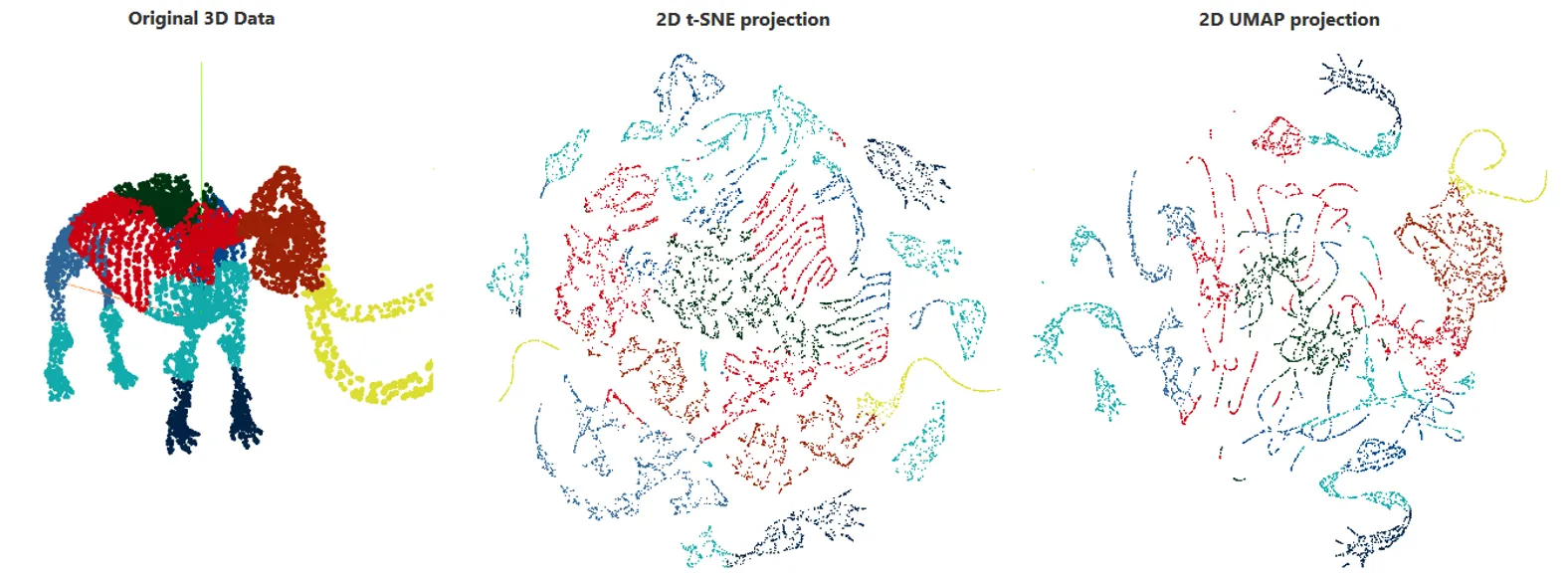

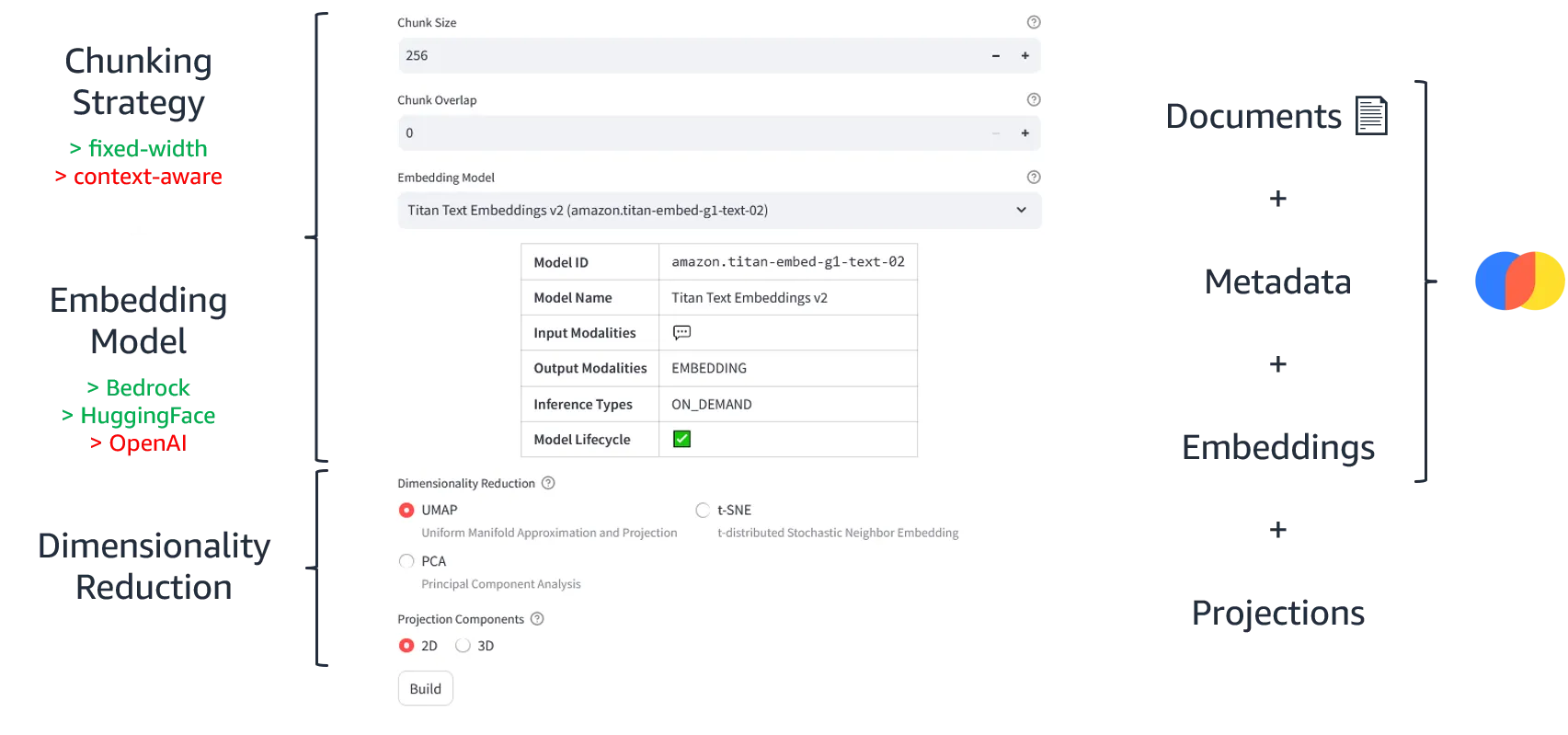

- PCA (Principal Component Analysis) is great for capturing global patterns

- t-SNE (t-Distributed Stochastic Neighbor Embedding) emphasizes local patterns and clusters

- UMAP (Uniform Manifold Approximation and Projection) can handle complex relationships
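To give a feel for what a linear, parametric method looks like in practice, here is a small pure-Python sketch of PCA. It uses power iteration with deflation to keep the example dependency-free (libraries such as scikit-learn rely on SVD instead), and the helper names `fit_pca` and `transform` are made up for this illustration. The start vector is seeded so that repeated runs give the same result.

```python
import math
import random


def fit_pca(rows, n_components=2, iterations=200, seed=0):
    """Fit PCA via power iteration; returns (means, components)."""
    n, d = len(rows), len(rows[0])
    means = [sum(col) / n for col in zip(*rows)]
    centered = [[x - m for x, m in zip(row, means)] for row in rows]
    # Sample covariance matrix (d x d)
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    rng = random.Random(seed)  # seeded start vector keeps runs reproducible
    components = []
    for _ in range(n_components):
        v = [rng.gauss(0, 1) for _ in range(d)]
        start_norm = math.sqrt(sum(x * x for x in v))
        v = [x / start_norm for x in v]
        for _ in range(iterations):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # no variance left to explain
                break
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * cov[i][j] * v[j]
                         for i in range(d) for j in range(d))
        components.append(v)
        # Deflate: remove the variance explained by this component
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)]
               for i in range(d)]
    return means, components


def transform(rows, means, components):
    """Project rows onto the fitted components (works for unseen data too)."""
    return [[sum((x - m) * c for x, m, c in zip(row, means, comp))
             for comp in components] for row in rows]


data = [[t, 2.0 * t] for t in range(5)]  # points on the line y = 2x
means, components = fit_pca(data, n_components=1)
print(components[0])  # direction of largest variance (the sign may flip)
print(transform([[5.0, 10.0]], means, components))  # new point, same mapping
```

Because the fitted means and components are just numbers, the same mapping can be reused on new points, which is exactly what makes PCA parametric. Methods like t-SNE do not offer an equivalent of `transform` out of the box.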

"With four parameters I can fit an elephant, and with five I can make him wiggle his trunk" ―John von Neumann

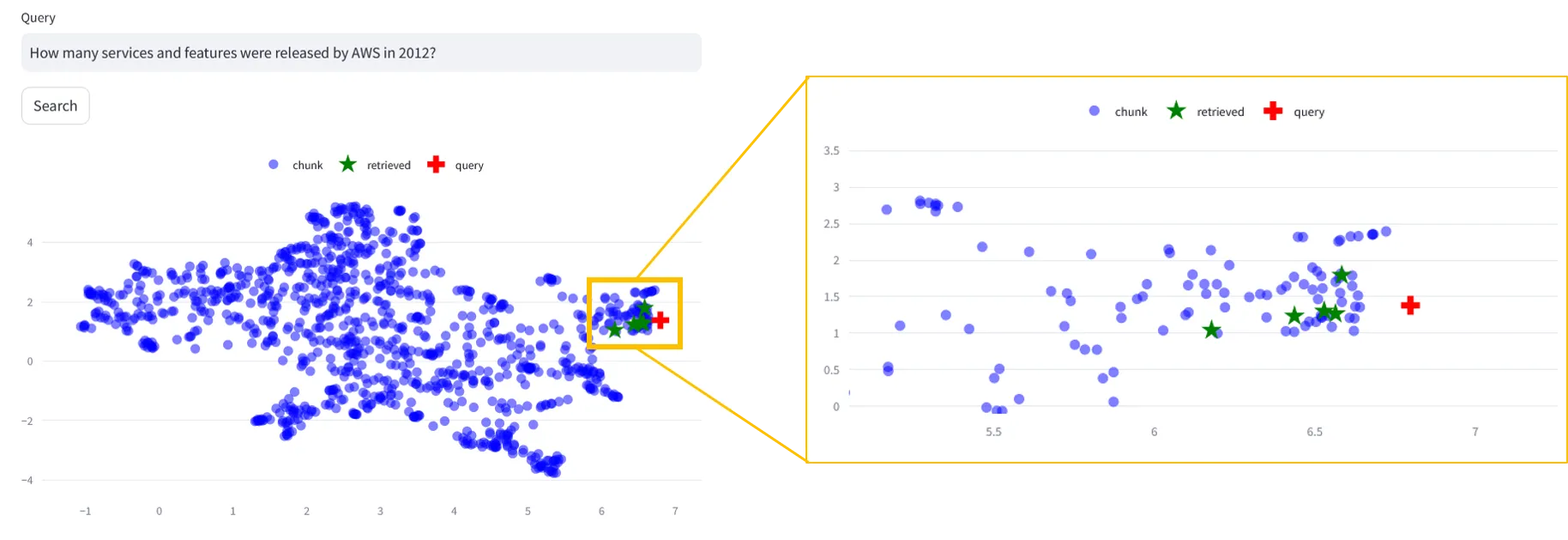

- Embeddings represent raw data as vectors in a way that preserves meaning

- Vector databases can be used to store, search and do all kinds of operations on them

- Dimensionality reduction is a good but imperfect way to make them more tangible

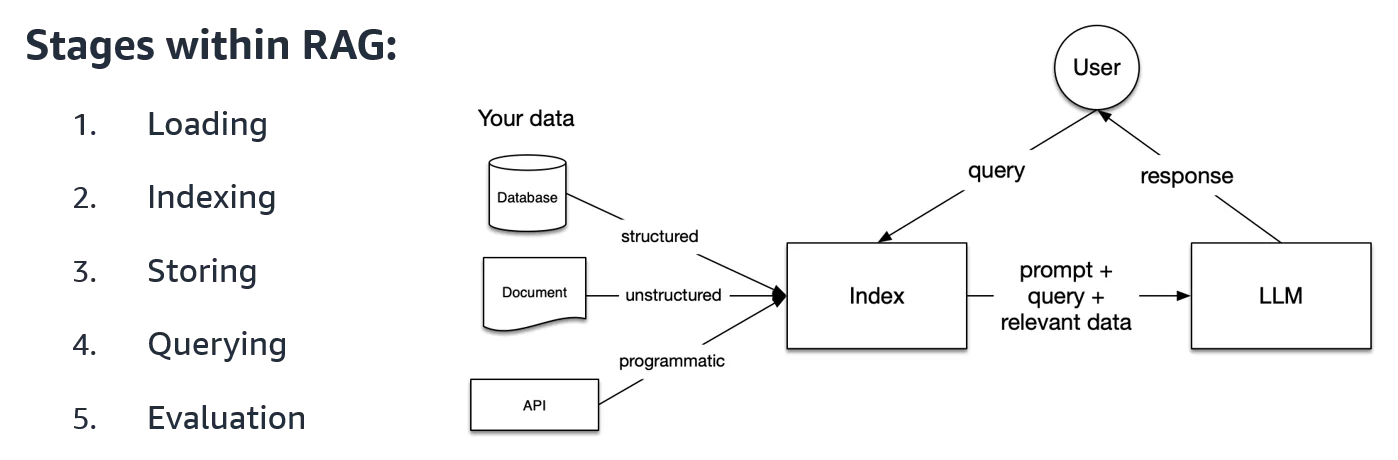

1. Get your data from where it lives (datalake, database, API, &c.)

2. Chunk it into smaller pieces that can be consumed by the embeddings model

3. Send it to an embeddings model to generate the vector representations (indexes)

4. Store those indexes as well as some metadata in a vector database

5. (Repeat steps 1-4 for as long as there is new data to index)
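The indexing steps above can be sketched in a few lines of plain Python. Everything here is a stand-in chosen so the example runs offline: `chunk_text`, `toy_embed` and `index_document` are hypothetical helpers, the "embedding" is just a letter-frequency vector (not a real model), and a dictionary plays the role of the vector database.

```python
import string


def chunk_text(text, chunk_size=200, overlap=20):
    """Split text into fixed-size character chunks with a small overlap."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Stop early enough that the last chunk is never pure overlap
    return [text[i:i + chunk_size]
            for i in range(0, max(len(text) - overlap, 1), step)]


def toy_embed(text):
    """Placeholder 'embedding': letter frequencies. NOT a real embedding model."""
    letters = [c for c in text.lower() if c in string.ascii_lowercase]
    total = len(letters) or 1
    return [letters.count(c) / total for c in string.ascii_lowercase]


def index_document(doc_id, text, store, embed=toy_embed, **chunk_kwargs):
    """Chunk a document, embed each chunk and store vectors plus metadata."""
    for n, chunk in enumerate(chunk_text(text, **chunk_kwargs)):
        store[f"{doc_id}-{n}"] = {
            "embedding": embed(chunk),
            "document": chunk,
            "metadata": {"source": doc_id, "chunk": n},
        }
    return store


store = {}  # in-memory stand-in for a vector database
index_document("doc1", "Hello World. Olá Mundo.", store, chunk_size=12, overlap=2)
print(list(store))
```

In a real pipeline, `toy_embed` would be replaced by a call to an actual embedding model and `store` by a vector database collection.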

- They both work on the scale of a single document, and

- There's no LLM involved, just embeddings and their projections.

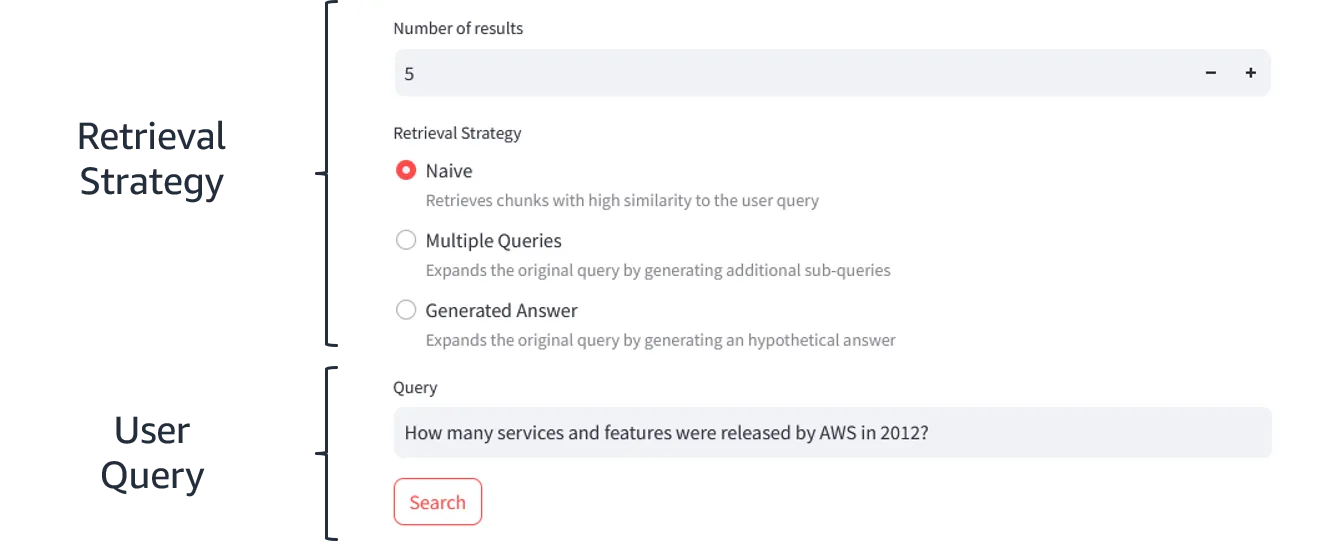

👆 There's one notable exception related to advanced retrieval techniques. A naive query search won't always work and we'll need to move on to more advanced methods. As of this writing, RAGmap has built-in support for simple query transformations like generated answers (HyDE), `query --> LLM --> hypothetical answer`, and multiple sub-queries, `query --> LLM --> sub-queries`, using Anthropic Claude models via Amazon Bedrock.
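As a rough illustration of these two transformations, the sketch below takes any callable that maps a prompt to text. The function names, the prompts and the `fake_llm` stub are all assumptions made for this example. They are not RAGmap's actual implementation, and in practice the callable would wrap a real model invocation.

```python
def hyde_query(query, llm):
    """HyDE: ask the LLM for a hypothetical answer and search with that instead."""
    prompt = (
        "Write a short passage that plausibly answers the question.\n\n"
        f"Question: {query}\n\nPassage:"
    )
    return llm(prompt).strip()


def sub_queries(query, llm, max_queries=3):
    """Multi-query: ask the LLM to split the question into simpler sub-questions."""
    prompt = (
        f"Break the question below into at most {max_queries} simpler "
        f"sub-questions, one per line.\n\nQuestion: {query}"
    )
    lines = [line.strip(" -\t") for line in llm(prompt).splitlines()]
    return [line for line in lines if line][:max_queries]


def fake_llm(prompt):
    """Stand-in for a real model call, so the sketch runs offline."""
    if "sub-questions" in prompt:
        return "- Which services launched in 2012?\n- Which features launched in 2012?"
    return " (hypothetical answer) "


question = "How many services and features were released by AWS in 2012?"
print(hyde_query(question, fake_llm))
print(sub_queries(question, fake_llm))
```

Either way, the transformed text (or each sub-query) is what gets embedded and sent to the vector database in place of the original query.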

If you don't want to follow these steps, feel free to watch the video instead 📺

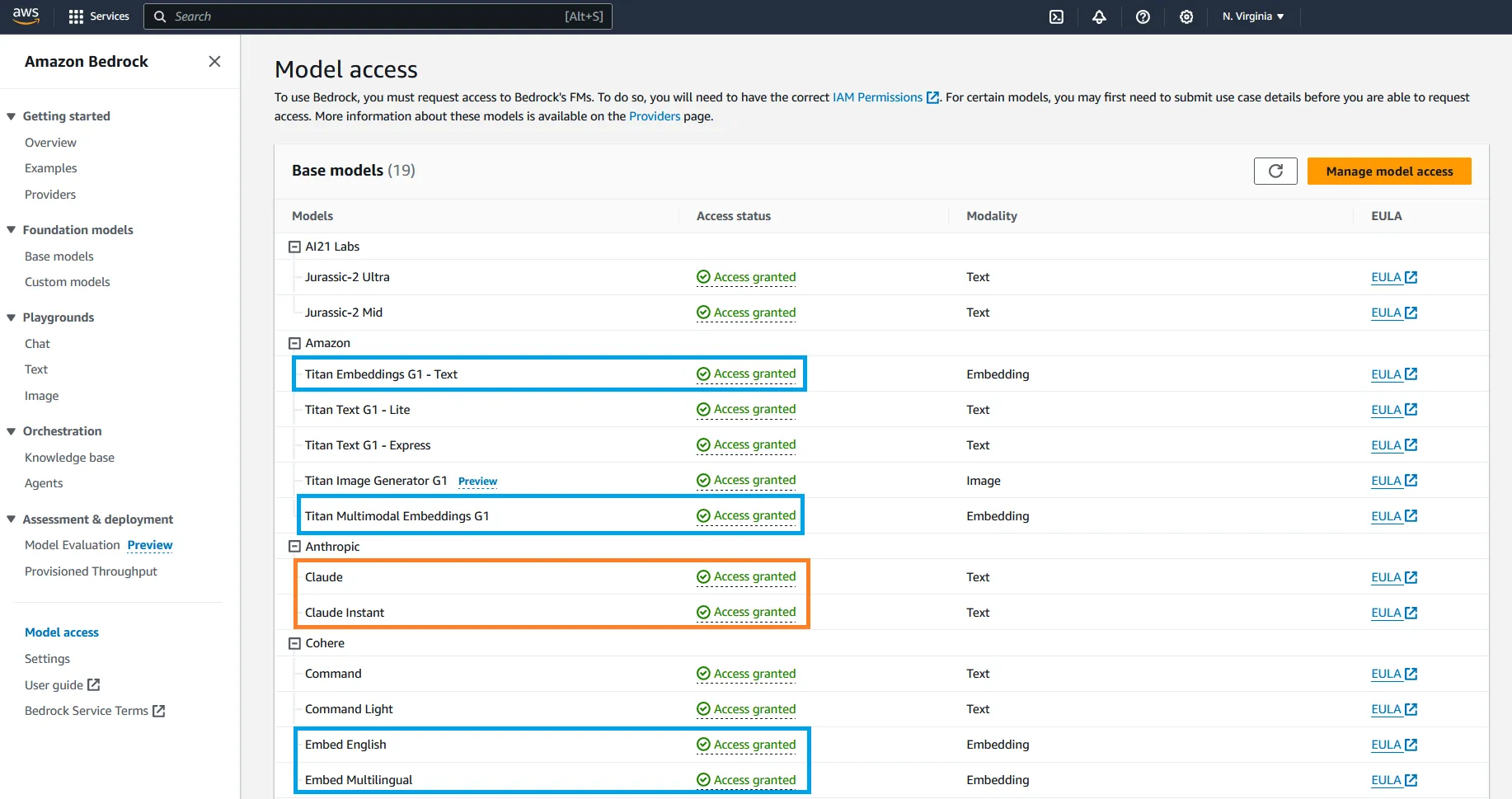

- Enable access to the embedding (Titan Embeddings, Cohere Embed) and text (Anthropic Claude) models via Amazon Bedrock.

For more information on how to request model access, please refer to the Amazon Bedrock User Guide (Set up > Model access)

```bash
# Clone the repository
git clone https://github.com/JGalego/RAGmap

# Install dependencies
cd RAGmap
pip install -r requirements.txt

# Start the application
streamlit run app.py
```

PDF, DOCX and PPTX.

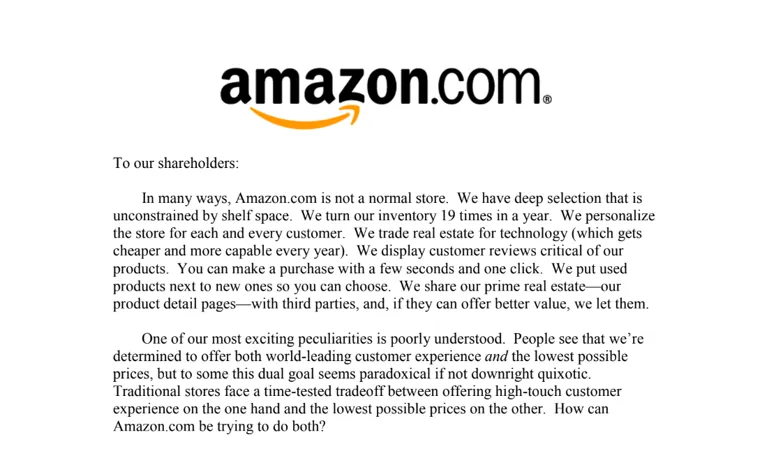

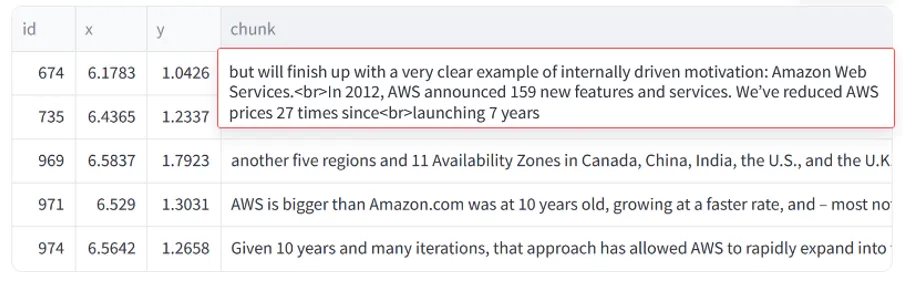

1-2 minutes ⏳.

`How many services and features were released by AWS in 2012?`, select a retrieval method and hit Search.

Fun fact: the first critical bug 🐛 in RAGxplorer was caught thanks to RAGmap. While testing both tools, I noticed that the retrieved IDs were too far away from the original query and were inconsistent with the results I was getting from RAGmap. Since the tools use different models - RAGmap (Amazon Bedrock, Hugging Face) and RAGxplorer (OpenAI) - there was bound to be a difference, but not one this big. It turns out that the issue was related to the way Chroma returns documents and orders them.

- RAGmap and RAGxplorer

- (Mistral) Embeddings

- (Cloudflare) What are embeddings in machine learning?

- (Hugging Face) Train and fine-tune sentence transformer models

- (PAIR) Understanding UMAP

- (Latent Space) RAG is a Hack

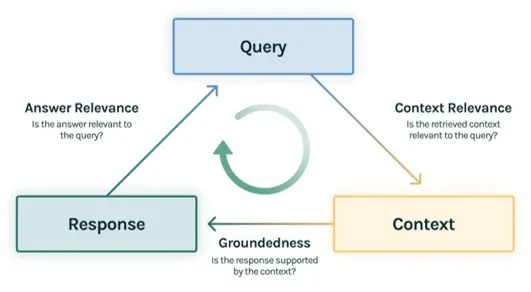

- (TruLens) The RAG Triad

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.