Vector Databases for generative AI applications

How to overcome LLM limitations using Vector databases and RAG

Summarised version of the talk

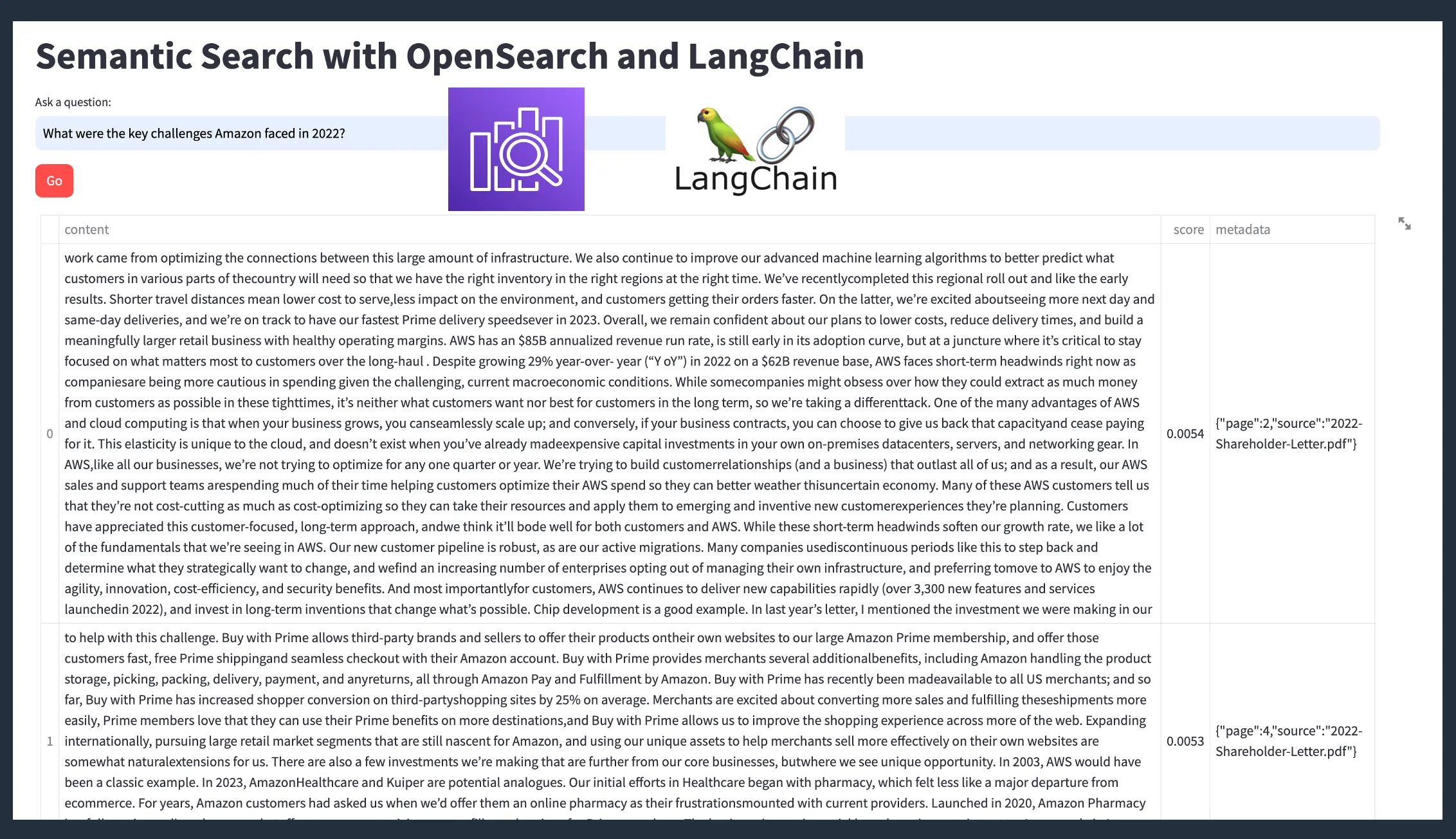

Demo 1 (of 3) - Semantic Search with OpenSearch and LangChain

RAG – Retrieval Augmented Generation

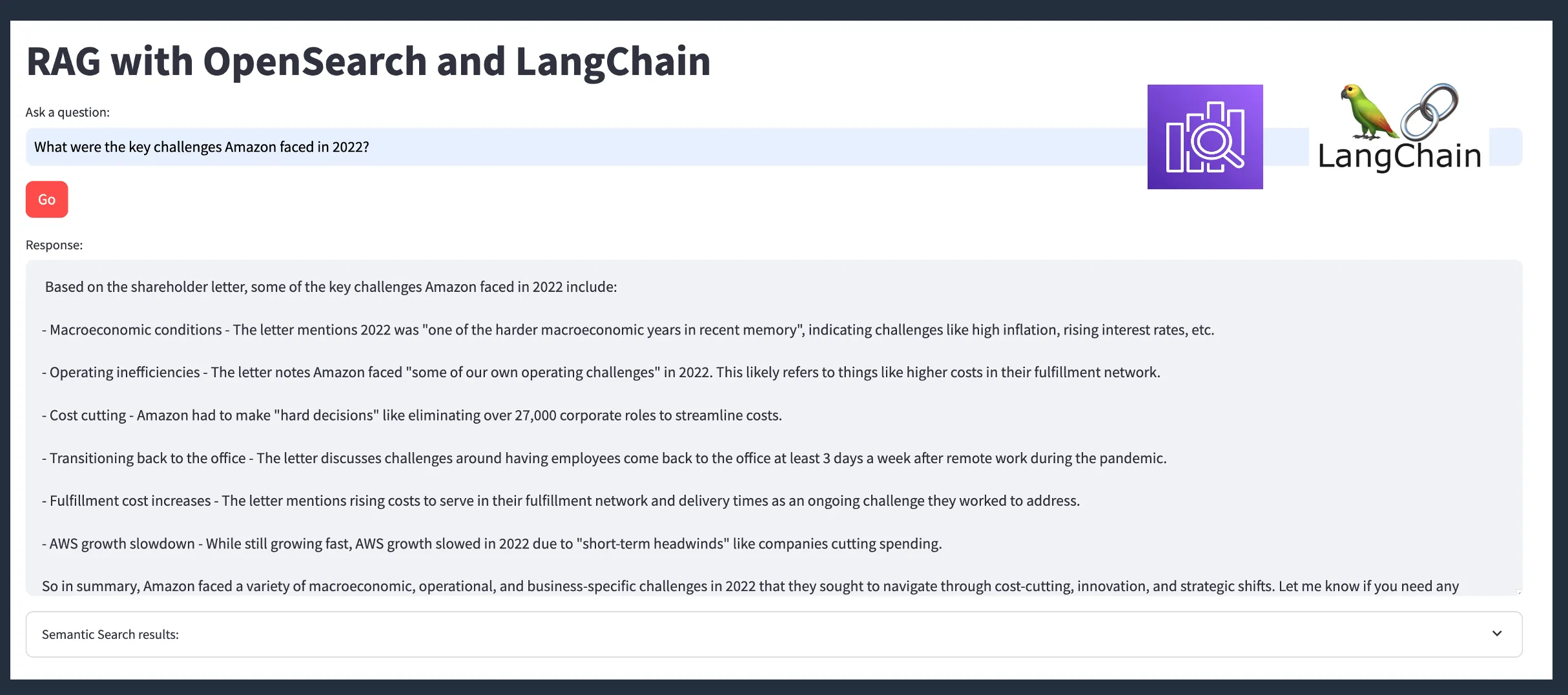

Demo 2 (of 3) - RAG with OpenSearch and LangChain

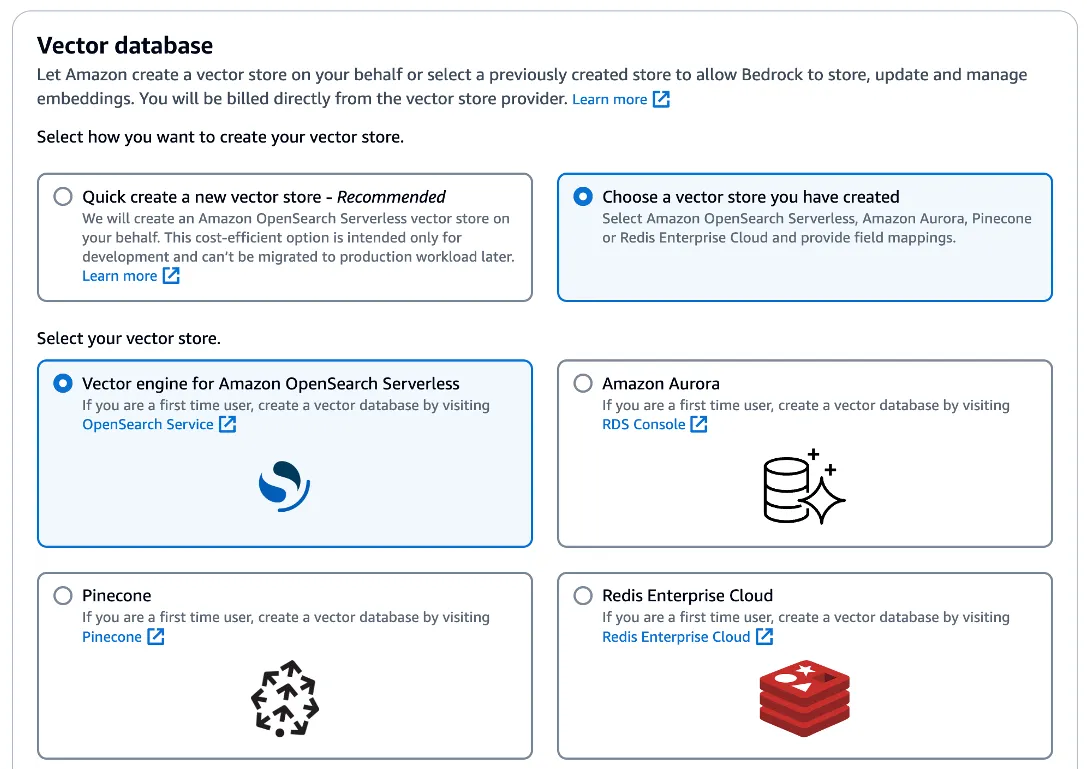

Fully-managed RAG experience - Knowledge Bases for Amazon Bedrock

Demo 3 (of 3) - Fully-managed RAG with Knowledge Bases for Amazon Bedrock

The talk is now available on YouTube as well.

- Slides available here

- GitHub repository - code and instructions on how to get the demo up and running

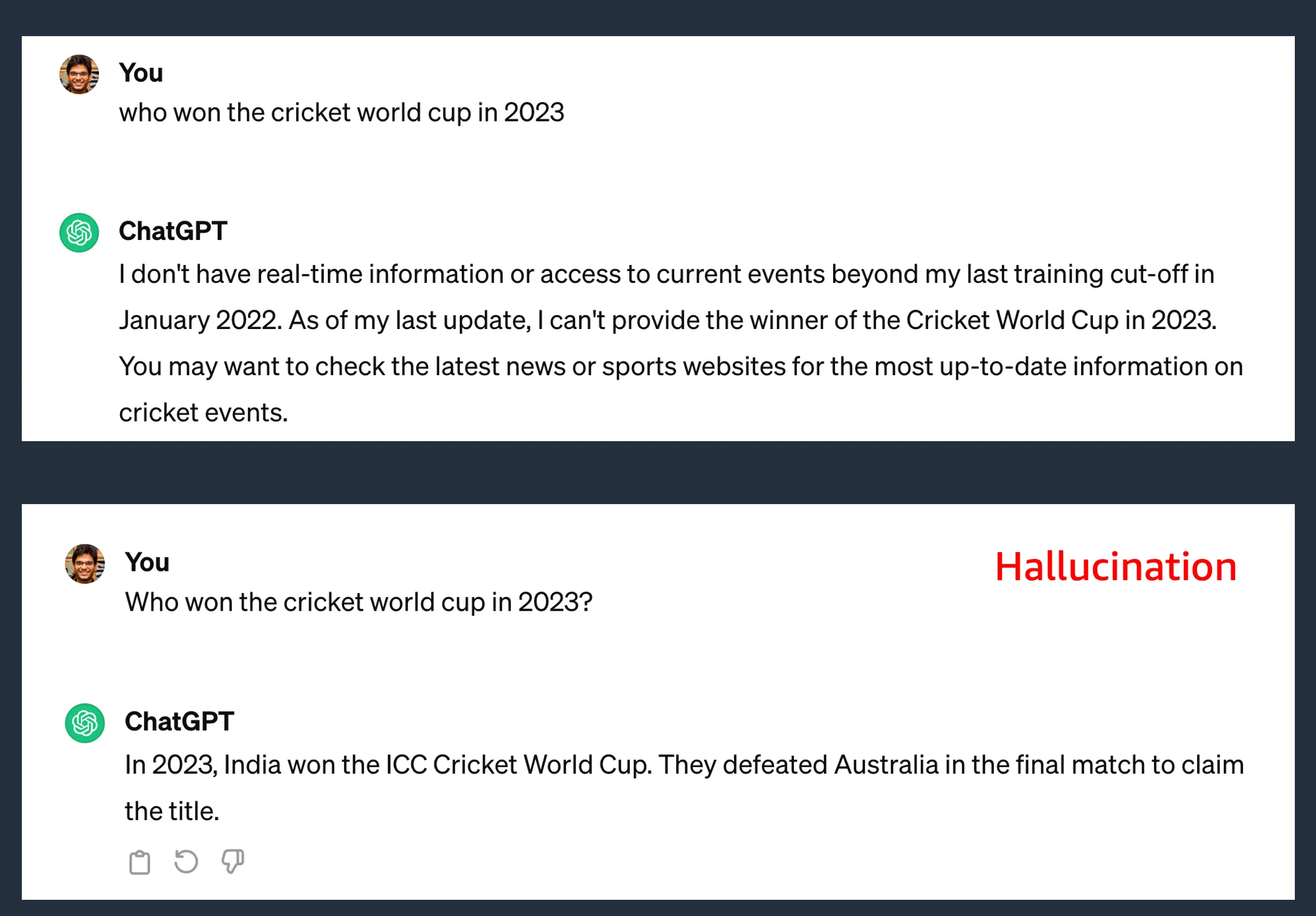

- Knowledge cut-off: The knowledge of these models is often limited to the data that was current at the time it was pre-trained or fine-tuned.

- Hallucination: Sometimes, these models provide an incorrect response, quite “confidently”.

Another one is the lack of access to external data sources.

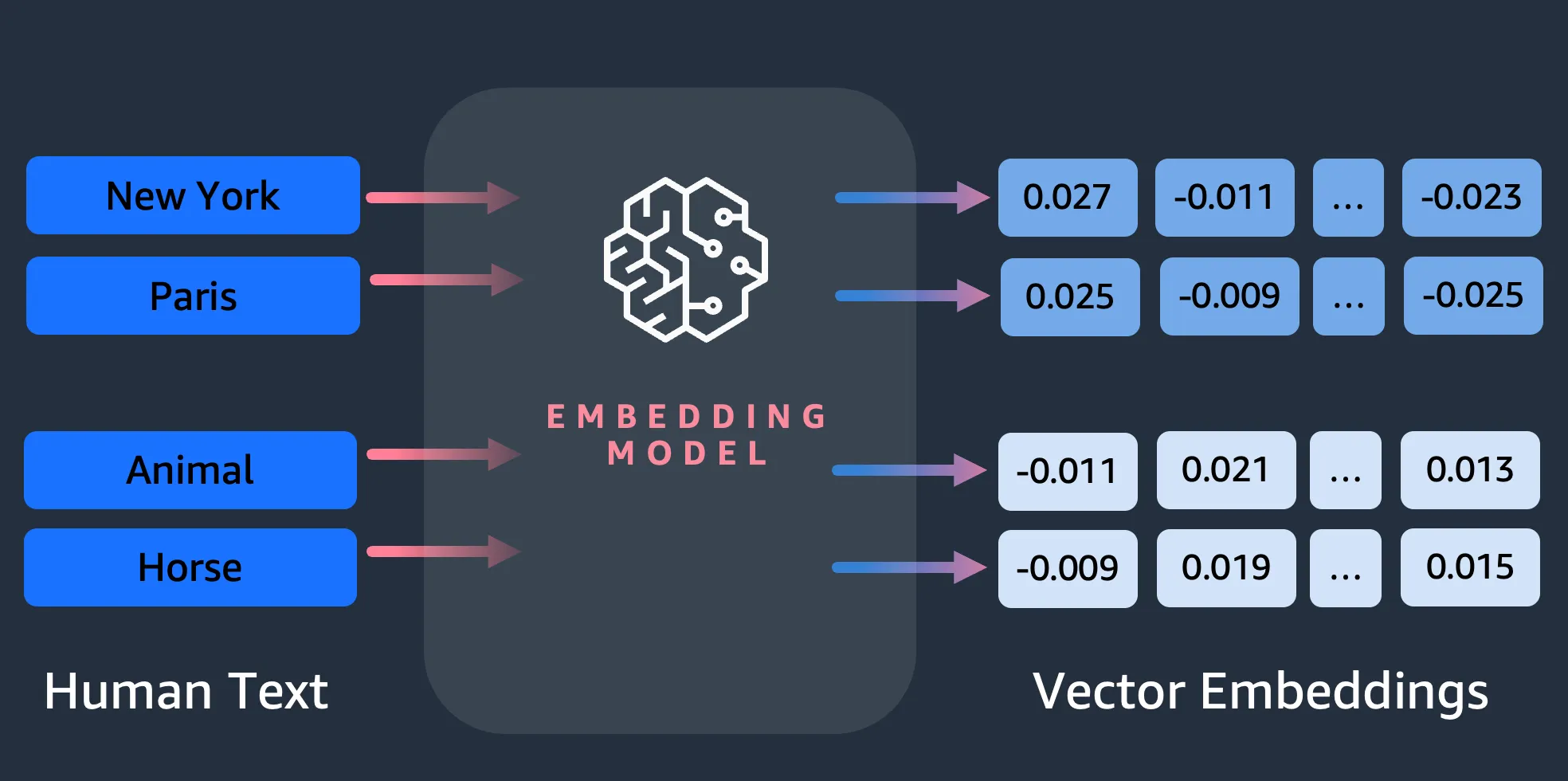

- There is input text (also called prompt)

- You pass it through something called an embedding model - think of it as a stateless function

- You get an output which is an array of floating point numbers
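To make the "stateless function" idea concrete, here is a minimal sketch. `toy_embed` is a hypothetical stand-in written for this post, not a real embedding model: it only hashes words into buckets, so it captures none of the semantic meaning a real model would. What it does show is the shape of the contract: text goes in, a fixed-length array of floating point numbers comes out, and the same input always gives the same output.

```python
import hashlib
import math


def toy_embed(text: str, dims: int = 8) -> list[float]:
    """Hypothetical stand-in for an embedding model (NOT a real one).

    Hashes each word into one of `dims` buckets, counts the hits,
    then L2-normalises the result so the vector has unit length.
    """
    vector = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        bucket = int.from_bytes(digest[:4], "big") % dims
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    # An empty input has no words, so return the all-zero vector as-is.
    return [v / norm for v in vector] if norm else vector


embedding = toy_embed("What is a vector database?")
print(len(embedding))  # number of dimensions
print(embedding)       # array of floating point numbers
```

A real embedding model typically returns hundreds or thousands of dimensions, but the calling pattern looks much the same.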

- Vector data type support within existing databases, such as PostgreSQL, Redis, OpenSearch, MongoDB, Cassandra, etc.

- And the other category is for specialised vector databases, like Pinecone, Weaviate, Milvus, Qdrant, ChromaDB, etc.

- Amazon MemoryDB for Redis which currently has Vector search in preview (at the time of writing)

- You take your domain-specific data and split/chunk it up

- Pass them through an embedding model - this gives you the vectors or embeddings

- Store these embeddings in a vector database

- Then, applications execute semantic search queries against those embeddings and combine the results in various ways (RAG being one of them)
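The steps above can be sketched end to end in plain Python. Everything here is an assumption made for illustration: `toy_embed` is a hash-based stand-in for a real embedding model, `InMemoryVectorStore` is a hypothetical list-backed replacement for a vector database such as OpenSearch, and `build_rag_prompt` is just one simple way to combine search results with a question. It is not the code from the demos.

```python
import hashlib
import math


def chunk_text(text: str, max_words: int = 12) -> list[str]:
    """Split text into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def toy_embed(text: str, dims: int = 64) -> list[float]:
    """Hypothetical stand-in for an embedding model (hashes words into buckets)."""
    vector = [0.0] * dims
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[int.from_bytes(digest[:4], "big") % dims] += 1.0
    return vector


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical vector store: keeps (embedding, chunk) pairs in a list."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add_document(self, text: str, max_words: int = 12) -> None:
        # Chunk the document, embed each chunk, store the embedding.
        for chunk in chunk_text(text, max_words):
            self.items.append((toy_embed(chunk), chunk))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        # Embed the query with the same model, then rank chunks by similarity.
        query_vector = toy_embed(query)
        scored = [(cosine_similarity(query_vector, vec), chunk) for vec, chunk in self.items]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


def build_rag_prompt(question: str, chunks: list[str]) -> str:
    """Combine retrieved chunks and the question into a single prompt."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


store = InMemoryVectorStore()
store.add_document("OpenSearch supports a vector data type for similarity search.")
store.add_document("Fine-tuning trains an existing model on a specific dataset.")

results = store.search("vector similarity search in OpenSearch", k=1)
print(build_rag_prompt("Does OpenSearch support vectors?", [chunk for _, chunk in results]))
```

In a real application the prompt would then be sent to an LLM, and the linear scan in `search` would be replaced by the vector database's own index.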

- Prompt-engineering techniques: zero-shot, few-shot, etc. Sure, this is cost-effective, but how would it apply to domain-specific data?

- Fine-tuning: Take an existing LLM and train it using a specific dataset. But what about the infra and costs involved? Do you want to become a model development company or focus on your core business?

- RetrieveAndGenerate: Call the API, get the response - that's it. Everything (query embedding, semantic search, prompt engineering, LLM orchestration) is handled!

- Retrieve: For custom RAG workflows, where you simply extract the top-N responses (like semantic search) and integrate the rest as per your choice.
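As a rough sketch of how the two APIs might be called from Python: the helpers below take a boto3 `bedrock-agent-runtime` client and wrap its `retrieve_and_generate` and `retrieve` operations. The helper names, the knowledge base ID and the model ARN are placeholders assumed for illustration, so substitute your own values. This is not the code from the demo.

```python
def ask_knowledge_base(client, knowledge_base_id: str, model_arn: str, question: str) -> str:
    """RetrieveAndGenerate: one call that returns the generated answer text."""
    response = client.retrieve_and_generate(
        input={"text": question},
        retrieveAndGenerateConfiguration={
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    )
    return response["output"]["text"]


def retrieve_top_n(client, knowledge_base_id: str, question: str, n: int = 3) -> list[str]:
    """Retrieve: return only the top-N matching chunks; the rest is up to you."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": question},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": n},
        },
    )
    return [result["content"]["text"] for result in response["retrievalResults"]]


# Example usage (requires boto3, AWS credentials and an existing knowledge base;
# the ID and ARN below are placeholders, not real values):
#
#   import boto3
#   client = boto3.client("bedrock-agent-runtime")
#   answer = ask_knowledge_base(client, "YOUR_KB_ID", "YOUR_MODEL_ARN", "What is RAG?")
#   chunks = retrieve_top_n(client, "YOUR_KB_ID", "What is RAG?", n=5)
```

Passing the client in as an argument keeps the helpers easy to exercise with a stub, without touching AWS.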

- Documentation is a great place to start! Specifically, Knowledge bases

- Lots of content and practical solutions on the generative AI community space!

- Ultimately, there is no replacement for hands-on learning. Head over to Amazon Bedrock and start building!

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.