Empowering SQL Developers with Real-Time Data Analytics and Apache Flink

Learn how to use Amazon Managed Service for Apache Flink to analyse streaming data with Flink SQL and deploy a streaming application

Create an Amazon S3 bucket and upload the sample data

Ingest data stored in S3 bucket to Kinesis Data Stream

Create a note in MSF Studio Notebooks (Zeppelin)

Analyse data in KDS with SQL via MSF Studio Notebooks

Query 1: Count number of trips for every borough in NYC

Query 2: Count number of trips that occurred in Manhattan every hour

Build and deploy the MSF Studio Notebooks as a long running app

Some common use cases for real-time data analytics include:

- Clickstream analytics to determine customer behaviour

- Analyse real-time events from IoT devices

- Feed real-time dashboards

- Trigger real-time notifications and alarms

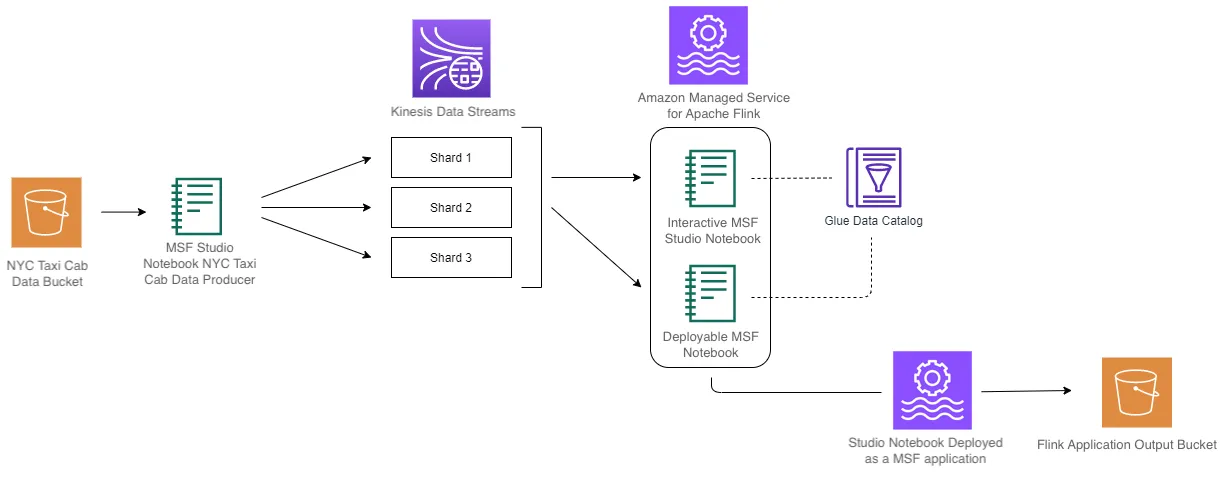

In this blog post we will ingest data into Kinesis Data Streams (KDS) and analyse it using Amazon Managed Service for Apache Flink (MSF).

Apache Flink enables developers familiar with SQL to process and analyse streaming data with ease. Flink offers SQL syntax that supports event processing, time windows, and aggregations. This combination makes it a highly effective streaming query engine.

| About | |
|---|---|
| ✅ AWS Level | 200 |
| ⏱ Time to complete | 45 - 60 minutes |
| 🧩 Prerequisites | An AWS Account |
| 🔨 Services used | Kinesis Data Streams, Amazon Managed Service for Apache Flink, Amazon S3, Glue Data Catalog |
| ⏰ Last Updated | 2024-05-20 |

- How to run SQL queries on streaming data with MSF Studio Notebooks

- How to deploy an MSF Studio notebook as a long-running MSF application

Our sample data is the NYC Taxi Cab Trips data set, which includes fields capturing pick-up and drop-off dates, times and locations, trip distances and more. It will act as our streaming data. However, it does not say which borough each pick-up and drop-off belongs to, so we are going to enrich it with the Taxi Zone Geohash data provided in a separate file.

- Ingest the data from S3 to KDS via MSF Studio Notebooks

- Analyse data in KDS with SQL via MSF Studio Notebooks

- Write processed data to S3

- Build and deploy the MSF application

- Go to the S3 Console

- Click on Create bucket

- Enter a name for the bucket

- Leave all other settings as is and click on Create bucket

- Upload the NYC Taxi Cab Trips file that will represent our stream data and the reference file Taxi Zone Geohash that enriches the stream data.

- Go to the Kinesis Console

- Under data streams click on Create data stream

- Enter `yellow-cab-trip` as the name of the Kinesis Data Stream

- Leave all other settings as is and click on Create data stream

- Go to the MSF Console

- Click on Studio

- Click on Create Studio Notebook

- Click on Create with custom settings under the creation method section

- Enter a studio notebook name

- Select Apache Flink 1.13, Apache Zeppelin 0.9

- Click on Next

- Under the IAM role section, select Create / Update IAM role ... with required policies. Record the name of the IAM role

- Under the AWS Glue database, click on Create. This will open a new tab/window in your web browser

- In the new window opened click on Add database

- Enter a database name

- Click on Create

- Close the new tab/window and return to the one where you were configuring the MSF Studio notebook

- Under the AWS Glue Database section click on the small refresh button and select the database you just created from the drop down

- Leave the rest of the settings with the default configurations and click on Next at the bottom of the screen

- Under the Deploy as application configuration - optional section, click on Browse and select the S3 bucket you created earlier

- Leave the rest of the settings with the default configurations and click on Next at the bottom of the screen

- Click on Create Studio Notebook at the bottom of the screen

- Go to Roles in the IAM Console

- Search for the name of the IAM role that you recorded earlier while creating the MSF Studio notebook

- Click on Add permissions and then select Attach policies

- Search for and add AmazonS3FullAccess, AmazonKinesisFullAccess and AWSGlueServiceRole

- Go to the MSF Console

- Click on Studio

- Click on the MSF studio instance you created

- Click Run

- Click on Open in Apache Zeppelin

- Click on Create new note and select Flink for the Default Interpreter

Replace `<BUCKET_NAME>` in the path with the name of the S3 bucket you created earlier.

```sql
%flink.ssql

DROP TEMPORARY TABLE IF EXISTS s3_yellow_trips;

CREATE TEMPORARY TABLE s3_yellow_trips (
  `VendorID` INT,
  `tpep_pickup_datetime` TIMESTAMP(3),
  `tpep_dropoff_datetime` TIMESTAMP(3),
  `passenger_count` INT,
  `trip_distance` FLOAT,
  `RatecodeID` INT,
  `store_and_fwd_flag` STRING,
  `PULocationID` INT,
  `DOLocationID` INT,
  `payment_type` INT,
  `fare_amount` FLOAT,
  `extra` FLOAT,
  `mta_tax` FLOAT,
  `tip_amount` FLOAT,
  `tolls_amount` FLOAT,
  `improvement_surcharge` FLOAT,
  `total_amount` FLOAT,
  `congestion_surcharge` FLOAT
)
WITH (
  'connector' = 'filesystem',
  'path' = 's3://<BUCKET_NAME>/reference_data/yellow_tripdata_2020-01_noHeader.csv',
  'format' = 'csv'
);
```
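Before creating the sink, it may be worth checking that the source table can actually read the uploaded CSV file. The paragraph below is only a minimal sketch of such a check, assuming the file has already been uploaded to the path used in the `s3_yellow_trips` definition; the alias `row_count` is a name chosen here for illustration.

```sql
%flink.ssql(type=update)

-- Assumption: the CSV file is already in the bucket at the path
-- configured for s3_yellow_trips.
SELECT COUNT(*) AS row_count
FROM s3_yellow_trips
```

The count should climb and then settle once the whole file has been read. An error at this point often means that the bucket name or path in the table definition does not match the uploaded file, or that the notebook role cannot read the bucket.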

Create the Kinesis sink table, which writes to the `yellow-cab-trip` stream.

```sql
%flink.ssql

DROP TEMPORARY TABLE IF EXISTS kinesis_yellow_trips;

CREATE TEMPORARY TABLE kinesis_yellow_trips (
  `VendorID` INT,
  `tpep_pickup_datetime` TIMESTAMP(3),
  `tpep_dropoff_datetime` TIMESTAMP(3),
  `passenger_count` INT,
  `trip_distance` FLOAT,
  `RatecodeID` INT,
  `store_and_fwd_flag` STRING,
  `PULocationID` INT,
  `DOLocationID` INT,
  `payment_type` INT,
  `fare_amount` FLOAT,
  `extra` FLOAT,
  `mta_tax` FLOAT,
  `tip_amount` FLOAT,
  `tolls_amount` FLOAT,
  `improvement_surcharge` FLOAT,
  `total_amount` FLOAT,
  `congestion_surcharge` FLOAT
)
WITH (
  'connector' = 'kinesis',
  'stream' = 'yellow-cab-trip',
  'aws.region' = 'us-east-1',
  'format' = 'json'
);
```

Use an INSERT INTO statement to insert all data from the S3 source table into the Kinesis sink table.

```sql
%flink.ssql(type=update, parallelism=1)

INSERT INTO kinesis_yellow_trips SELECT * FROM s3_yellow_trips
```

The Ingestion_to_KDS note will send data to the Kinesis Data Stream for approximately 30 minutes. You may need to rerun the note periodically to keep sample data flowing into the stream. If you are working on a subsequent note and do not see any results, check that this note is still running and does not need to be restarted.

The `trips` table contains the pickup location ID (`PULocationID`), but it does not say in which borough the trip started. Luckily, a separate file on S3 named `taxi_zone_with_geohash.csv` tells us which borough every `LocationID` corresponds to.

The following table reads the `taxi_zone_with_geohash.csv` file.

```sql
%flink.ssql

DROP TABLE IF EXISTS locations;

CREATE TABLE locations (
  `LocationID` INT,
  `borough` STRING,
  `zone` STRING,
  `service_zone` STRING,
  `latitude` FLOAT,
  `longitude` FLOAT,
  `geohash` STRING
) WITH (
  'connector' = 'filesystem',
  'path' = 's3://<BUCKET_NAME>/taxi_zone_with_geohash.csv',
  'format' = 'csv',
  'csv.ignore-parse-errors' = 'true'
)
```

Query 1: Count the number of trips for every borough in NYC by joining `trips` with the reference table `locations`.

```sql
%flink.ssql(type=update)

SELECT
  locations.borough AS borough,
  COUNT(*) AS total_trip_pickups
FROM trips
JOIN locations
  ON trips.PULocationID = locations.LocationID
GROUP BY locations.borough
```
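The queries in this section read from a table named `trips`, whose definition does not appear in this post. The following is only a sketch of what such a table could look like, assuming it reads the JSON records from the `yellow-cab-trip` stream with the same columns as `kinesis_yellow_trips`. The watermark on `tpep_dropoff_datetime`, its 5-second delay, and the `scan.stream.initpos` setting are assumptions made here for illustration, not the author's definition.

```sql
%flink.ssql

DROP TABLE IF EXISTS trips;

-- Assumption: same columns as kinesis_yellow_trips, read back from the stream.
CREATE TABLE trips (
  `VendorID` INT,
  `tpep_pickup_datetime` TIMESTAMP(3),
  `tpep_dropoff_datetime` TIMESTAMP(3),
  `passenger_count` INT,
  `trip_distance` FLOAT,
  `RatecodeID` INT,
  `store_and_fwd_flag` STRING,
  `PULocationID` INT,
  `DOLocationID` INT,
  `payment_type` INT,
  `fare_amount` FLOAT,
  `extra` FLOAT,
  `mta_tax` FLOAT,
  `tip_amount` FLOAT,
  `tolls_amount` FLOAT,
  `improvement_surcharge` FLOAT,
  `total_amount` FLOAT,
  `congestion_surcharge` FLOAT,
  -- Assumption: event time is the drop-off time, tolerating 5 seconds of lateness.
  WATERMARK FOR `tpep_dropoff_datetime` AS `tpep_dropoff_datetime` - INTERVAL '5' SECOND
) WITH (
  'connector' = 'kinesis',
  'stream' = 'yellow-cab-trip',
  'aws.region' = 'us-east-1',
  -- Assumption: start from the oldest record still retained in the stream.
  'scan.stream.initpos' = 'TRIM_HORIZON',
  'format' = 'json'
)
```

Declaring a watermark makes `tpep_dropoff_datetime` an event-time attribute, which a `TUMBLE` window over that column requires. Adjust the region and the delay to match your own setup.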

Query 2: Count the number of trips that occurred in Manhattan every hour

```sql
%flink.ssql(type=update)

SELECT COUNT(*) AS total_trip_pickups
FROM trips
WHERE EXISTS (
  SELECT *
  FROM locations
  WHERE locations.LocationID = trips.PULocationID
    AND locations.borough = 'Manhattan'
)
GROUP BY TUMBLE(trips.tpep_dropoff_datetime, INTERVAL '60' MINUTE)
```

To write processed data to S3, use an INSERT INTO statement. First, define a sink table to write the data into, and then run the corresponding query.

Point the `path` option at your own S3 bucket.

```sql
%flink.ssql(type=update)

DROP TABLE IF EXISTS s3_query1;

CREATE TABLE s3_query1 (
  `window_start` TIMESTAMP(3),
  `borough` STRING,
  total_trip_pickups BIGINT
) PARTITIONED BY (`window_start`) WITH (
  'connector' = 'filesystem',
  'path' = 's3://nyc-yellow-cab-trip/',
  'format' = 'json',
  'sink.partition-commit.policy.kind' = 'success-file',
  'sink.partition-commit.delay' = '1 s'
)
```

```sql
%flink.ssql(type=update, parallelism=1)

INSERT INTO s3_query1
SELECT
  TUMBLE_START(trips.tpep_dropoff_datetime, INTERVAL '1' DAY) AS window_start,
  locations.borough AS borough,
  COUNT(*) AS total_trip_pickups
FROM trips
JOIN locations
  ON trips.PULocationID = locations.LocationID
GROUP BY locations.borough, TUMBLE(trips.tpep_dropoff_datetime, INTERVAL '1' DAY)
```

To build and deploy the MSF Studio notebook, follow the steps below:

- Select the first option, Build `<Filename>`, from the Actions for `<Filename>` dropdown at the top right

- Wait for the build to complete. It might take several minutes

- Select Deploy `<Filename>` from the Actions for `<Filename>` dropdown at the top right

- Leave all other settings as default and click Create Streaming Application

- Wait for the deployment to complete. It might take several minutes

- Once the deployment is complete, go to the MSF Console

- Notice your application under the Streaming applications

- To start the application, first select it and then click on Run

- Now your MSF Studio Notebook is deployed as an MSF application

- Go to the Kinesis Console

- Select the data stream

- Click on Actions and select Delete

- Go to the S3 Console

- Select the S3 bucket, click on Empty to remove its contents, and then click on Delete

- Go to the MSF Console

- Select the Streaming applications

- Click on Actions and select Delete

- Click on Studio

- Select the Studio notebooks

- Click on Actions and select Delete
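The steps above do not remove the table definitions that the notes registered in the AWS Glue database, because tables created without the TEMPORARY keyword are stored in the Glue Data Catalog. As a minimal sketch, assuming the Studio notebook is still running (so this would have to happen before the notebook is deleted) and that you no longer need the tables, a paragraph like the following would drop them:

```sql
%flink.ssql

-- Assumption: these are the only non-temporary tables created in this walkthrough.
DROP TABLE IF EXISTS locations;

DROP TABLE IF EXISTS s3_query1;
```

The Glue database itself can then be deleted from the AWS Glue console.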

You can also analyse data using programming languages like Java, Python and Scala as explained in this Workshop.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.