AWS open source newsletter, #197

A round up of the latest open source news, projects, and events that every open source developer should know about.

Sr Open Source Developer Advocate - Valkey

evaluating-large-language-models-using-llm-as-a-judge

Demos, Samples, Solutions and Workshops

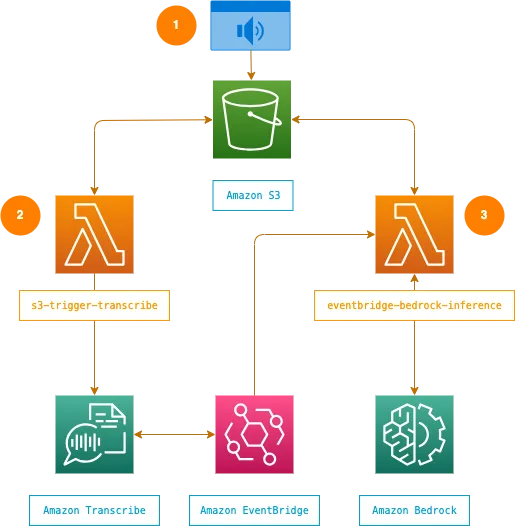

amazon-bedrock-audio-summarizer

The best from around the Community

Keynote Fifteen years of formal methods at AWS

Talking with Management About Open Source

PyCon Italia May 22nd-25th, Florence Italy

BSides Exeter July 27th, Exeter University, UK

Cortex Every other Thursday, next one 16th February

OpenSearch Every other Tuesday, 3pm GMT

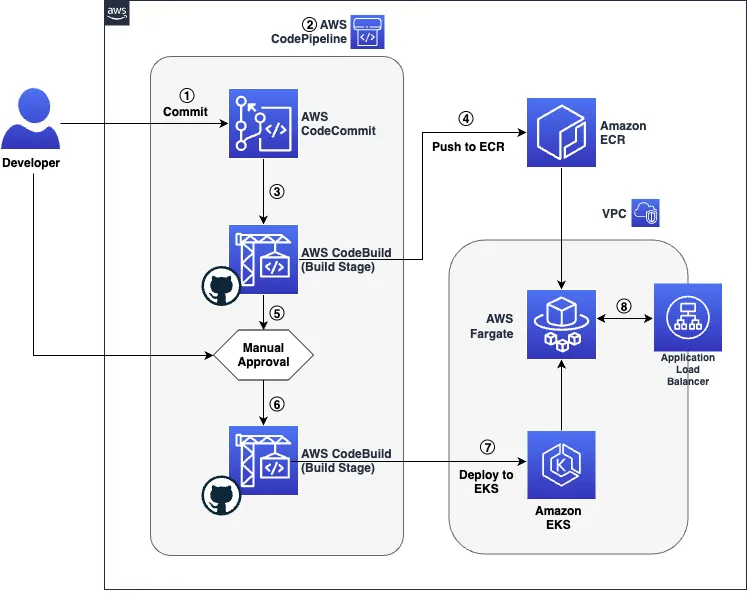

- Simplify Amazon EKS Deployments with GitHub Actions and AWS CodeBuild walks through using GitHub Actions and AWS CodeBuild to simplify Amazon EKS deployments [hands on]

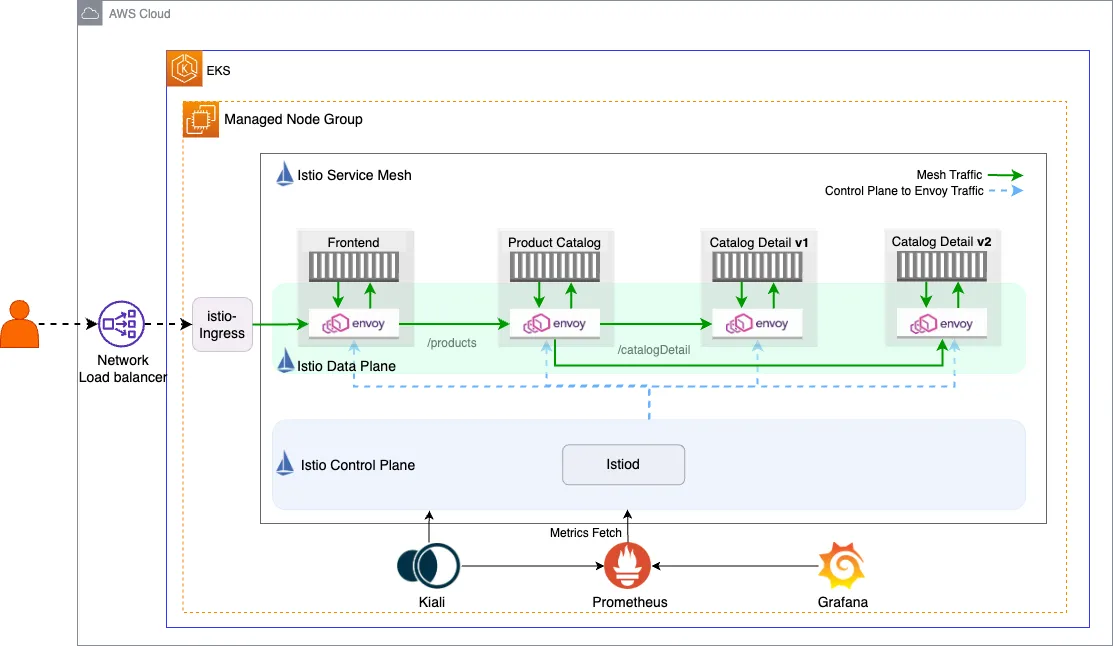

- Enhancing Network Resilience with Istio on Amazon EKS dives into how Istio on Amazon EKS can enhance network resilience for microservices. By providing critical features like timeouts, retries, circuit breakers, rate limiting, and fault injection, Istio enables microservices to maintain responsive communication even when facing disruptions [hands on]

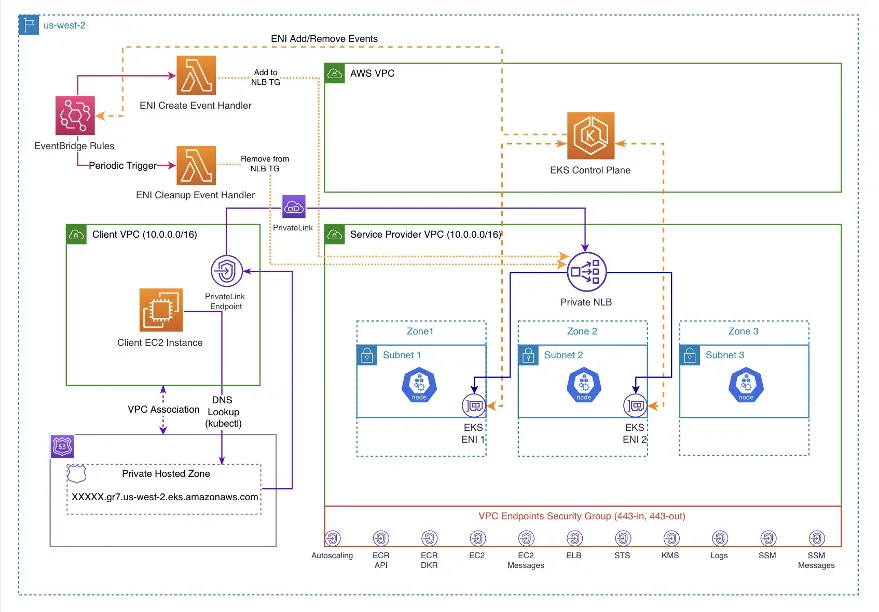

- Enable Private Access to the Amazon EKS Kubernetes API with AWS PrivateLink provides an innovative blueprint for harnessing AWS PrivateLink as a solution to enable private, cross-VPC, and cross-account access to the Amazon EKS Kubernetes API [hands on]

- Dive deep into security management: The Data on EKS Platform is a comprehensive guide on permission management in big data, particularly within the Amazon EKS platform using Apache Ranger, that equips you with the essential knowledge and tools for robust data security and management [hands on]

- How Slack adopted Karpenter to increase Operational and Cost Efficiency shows Slack’s journey to modernise its container platform on Amazon EKS, and how it increased cost savings and improved operational efficiency by leveraging Karpenter

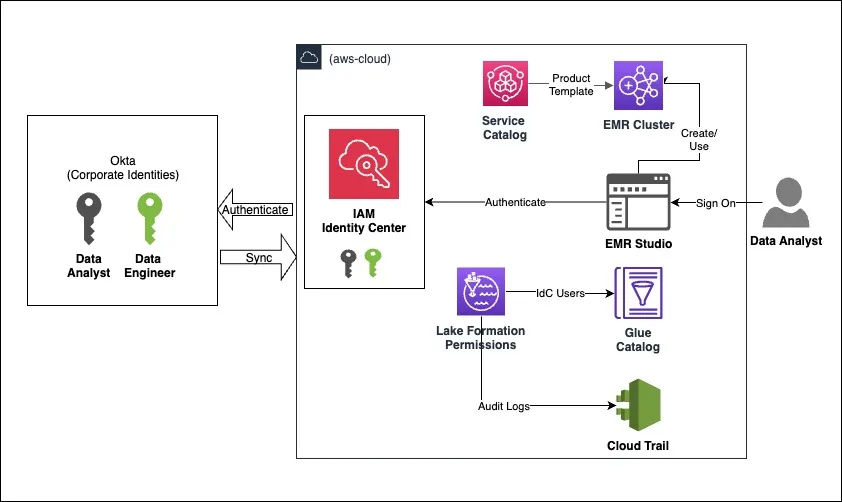

- Use your corporate identities for analytics with Amazon EMR and AWS IAM Identity Center provides guidance on how to bring your IAM Identity Center identities to EMR Studio for analytics use cases, directly manage fine-grained permissions for corporate users and groups using Lake Formation, and audit their data access [hands on]

- Use Kerberos authentication with Amazon Aurora MySQL shows you how to integrate self-managed Microsoft AD with AWS Managed Microsoft AD, enable Kerberos authentication in Amazon Aurora MySQL, and authenticate with Kerberos from Windows and Linux clients [hands on]

- Enhance PostgreSQL database security using hooks with Trusted Language Extensions has a look at enhancements made to hooks in TLE, and shows an example of how to create a client authentication hook [hands on]

- Managing object dependencies in PostgreSQL – Overview and helpful inspection queries (Part 1) and (Part 2) is a two-part post that explores object binding in PostgreSQL, and how understanding and managing object dependencies is crucial for ensuring data integrity and making changes to the database without causing unexpected issues [hands on]

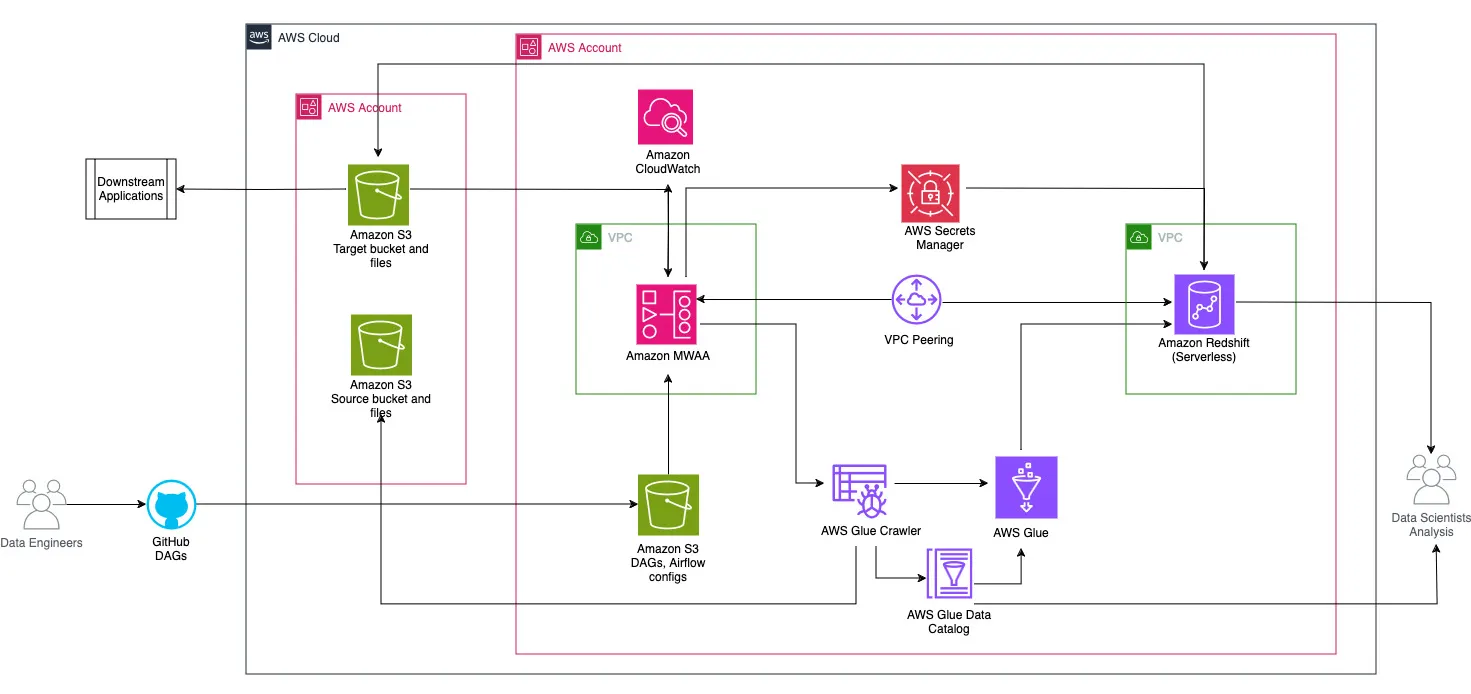

- Orchestrate an end-to-end ETL pipeline using Amazon S3, AWS Glue, and Amazon Redshift Serverless with Amazon MWAA demonstrates how to orchestrate an end-to-end extract, transform, and load (ETL) pipeline using Amazon Simple Storage Service (Amazon S3), AWS Glue, and Amazon Redshift Serverless with Amazon MWAA [hands on]

- Build APIs using OpenAPI, the AWS CDK and AWS Solutions Constructs shows you how you can build a robust, functional REST API based on an OpenAPI specification using the AWS CDK and AWS Solutions Constructs [hands on]

- Using ProxySQL to Replace Deprecated MySQL 8.0 Query Cache is a guide that walks you through how to optimise Amazon Aurora/RDS MySQL 8.0 performance by using ProxySQL to replace the deprecated Query Cache [hands on]

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.