Bridging the Last Mile in LangChain Application Development

Deploying LangChain applications can be complex because they depend on a variety of cloud services. This article examines the challenges developers face when deploying with AWS CDK or the AWS console, and introduces Pluto, which lets developers focus on business logic rather than tedious configuration.

The langchain-aws-template GitHub repository, listed under Resources at the end of the video, contains two example applications that integrate AWS with LangChain, each with a deployment guide. The deployment process consists of four steps:

- Create a dedicated Python environment using Conda;

- Configure keys and other necessary application data;

- Execute a Bash script to package the application;

- Deploy the application using AWS CDK.
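The "configure keys" step is where omissions tend to slip through unnoticed. As a minimal sketch (not part of langchain-aws-template), a startup check like the one below reports every missing setting at once instead of letting the first request fail. The function name, exception class, and setting names are hypothetical.

```python
from __future__ import annotations

import os
from typing import Mapping, Optional


class MissingConfigError(RuntimeError):
    """Raised when one or more required settings are absent or empty (hypothetical helper)."""


def load_required_config(names: list[str], env: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Return the requested settings, or raise an error listing *all* missing ones.

    `env` defaults to the process environment; passing a dict makes the check easy to test.
    """
    source = os.environ if env is None else env
    # Treat empty strings as missing, since an empty key is as broken as an absent one
    missing = [name for name in names if not source.get(name)]
    if missing:
        raise MissingConfigError("missing required settings: " + ", ".join(missing))
    return {name: source[name] for name in names}


# Hypothetical settings for a LangChain app on AWS; adjust to your application
REQUIRED = ["OPENAI_API_KEY", "CHAT_TABLE_NAME"]

if __name__ == "__main__":
    try:
        config = load_required_config(REQUIRED)
    except MissingConfigError as err:
        print(err)
```

Calling this once at module import time surfaces a configuration gap as soon as the function starts, rather than in the middle of handling a user request.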

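Whether the resources are defined with AWS CDK or created manually in the AWS console, this approach has several drawbacks:

- Prone to errors: Both methods essentially involve manually creating fine-grained service instances, which can lead to missing or incorrect configuration that is hard to detect at deployment time and only surfaces when the application runs.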

- Requires AWS background knowledge: Whether defining service instances in CDK code or creating them manually in the console, developers need an in-depth understanding of AWS services, including direct dependencies such as DynamoDB and S3 and indirect ones such as IAM.

- Tedious permission configuration: For security reasons, permissions for each resource instance are usually granted according to the principle of least privilege. Managing these permissions by hand through CDK or the console is cumbersome, and it is easy to forget to update them after the business code changes.

- Dependency management: When publishing a LangChain application as an AWS Lambda function, the application's SDK dependencies must be packaged along with it. Developers have to manage this manually, which can lead to missing dependencies. If the local machine's operating system or CPU architecture differs from that of the Lambda runtime, packaging becomes even more troublesome.
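A common workaround for the platform mismatch is to ask pip explicitly for Linux wheels built for the Lambda architecture. The sketch below only builds the `pip install` command; it assumes the dependencies are listed in a `requirements.txt` and that each one publishes a manylinux wheel, because `--only-binary=:all:` refuses source builds. The helper name is hypothetical, and the architecture-to-platform-tag mapping should be checked against your target runtime.

```python
import sys
from typing import List

# Wheel platform tags commonly used for the two Lambda architectures
# (assumption: a manylinux2014-compatible Amazon Linux runtime)
LAMBDA_PLATFORM_TAGS = {
    "x86_64": "manylinux2014_x86_64",
    "arm64": "manylinux2014_aarch64",
}


def lambda_pip_command(
    architecture: str,
    python_version: str,
    target_dir: str = "package",
    requirements: str = "requirements.txt",
) -> List[str]:
    """Build a pip command that fetches Linux wheels for Lambda, regardless of the local OS/CPU."""
    try:
        platform_tag = LAMBDA_PLATFORM_TAGS[architecture]
    except KeyError:
        raise ValueError(f"unsupported Lambda architecture: {architecture!r}") from None
    return [
        sys.executable, "-m", "pip", "install",
        "--platform", platform_tag,
        "--implementation", "cp",
        "--python-version", python_version,
        "--only-binary=:all:",  # refuse source builds that would compile for the local machine
        "--upgrade",
        "--target", target_dir,  # pip requires --target when platform-specific options are used
        "-r", requirements,
    ]


if __name__ == "__main__":
    # Print rather than execute; pass the list to subprocess.run(cmd, check=True) to install
    print(" ".join(lambda_pip_command("arm64", "3.11")))
```

The contents of the target directory are then zipped together with the application code; this is exactly the kind of per-platform bookkeeping that is easy to get wrong by hand.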


import os

from pluto_client import Router, HttpRequest, HttpResponse

from langchain_core.pydantic_v1 import SecretStr

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_template("tell me a short joke about {topic}")

model = ChatOpenAI(

model="gpt-3.5-turbo",

api_key=SecretStr(os.environ["OPENAI_API_KEY"]),

)

output_parser = StrOutputParser()

def handler(req: HttpRequest) -> HttpResponse:

chain = prompt | model | output_parser

topic = req.query.get("topic", "ice cream")

joke = chain.invoke({"topic": topic})

return HttpResponse(status_code=200, body=joke)

router = Router("pluto")

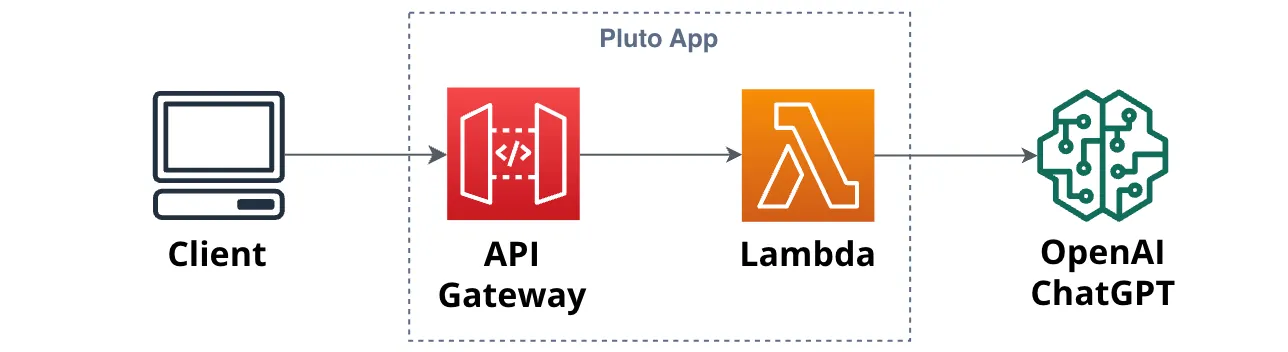

router.get("/", handler)pluto deploy, Pluto can construct the application architecture shown in the figure below on the AWS platform. During the process, it will automatically create instances for the API Gateway and Lambda, and configure the routing, triggers, permissions, etc., from API Gateway to Lambda.
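To make concrete what configuring the permissions from API Gateway to Lambda involves when done by hand, the sketch below builds the resource-based policy statement that lets one API Gateway API, and only that API, invoke the function. It illustrates least-privilege scoping and is not Pluto's actual output; the helper name, region, account ID, API ID, and function name are placeholders.

```python
import json
from typing import Any, Dict


def apigw_invoke_statement(
    region: str,
    account_id: str,
    function_name: str,
    api_id: str,
    method: str = "GET",
    path: str = "/",
) -> Dict[str, Any]:
    """Resource-based policy statement allowing a single API route to invoke a Lambda function."""
    function_arn = f"arn:aws:lambda:{region}:{account_id}:function:{function_name}"
    # execute-api ARN layout: api-id/stage/METHOD/resource-path; "*" matches any stage
    source_arn = f"arn:aws:execute-api:{region}:{account_id}:{api_id}/*/{method.upper()}{path}"
    return {
        "Sid": "AllowApiGatewayInvoke",
        "Effect": "Allow",
        "Principal": {"Service": "apigateway.amazonaws.com"},
        "Action": "lambda:InvokeFunction",
        "Resource": function_arn,
        # Without this condition the grant would not be tied to this specific API and route
        "Condition": {"ArnLike": {"AWS:SourceArn": source_arn}},
    }


if __name__ == "__main__":
    # Placeholder identifiers for illustration only
    stmt = apigw_invoke_statement("us-east-1", "123456789012", "pluto-handler", "a1b2c3")
    print(json.dumps(stmt, indent=2))
```

Every route and every function needs a statement like this kept in sync with the code, which is why generating it from the application itself is attractive.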

When the business code changes, running `pluto deploy` again is enough: Pluto automatically updates the application's infrastructure configuration with no extra steps from the developer. This addresses the issues raised earlier, namely error-prone configuration, code packaging, and cumbersome permission management.