Your idea is my command: multi-agent collaboration for software development 💡🚀

Create your own virtual software company with Amazon Bedrock ⛰️ and ChatDev 👨🏼‍💻 and use it to build custom applications through LLM-powered multi-agent interactions.

João Galego

Amazon Employee

Published Jun 3, 2024

🦥 "Procrastination is like a credit card: it's a lot of fun until you get the bill" ―Christopher Parker

We all have that one super-secret, this-might-just-work idea that we're too afraid to share with others.

Yes, that one... the one that has always been there, a constant gnawing in the back of your mind reminding you not to procrastinate. The one that is just too good to pass up, but that you never seem to have the time or the resources to work on.

What if we could change that? What if you had a whole team working for you 24/7? A dedicated workforce whose sole purpose in "life" is to turn your ideas... into reality?

If this sounds too good to be true, think again.

Today's blog post is about ChatDev 👨‍💻, an open source project for creating virtual software companies powered by AI agents, and some of my attempts to hack it using Amazon Bedrock.

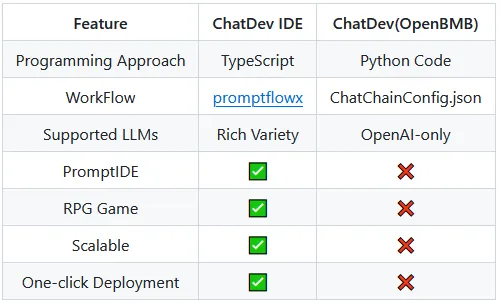

If you've never tried ChatDev (not to be confused with ChatDev IDE), here's the general idea.

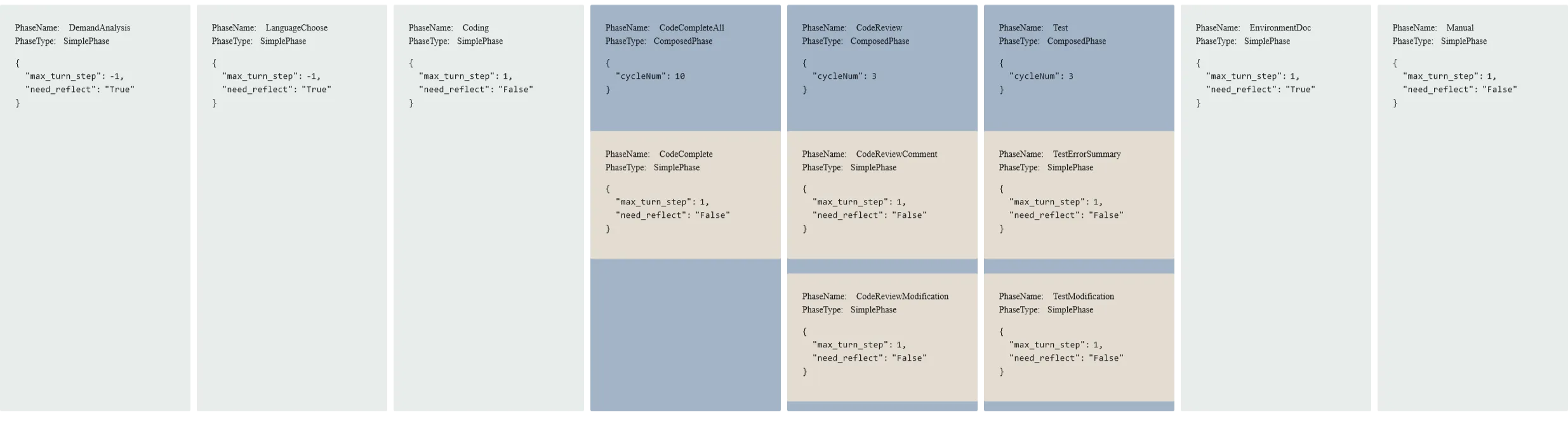

You start by choosing a "team" (RoleConfig.json) and defining how the project will be structured (ChatChainConfig.json) - zoom in if needed 🔎

Both configurations are optional, but you'll get better results if you tweak the defaults.
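To get a feel for what the chain configuration controls, here is a minimal Python sketch that flattens a chain into the phases the team walks through, in order. It assumes the file holds a `chain` list whose entries carry a `phase` name and, for composed phases such as code review loops, a nested `Composition` list; that is how the default ChatChainConfig.json appears to be laid out, so double-check it against your copy. `list_phases`, `load_chain` and the sample chain are hypothetical, not part of ChatDev.

```python
import json
from typing import Any


def list_phases(chain: list[dict[str, Any]], depth: int = 0) -> list[str]:
    """Flatten a ChatChainConfig-style "chain" into indented phase names.

    Assumption: each step has a "phase" key, and composed phases keep
    their sub-phases in a "Composition" list.
    """
    names: list[str] = []
    for step in chain:
        names.append("  " * depth + step["phase"])
        # Recurse into composed phases (e.g. review/modify loops), if present
        names.extend(list_phases(step.get("Composition", []), depth + 1))
    return names


def load_chain(path: str) -> list[dict[str, Any]]:
    """Read a ChatChainConfig.json file and return its "chain" list."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)["chain"]


if __name__ == "__main__":
    # Hypothetical, trimmed-down chain used only for illustration
    sample = [
        {"phase": "DemandAnalysis", "phaseType": "SimplePhase"},
        {"phase": "Coding", "phaseType": "SimplePhase"},
        {
            "phase": "CodeReview",
            "phaseType": "ComposedPhase",
            "Composition": [
                {"phase": "CodeReviewComment", "phaseType": "SimplePhase"},
                {"phase": "CodeReviewModification", "phaseType": "SimplePhase"},
            ],
        },
    ]
    print("\n".join(list_phases(sample)))
```

Pointing `load_chain` at your own ChatChainConfig.json and printing `list_phases(...)` is a quick way to confirm which phases a tweaked configuration will actually run.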

Once this is done, you just give "everyone" an idea to work on and wait a couple of minutes ⏳

Need inspiration? 🤔💡✨ Check out the Community Contribution Software list.

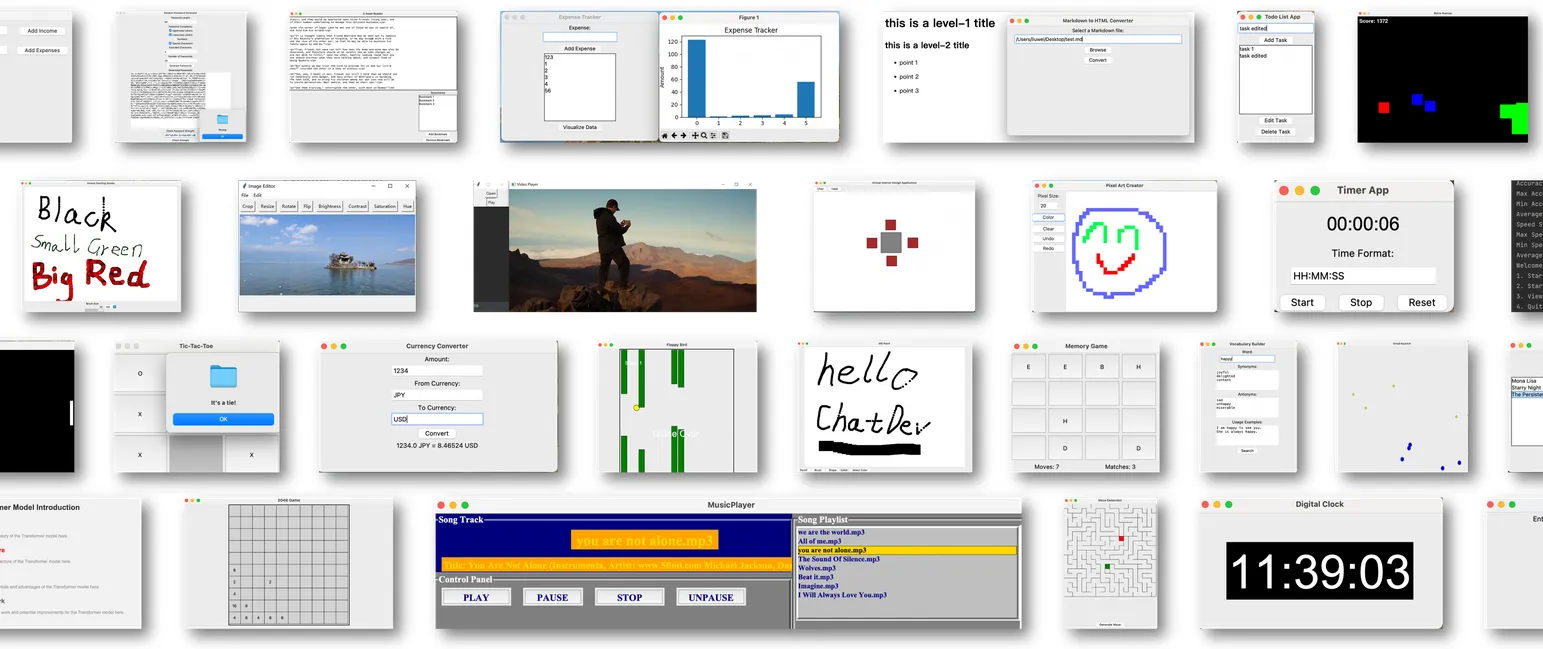

ChatDev will be able to generate code, documentation and more, depending on the deliverables specified in the project configuration. Cool, right?

I'll dive deeper into the implementation in the next section, but for now, suffice it to say that every team member (agent) will be powered by one or more models through Amazon Bedrock.

Enough chit-chat about ChatDev... let's take it for a spin! 🎰

Want to learn more about ChatDev? 👀 Scroll all the way down to the References section!

🙋‍♂️ "There is only one boss. The customer" ―Sam Walton

Before we get started, take some time to perform the following prerequisite actions:

- Make sure these tools are installed and properly configured:

- Request model access via Amazon Bedrock

💡 For more information on how to enable model access, please refer to the Amazon Bedrock User Guide (Set up > Model access)

Let's start by creating a new Conda environment to keep everything isolated.

Instead of the original project (OpenBMB/ChatDev), we'll clone the one attached to this pull request (PR).

The idea behind this PR is to add support for custom models beyond those offered by OpenAI. The hack-y part is that we want to implement this without affecting the original functionality and with minimal code changes.

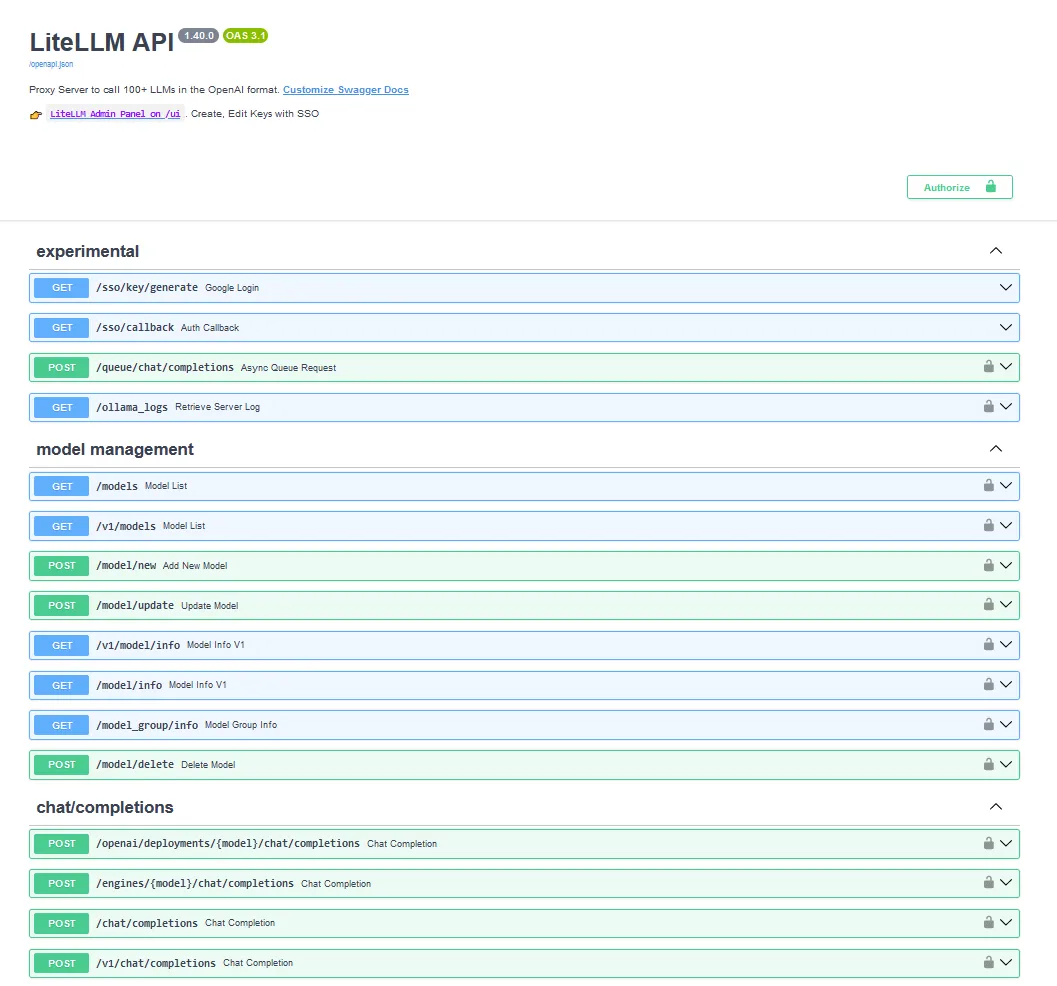

The solution, which is still under review (so you're free to think of a better one), is to use an OpenAI-compatible proxy like LiteLLM or Bedrock Access Gateway and adjust the OpenAI environment variables (OPENAI_BASE_URL and OPENAI_API_KEY) accordingly. We also need to add some environment variables to select the models for generating text (CHATDEV_CUSTOM_MODEL) and images (CHATDEV_CUSTOM_IMAGE_MODEL), but that's about it.

Let's go ahead and install the ChatDev dependencies

plus the LiteLLM Proxy with Boto3 so we can call Amazon Bedrock

Next, let's create a proxy configuration for the text (Claude 3 Sonnet) and image (SDXL) models
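LiteLLM's proxy reads a YAML file with a `model_list`, where each entry maps a public alias (`model_name`) to the underlying provider model (`litellm_params.model`). The sketch below renders such a file for the two Bedrock models. The aliases `claude-3-sonnet` and `sdxl` are arbitrary names picked for illustration, and the model IDs assume Claude 3 Sonnet and SDXL 1.0 are enabled in your Region.

```python
# Hypothetical aliases mapped to Bedrock model IDs (via LiteLLM's "bedrock/" prefix).
# Check that both models are enabled in the Region you plan to use.
MODELS = {
    "claude-3-sonnet": "bedrock/anthropic.claude-3-sonnet-20240229-v1:0",
    "sdxl": "bedrock/stability.stable-diffusion-xl-v1",
}


def render_litellm_config(models: dict[str, str]) -> str:
    """Render a minimal LiteLLM proxy config (just a model_list) as YAML text."""
    lines = ["model_list:"]
    for alias, target in models.items():
        lines += [
            f"  - model_name: {alias}",
            "    litellm_params:",
            f"      model: {target}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_litellm_config(MODELS), end="")
```

Save the output as config.yaml and hand it to the proxy with `litellm --config config.yaml`. Whatever aliases you choose here are the names ChatDev will ask the proxy for later, so keep them handy.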

add some AWS credentials

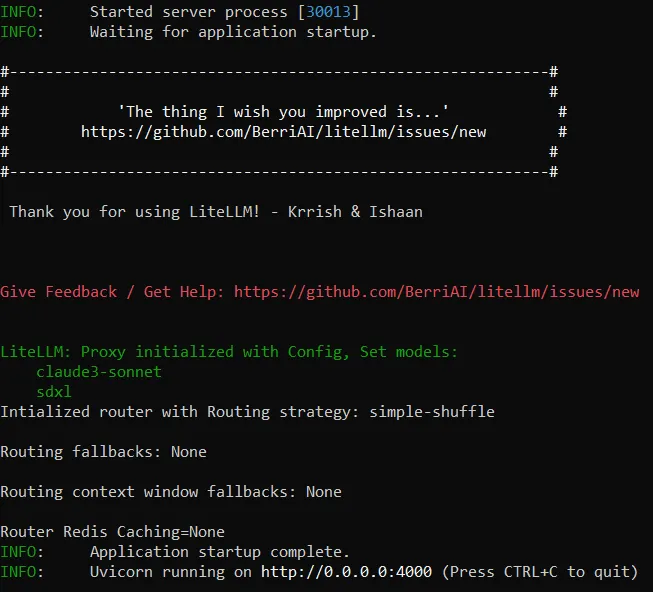
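boto3 (and therefore LiteLLM) can pick up credentials from several places, but if you go the environment-variable route, a quick pre-flight check can save you a confusing error later. This minimal sketch only inspects environment variables: AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are the standard boto3 names, and AWS_REGION_NAME is the Region variable used in LiteLLM's Bedrock docs. Profiles, SSO or instance roles won't show up here, so a non-empty result is a hint, not a verdict. `missing_aws_vars` is a hypothetical helper.

```python
import os
from typing import Mapping, Optional

# Assumption: credentials are exported as environment variables.
# Temporary credentials would also need AWS_SESSION_TOKEN.
REQUIRED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION_NAME")


def missing_aws_vars(env: Optional[Mapping[str, str]] = None) -> list[str]:
    """Return the expected AWS variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED if not env.get(name)]


if __name__ == "__main__":
    missing = missing_aws_vars()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("AWS variables look set")
```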

and fire up the LiteLLM proxy

At this point, you should be able to open the LiteLLM API server in your browser (it defaults to port 4000).
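If you'd rather check from a script than a browser, the sketch below waits until something is listening on the proxy port and then prepares an OpenAI-style chat request against it, which is exactly the kind of call ChatDev will make once OPENAI_BASE_URL points at the proxy. It assumes the proxy runs on localhost:4000 with no master key configured, so any bearer token (here the placeholder sk-anything) is accepted; `claude-3-sonnet` is a hypothetical alias that must match a `model_name` in your proxy config.

```python
import json
import socket
import time
import urllib.request


def wait_for_port(host: str = "localhost", port: int = 4000, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires.

    Note: this only proves that *something* is listening, not that it is LiteLLM.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)


def chat_request(
    prompt: str,
    model: str = "claude-3-sonnet",  # hypothetical alias from the proxy config
    base_url: str = "http://localhost:4000",
    api_key: str = "sk-anything",  # placeholder; assumes no proxy master key
) -> urllib.request.Request:
    """Build an OpenAI-style /chat/completions request for the proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

With the proxy up, `urllib.request.urlopen(chat_request("Say hi"))` should return an OpenAI-style JSON body with the model's reply under `choices[0].message.content`; if it fails here, it will fail inside ChatDev too.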

Finally, let's open a new window or tab

🔔 Don't forget to open the ChatDev folder and activate the Conda environment.

set up the OpenAI+ChatDev environment variables

and kick things off
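For repeat runs, you may prefer to script the last two steps. The sketch below copies your current environment, sets the four variables the PR relies on, and builds the `run.py` call with the `--task` and `--name` flags from ChatDev's README. The aliases, placeholder key and helper names are illustrative assumptions, and it expects to be launched from the ChatDev folder with the Conda environment active.

```python
import os
import subprocess
import sys
from typing import Mapping, Optional


def chatdev_env(
    text_model: str = "claude-3-sonnet",  # hypothetical proxy alias
    image_model: str = "sdxl",  # hypothetical proxy alias
    base_url: str = "http://localhost:4000",  # assumed LiteLLM default address
    api_key: str = "sk-anything",  # placeholder; assumes no proxy master key
    base: Optional[Mapping[str, str]] = None,
) -> dict[str, str]:
    """Return a copy of the environment with the OpenAI+ChatDev variables set."""
    env = dict(os.environ if base is None else base)
    env.update(
        OPENAI_BASE_URL=base_url,
        OPENAI_API_KEY=api_key,
        CHATDEV_CUSTOM_MODEL=text_model,
        CHATDEV_CUSTOM_IMAGE_MODEL=image_model,
    )
    return env


def chatdev_command(task: str, name: str) -> list[str]:
    """Build the run.py invocation using the interpreter running this script."""
    return [sys.executable, "run.py", "--task", task, "--name", name]


def launch(task: str, name: str) -> int:
    """Run ChatDev in the current folder and return its exit code."""
    result = subprocess.run(chatdev_command(task, name), env=chatdev_env())
    return result.returncode
```

For example, `launch("Develop a basic Tic-Tac-Toe game", "TicTacToe")` would kick off the classic demo. Passing the environment to `subprocess.run` keeps your shell untouched, so you can switch models between runs without re-exporting anything.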

ChatDev usually takes a few minutes ⏱️ to create the app and everything in it. The actual runtime will vary depending on the task complexity, model latency and several other factors.
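Once the run wraps up, the deliverables end up on disk. Assuming ChatDev's default output folder, WareHouse/, holds one sub-folder per project whose name starts with the `--name` you passed, this minimal sketch finds the newest matching project and lists its files. Both helper names are hypothetical, and the folder layout is an assumption worth checking against your checkout.

```python
from pathlib import Path
from typing import Optional


def latest_project(name: str = "", warehouse: str = "WareHouse") -> Optional[Path]:
    """Return the most recently modified project folder whose name starts with `name`."""
    root = Path(warehouse)
    if not root.is_dir():
        return None
    candidates = [p for p in root.iterdir() if p.is_dir() and p.name.startswith(name)]
    # default=None avoids a ValueError when nothing matches
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


def list_files(project: Path) -> list[str]:
    """List every file under the project folder, relative to it, sorted."""
    return sorted(str(p.relative_to(project)) for p in project.rglob("*") if p.is_file())


if __name__ == "__main__":
    project = latest_project("TicTacToe")
    if project is None:
        print("No matching project found")
    else:
        print(project)
        for f in list_files(project):
            print("  ", f)
```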

As an optional step, you can open (yet another) window to start the Visualizer app

and track all communications within the team

✨ Take it for a spin, let me know what you think and feel free to share your creations in the comments section below ⬇️

Thanks for reading all the way to the end - see you next time! 🖖

PS: I'm sure you can create something better than a boring game of tic-tac-toe ❌⭕ so let's pump those creative juices!

🎨 "You can't use up creativity. The more you use, the more you have" ―Maya Angelou

- (Qian et al., 2023a) Communicative Agents for Software Development

- (Qian et al., 2023b) Experiential Co-Learning of Software Developing Agents

- (Qian et al., 2024) Iterative Experience Refinement of Software-Developing Agents

- OpenBMB/ChatDev: the original implementation

- JGalego/ChatDev: the hack-y PR

- 10cl/ChatDev (IDE): alternative implementation with a richer experience

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.