Intelligent Document Processing with Anthropic Claude 3

Prompt excellence for maximum accuracy

Natallia Bahlai

Amazon Employee

Published Jun 3, 2024

Last Modified Jun 12, 2024

The objective of this article is to provide recommendations on how to effectively utilize Anthropic's Claude 3 Large Language Models (LLMs) for Intelligent Document Processing use cases and maximize the accuracy of Claude's responses.

Let's consider a scenario where we need to automate the processing of receipts and extract all relevant details to perform further tasks.

For this purpose, we can leverage the multi-modality feature of Anthropic's Claude 3 LLM, which allows us to supply images of the receipts and extract specific fields based on the accompanying prompt.

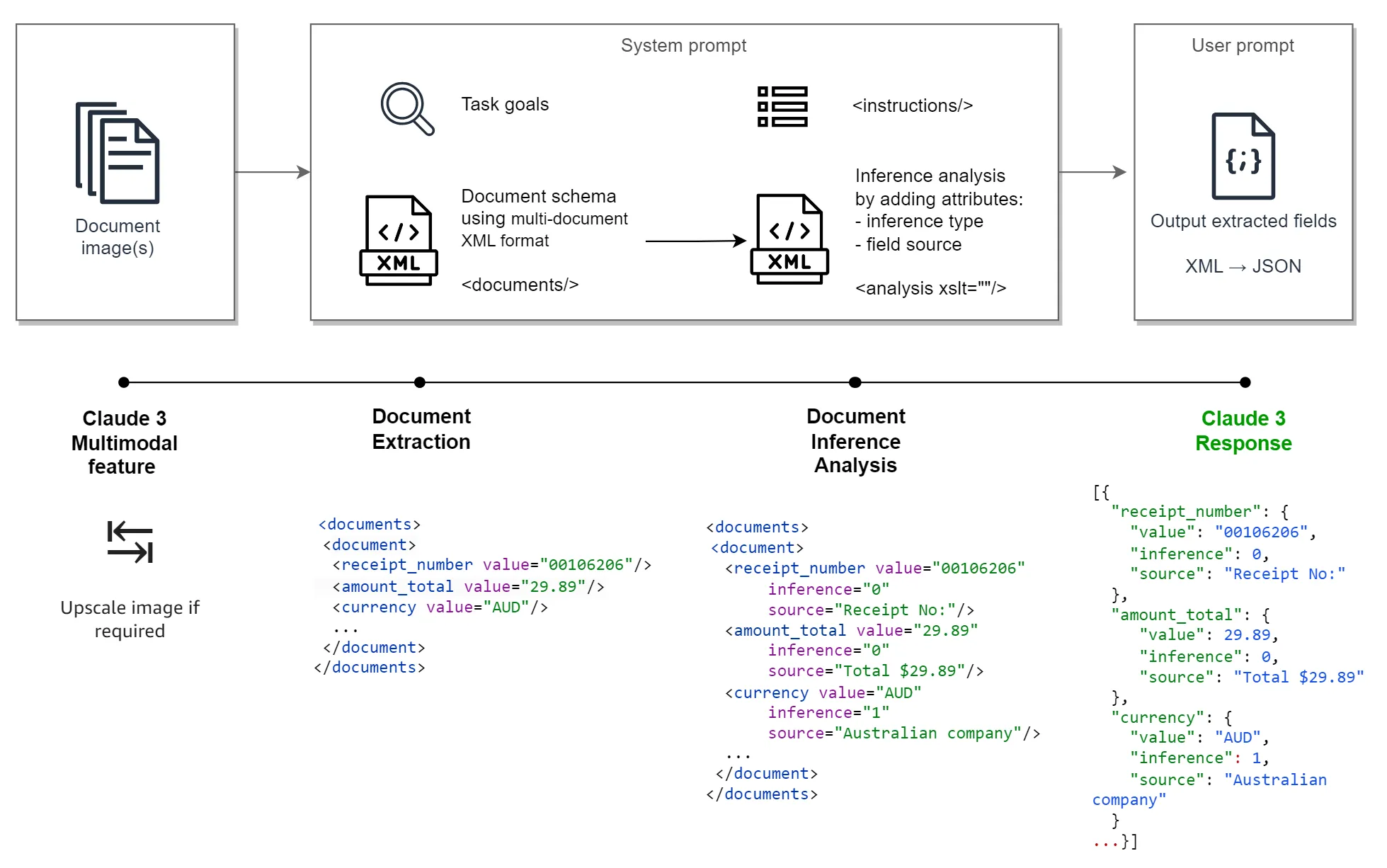

To maximize efficiency and accuracy of Claude's output, we should structure the LLM inputs as follows leveraging Anthropic prompt engineering techniques:

- One or more images of the receipts with appropriate resolution.

- A system prompt containing the following:

- A clear statement of the task goal

- A well-rounded document definition or schema within

<document/>wrapped in<documents/>to transcribe multiple images - Detailed instructions

<instructions/> - Inference analysis

<analysis/>

- A user prompt with instruction to convert the extracted <documents/> XML to JSON format

This structured approach will enable Claude 3 to effectively process the receipt images and provide accurate field extraction, facilitating further automation and data processing tasks.

Now let’s focus on the recommendations for organizing the LLM inputs to maximize efficiency and accuracy.

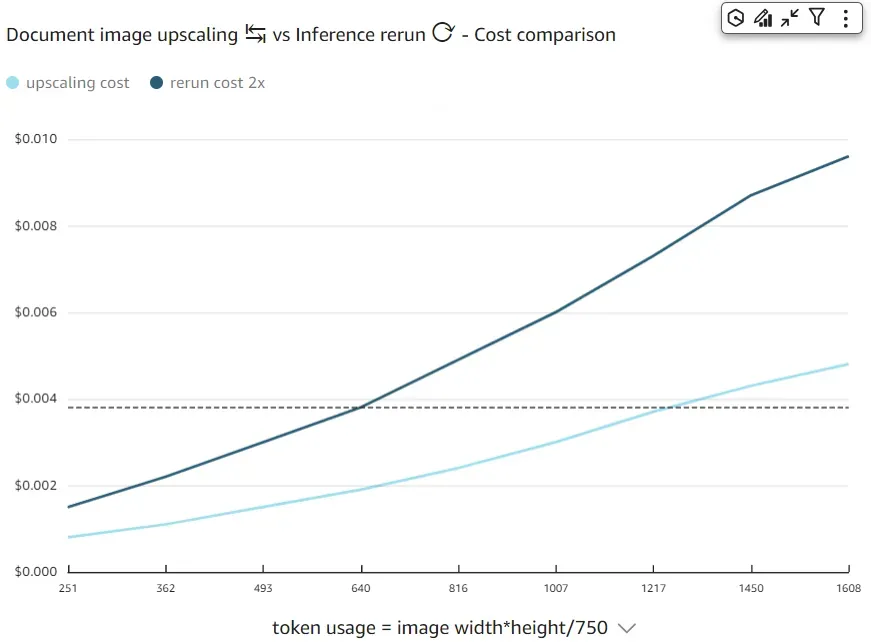

Low-resolution images may lead to incorrect or partially extracted values. To noticeably increase accuracy in such cases, a workaround is to upscale the image through image width and height resizing before passing the image to the Claude LLM.

It's important to note that if your image's long edge exceeds 1568 pixels, or your image is more than ~1600 tokens, it will first be scaled down while preserving the aspect ratio, until it meets the size limits. Therefore, when upscaling, avoid exceeding these limits to prevent an automatic (and potentially lengthy) downscaling process by Claude.

Claude guidelines for image size are outlined here: https://docs.anthropic.com/en/docs/vision#image-size

Keep in mind that upscaling an image (increasing width*height and hence input token usage) is more cost-effective than performing a second invocation in an attempt to extract document field values.

Now let’s review prompting techniques.

To achieve efficient document recognition, it is crucial to structure the prompts in a way that provides clear guidance and context to the Claude LLM.

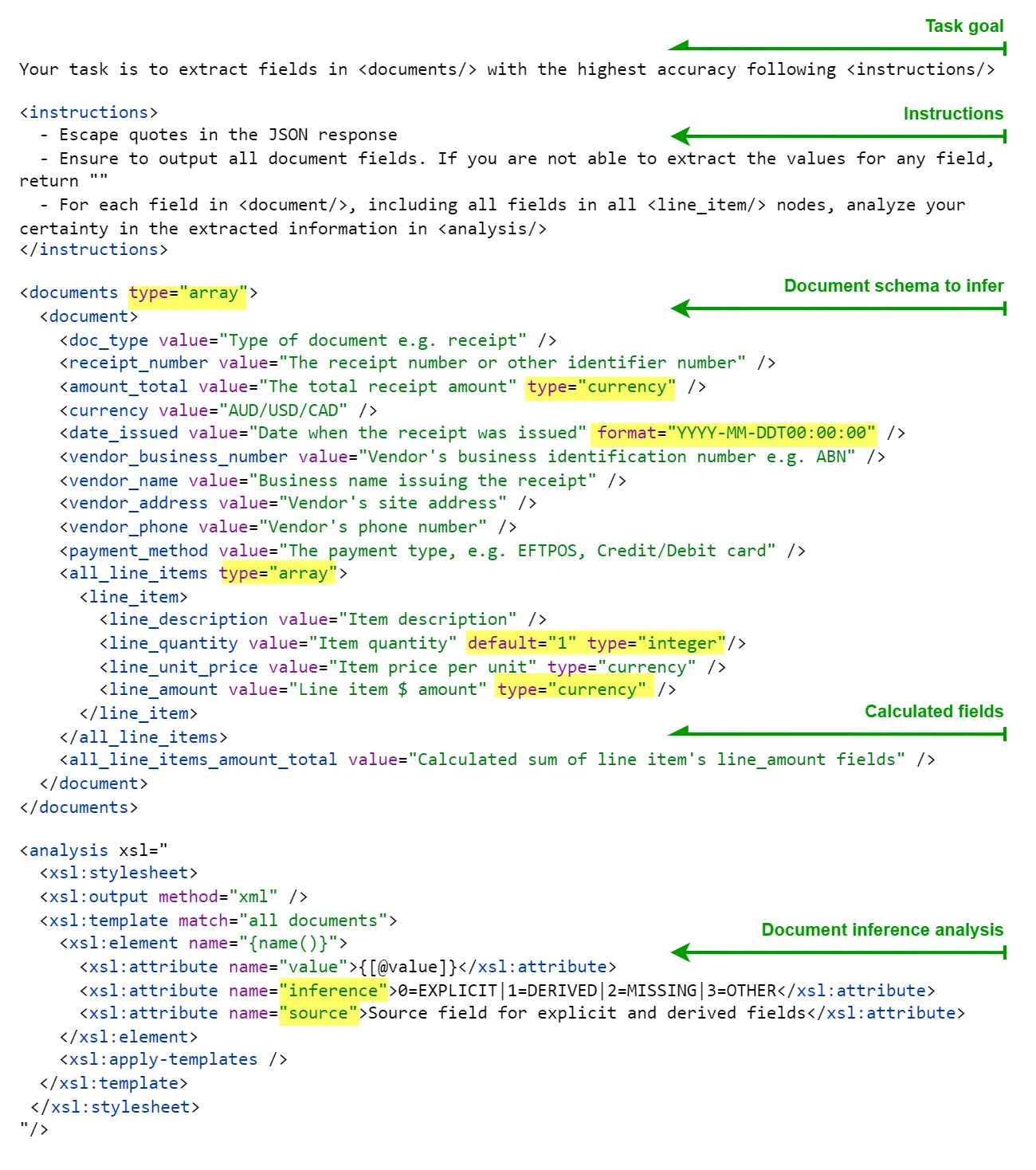

The following figure demonstrates the structure of the system prompt, followed by a detailed explanation of prompting techniques for maximum efficiency in the context of IDP use cases.

- Provide a clear goal for Claude LLM following guidance: be clear and direct.

- Using a system prompt is an efficient practice to help Claude understand the task objectives, comprehend document definition and schema, and follow instructions to maximize robust field extraction.

- When incorporating lengthy documents in the system prompt, you can use the multi-document XML format to help Claude better understand and utilize the provided information. This XML format allows you to structure and organize the relevant documents, making it easier for Claude to process the information.

- To ensure Claude understands your task, provide as much context and detail as possible by supplying instructions in the

<instructions/>section and defining the document data schema in the<documents/>section. Providing crisp instructions and a well-defined schema will help Claude generate more informed and accurate responses. - XML tags are a powerful tool for structuring prompts and guiding Claude's responses. Defining the document schema using XML tags can help generate significantly more accurate outputs. This technique is especially useful when working with complex documents containing a large volume of fields to recognize.

- Enforce arrays/lists using a construct like

<documents type="array">. - Enforce particular data types using constructs like

<line_quantity ... type="integer"/>. - Enforce data formats by specifying the expected format within the XML tags:

<due_date ... format="YYYY-MM-DD"/>

- To achieve more deterministic responses across repeated invocations and minimize Claude's hallucinations, you can achieve this by lowering the

temperatureof Claude's responses. Temperature is a measurement of answer creativity between 0 and 1, with 1 being more unpredictable and less standardized, and 0 being the most consistent. - Additionally, you can request Claude to incorporate calculated fields that can facilitate reconciliation processes. For instance, a calculated total of line item dollar amounts can be compared against the inferred total amount, allowing you to determine whether the receipt has been accurately recognized or requires human review.

- Examining the quality of fields recognized by Claude, including those potentially missed or implicitly derived from other document details, can provide valuable insights to guide subsequent decision-making. Allowing Claude to reason through the data and analyze its certainty in the extracted information before responding can lead to more accurate outputs. For the IDP use case, this can be accomplished by applying an XSLT transformation to include additional attributes like the inference type and the field source. Such analysis leveraging XSLT constructs provides an efficient technique to dynamically combine extracted document fields (including nested line items) with inference insights (

inferenceandsourceattributes), eliminating the need for lengthy prompts and instructions or complex document schema. - To minimize output token consumption and associated costs, the desired response should be provided in JSON format. User prompts can include directions to transform any inferred data in

<documents/>into JSON structure e.g. :Output as JSON: { "output": ["</analysis> as JSON"] }

By implementing these best practices, you can unlock the full potential of Anthropic's Claude 3 LLMs, streamlining your document processing workflows and ensuring accurate and reliable results.

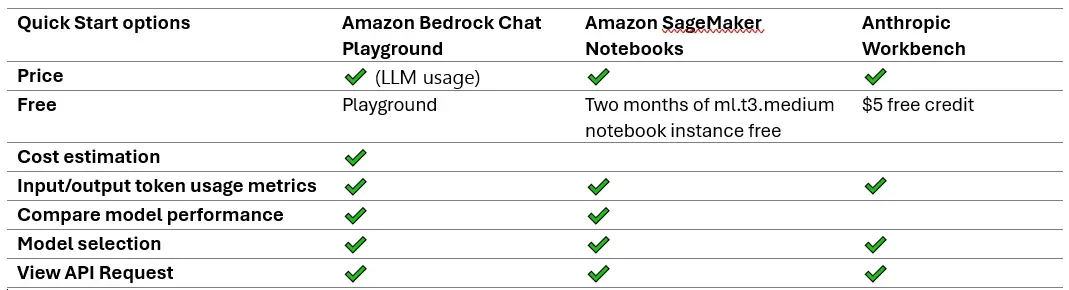

Amazon Bedrock's chat playground provides a convenient and intuitive way to begin exploring the capabilities of Anthropic's Claude models. If you have an AWS account, navigate to the Amazon Bedrock → Playgrounds → Chat and choose the Anthropic Claude 3 Sonnet model. Then, supply a system prompt and a user prompt along with the document image you wish to analyze. Once you click "Run," Claude will process the document and provide a response containing the extracted information. This code-free experience can help validate the potential for optimizing business processes and refine the prompts you use for intelligent document processing tasks.

The Amazon Bedrock chat playground offers a valuable advantage by providing transparency into the input and output token counts, as well as cost estimates based on token usage. Additionally, it allows you to compare up to three models simultaneously, such as Claude Sonnet vs Claude Haiku. This feature enables you to gain insights into the comparative costs and performance of the various Claude 3 models under consideration, facilitating an informed decision-making process.

Another viable option is to leverage the power of Amazon SageMaker Notebook, which offers an interactive way for dealing with machine learning models including LLMs. By creating a Notebook instance with the default settings and crafting the Jupyter notebook (or uploading this notebook), it empowers you to accelerate the development of programmatic solutions tailored to your specific requirements. With Amazon SageMaker Notebook, you can seamlessly transition from experimentation and prototyping to production-ready functions that you can port into your business workflow or data pipeline.

Furthermore, you have the option to utilize the Anthropic Workbench, a powerful tool that enables you to run document inference by sending prompts with various configurations and evaluating different large language models (LLMs).

In summary, to maximize the efficiency and accuracy of intelligent document processing tasks using Anthropic's Claude 3 LLMs, it is essential to carefully structure the LLM inputs. By following the recommendations outlined in this document, such as providing high-quality images, creating clear and detailed system prompts, and leveraging the power of XML tags to define document schemas, you can significantly enhance the performance of the LLM.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.