How I Built a Video Chat App While Writing Almost Zero Code

Building a GenAI-based app that summarizes videos and makes them conversational, with almost none of the code written by me.

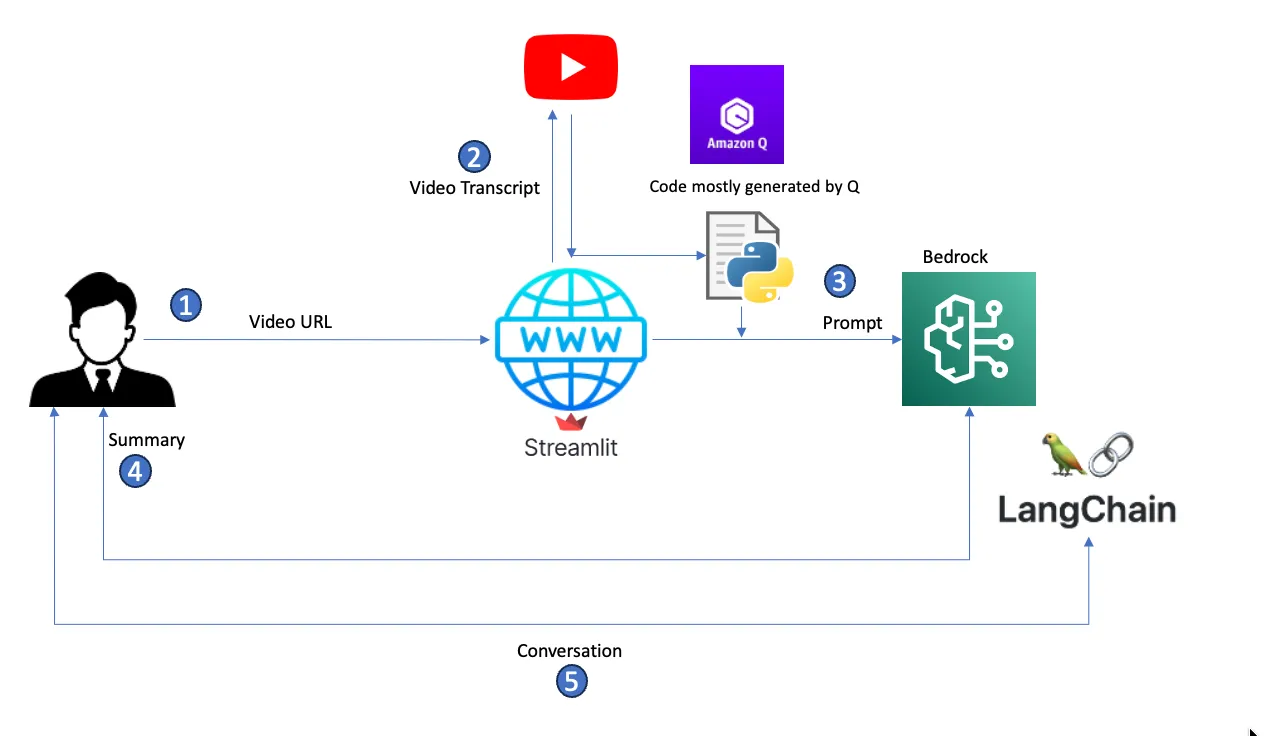

- A user enters a YouTube video URL to summarize.

- The Streamlit app takes the URL, parses it to get the video ID, and calls the YouTube API to get the video transcript.

- The app builds a prompt from the transcript and passes it to Bedrock for summarization using a predefined model.

- Bedrock summarizes the transcript based on the generated prompt and returns the summary to the user.

- If users have follow-up questions, the app builds a conversation memory using LangChain and answers those questions based on content from the original transcript.
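The flow above can be sketched in plain Python. This is a minimal sketch, not the author's code: the `VideoChat` class, the URL regex, and the two injected callables are hypothetical, and a plain list of messages stands in for LangChain's conversation memory so the routing logic runs without AWS or YouTube access.

```python
import re
from typing import Callable, Dict, List

# Hypothetical heuristic: input that looks like a YouTube link starts a new
# summary; anything else is treated as a follow-up question.
YOUTUBE_URL = re.compile(r"^https?://(www\.|m\.)?(youtube\.com|youtu\.be)/", re.IGNORECASE)

Message = Dict[str, str]


class VideoChat:
    """Minimal stand-in for the summarize-then-chat flow (not the author's code)."""

    def __init__(self, fetch_transcript: Callable[[str], str], llm: Callable[[List[Message]], str]) -> None:
        self.fetch_transcript = fetch_transcript  # URL -> transcript text
        self.llm = llm  # chat history -> model reply
        self.history: List[Message] = []  # plays the role of conversation memory

    def handle(self, user_input: str) -> str:
        text = user_input.strip()
        if YOUTUBE_URL.match(text):
            # New video: reset memory and seed it with the summarization request.
            transcript = self.fetch_transcript(text)
            self.history = [{"role": "user", "content": f"Summarize this transcript:\n{transcript}"}]
        elif not self.history:
            return "Please enter a YouTube URL first."
        else:
            # Follow-up: append the question so the model still sees the transcript.
            self.history.append({"role": "user", "content": text})
        reply = self.llm(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


# Usage with fake callables, so no AWS or YouTube access is needed.
chat = VideoChat(fetch_transcript=lambda url: "Cats sleep a lot.", llm=lambda msgs: f"reply #{len(msgs)}")
print(chat.handle("https://www.youtube.com/watch?v=abc123"))  # reply #1
print(chat.handle("Why do cats sleep?"))  # reply #3
```

Keeping the transcript in the first message of the history is what keeps follow-up answers grounded in the original video, and submitting a new URL simply resets that history.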

- Set up access to Amazon Bedrock in the AWS region us-east-1 and enable model access for Anthropic Claude 3. I’m using this LLM because it has a 200K-token context window, which lets me fit transcripts of videos up to 3 hours long into the prompt without the complexity of adding a knowledge base. Check here for details.

- Set up the Amazon Q Developer extension in your favorite code IDE. Mine is VS Code. I used this video.

- Familiarity with the Streamlit framework, which is used to deploy the Python application. Publishing it literally took a minute.
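A back-of-the-envelope check shows why a 200K-token window comfortably holds long transcripts. The figures are assumptions, not measurements: roughly 150 spoken words per minute, roughly 1.3 tokens per English word, and a hypothetical 4,000-token reserve for instructions and the model's reply. Real counts depend on the model's tokenizer and the speaker.

```python
CONTEXT_WINDOW_TOKENS = 200_000  # Claude 3 context window size


def estimate_transcript_tokens(minutes: float, words_per_minute: float = 150, tokens_per_word: float = 1.3) -> int:
    """Rough token estimate for a spoken transcript (a heuristic, not a tokenizer)."""
    return round(minutes * words_per_minute * tokens_per_word)


def fits_in_context(minutes: float, reserve_tokens: int = 4_000) -> bool:
    """True if the estimated transcript plus a reserve for instructions/output fits."""
    return estimate_transcript_tokens(minutes) + reserve_tokens <= CONTEXT_WINDOW_TOKENS


print(estimate_transcript_tokens(180))  # 35100 (about 3 hours of speech)
print(fits_in_context(180))  # True
```

Under these assumptions, a 3-hour transcript takes less than a fifth of the window, leaving room for the instructions, the summary, and a number of follow-up turns.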

- bedrock.py: The module that works with the LLM. It creates the Bedrock runtime client, generates summaries, and manages chat history using LangChain.

- utilities.py: A module that retrieves video transcripts and builds prompts from them.

- app.py: The main app that interacts with users. It takes the video URL, returns the summary, supports follow-up chat, and manages user sessions.
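Here is a minimal sketch of the Bedrock call that bedrock.py wraps, assuming boto3's `bedrock-runtime` client and the Anthropic Messages request format that Claude 3 uses on Bedrock. It calls boto3 directly instead of going through LangChain as the article does, and the model ID and `max_tokens` default are assumptions, since the post does not say which Claude 3 variant it uses.

```python
import json
from typing import Dict, List

# Assumption: one Claude 3 model ID; swap in whichever variant you enabled.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"
REGION = "us-east-1"


def build_request_body(messages: List[Dict[str, str]], max_tokens: int = 1024) -> str:
    """Serialize alternating user/assistant messages into the Anthropic Messages format."""
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "messages": messages,
        }
    )


def invoke_claude(messages: List[Dict[str, str]], max_tokens: int = 1024) -> str:
    """Send the conversation to Bedrock and return the model's text reply."""
    import boto3  # imported here so build_request_body works without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=REGION)
    response = client.invoke_model(
        modelId=MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=build_request_body(messages, max_tokens),
    )
    payload = json.loads(response["body"].read())
    # Claude returns a list of content blocks; keep only the text ones.
    return "".join(block["text"] for block in payload["content"] if block["type"] == "text")
```

Passing the whole history on every call is the simplest form of conversation memory; a library such as LangChain mainly adds conveniences like trimming or summarizing older turns.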

- Get the video ID from a YouTube URL

- Get the transcript of a video based on its ID

- Generate a prompt from the transcript
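The three utilities.py steps might look like this. It is a sketch under stated assumptions: the function names and prompt wording are hypothetical, the URL handling covers only common YouTube URL shapes (`watch`, `youtu.be`, `embed`, `shorts`, `live`), and the transcript fetch itself is left out. The code assumes fetched segments arrive as a list of dicts with a `"text"` key.

```python
from typing import Dict, List, Optional
from urllib.parse import parse_qs, urlparse


def get_video_id(url: str) -> Optional[str]:
    """Extract the video ID from common YouTube URL shapes; return None if not found."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links carry the ID as the first path segment.
        return parsed.path.lstrip("/").split("/")[0] or None
    if host == "youtube.com" or host.endswith(".youtube.com"):
        if parsed.path == "/watch":
            # Standard links carry the ID in the "v" query parameter.
            return parse_qs(parsed.query).get("v", [None])[0]
        parts = parsed.path.strip("/").split("/")
        if len(parts) >= 2 and parts[0] in ("embed", "shorts", "live"):
            return parts[1]
    return None


def transcript_to_text(segments: List[Dict[str, str]]) -> str:
    """Join transcript segments (assumed dicts with a "text" key) into one string."""
    return " ".join(seg["text"].strip() for seg in segments if seg["text"].strip())


def build_summary_prompt(transcript: str) -> str:
    """Wrap the transcript in summarization instructions (hypothetical wording)."""
    return (
        "Summarize the following video transcript in a few concise paragraphs.\n\n"
        f"<transcript>\n{transcript}\n</transcript>"
    )


print(get_video_id("https://www.youtube.com/watch?v=dQw4w9WgXcQ&t=42"))  # dQw4w9WgXcQ
```

Wrapping the transcript in explicit tags keeps the instructions and the video content clearly separated in the prompt.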

handle_input(), which detects the type of user input (a URL to summarize or a follow-up question), returns the matching output (a summary or a chat answer), and resets user state accordingly.

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.