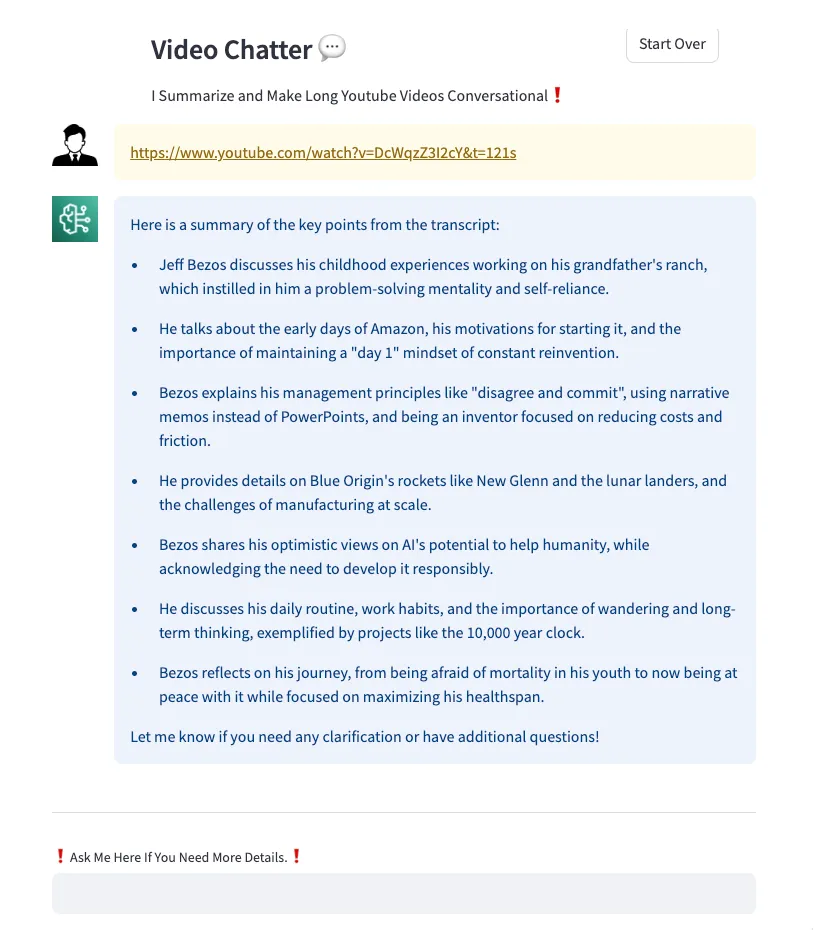

How I Built a Video Chat App While Writing Almost Zero Code

Building a GenAI-based app that summarizes videos and makes them conversational, while writing almost no code myself.

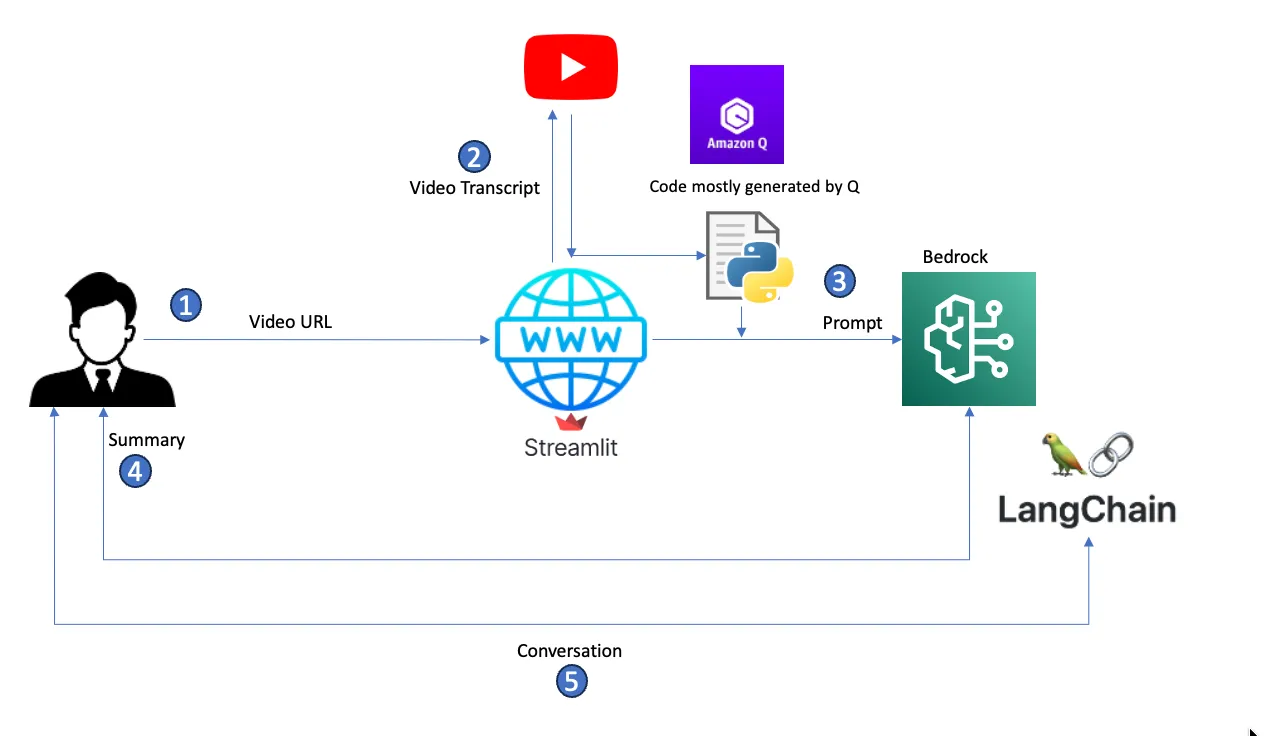

- A user enters a YouTube video URL to summarize.

- The Streamlit app takes the URL, parses it to get the video ID, and uses the youtube_transcript_api library to fetch the video transcript.

- The app builds a prompt from the transcript and passes it to Bedrock for summarization using a predefined model.

- Bedrock summarizes the transcript based on the generated prompt and returns the summary to the user.

- If users have follow-up questions, the app keeps a conversation memory using LangChain and answers those questions based on content from the original transcript.

Prerequisites:

- Set up access to Amazon Bedrock in the AWS Region us-east-1 and enable model access for Anthropic Claude 3. I'm using this LLM because it has a 200K-token context window, which lets me fit the transcript of a video up to 3 hours long into the prompt without the complexity of adding a knowledge base. Check here for details.

- Set up the Amazon Q Developer extension in your favorite code IDE. Mine is VS Code; I followed this video.

- Familiarity with the Streamlit framework to deploy your Python application. Publishing the app literally took a minute.
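To sanity-check the 3-hour figure, here is a back-of-the-envelope estimate. The speaking rate (about 150 words per minute) and the ratio of about 1.3 tokens per word are rule-of-thumb assumptions, not measurements from Claude's tokenizer, and the function names are my own.

```python
# Back-of-the-envelope check: does a video's transcript fit in Claude 3's
# 200K-token context window? The two rates below are rough assumptions.
WORDS_PER_MINUTE = 150     # assumed average speaking rate
TOKENS_PER_WORD = 1.3      # assumed rule of thumb for English text
CONTEXT_WINDOW = 200_000   # Claude 3 context window, in tokens
REPLY_BUDGET = 2_048       # room for the reply (max_tokens in bedrock.py)


def estimate_transcript_tokens(duration_minutes: float) -> int:
    """Estimate how many tokens a transcript of the given length contains."""
    words = duration_minutes * WORDS_PER_MINUTE
    return round(words * TOKENS_PER_WORD)


def fits_in_context(duration_minutes: float) -> bool:
    """True if the estimated transcript plus the reply budget fits the window."""
    return estimate_transcript_tokens(duration_minutes) + REPLY_BUDGET <= CONTEXT_WINDOW


if __name__ == "__main__":
    for minutes in (10, 60, 180):
        print(f"{minutes} min -> ~{estimate_transcript_tokens(minutes)} tokens, "
              f"fits: {fits_in_context(minutes)}")
```

Under these assumptions a 3-hour video comes to roughly 35K tokens, so the window leaves headroom for the instructions and follow-up turns. Real counts depend on the tokenizer and on how fast people talk.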

The code is organized into three modules:

- bedrock.py: The module that handles the LLM. It creates the Bedrock runtime client, runs the summarization, and manages chat history using LangChain.

- utilities.py: A module that retrieves video transcripts and builds a prompt from them.

- app.py: The main app that interacts with users. It takes the video URL, returns the summary, supports follow-up chat, and manages user sessions.
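bedrock.py talks to Claude through LangChain's BedrockChat wrapper. For comparison, here is a minimal sketch of the request body Claude 3 expects if you call Bedrock directly with boto3's invoke_model instead. It uses Anthropic's Messages format, with anthropic_version set to "bedrock-2023-05-31" and max_tokens rather than Claude 2's max_tokens_to_sample. The helper build_claude3_body and its system text are hypothetical. The block only builds the JSON, so it runs without AWS credentials, and the actual call is shown in comments.

```python
import json


def build_claude3_body(transcript_prompt: str, max_tokens: int = 2048) -> str:
    """Build a hypothetical invoke_model request body for Claude 3 on Bedrock.

    Sampling values mirror the model_kwargs used in bedrock.py.
    """
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,  # Claude 2 used "max_tokens_to_sample" instead
        "temperature": 0.0,
        "top_k": 250,
        "top_p": 1,
        "system": "Provide a comprehensive summary of the video transcript, then list the key points.",
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": transcript_prompt}]},
        ],
    }
    return json.dumps(body)


# Sending it (requires AWS credentials and Claude 3 model access):
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-east-1")
# response = client.invoke_model(
#     modelId="anthropic.claude-3-sonnet-20240229-v1:0",
#     body=build_claude3_body("Summarize the following video:\n ..."),
# )
# summary = json.loads(response["body"].read())["content"][0]["text"]
```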

The prompt I gave Amazon Q Developer for bedrock.py:

> write a python function that sets up a conversational AI system using the Amazon Bedrock service and the Claude language model (claude3). The AI can engage in a back-and-forth conversation with a user, maintaining context and providing relevant responses based on the prompt template and conversation history and then return the conversation with the user.


```python
import boto3
import streamlit as st
from botocore.config import Config
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory
from langchain.prompts import PromptTemplate
from langchain_community.chat_models import BedrockChat

# Bedrock client settings: us-east-1, with up to 10 attempts in "standard" retry mode.
retry_config = Config(
    region_name="us-east-1",
    retries={
        "max_attempts": 10,
        "mode": "standard",
    },
)


def bedrock_chain():
    # AWS credentials are read from Streamlit secrets rather than hard-coded.
    ACCESS_KEY = st.secrets["ACCESS_KEY"]
    SECRET_KEY = st.secrets["SECRET_KEY"]

    session = boto3.Session(
        aws_access_key_id=ACCESS_KEY,
        aws_secret_access_key=SECRET_KEY,
    )
    bedrock_runtime = session.client("bedrock-runtime", config=retry_config)

    model_id = "anthropic.claude-3-sonnet-20240229-v1:0"
    model_kwargs = {
        "max_tokens": 2048,  # Claude 3 uses "max_tokens"; Claude 2 requires "max_tokens_to_sample".
        "temperature": 0.0,
        "top_k": 250,
        "top_p": 1,
        "stop_sequences": ["\n\nHuman"],
    }

    model = BedrockChat(
        client=bedrock_runtime,
        model_id=model_id,
        model_kwargs=model_kwargs,
    )

    prompt_template = """System: The following is a video transcript. I want you to provide a comprehensive summary of this text and then list the key points. The entire summary should be around 400 words.

Current conversation:
{history}
User: {input}
Bot:"""

    prompt = PromptTemplate(
        input_variables=["history", "input"], template=prompt_template
    )

    # Buffer memory keeps the whole conversation (including the transcript)
    # and labels turns with the same "User"/"Bot" prefixes as the template.
    memory = ConversationBufferMemory(human_prefix="User", ai_prefix="Bot")

    conversation = ConversationChain(
        prompt=prompt,
        llm=model,
        verbose=True,  # log the fully formatted prompt on each call
        memory=memory,
    )

    return conversation
```
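ConversationBufferMemory keeps every turn and renders it into the {history} slot of the template, using the "User" and "Bot" prefixes passed to it. The standard-library sketch below imitates that behavior so you can see roughly what the model receives on a follow-up turn. It is a simplified stand-in, not LangChain's implementation, and SimpleBufferMemory and build_prompt are hypothetical names.

```python
PROMPT_TEMPLATE = """System: The following is a video transcript. I want you to provide a comprehensive summary of this text and then list the key points. The entire summary should be around 400 words.

Current conversation:
{history}
User: {input}
Bot:"""


class SimpleBufferMemory:
    """Hypothetical stand-in for ConversationBufferMemory: stores every turn verbatim."""

    def __init__(self, human_prefix: str = "User", ai_prefix: str = "Bot"):
        self.human_prefix = human_prefix
        self.ai_prefix = ai_prefix
        self.turns = []  # list of (user_text, bot_text) pairs

    def save(self, user_text: str, bot_text: str) -> None:
        self.turns.append((user_text, bot_text))

    def history(self) -> str:
        # One "User: ..." line and one "Bot: ..." line per stored exchange.
        lines = []
        for user_text, bot_text in self.turns:
            lines.append(f"{self.human_prefix}: {user_text}")
            lines.append(f"{self.ai_prefix}: {bot_text}")
        return "\n".join(lines)


def build_prompt(memory: SimpleBufferMemory, user_input: str) -> str:
    """Fill the template with the buffered history and the new user input."""
    return PROMPT_TEMPLATE.format(history=memory.history(), input=user_input)


if __name__ == "__main__":
    memory = SimpleBufferMemory()
    memory.save("Summarize the following video:\n ...transcript...", "The video explains ...")
    print(build_prompt(memory, "What tools did the speaker mention?"))
```

Because the buffer keeps every turn, the full transcript from the first turn is resent with each follow-up. That is what lets the bot answer from the original content, and it is also why the large context window matters.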

utilities.py has three functions, one per step:

- Get the video ID from a YouTube URL

- Get the transcript of a video based on its ID

- Generate a prompt from the transcript
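URL parsing is the most format-sensitive of these steps. As an alternative to splitting the URL string on "/", here is a sketch that uses the standard library's urllib.parse. The function name extract_video_id and the set of supported URL shapes (watch?v=, youtu.be, /shorts/, /embed/) are my assumptions, and it expects a full URL including the https:// scheme.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def extract_video_id(youtube_url: str) -> Optional[str]:
    """Return the video ID from a YouTube URL, or None if none is found.

    Handles these (assumed) shapes:
      https://www.youtube.com/watch?v=ID&t=30s
      https://youtu.be/ID?si=...
      https://www.youtube.com/shorts/ID
      https://www.youtube.com/embed/ID
    """
    parsed = urlparse(youtube_url.strip())
    host = (parsed.hostname or "").lower()
    path_parts = [part for part in parsed.path.split("/") if part]

    if host == "youtu.be":
        # Short links carry the ID as the first path segment.
        return path_parts[0] if path_parts else None

    if host == "youtube.com" or host.endswith(".youtube.com"):
        if parsed.path.rstrip("/") == "/watch":
            # Standard links carry the ID in the "v" query parameter.
            return parse_qs(parsed.query).get("v", [None])[0]
        if len(path_parts) >= 2 and path_parts[0] in ("shorts", "embed"):
            return path_parts[1]

    return None
```

Returning None for anything that isn't a recognizable YouTube URL also gives the app a simple way to tell a new video from a follow-up question.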


```python
import logging

from youtube_transcript_api import YouTubeTranscriptApi

logger = logging.getLogger()
logger.setLevel("INFO")


def get_video_id_from_url(youtube_url):
    logger.info("Inside get_video_id_from_url ..")
    watch_param = "watch?v="
    # Take the last path segment (or the one before it if the URL ends with "/").
    video_id = youtube_url.split("/")[-1].strip()
    if video_id == "":
        video_id = youtube_url.split("/")[-2].strip()
    # For youtube.com/watch?v=<id> links, strip the "watch?v=" prefix.
    if watch_param in video_id:
        video_id = video_id[len(watch_param):]
    # Drop trailing query parameters such as "&t=30s" or "?si=...".
    video_id = video_id.split("&")[0].split("?")[0]
    logger.info("video_id: %s", video_id)
    return video_id


def get_transcript(video_id):
    logger.info("Inside get_transcript ..")
    # Returns a list of snippets, each a dict with "text", "start", and "duration".
    transcript = YouTubeTranscriptApi.get_transcript(video_id)
    logger.info("transcript: %s", transcript)
    return transcript


def generate_prompt_from_transcript(transcript):
    logger.info("Inside generate_prompt_from_transcript ..")
    prompt = "Summarize the following video:\n"
    # Concatenate every transcript snippet into one block of text.
    for trans in transcript:
        prompt += " " + trans.get("text", "")
    logger.info("prompt: %s", prompt)
    return prompt
```

The main function in app.py is handle_input(), which detects whether the user input is a URL to summarize or a follow-up question, returns the matching output (a summary or a chat answer), and resets the user's session state accordingly.
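The app.py code isn't reproduced here, so the following is only a hypothetical sketch of the routing that handle_input() is described as doing, written without Streamlit so it runs standalone. A plain dict stands in for st.session_state, and the summarize and ask callables stand in for the utilities.py pipeline and the conversation chain. The regex, the session keys, and the fallback message are all my assumptions.

```python
import re
from typing import Callable

# Loose match for youtube.com / youtu.be links (assumed URL shapes).
YOUTUBE_URL = re.compile(
    r"^(https?://)?(www\.|m\.)?(youtube\.com|youtu\.be)/\S+$", re.IGNORECASE
)


def handle_input(
    user_input: str,
    session: dict,
    summarize: Callable[[str], str],
    ask: Callable[[str], str],
) -> str:
    """Hypothetical routing: a URL starts a new summary, anything else is a follow-up."""
    text = user_input.strip()
    if YOUTUBE_URL.match(text):
        # New video: reset the per-video state, then summarize.
        session.clear()
        session["video_url"] = text
        answer = summarize(text)
    elif "video_url" in session:
        # Follow-up question about the video that was already summarized.
        answer = ask(text)
    else:
        return "Please enter a YouTube video URL first."
    # Keep the visible chat log in the session.
    session.setdefault("messages", []).append((text, answer))
    return answer
```

In the real app, resetting for a new video would presumably also mean creating a fresh bedrock_chain(), so the previous transcript doesn't linger in the conversation memory.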


Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.