Build Document Processing Pipelines with Project Lakechain

Learn how to create cloud-native, AI-powered document processing pipelines on AWS with Project Lakechain.

João Galego

Amazon Employee

Published Jun 25, 2024

Last Modified Jun 26, 2024

In this post, we're going to explore Project Lakechain: a cool, new project from AWS Labs for creating modern, AI-powered, document processing pipelines.

Based on the AWS Cloud Development Kit (CDK), it provides 60+ ready-to-use components for processing images 🖼️, text 📄, audio 🔊 and video 🎞️, as well as integration with Generative AI services like Amazon Bedrock and vector stores like Amazon OpenSearch or LanceDB.

The greatest thing about Project Lakechain (documentation is a close second!) is how easy it is to combine these components into complex processing pipelines that can scale out of the box to millions of documents... using only infrastructure as code (IaC).

But don't just take my word for it... let me show you how it actually works!

Before we get started, make sure these tools are installed and properly configured:

- AWS CLI ☁️

- Docker 🐋

- TypeScript 5.0+ (optional)

- AWS CDK v2 (optional)

For more information, please check the Prerequisites section of the Lakechain docs.

The only thing you need to do to set up Lakechain is to clone the repository and install the project dependencies.

At this point, you can either try one of the simple pipelines or, if you're feeling brave enough, one of the end-to-end use cases.

💡 The Quickstart section is an excellent place to start. It will guide you through the deployment of a face detection pipeline using Amazon Rekognition.

Adapted from the Content Moderation Immersion Day workshop. Check it out!

Learning about a new framework is all about understanding the problems it tries to solve.

Sticking close to this philosophy, let's try to build a pipeline from scratch to get some hands-on experience.

💡 In this post, I will not cover the basics of the AWS CDK. If you need a refresher on how to work with the AWS CDK in TypeScript or some other topic, just head over to the AWS CDK Developer Guide.

We'll go for something simple: the Quickstart pipeline is about image moderation 🧑🦰🛑, so let's try to create a similar one for text 📄🛑.

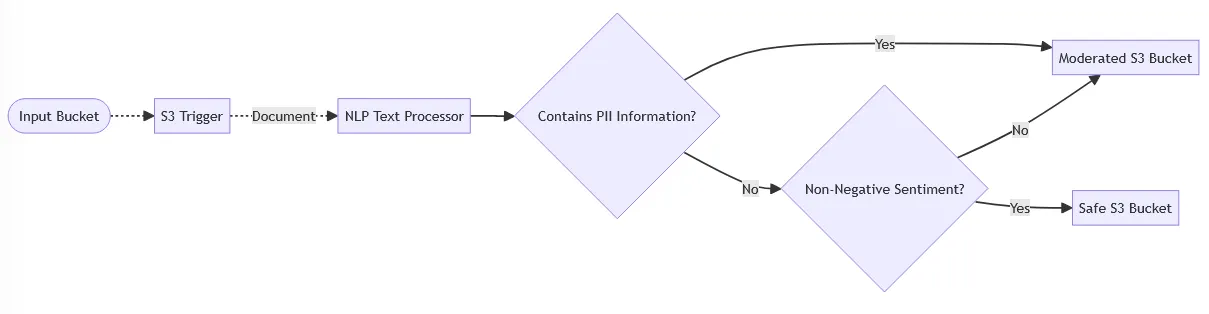

In good Mermaid style, our pipeline will look something like this:
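As a rough sketch, the pipeline topology can be written down as a list of edges and rendered into a Mermaid flowchart definition. The four node names come from this post; the `Edge` type, the `toMermaid` helper and the edge labels are hypothetical and only illustrate the shape of the pipeline, not how Lakechain models it internally.

```typescript
// One directed connection between two pipeline stages.
type Edge = { from: string; to: string; label?: string };

// Topology as described in this post (edge labels are illustrative).
const pipelineEdges: Edge[] = [
  { from: "Input Bucket", to: "NLP Processor" },
  { from: "NLP Processor", to: "Safe Bucket", label: "no PII and sentiment not negative" },
  { from: "NLP Processor", to: "Moderated Bucket", label: "otherwise" },
];

// Mermaid node ids cannot contain spaces, so derive an id from the display name.
function nodeId(name: string): string {
  return name.replace(/\W+/g, "_");
}

// Render the edge list as a left-to-right Mermaid flowchart definition.
function toMermaid(edges: Edge[]): string {
  const lines: string[] = ["flowchart LR"];
  for (const { from, to, label } of edges) {
    const arrow = label ? `-->|${label}|` : "-->";
    lines.push(`  ${nodeId(from)}["${from}"] ${arrow} ${nodeId(to)}["${to}"]`);
  }
  return lines.join("\n");
}

console.log(toMermaid(pipelineEdges));
```

Pasting the printed text into any Mermaid renderer should give a small three-arrow diagram: one input, one processing step and two possible destinations.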

Here's how it works:

- 🔫 The pipeline is triggered every time we upload a file to the Input Bucket.

- 🗣️ It then calls the NLP processor, which is powered by Amazon Comprehend, to determine the dominant language, perform sentiment analysis and detect personally identifiable information (PII).

- ✅ The text is sent to the Safe Bucket if it doesn't contain PII data and has non-negative sentiment.

- ⛔ Otherwise, it is placed in the Moderated Bucket so it can be checked by a human reviewer.
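The routing rule in the last two steps can be sketched in plain TypeScript, independent of Lakechain itself. This is only an illustration of the decision logic: the `NlpResult` shape, the `route` function and the destination names are hypothetical, and treating `MIXED` as non-negative is an assumption. The sentiment labels are the four values Amazon Comprehend returns.

```typescript
// Sentiment labels returned by Amazon Comprehend sentiment analysis.
type Sentiment = "POSITIVE" | "NEUTRAL" | "MIXED" | "NEGATIVE";

// Hypothetical summary of the NLP results for one document; the actual
// Lakechain metadata schema may use different field names.
interface NlpResult {
  language: string;     // dominant language code, e.g. "en"
  sentiment: Sentiment; // overall sentiment of the text
  hasPii: boolean;      // true if any PII entity was detected
}

type Destination = "safe-bucket" | "moderated-bucket";

// A document is safe only if it contains no PII and its sentiment is not
// negative (assumption: MIXED counts as non-negative). Everything else
// goes to human review.
function route(result: NlpResult): Destination {
  const isSafe = !result.hasPii && result.sentiment !== "NEGATIVE";
  return isSafe ? "safe-bucket" : "moderated-bucket";
}

console.log(route({ language: "en", sentiment: "NEUTRAL", hasPii: false })); // safe-bucket
console.log(route({ language: "en", sentiment: "NEGATIVE", hasPii: false })); // moderated-bucket
console.log(route({ language: "en", sentiment: "POSITIVE", hasPii: true })); // moderated-bucket
```

Note that the two conditions are combined with a logical AND: a single PII hit or a negative sentiment is enough to send the document to the Moderated Bucket.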

If you're of the just-show-me-the-code persuasion, here's our text moderation stack in full:

✨ Good news, everyone! There's no need to create a new stack since the latest version of the code already contains an implementation of the text moderation pipeline.

In the Project Lakechain repo, just head over to the text moderation pipeline directory and build the example.

Once you've configured the AWS credentials and the target region, you can deploy the example to your account.

You can use the Amazon CloudWatch Logs console to live tail the log groups associated with the deployed middlewares and see the logs in real time.

Let's try to upload a file (Little Red Riding Hood 👧🔴👵🐺) to the Input Bucket and see what happens.

After a couple of seconds, the file, as well as some metadata, will be added to the Safe Bucket.

In the metadata file, we can see that the dominant language is English 💂, that the sentiment is NEUTRAL 😐 and that Amazon Comprehend has found no PII data 👤.

☝️ Feel free to try other documents, and don't forget to clean up everything when you're done.

See you next time! 👋

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.