Why Async Lambda with AWS AppSync?

Let's chat about a new feature for AWS AppSync, asynchronous Lambda invocation, and why it's awesome for generative AI applications.
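Here is a minimal sketch of what asynchronous invocation could look like in an AppSync JavaScript resolver, written in TypeScript. It assumes a Lambda data source and sets `invocationType: 'Event'` on the request object. With that setting, AppSync invokes the function without waiting for it to finish, so the mutation can return right away.

Several names are hypothetical:
- the `prompt` and `jobId` arguments;
- the `status` field;
- the local `ResolverContext` type, which stands in for the `Context` type from `@aws-appsync/utils` so the snippet runs on its own.

The function would still need to deliver its result to clients separately, for example through a mutation that a subscription listens on. That part is not shown.

```typescript
// Minimal local stand-in for the AppSync resolver context (hypothetical);
// a real resolver would import `Context` from '@aws-appsync/utils'.
interface ResolverContext {
  arguments: { prompt: string; jobId: string };
}

// Request handler: invoke the Lambda data source asynchronously.
// 'Event' means AppSync does not wait for the function to finish.
export function request(ctx: ResolverContext) {
  return {
    operation: 'Invoke',
    invocationType: 'Event',
    payload: {
      jobId: ctx.arguments.jobId,
      prompt: ctx.arguments.prompt,
    },
  };
}

// Response handler: this sketch does not rely on the function's result.
// It returns an acknowledgement the client can use to match later
// subscription messages (`status` is a hypothetical field).
export function response(ctx: ResolverContext) {
  return { jobId: ctx.arguments.jobId, status: 'PENDING' };
}
```

The client gets `PENDING` immediately, while the Lambda function does the slow work, such as calling a model, in the background.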

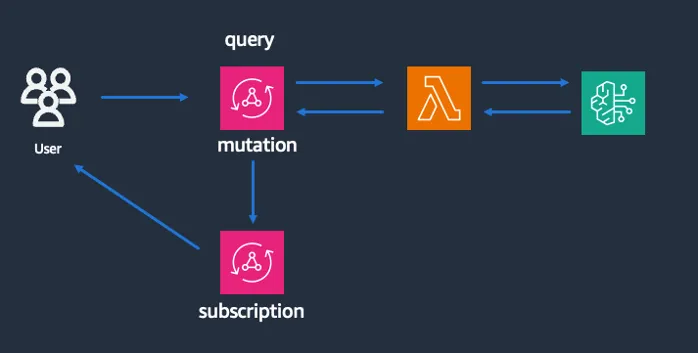

- Improved Scalability and Responsiveness: The API responds immediately while the Lambda function processes the task in the background.

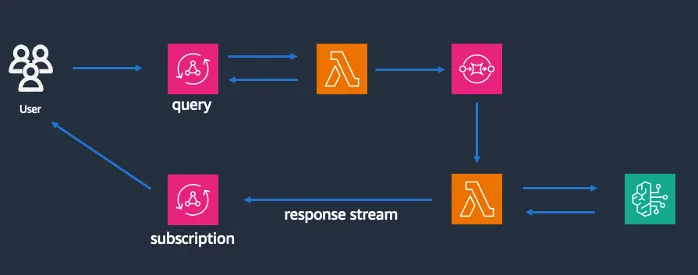

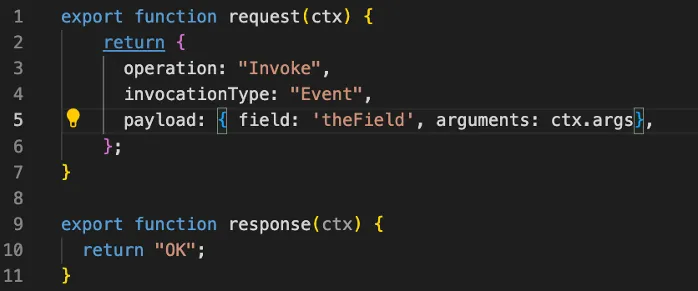

- Simplified Architecture: Fewer extra components are needed to handle long-running tasks that take longer than a synchronous request can wait.

- Enhanced Error Handling: Built-in retry mechanisms and failure destinations improve reliability.

- Use Case Versatility: Ideal for generative AI, data processing, and other long-running operations.

- Payload Size Limitations: Subscription responses are limited to 240 KB.

- Design Adjustments: Large payloads need a different design to fit within these limits, for example splitting a long result across several subscription messages.
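One such adjustment can be sketched in TypeScript, assuming the Lambda function sends its generated text back through a subscription in pieces. The code splits a string into chunks whose UTF-8 size stays within a chosen budget, without breaking multi-byte characters.

These parts are hypothetical:
- the 200 KB budget;
- the `chunkByUtf8Bytes` and `toMessages` helpers;
- the `jobId`/`index`/`total`/`content` message fields.

The budget is kept below 240 KB on the assumption that the limit applies to the whole message, not just the text.

```typescript
// Hypothetical budget: stays under AppSync's 240 KB subscription limit while
// leaving headroom for the other fields in each message.
const DEFAULT_MAX_CHUNK_BYTES = 200 * 1024;

// Split `text` into chunks whose UTF-8 encoding is at most `maxBytes` bytes,
// never cutting a character (including a surrogate pair) in half.
export function chunkByUtf8Bytes(
  text: string,
  maxBytes: number = DEFAULT_MAX_CHUNK_BYTES,
): string[] {
  // A single character can take up to 4 UTF-8 bytes, so smaller budgets
  // cannot be honored.
  if (maxBytes < 4) {
    throw new RangeError('maxBytes must be at least 4');
  }
  const chunks: string[] = [];
  let current = '';
  let currentBytes = 0;
  let i = 0;
  while (i < text.length) {
    const unit = text.charCodeAt(i);
    const next = i + 1 < text.length ? text.charCodeAt(i + 1) : -1;
    // A high surrogate followed by a low surrogate is one 4-byte character.
    const isPair = unit >= 0xd800 && unit <= 0xdbff && next >= 0xdc00 && next <= 0xdfff;
    const width = isPair ? 2 : 1; // UTF-16 code units in this character
    // UTF-8 size; a lone surrogate counts as 3 bytes (TextEncoder emits U+FFFD).
    const size = isPair ? 4 : unit < 0x80 ? 1 : unit < 0x800 ? 2 : 3;
    if (currentBytes + size > maxBytes) {
      chunks.push(current);
      current = '';
      currentBytes = 0;
    }
    current += text.slice(i, i + width);
    currentBytes += size;
    i += width;
  }
  if (current.length > 0) {
    chunks.push(current);
  }
  return chunks;
}

// Wrap each chunk in a message the client can reassemble in order.
// The field names are hypothetical, not an AppSync-defined schema.
export function toMessages(jobId: string, text: string, maxBytes?: number) {
  const parts = chunkByUtf8Bytes(text, maxBytes);
  return parts.map((content, index) => ({ jobId, index, total: parts.length, content }));
}
```

The client can sort messages by `index` and concatenate `content` once it has received `total` pieces.

Splitting on UTF-8 byte size rather than string length matters here. A JavaScript string's `length` counts UTF-16 code units, so text with many non-ASCII characters can exceed a byte limit even when its `length` looks safe.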

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.