Building a Serverless Web Scraper with a ~little~ lot of help from Amazon Q [Part 1]

I moved to Seattle at the end of 2021 on an L1b visa, and need to keep an eye on the priority dates for my Green Card application, so I decided to build an app that will pull the historic data for me and graph it.

Update: 2024-06-14: This turned out to be more than I initially thought, so it will be turned into a series of articles, this being the first. I'll be posting the follow-ups in this series, and linking them as a list.

All the code for this app can be found on GitHub, with the code as it was at the end of this article on the article-1 tag.

variable "aws_profile" {

default = "development"

}

variable "aws_region" {

default = "us-west-2"

}

variable "state_file_bucket_name" {

default = "tf-us-visa-dates-checker"

}

variable "state_file_lock_table_name" {

default = "tf-us-visa-dates-checker-statelock"

}

variable "kms_key_alias" {

default = "tf-us-visa-dates-checker"

}

terraform plan - I specifically never have the default profile configured due to the many different accounts I use. I would rather have an error that forces me to think about where I want to run something than accidentally deploy to, or make API calls against, the incorrect account. I have set up a profile for this project called development, so I export it via the AWS_PROFILE environment variable by calling:

export AWS_PROFILE=development

terraform apply to create those resources for the state file, uncomment the lines in statefile.tf, and then run terraform init to store the state file in S3.

Important: You cannot use variables in the terraform block for the backend, so make sure to copy the exact names for the resources from _bootstrap.tf.
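The habit of failing loudly when no profile is selected, rather than falling back to a default, can also be sketched in Python for scripts that call AWS. This is a minimal sketch and not part of the project: require_aws_profile is a hypothetical helper, and it only inspects the AWS_PROFILE environment variable mentioned above.

```python
import os


def require_aws_profile(env=None):
    """Return the selected AWS profile name, or raise if none is set.

    Hypothetical helper: it only checks the AWS_PROFILE environment
    variable, so nothing runs against an implicitly chosen account.
    """
    env = os.environ if env is None else env
    profile = env.get("AWS_PROFILE", "").strip()
    if not profile:
        raise RuntimeError(
            "AWS_PROFILE is not set; export it before running, "
            "for example: export AWS_PROFILE=development"
        )
    return profile


if __name__ == "__main__":
    try:
        print("Using profile:", require_aws_profile())
    except RuntimeError as error:
        print("Refusing to continue:", error)
```

Passing a plain dict instead of the real environment keeps the check easy to exercise without touching the shell.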

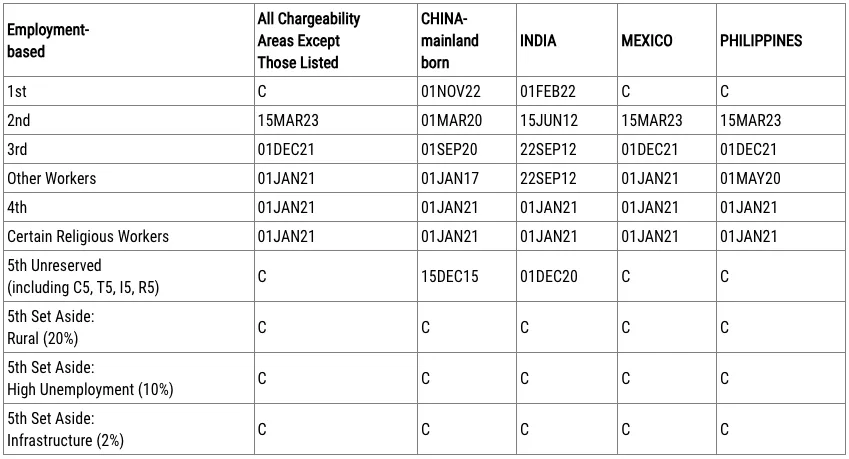

Thanks, can you show me how I would write a webpage scraper with Lambda to scrape all the pages linked from https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin.html with the format https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-july-2024.html - they should be linked from the first page listed. Each page has multiple sections and tables, I would like to only store the rows in the tables listed under the heading “A. FINAL ACTION DATES FOR EMPLOYMENT-BASED PREFERENCE CASES” and “B. DATES FOR FILING OF EMPLOYMENT-BASED VISA APPLICATIONS”. Can you show me how to create a Lambda function to do this and store the data in DynamoDB?

import requests

from bs4 import BeautifulSoup

import boto3

from datetime import datetime

# Initialize DynamoDB client

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('VisaBulletinData')

def scrape_visa_bulletin(url):

response = requests.get(url)

soup = BeautifulSoup(response.content, 'html.parser')

# Find the tables with the relevant data

employment_based_tables = soup.find_all('div', {'class': 'field-item even'})

# Extract data from the tables

employment_based_data = []

for table in employment_based_tables:

table_heading = table.find_previous('h3').text.strip()

if 'A. FINAL ACTION DATES FOR EMPLOYMENT-BASED PREFERENCE CASES' in table_heading or 'B. DATES FOR FILING OF EMPLOYMENT-BASED VISA APPLICATIONS' in table_heading:

rows = table.find_all('tr')

for row in rows[1:]: # Skip the header row

cols = row.find_all('td')

cols = [col.text.strip() for col in cols]

employment_based_data.append({

'table_heading': table_heading,

'data': cols

})

return employment_based_data

def lambda_handler(event, context):

base_url = 'https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin.html'

response = requests.get(base_url)

soup = BeautifulSoup(response.content, 'html.parser')

# Find all links to visa bulletin pages

links = soup.find_all('a', href=True)

visa_bulletin_links = [link['href'] for link in links if '/visa-bulletin-for-' in link['href']]

# Scrape data from each visa bulletin page

for link in visa_bulletin_links:

url = f"https://travel.state.gov{link}"

data = scrape_visa_bulletin(url)

for item in data:

table_name = datetime.now().strftime('%Y-%m-%d') + '_' + item['table_heading'].replace(' ', '_')

table.put_item(

Item={

'table_name': table_name,

'data': item['data']

}

)

return {

'statusCode': 200,

'body': 'Visa bulletin data scraped and stored in DynamoDB'

}

app.tf for now, may split it out later, here is the table definition so far (foreshadowing… :) ):

resource "aws_dynamodb_table" "visa_bulletin_data" {

name = "VisaBulletinData"

billing_mode = "PAY_PER_REQUEST"

hash_key = "table_name"

attribute {

name = "table_name"

type = "S" # String type

}

}

requirements.txt is handled. I find this article and like the approach more as part of the 3rd attempt:

- Install the dependencies in requirements.txt

- Zip these up

- Deploy it as a Lambda layer

requirements.txt change. I also move handler.py into /src, and add src/package and layer to my .gitignore. Now I need to make sure that requirements.txt has the correct imports - I’ve used Python enough to know about venv to install dependencies, but haven’t had to do it from scratch before. After poking around for a while, I learn that the “easiest” way would be to look at the import statements at the top of my code, call pip3 install <module> for each one (currently I have requests, boto3, and BeautifulSoup, which is installed as beautifulsoup4), and then run pip3 freeze > requirements.txt. This does highlight a problem in my current approach: I’ve been merrily setting up all the infrastructure with Terraform, but have not run my code at all. Once I have the infrastructure creation working, I will then focus on making sure the code works. I end up with the following Terraform in app.tf:

resource "aws_dynamodb_table" "visa_bulletin_data" {

name = "VisaBulletinData"

billing_mode = "PAY_PER_REQUEST"

hash_key = "table_name"

attribute {

name = "table_name"

type = "S" # String type

}

}

# Install dependencies and create the Lambda layer package

resource "null_resource" "pip_install" {

triggers = {

shell_hash = "${}"

}

provisioner "local-exec" {

command = <<EOF

cd src

echo "Create and activate venv"

python3 -m venv package

source package/bin/activate

mkdir -p ${path.cwd}/layer/python

echo "Install dependencies to ${path.cwd}/layer/python"

pip3 install -r requirements.txt -t ${path.cwd}/layer/python

deactivate

cd ..

EOF

}

}

# Zip up the app to deploy as a layer

data "archive_file" "layer" {

type = "zip"

source_dir = "${path.cwd}/layer"

output_path = "${path.cwd}/layer.zip"

depends_on = [null_resource.pip_install]

}

# Create the Lambda layer with the dependencies

resource "aws_lambda_layer_version" "layer" {

layer_name = "dependencies-layer"

filename = data.archive_file.layer.output_path

source_code_hash = data.archive_file.layer.output_base64sha256

compatible_runtimes = ["python3.12", "python3.11"]

}

# Zip of the application code

data "archive_file" "app" {

type = "zip"

source_dir = "${path.cwd}/src"

output_path = "${path.cwd}/app.zip"

}

# Define the Lambda function

resource "aws_lambda_function" "visa_bulletin_scraper" {

function_name = "visa-bulletin-scraper"

handler = "lambda_function.lambda_handler"

runtime = "python3.12"

filename = data.archive_file.app.output_path

source_code_hash = data.archive_file.app.output_base64sha256

role = aws_iam_role.lambda_role.arn

layers = [aws_lambda_layer_version.layer.arn]

environment {

variables = {

DYNAMODB_TABLE = aws_dynamodb_table.visa_bulletin_data.name

}

}

}

# Define the IAM role for the Lambda function

resource "aws_iam_role" "lambda_role" {

name = "visa-bulletin-scraper-role"

assume_role_policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Action": "sts:AssumeRole",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Effect": "Allow"

}

]

}

EOF

}

# Attach the necessary IAM policies to the role

resource "aws_iam_policy_attachment" "lambda_basic_execution" {

name = "lambda_basic_execution"

policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"

roles = [aws_iam_role.lambda_role.name]

}

resource "aws_iam_policy_attachment" "dynamodb_access" {

name = "dynamodb_access"

policy_arn = aws_iam_policy.dynamodb_access_policy.arn

roles = [aws_iam_role.lambda_role.name]

}

# Define the IAM policy for DynamoDB access

resource "aws_iam_policy" "dynamodb_access_policy" {

name = "visa-bulletin-scraper-dynamodb-access"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"dynamodb:PutItem"

],

"Resource": "${aws_dynamodb_table.visa_bulletin_data.arn}"

}

]

}

EOF

}

dynamodb:PutItem Action will only allow my Lambda to write to the table, but not read. I’m going to leave it here for now till we get there. On the first terraform plan, I ran into the following error, and had to run terraform init -upgrade to install the null and archive providers:

➜ us-visa-dates-checker git:(main) ✗ terraform plan

╷

│ Error: Inconsistent dependency lock file

│

│ The following dependency selections recorded in the lock file are inconsistent with the current configuration:

│ - provider registry.terraform.io/hashicorp/archive: required by this configuration but no version is selected

│ - provider registry.terraform.io/hashicorp/null: required by this configuration but no version is selected

│

│ To update the locked dependency selections to match a changed configuration, run:

│ terraform init -upgrade

src/local_test.py:

from handler import lambda_handler

class MockContext:

def __init__(self):

self.function_name = "mock_function_name"

self.aws_request_id = "mock_aws_request_id"

self.log_group_name = "mock_log_group_name"

self.log_stream_name = "mock_log_stream_name"

def get_remaining_time_in_millis(self):

return 300000 # 5 minutes in milliseconds

mock_context = MockContext()

mock_event = {

"key1": "value1",

"key2": "value2",

# Add any other relevant data for your event

}

result = lambda_handler(mock_event, mock_context)

print(result)

python3 local_test.py, and it will be able to access my AWS resources since we exported the environment variable AWS_PROFILE. And it looks like it works since I don’t see any errors:

(package) ➜ src git:(main) ✗ python3 local_test.py

{'statusCode': 200, 'body': 'Visa bulletin data scraped and stored in DynamoDB'}

print line in the scrape_visa_bulletin function:

def scrape_visa_bulletin(url):

print("Processing url: ", url)

local_test.py, I can see that it is processing all the bulletins, but there is no data in my DynamoDB table:

python3 local_test.py

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-june-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-july-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-july-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-june-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-may-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-april-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-march-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-february-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-january-2024.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-december-2023.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-november-2023.html

Processing url: https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin/2024/visa-bulletin-for-october-2023.html

...

{'statusCode': 200, 'body': 'Visa bulletin data scraped and stored in DynamoDB'}

<div> fields that match field-item even for the class as defined in this line in my app:

employment_based_tables = soup.find_all('div', {'class': 'field-item even'})

tables, rows, and cells to inspect the data. The code afterwards for scrape_visa_bulletin now looks like this (and it isn’t storing the data in the DB yet):

def scrape_visa_bulletin(url):

print("Processing url: ", url)

response = requests.get(url)

soup = BeautifulSoup(response.content, 'html.parser')

employment_based_tables = soup.find_all('tbody')

employment_based_data = []

# Date pattern for the table cell dates

date_pattern = r"(\d{2})([A-Z]{3})(\d{2})"

# Extract the date from the URL

bulletin_date_pattern = r'visa-bulletin-for-(\w+)-(\d+)\.html'

match = re.search(bulletin_date_pattern, url)

if match:

month_name, year = match.groups()

month_abbr = month_name[:3].lower()

month_num = datetime.strptime(month_abbr, '%b').month

date_obj = datetime(int(year), month_num, 1)

else:

date_obj = None

employment_table_id = 0

for table in employment_based_tables:

rows = table.find_all('tr')

countries = []

# From 2022 till 2024 the number of rows differ

if len(rows) < 9 or len(rows) > 12:

continue

filing_type = 'Final Date' if employment_table_id == 0 else 'Filing Date'

employment_table_id += 1

for row_id, row in enumerate(rows):

cells = row.find_all('td')

for cell_id, cell in enumerate(cells):

clean_cell = cell.text.replace("\n", "").replace(" ", " ").replace("- ", "-").strip()

if row_id == 0:

if cell_id == 0:

table_heading = clean_cell

print("Table heading: ", table_heading)

else:

countries.append(clean_cell)

else:

if cell_id == 0:

category_value = clean_cell

else:

match = re.match(date_pattern, clean_cell)

if match:

day = int(match.group(1))

month_str = match.group(2)

year = int(match.group(3)) + 2000 # Year is only last 2 digits

month = datetime.strptime(month_str, "%b").month

cell_date = datetime(year, month, day)

else:

cell_date = date_obj

try:

employment_based_data.append({

'filing_type': filing_type,

'country': countries[cell_id - 1],

'category': category_value,

'bulletin_date': date_obj,

'date': cell_date

})

print("Date: [", date_obj.strftime("%Y-%m-%d"), "], Filing Type: [", filing_type, "], Country: [", countries[cell_id - 1], "], Category: [", category_value, "], Value: [", cell_date.strftime("%Y-%m-%d"), "]")

except:

print("ERROR: Could not process the row. Row: ", row)

return employment_based_data

visa-bulletin-for-july-2024.html to determine the date of the bulletin, I extract that, and store it in the bulletin_date. The debug output from the print line after I add the data to employment_based_data looks mostly right to me at this point:

Table heading: Employment-based

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ All Chargeability Areas Except Those Listed ], Category: [ 1st ], Value: [ 2023-05-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ CHINA-mainland born ], Category: [ 1st ], Value: [ 2022-06-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ INDIA ], Category: [ 1st ], Value: [ 2022-06-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ MEXICO ], Category: [ 1st ], Value: [ 2023-05-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ PHILIPPINES ], Category: [ 1st ], Value: [ 2023-05-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ All Chargeability Areas Except Those Listed ], Category: [ 2nd ], Value: [ 2022-12-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ CHINA-mainland born ], Category: [ 2nd ], Value: [ 2019-07-08 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ INDIA ], Category: [ 2nd ], Value: [ 2012-05-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ MEXICO ], Category: [ 2nd ], Value: [ 2022-12-01 ]

Date: [ 2023-05-01 ], Filing Type: [ Filing Date ], Country: [ PHILIPPINES ], Category: [ 2nd ], Value: [ 2022-12-01 ]

... (many more rows)
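The two date formats handled in scrape_visa_bulletin (the month and year embedded in the bulletin URL, and table cells in DDMMMYY form) can be isolated into small helpers that are easy to check on their own. This is a minimal sketch, not the project's code: parse_bulletin_date and parse_cell_date are hypothetical names, the month lookup uses a fixed English list instead of strptime, and, like the code above, it assumes every two-digit year belongs to the 2000s.

```python
import re
from datetime import date

MONTHS = ["jan", "feb", "mar", "apr", "may", "jun",
          "jul", "aug", "sep", "oct", "nov", "dec"]

URL_PATTERN = re.compile(r"visa-bulletin-for-([a-z]+)-(\d{4})\.html")
CELL_PATTERN = re.compile(r"(\d{2})([A-Z]{3})(\d{2})")


def parse_bulletin_date(url):
    """Return the first day of the bulletin month named in the URL, or None."""
    match = URL_PATTERN.search(url.lower())
    if not match:
        return None
    month_name, year = match.groups()
    abbr = month_name[:3]
    if abbr not in MONTHS:
        return None
    return date(int(year), MONTHS.index(abbr) + 1, 1)


def parse_cell_date(cell_text, fallback):
    """Parse a cell in DDMMMYY form; return fallback for anything else."""
    match = CELL_PATTERN.fullmatch(cell_text.strip())
    if not match:
        return fallback
    day, month_abbr, short_year = match.groups()
    abbr = month_abbr.lower()
    if abbr not in MONTHS:
        return fallback
    # Assumption carried over from the article's code: years are 20xx.
    return date(2000 + int(short_year), MONTHS.index(abbr) + 1, int(day))


bulletin = parse_bulletin_date(
    "https://travel.state.gov/content/travel/en/legal/visa-law0/"
    "visa-bulletin/2024/visa-bulletin-for-july-2024.html"
)
print(bulletin)                               # 2024-07-01
print(parse_cell_date("08JUL19", bulletin))   # 2019-07-08
print(parse_cell_date("C", bulletin))         # 2024-07-01 (fallback)
```

Cells that do not hold a date, such as the C marker the bulletins use for "current", fall back to the bulletin date here, which mirrors the else branch in the function above.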

data: [

{

filing_type: Final Action Date

category: EB-3

countries: [

{

country: "All Chargeability Areas Except Those Listed"

history: [

{ bulletin_date: 2024-07-01, date: 2021-12-01},

{ bulletin_date: 2024-06-01, date: 2022-11-22},

{ bulletin_date: 2024-05-01, date: 2022-11-22},

{ bulletin_date: 2024-04-01, date: 2022-11-22},

{ bulletin_date: 2024-03-01, date: 2022-09-08},

]

}

]

}

]

def transform_data(employment_based_data):

# Assuming employment_based_data is your initial collection

transformed_data = []

# Group the data by filing_type and category

grouped_data = defaultdict(lambda: defaultdict(list))

for item in employment_based_data:

filing_type = item['filing_type']

category = item['category']

country = item['country']

bulletin_date = item['bulletin_date']

date = item['date']

grouped_data[filing_type][category].append({

'country': country,

'bulletin_date': bulletin_date,

'date': date

})

# Transform the grouped data into the desired structure

for filing_type, categories in grouped_data.items():

for category, country_data in categories.items():

countries = defaultdict(list)

for item in country_data:

country = item['country']

bulletin_date = item['bulletin_date']

date = item['date']

countries[country].append({

'bulletin_date': bulletin_date,

'date': date

})

transformed_data.append({

'filing_type': filing_type,

'category': category,

'countries': [

{

'country': country_name,

'history': history_data

}

for country_name, history_data in countries.items()

]

})

transformed_data = transform_data(data) to the lambda_handler. All good so far, except when I run the code, it returns None for transformed_data. After wrapping the code in try blocks, I still can’t see the issue, and even with some more help, it stays None. I even try json-serializing the objects to better inspect them, which results in me learning about custom serialization in Python via:

# Custom serialization function for datetime objects

def datetime_serializer(obj):

if isinstance(obj, datetime):

return obj.strftime("%Y-%m-%d")

raise TypeError(f"Type {type(obj)} not serializable")

...

print(json.dumps(transformed_data, default=datetime_serializer, indent=4))

print statements and json.dumps later, I still see the correct data in grouped_data and transformed_data (inside the transform_data method though). Then it hits me: if I’m calling a method and using the result of it, that method should probably have a return call in it… Right, let’s pretend that didn’t happen and I had return transformed_data in there the whole time… Ahem!
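The two lessons from this debugging session, an explicit return and a default= serializer for json.dumps, fit in one small sketch. group_history is a hypothetical, flattened variant of transform_data (it is not the project's function), and the sample rows are illustrative values only.

```python
import json
from collections import defaultdict
from datetime import datetime


def datetime_serializer(obj):
    """Let json.dumps render datetime objects as YYYY-MM-DD strings."""
    if isinstance(obj, datetime):
        return obj.strftime("%Y-%m-%d")
    raise TypeError(f"Type {type(obj)} not serializable")


def group_history(rows):
    """Group flat rows into one history list per filing type, category, country."""
    grouped = defaultdict(list)
    for row in rows:
        key = (row["filing_type"], row["category"], row["country"])
        grouped[key].append(
            {"bulletin_date": row["bulletin_date"], "date": row["date"]}
        )
    result = [
        {"filing_type": filing, "category": category,
         "country": country, "history": history}
        for (filing, category, country), history in grouped.items()
    ]
    return result  # without this line the caller silently receives None


sample_rows = [
    {"filing_type": "Final Date", "category": "3rd",
     "country": "All Chargeability Areas Except Those Listed",
     "bulletin_date": datetime(2024, 7, 1), "date": datetime(2021, 12, 1)},
    {"filing_type": "Final Date", "category": "3rd",
     "country": "All Chargeability Areas Except Those Listed",
     "bulletin_date": datetime(2024, 6, 1), "date": datetime(2022, 11, 22)},
]

grouped = group_history(sample_rows)
print(json.dumps(grouped, default=datetime_serializer, indent=4))
```

A Python function that ends without a return statement returns None, which is exactly the symptom described above.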

resource "aws_dynamodb_table" "visa_bulletin_data" {

name = "VisaBulletinData"

billing_mode = "PROVISIONED"

read_capacity = 5

write_capacity = 5

hash_key = "pk" # Partition key

range_key = "sk" # Sort key

attribute {

name = "pk"

type = "S" # String type

}

attribute {

name = "sk"

type = "S" # String type

}

global_secondary_index {

name = "CountryIndex"

hash_key = "pk"

range_key = "sk"

projection_type = "ALL"

}

}

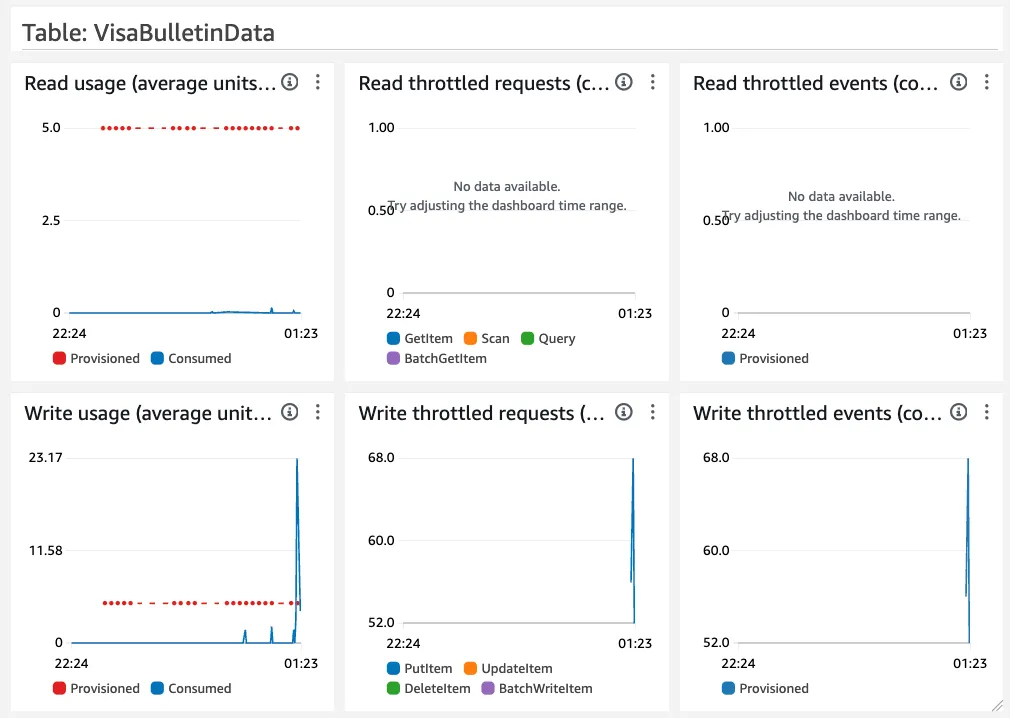

terraform apply this change, it returns the error write capacity must be > 0 when billing mode is PROVISIONED. After looking at the dynamodb_table resource definition, it appears you need to specify write_capacity and read_capacity for the global_secondary_index as well. With that fixed, I try to run the “final” version for today’s efforts, but it errors with:

Traceback (most recent call last):

File "/Users/cobusb/projects/terraform-samples/us-visa-dates-checker/src/local_test.py", line 21, in <module>

result = lambda_handler(mock_event, mock_context)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/Users/cobusb/projects/terraform-samples/us-visa-dates-checker/src/handler.py", line 203, in lambda_handler

eb3_data = read_data()

^^^^^^^^^^^

File "/Users/cobusb/projects/terraform-samples/us-visa-dates-checker/src/handler.py", line 175, in read_data

KeyConditionExpression=Key('pk').eq(pk) & Key('sk').begins_with(sk_prefix)

^^^

NameError: name 'Key' is not defined

Key function is part of the boto3.dynamodb.conditions module, and you need to import it explicitly in your Python script.” Yes. This is me not paying attention to the output. After adding the import, the error is gone, but I do notice that after I changed the logic in lambda_handler it errors.

for link in visa_bulletin_links:

if '2022' in link or '2023' in link or '2024' in link:

print("Processing link: ", link)

url = f"https://travel.state.gov{link}"

data = scrape_visa_bulletin(url)

transformed_data = transform_data(data)

for link in visa_bulletin_links:

if '2022' in link or '2023' in link or '2024' in link:

print("Processing link: ", link)

url = f"https://travel.state.gov{link}"

data.append(scrape_visa_bulletin(url))

transformed_data = transform_data(data)

store_data(transformed_data)

Note: This code assumes that the employment_based_data collection contains unique combinations of filing_type, country, category, and bulletin_date. If there are duplicate entries, you may need to modify the code to handle them appropriately (e.g., by keeping only the latest entry or aggregating the data in some way).

for item in employment_based_data:

filing_type = item['filing_type']

category = item['category']

country = item['country']

bulletin_date = item['bulletin_date']

date = item['date']

grouped_data[filing_type][category].append({

'country': country,

'bulletin_date': bulletin_date,

'date': date

})

data.append(scrape_visa_bulletin(url))

scrape_visa_bulletin is a list, not a single object, so using .append() causes the issue as it will add that whole list as a single object in my new list. Instead, I need to use .extend(). While debugging this and looking at how the data is stored, I also realise that we don’t need transform_data at all: if you look at what is being stored via table.put_item, the flat list of objects we extract from the web pages would work:

pk = f"{filing_type}#{category}"

sk = f"{country}#{bulletin_date}"

table.put_item(

Item={

'pk': pk,

'sk': sk,

'filing_type': filing_type,

'category': category,

'country': country,

'bulletin_date': bulletin_date,

'date': date

}

)
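To make the .append() versus .extend() difference concrete, here is a minimal sketch. scrape_stub is a hypothetical stand-in for scrape_visa_bulletin that returns a list of row dictionaries without making any network calls.

```python
def scrape_stub(url):
    """Hypothetical stand-in for scrape_visa_bulletin: returns a list of rows."""
    return [
        {"url": url, "category": "1st"},
        {"url": url, "category": "2nd"},
    ]


urls = ["bulletin-a", "bulletin-b"]

nested = []  # built with append: one inner list per URL
flat = []    # built with extend: one dict per row

for url in urls:
    nested.append(scrape_stub(url))
    flat.extend(scrape_stub(url))

print(len(nested), type(nested[0]).__name__)  # 2 list
print(len(flat), type(flat[0]).__name__)      # 4 dict
```

Code that then does item['filing_type'] on each element works on the flat list, but fails on the nested one because each element is itself a list.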

for item in data:

filing_type = item['filing_type']

category = item['category']

country = item['country']

bulletin_date = item['bulletin_date']

date = item['date']

pk = f"{filing_type}#{category}"

sk = f"{country}"

table.put_item(

Item={

'pk': pk,

'sk': sk,

'filing_type': filing_type,

'category': category,

'country': country,

'bulletin_date': bulletin_date,

'date': date

}

)

ProcessedURLs and updating the code by adding processed_urls_table = dynamodb.Table('ProcessedURLs') at the start of handler.py under our existing reference to VisaBulletinData, and then update lambda_handler to:

def lambda_handler(event, context):

... #skipped for brevity

# Scrape data from each visa bulletin page

for link in visa_bulletin_links:

if '2022' in link or '2023' in link or '2024' in link:

# Check if the URL has been processed

response = processed_urls_table.get_item(Key={'url': link})

if 'Item' in response:

print(f"Skipping URL: {link} (already processed)")

continue

# Process the URL

print(f"Processing URL: {link}")

url = f"https://travel.state.gov{link}"

data.extend(scrape_visa_bulletin(url))

# Store the processed URL in DynamoDB

processed_urls_table.put_item(Item={'url': link})
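The skip-if-processed logic above can be tried without any AWS resources. This is a sketch under stated assumptions: InMemoryTable is a hypothetical stand-in that mimics only the get_item and put_item calls used in lambda_handler, and process_new_links is a hypothetical extraction of the loop body.

```python
class InMemoryTable:
    """Hypothetical stand-in for the two DynamoDB Table calls used above."""

    def __init__(self):
        self._items = {}

    def get_item(self, Key):
        # Like DynamoDB, omit the 'Item' key when nothing is stored.
        item = self._items.get(Key["url"])
        return {"Item": item} if item is not None else {}

    def put_item(self, Item):
        self._items[Item["url"]] = Item


def process_new_links(links, processed_table, scrape):
    """Scrape only links not yet recorded, then record them as processed."""
    data = []
    for link in links:
        if "Item" in processed_table.get_item(Key={"url": link}):
            continue  # already processed on an earlier run
        data.extend(scrape(link))
        # Recorded only after scraping succeeded, so failures are retried.
        processed_table.put_item(Item={"url": link})
    return data


if __name__ == "__main__":
    table = InMemoryTable()
    fake_scrape = lambda link: [{"link": link}]
    print(len(process_new_links(["/a", "/b"], table, fake_scrape)))
    print(len(process_new_links(["/a", "/b", "/c"], table, fake_scrape)))
```

One design point worth keeping in mind: if the scraped rows are stored in a later step, a URL marked as processed here would not be scraped again even if that later store fails.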

And create the table via Terraform:

resource "aws_dynamodb_table" "processed_urls" {

name = "ProcessedURLs"

billing_mode = "PROVISIONED"

read_capacity = 5

write_capacity = 5

hash_key = "url"

attribute {

name = "url"

type = "S"

}

}

VisaBulletinData table? Let’s combine that into a single request! At first glance, I’m confused why it created 2 statements instead of adding the 2 resources as an array in the Resource section. Looking a 2nd time, I spot the difference in the statement Action sections:

resource "aws_iam_policy" "dynamodb_access_policy" {

name = "visa-bulletin-scraper-dynamodb-access"

policy = <<EOF

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"dynamodb:GetItem",

"dynamodb:PutItem"

],

"Resource": "${aws_dynamodb_table.processed_urls.arn}"

},

{

"Effect": "Allow",

"Action": [

"dynamodb:GetItem",

"dynamodb:Query"

],

"Resource": "${aws_dynamodb_table.visa_bulletin_data.arn}"

}

]

}

EOF

}

VisaBulletinData table to read from it, we are not using a .get() call, but a .query() call. I would have missed that 😳 … Nice to see that someone else is at least paying attention.

table.put_item(

pk = f"{filing_type}#{category}"

sk = f"{country}"

table.put_item(

Item={

'pk': pk,

'sk': sk,

'filing_type': filing_type,

'category': category,

'country': country,

'bulletin_date': bulletin_date,

'date': date

}

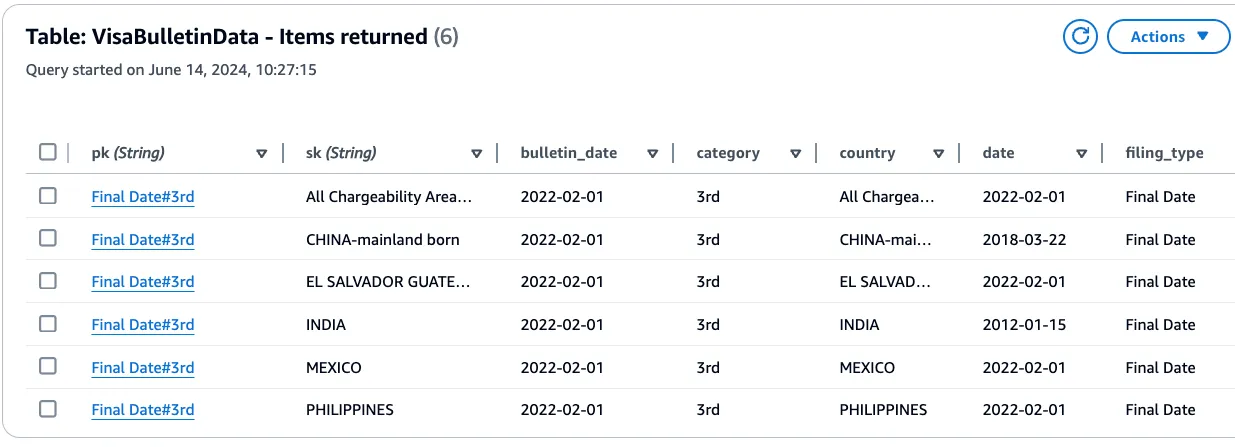

)Final Date#3rd, and it can only store 1 entry per country with 1 bulletin_date and date stored:

- Fix the data structure to store all the data

- Test the Lambda function to see if it works

- Add parameters to the function to be able to only select the specific set of dates you want

- Decide how to expose the Lambda function - either using AWS API Gateway as I have before, or setting up an invocation URL

- Set up a CI/CD pipeline to deploy it

- Add in tests
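The single-entry-per-country problem follows from how put_item treats the primary key: writing an item whose partition key and sort key already exist replaces the stored item. A minimal sketch, assuming a plain dict keyed by (pk, sk) as a stand-in for the table (store is a hypothetical helper, and the rows are illustrative):

```python
def store(rows, include_bulletin_date):
    """Simulate put_item on a table keyed by (pk, sk) using a plain dict."""
    table = {}
    for row in rows:
        pk = f"{row['filing_type']}#{row['category']}"
        sk = row["country"]
        if include_bulletin_date:
            sk = f"{sk}#{row['bulletin_date']}"
        # Writing an existing (pk, sk) replaces the item, as put_item does.
        table[(pk, sk)] = row
    return table


rows = [
    {"filing_type": "Final Date", "category": "3rd",
     "country": "All Chargeability Areas Except Those Listed",
     "bulletin_date": "2024-06-01", "date": "2022-11-22"},
    {"filing_type": "Final Date", "category": "3rd",
     "country": "All Chargeability Areas Except Those Listed",
     "bulletin_date": "2024-07-01", "date": "2021-12-01"},
]

print(len(store(rows, include_bulletin_date=False)))  # 1
print(len(store(rows, include_bulletin_date=True)))   # 2
```

Adding the bulletin date to the sort key keeps one item per bulletin, and it still fits the begins_with(sk_prefix) condition used in read_data, since the country remains the prefix.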

def read_data_locally(data, filing_type = 'Final Date', category = '3rd', country = 'All Chargeability Areas Except Those Listed'):

# Filter the data based on filing_type, category, and country

filtered_data = [entry for entry in data

if entry['filing_type'] == filing_type

and entry['category'] == category

and entry['country'] == country]

# Sort the filtered data in descending order by bulletin_date

sorted_data = sorted(filtered_data, key=itemgetter('bulletin_date'), reverse=True)

# Print the sorted data

for entry in sorted_data:

print(f"Bulletin Date: {entry['bulletin_date']}, Date: {entry['date']}")

return sorted_data

print statement:

Bulletin Date: 2024-07-01, Date: 2021-12-01

Bulletin Date: 2024-06-01, Date: 2022-11-22

Bulletin Date: 2024-05-01, Date: 2022-11-22

Bulletin Date: 2024-04-01, Date: 2022-11-22

Bulletin Date: 2024-03-01, Date: 2022-09-08

...

Bulletin Date: 2022-02-01, Date: 2022-02-01

Bulletin Date: 2022-01-01, Date: 2022-01-01

Bulletin Date: 2021-12-01, Date: 2021-12-01

Bulletin Date: 2021-11-01, Date: 2021-11-01

Bulletin Date: 2021-10-01, Date: 2021-10-01

requirements.txt (correctly) populated took me longer than I expected. It was definitely a lot faster and easier with Amazon Q Developer than with my old approach of googling things piecemeal. If I had to summarise it, the conversational aspect, along with not needing to sit and think about exactly which keywords to add, makes a huge difference. Usually I would open several search results that are kind-of-but-not-quite what I’m looking for, and spend the first 10 seconds assessing each source by scrolling the page, looking at when it was published, and judging whether it feels trustworthy. Then I would need to string together multiple parts from different sources unless I was really lucky and found exactly what I was looking for.

transform_data method only to realise I didn’t need it at all. All my experience over the last 32 years (GET OFF MY LAWN YA KIDS, I ENJOYED CHANGING DOS 6.22 MENUS!!!) is still very relevant.

pip installs makes complete sense to me, and this is a really good way to split deploying changes between the application and the dependencies. I can see some interesting ways you could use this to test out upgrading the dependency versions and then testing them before you roll them out to production…

path.cwd and path.module, and I will need to read up more. Most of the time, I’ve seen path.module used, but it looks like path.cwd should be used instead.

README.md on how to run it locally.

- Amazon Bedrock: This is the core service you’ll use to build your generative AI application. With Bedrock, you can choose from various foundation models (FMs) like Anthropic’s Claude, AI21 Labs’ Jurassic-2, and Amazon’s Titan models. You can also create Knowledge Bases and Agents to integrate external data sources and execute complex tasks. [1]

- AWS Lambda: You can use AWS Lambda functions to fetch data from public websites and integrate with Amazon Bedrock. Lambda functions can be triggered by various events, such as API Gateway requests or scheduled events.

- Amazon API Gateway: If you want to expose your application as an API, you can use API Gateway to create, publish, and manage APIs that can invoke your Lambda functions.

- Amazon S3: You might need to store the data fetched from public websites in Amazon S3 buckets, which can then be used by your Bedrock Knowledge Bases or Agents.

- AWS IAM: You’ll need to manage permissions and roles using AWS Identity and Access Management (IAM) to allow your Lambda functions and Bedrock resources to access other AWS services securely.

- Terraform: You can use Terraform to define and provision the necessary AWS resources, such as Lambda functions, API Gateway, S3 buckets, IAM roles, and Bedrock resources, in a declarative and reproducible manner.

This is mostly ok, except I prefer not to create the infrastructure to store the state file by hand, so I usually have _bootstrap.tf that contains the details of my base infrastructure for Terraform, create the S3 bucket, DynamoDB locking table, and KMS key with that, and then I add in the backend config to use those resources.

- Create an S3 bucket: First, you’ll need to create an S3 bucket to store the state file. You can do this using the AWS Management Console, AWS CLI, or Terraform itself. Make sure to choose a unique bucket name and configure the appropriate access controls and versioning settings.

- Configure the Terraform backend: In your Terraform configuration, you’ll need to specify the S3 backend for storing the state file. Create a `backend.tf` file with the following content:

```hcl
terraform {
  backend "s3" {
    bucket         = "XXXXXXXXXXXXXXXX"           # Replace with your S3 bucket name
    key            = "path/to/state/file.tfstate" # Specify the path and name of the state file
    region         = "your-aws-region"            # Replace with your AWS region
    dynamodb_table = "your-dynamodb-table-name"   # Optional, for state locking
  }
}
```

- Initialize the backend: Run `terraform init` to initialize the Terraform working directory and configure the S3 backend.

- Configure AWS credentials: Terraform needs access to your AWS credentials to interact with the S3 bucket and other AWS resources. You can configure your AWS credentials using environment variables, shared credentials file, or other methods supported by the AWS provider.

- Apply the configuration: Run `terraform apply` to create the S3 bucket (if it doesn’t exist) and store the initial state file in the bucket.

```python
import requests
from bs4 import BeautifulSoup
import boto3
from datetime import datetime

# Initialize DynamoDB client
dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('VisaBulletinData')
```

```python
def scrape_visa_bulletin(url):
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'html.parser')

    # Find the tables with the relevant data
    employment_based_tables = soup.find_all('div', {'class': 'field-item even'})

    # Extract data from the tables
    employment_based_data = []
    for table in employment_based_tables:
        table_heading = table.find_previous('h3').text.strip()
        if 'FINAL ACTION DATES' in table_heading or 'DATES FOR FILING' in table_heading:
            rows = table.find_all('tr')
            for row in rows[1:]:  # Skip the header row
                cols = row.find_all('td')
                cols = [col.text.strip() for col in cols]
                employment_based_data.append({
                    'table_heading': table_heading,
                    'data': cols
                })

    return employment_based_data
```

`<div>` elements with the class `field-item even`. For each table, it checks if the heading matches the desired sections (“FINAL ACTION DATES” or “DATES FOR FILING”). If so, it extracts the data from the table rows, skipping the header row, and appends it to a list.

```python
def lambda_handler(event, context):
    base_url = 'https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin.html'
    response = requests.get(base_url)
    soup = BeautifulSoup(response.content, 'html.parser')

    # Find all links to visa bulletin pages
    links = soup.find_all('a', href=True)
    visa_bulletin_links = [link['href'] for link in links if '/visa-bulletin-for-' in link['href']]

    # Scrape data from each visa bulletin page
    for link in visa_bulletin_links:
        url = f"https://travel.state.gov{link}"
        data = scrape_visa_bulletin(url)
        for item in data:
            table_name = datetime.now().strftime('%Y-%m-%d') + '_' + item['table_heading'].replace(' ', '_')
            table.put_item(
                Item={
                    'table_name': table_name,
                    'data': item['data']
                }
            )

    return {
        'statusCode': 200,
        'body': 'Visa bulletin data scraped and stored in DynamoDB'
    }
```

`lambda_handler` function, we first fetch the base URL (https://travel.state.gov/content/travel/en/legal/visa-law0/visa-bulletin.html) and find all the links to visa bulletin pages using BeautifulSoup. Then, for each visa bulletin page link, we call the `scrape_visa_bulletin` function to extract the relevant data.

`put_item` method of the DynamoDB table resource.

`VisaBulletinData` with a partition key named `table_name` (string type). You can do this using the AWS Management Console, AWS CLI, or Terraform.

There is a change needed here: it should use the full heading I supplied, as there are other sections starting with `FINAL ACTION DATES` and `DATES FOR FILING`. I do like how Q extracted `'/visa-bulletin-for-'` for the individual bulletin links.
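To make the point about full headings concrete, here is a minimal sketch of an exact-match check that could replace the substring test. The names `TARGET_HEADINGS`, `normalise_heading`, and `is_target_heading` are hypothetical, and I am assuming the heading text on the page matches the wording from my prompt apart from whitespace and letter case.

```python
# Hypothetical helper names; the heading wording is taken from the prompt above.
TARGET_HEADINGS = (
    "A. FINAL ACTION DATES FOR EMPLOYMENT-BASED PREFERENCE CASES",
    "B. DATES FOR FILING OF EMPLOYMENT-BASED VISA APPLICATIONS",
)


def normalise_heading(text):
    # Collapse runs of whitespace (including non-breaking spaces) and ignore case
    return " ".join(text.split()).upper()


def is_target_heading(text):
    # True only when the whole heading matches one of the two wanted sections
    wanted = {normalise_heading(heading) for heading in TARGET_HEADINGS}
    return normalise_heading(text) in wanted
```

In `scrape_visa_bulletin`, the condition would then become `if is_target_heading(table_heading):`, so a section that merely starts with the same words is skipped.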

```hcl
resource "aws_dynamodb_table" "visa_bulletin_data" {
  name         = "VisaBulletinData"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "table_name"

  attribute {
    name = "table_name"
    type = "S" # String type
  }
}
```

- `resource "aws_dynamodb_table" "visa_bulletin_data" { ... }` defines a new DynamoDB table resource.
- `name = "VisaBulletinData"` sets the name of the DynamoDB table.
- `billing_mode = "PAY_PER_REQUEST"` specifies that the table should use the pay-per-request billing mode, where you pay for the read and write throughput consumed by your application.
- `hash_key = "table_name"` defines the partition key (hash key) for the table as `table_name`.
- `attribute { ... }` block defines the attribute for the partition key, specifying its name (`table_name`) and type (`S` for String).

`terraform apply` to create the DynamoDB table with the specified settings.

```hcl
provider "aws" {
  region = "your-aws-region"
  # Configure AWS credentials if not using environment variables or shared credentials file
}
```

`terraform apply` again to update the table.

Since we already defined the AWS provider for Terraform, we can discard that part.

```hcl
# Define the Lambda function
resource "aws_lambda_function" "visa_bulletin_scraper" {
  filename         = "lambda_function.zip"
  function_name    = "visa-bulletin-scraper"
  role             = aws_iam_role.lambda_role.arn
  handler          = "lambda_function.lambda_handler"
  runtime          = "python3.9"
  source_code_hash = filebase64sha256("lambda_function.zip")

  environment {
    variables = {
      DYNAMODB_TABLE = aws_dynamodb_table.visa_bulletin_data.name
    }
  }
}

# Define the IAM role for the Lambda function
resource "aws_iam_role" "lambda_role" {
  name = "visa-bulletin-scraper-role"

  assume_role_policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": "sts:AssumeRole",
      "Principal": {
        "Service": "lambda.amazonaws.com"
      },
      "Effect": "Allow"
    }
  ]
}
EOF
}

# Attach the necessary IAM policies to the role
resource "aws_iam_policy_attachment" "lambda_basic_execution" {
  policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"
  roles      = [aws_iam_role.lambda_role.name]
}

resource "aws_iam_policy_attachment" "dynamodb_access" {
  policy_arn = aws_iam_policy.dynamodb_access_policy.arn
  roles      = [aws_iam_role.lambda_role.name]
}

# Define the IAM policy for DynamoDB access
resource "aws_iam_policy" "dynamodb_access_policy" {
  name = "visa-bulletin-scraper-dynamodb-access"

  policy = <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "dynamodb:PutItem"
      ],
      "Resource": "${aws_dynamodb_table.visa_bulletin_data.arn}"
    }
  ]
}
EOF
}
```

`aws_lambda_function` resource defines the Lambda function. It specifies the filename of the ZIP archive containing the Python code (`lambda_function.zip`), the function name, the IAM role for the function, the handler function (`lambda_function.lambda_handler`), and the Python runtime version (`python3.9`). The `source_code_hash` attribute is used to trigger updates when the source code changes. The `environment` block sets the `DYNAMODB_TABLE` environment variable with the name of the DynamoDB table.

`aws_iam_role` resource defines the IAM role that the Lambda function will assume. The `assume_role_policy` specifies that the role can be assumed by the Lambda service.

`aws_iam_policy_attachment` resources attach the necessary IAM policies to the Lambda role. The `AWSLambdaBasicExecutionRole` policy provides basic permissions for Lambda functions, and the `dynamodb_access_policy` (defined below) grants access to the DynamoDB table.

`aws_iam_policy` resource defines an IAM policy that grants the `PutItem` permission on the DynamoDB table. This policy is attached to the Lambda role in the previous step.

- Create a ZIP archive (`lambda_function.zip`) containing your Python code (`lambda_function.py`).
- Update the `filename` attribute in the `aws_lambda_function` resource with the correct path to the ZIP archive.
- Replace the `runtime` attribute with the appropriate Python version if you’re using a different version.
- Configure the AWS provider with your AWS credentials and region.

`source_code_hash` attribute in the Terraform configuration. Then, run `terraform apply` again to update the Lambda function.

This is close, but not quite there: I don’t want to add a manual step to zip up the Python app. I’m also assuming I need to run a command to install the dependencies, or store them somewhere. I also notice that the IAM permission is only for writing to the table, not reading, but that is fine for now, as we haven’t gotten to the part where we need to access the data. Let’s follow up with another question on how to avoid the manual zipping of the file.

`archive_file` data source and the `null_resource` resource with `local-exec` provisioners to achieve this. [1]

```hcl
# Create a ZIP archive of the Lambda function code
data "archive_file" "lambda_zip" {
  type        = "zip"
  source_dir  = "${path.module}/lambda_code/" # Directory containing the Lambda function code
  output_path = "${path.module}/lambda_function.zip"
}

# Install dependencies and create the Lambda package
resource "null_resource" "lambda_package" {
  triggers = {
    source_code_hash = data.archive_file.lambda_zip.output_base64sha256
  }

  provisioner "local-exec" {
    command = <<EOF
      python3 -m venv package
      source package/bin/activate
      pip install -r ${path.module}/lambda_code/requirements.txt -t package/python
      deactivate
      cd package/python
      zip -r9 ${path.module}/lambda_function.zip .
      cd ../..
      rm -rf package
EOF
  }
}

# Define the Lambda function
resource "aws_lambda_function" "visa_bulletin_scraper" {
  filename         = data.archive_file.lambda_zip.output_path
  function_name    = "visa-bulletin-scraper"
  role             = aws_iam_role.lambda_role.arn
  handler          = "lambda_function.lambda_handler"
  runtime          = "python3.9"
  source_code_hash = data.archive_file.lambda_zip.output_base64sha256

  environment {
    variables = {
      DYNAMODB_TABLE = aws_dynamodb_table.visa_bulletin_data.name
    }
  }

  depends_on = [null_resource.lambda_package]
}

# ... (IAM role and policy definitions remain the same)
```

- The `data "archive_file" "lambda_zip"` block creates a ZIP archive of the Lambda function code located in the `lambda_code/` directory.
- The `resource "null_resource" "lambda_package"` block is used to install dependencies and create the final Lambda package. The `triggers` block ensures that this resource is re-created whenever the source code changes.
  - The `local-exec` provisioner runs a series of commands:
    - Create a Python virtual environment (`venv`).
    - Activate the virtual environment and install the dependencies listed in the `requirements.txt` file into the `package/python` directory.
    - Deactivate the virtual environment.
    - Change to the `package/python` directory and create a new ZIP archive containing the installed dependencies and the Lambda function code.
    - Remove the temporary `package` directory.
- The `aws_lambda_function` resource is updated to use the `filename` and `source_code_hash` values from the `archive_file` data source. The `depends_on` attribute ensures that the `null_resource` is executed before creating or updating the Lambda function.

`terraform apply`. The final ZIP archive will be created in the same directory as your Terraform configuration.

`${path.module}/lambda_code/` with the actual path to your Lambda function code directory, and `${path.module}/lambda_code/requirements.txt` with the path to your Python requirements file (if you have one).

`null_resource` with `local-exec` provisioners should be used as a last resort solution, as it can introduce potential issues with remote execution environments like Terraform Cloud. If possible, consider using a more robust packaging solution like AWS Lambda Layers or Docker containers for managing dependencies.

The mention of `${path.module}` makes me think this code is from a module, so I will need to update it. Looking at the steps, I don’t think this will work. The `resource "null_resource" "lambda_package"` block uses the `data.archive_file.lambda_zip.output_base64sha256` as a trigger to run the commands in `provisioner "local-exec"`, which makes sense as a way to decide whether a new build is needed based on whether anything has changed. Further down, though, it doesn’t use the `zip` created by this `local-exec`, just the original one containing only the source code, so I will need to update this.
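To illustrate what the deployed archive needs to contain, here is a rough Python sketch that combines the function source and the installed dependencies into a single ZIP. This is only an illustration of the idea, not the Terraform fix itself; `build_lambda_zip` and its parameters are hypothetical names, and it assumes the dependencies were already installed into a separate directory (for example with `pip install -r requirements.txt -t <deps_dir>`).

```python
import zipfile
from pathlib import Path


def build_lambda_zip(source_dir, deps_dir, output_zip):
    """Write installed dependencies and function source into one archive.

    Hypothetical helper: output_zip should live outside both input
    directories, otherwise the archive would try to include itself.
    """
    source_dir, deps_dir = Path(source_dir), Path(deps_dir)
    with zipfile.ZipFile(output_zip, "w", zipfile.ZIP_DEFLATED) as archive:
        for root in (deps_dir, source_dir):
            for path in sorted(root.rglob("*")):
                if path.is_file():
                    # Paths are stored relative to each root, so modules end up
                    # at the top level of the ZIP where Lambda can import them
                    archive.write(path, path.relative_to(root).as_posix())
    return output_zip
```

The point is simply that the archive handed to `aws_lambda_function` has to be the one that includes the dependencies, rather than the source-only archive produced by `archive_file`.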

`lambda_function.py`) with the imports at the top, you can generate the `requirements.txt` file automatically using the `pipreqs` tool. Here are the steps: [1]

- Install `pipreqs` using pip: `pip install pipreqs`
- Navigate to the directory containing your Python file (`lambda_function.py`).
- Run the `pipreqs` command with the path to your Python file: `pipreqs /path/to/lambda_function.py`

  This will generate a `requirements.txt` file in the same directory, containing the list of dependencies and their versions based on the imports in your Python file. [2] Alternatively, you can use the following command to generate the `requirements.txt` file in a specific directory: `pipreqs /path/to/lambda_function.py --force --savepath /path/to/requirements/directory`

  This command will create (or overwrite) the `requirements.txt` file in the specified `/path/to/requirements/directory`.
- Review the generated `requirements.txt` file and make any necessary adjustments or additions. [3]
- In your Terraform configuration, update the `local-exec` provisioner in the `null_resource` block to use the correct path to the `requirements.txt` file:

```hcl
provisioner "local-exec" {
  command = <<EOF
    python3 -m venv package
    source package/bin/activate
    pip install -r /path/to/requirements/directory/requirements.txt -t package/python
    deactivate
    cd package/python
    zip -r9 ${path.module}/lambda_function.zip .
    cd ../..
    rm -rf package
EOF
}
```

- Replace `/path/to/requirements/directory/requirements.txt` with the actual path to your `requirements.txt` file.

`terraform apply`, Terraform will use the `requirements.txt` file to install the necessary dependencies for your Python application.

`pipreqs` tool analyzes the import statements in your Python file to generate the `requirements.txt` file. It may not always be 100% accurate, especially for complex projects with nested dependencies or non-standard import patterns. In such cases, you may need to manually review and update the `requirements.txt` file.

`lambda_handler` function in your code during local development, you can create a separate Python script that imports and invokes the `lambda_handler` function with the appropriate event and context objects. [1]

- Create a new Python file, e.g., `local_test.py`, in the same directory as your `lambda_function.py` file.
- In `local_test.py`, import the `lambda_handler` function from your `lambda_function.py` file: `from lambda_function import lambda_handler`
- Define a mock event object and a mock context object. The event object should mimic the structure of the event that your Lambda function expects to receive when invoked. The context object can be a simple dictionary or a custom class that implements the required methods and attributes. Here’s an example of a mock event object:

```python
mock_event = {
    "key1": "value1",
    "key2": "value2",
    # Add any other relevant data for your event
}
```

```python
class MockContext:
    def __init__(self):
        self.function_name = "mock_function_name"
        self.aws_request_id = "mock_aws_request_id"
        self.log_group_name = "mock_log_group_name"
        self.log_stream_name = "mock_log_stream_name"

    def get_remaining_time_in_millis(self):
        return 300000  # 5 minutes in milliseconds

mock_context = MockContext()
```

- In `local_test.py`, call the `lambda_handler` function with the mock event and context objects:

```python
result = lambda_handler(mock_event, mock_context)
print(result)
```

- Run the `local_test.py` script: `python local_test.py`

`lambda_handler` function with the mock event and context objects, and print the result returned by the function.

`local_test.py` to verify the behavior of your `lambda_handler` function.

`moto` or `placebo` to mock AWS services during local development and testing.

For this prompt, I had unfortunately closed my VSCode instance, and had to restart the conversation.

- Parse the HTML file using BeautifulSoup

```python
import requests
from bs4 import BeautifulSoup

def extract_table_data(url):
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'html.parser')

    # Find the table element
    table = soup.find('table')

    # Extract data from table rows and cells
    data = []
    for row in table.find_all('tr'):
        row_data = []
        for cell in row.find_all(['th', 'td']):
            row_data.append(cell.get_text().strip())
        data.append(row_data)

    return data
```

```python
import os
import boto3

def create_dynamodb_table(table_name):
    dynamodb = boto3.resource('dynamodb')
    table = dynamodb.create_table(
        TableName=table_name,
        KeySchema=[
            {
                'AttributeName': 'row_index',
                'KeyType': 'HASH'
            },
            {
                'AttributeName': 'col_index',
                'KeyType': 'RANGE'
            }
        ],
        AttributeDefinitions=[
            {
                'AttributeName': 'row_index',
                'AttributeType': 'N'
            },
            {
                'AttributeName': 'col_index',
                'AttributeType': 'N'
            }
        ],
        ProvisionedThroughput={
            'ReadCapacityUnits': 5,
            'WriteCapacityUnits': 5
        }
    )
    return table

def store_data_in_dynamodb(table, data):
    for row_index, row in enumerate(data):
        for col_index, value in enumerate(row):
            table.put_item(
                Item={
                    'row_index': row_index,
                    'col_index': col_index,
                    'value': value
                }
            )

if __name__ == '__main__':
    url = os.environ.get('URL')
    table_name = 'table_data'
    data = extract_table_data(url)
    table = create_dynamodb_table(table_name)
    store_data_in_dynamodb(table, data)
```

`row_index` (hash key) and `col_index` (range key). The `extract_table_data` function retrieves the HTML content from the provided URL, parses the table, and returns a list of lists containing the table data. The `store_data_in_dynamodb` function iterates over the table data and stores each cell value in DynamoDB using the `put_item` method. [2]

- Terraform code to create the DynamoDB table [3]

```hcl
resource "aws_dynamodb_table" "table_data" {
  name           = "table_data"
  billing_mode   = "PROVISIONED"
  read_capacity  = 5
  write_capacity = 5
  hash_key       = "row_index"
  range_key      = "col_index"

  attribute {
    name = "row_index"
    type = "N"
  }

  attribute {
    name = "col_index"
    type = "N"
  }
}
```

`table_data` with a composite primary key consisting of `row_index` (hash key) and `col_index` (range key). The `billing_mode` is set to `PROVISIONED`, and the `read_capacity` and `write_capacity` are both set to 5. You can adjust these values based on your requirements.

`os.environ.get('URL')` with the actual URL or a method to obtain the URL dynamically. Additionally, ensure that you have the necessary AWS credentials configured in your environment or use an AWS credentials provider for Boto3.

`employment_based_data` list, you can modify the `scrape_visa_bulletin` function as follows:

```python
from datetime import datetime
import re

def scrape_visa_bulletin(url):
    print("Processing url: ", url)
    response = requests.get(url)
    soup = BeautifulSoup(response.content, 'html.parser')

    employment_based_tables = soup.find_all('table')
    employment_based_data = []

    # Extract the date from the URL
    date_pattern = r'visa-bulletin-for-(\w+)-(\d+)\.html'
    match = re.search(date_pattern, url)
    if match:
        month_name, year = match.groups()
        month_abbr = month_name[:3].lower()
        month_num = datetime.strptime(month_abbr, '%b')
        date_obj = datetime(int(year), month_num, 1)
    else:
        date_obj = None

    for table in employment_based_tables:
        rows = table.find_all('tr')
        table_heading = rows[0].find('td').text.strip()
        if 'Employment-based' in table_heading:
            print("Table heading: ", table_heading)
            headers = [th.text.strip() for th in rows[0].find_all('td')[1:]]
            for row in rows[1:]:
                cols = row.find_all('td')
                type_value = cols[0].text.strip()
                for i, header in enumerate(headers):
                    value = cols[i + 1].text.strip()
                    employment_based_data.append({
                        'group_name': 'Employment-based',
                        'country': header,
                        'type': type_value,
                        'Value': value,
                        'Date': date_obj
                    })

    return employment_based_data
```

- Imported the `re` module for regular expression operations and the `datetime` module from the Python standard library.
- Added a regular expression pattern `date_pattern` to match the date portion of the URL (e.g., “july-2024” in “visa-bulletin-for-july-2024.html”).
- Used `re.search` to find the match in the URL, and extracted the month name and year from the match groups.
- Created a `datetime` object `date_obj` using the extracted month name and year, setting the day to the first of the month.
- Added the `date_obj` to the dictionary in the `employment_based_data` list as the value for the “Date” key.
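One detail worth checking in the date handling above: `datetime.strptime` returns a `datetime` object rather than a month number, so passing its result straight into `datetime(year, month, day)` raises a `TypeError`. Below is a minimal sketch of the same idea as a standalone function. `bulletin_date_from_url` is a hypothetical name, and the sketch assumes an English locale for parsing the month abbreviation.

```python
import re
from datetime import datetime

# Matches e.g. "visa-bulletin-for-july-2024.html"
DATE_PATTERN = re.compile(r"visa-bulletin-for-([a-z]+)-(\d{4})\.html", re.IGNORECASE)


def bulletin_date_from_url(url):
    """Return the first day of the bulletin's month, or None if the URL doesn't match."""
    match = DATE_PATTERN.search(url)
    if not match:
        return None
    month_name, year = match.groups()
    # strptime returns a datetime, so take .month to get the integer month
    month_num = datetime.strptime(month_name[:3], "%b").month
    return datetime(int(year), month_num, 1)
```

For the example URL from the prompt, this yields a `datetime` for 1 July 2024.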

`employment_based_data` list will contain dictionaries with the following keys: “group_name”, “country”, “type”, “Value”, and “Date”. The “Date” value will be a datetime object representing the first day of the month extracted from the URL.

The code blocks are added here only for readability, the prompt contained it as pasted in-line code. Given that I have the following collection built up using:

```python
employment_based_data.append({
    'filing_type': filing_type,
    'country': countries[cell_id - 1],
    'category': category_value,
    'bulletin_date': date_obj,
    'date': cell_date
})
```

```text
data: [
    {
        filing_type: Final Action Date
        category: EB-3
        countries: [
            {
                country: "All Chargeability Areas Except Those Listed"
                history: [
                    { bulletin_date: 2024-07-01, date: 2021-12-01},
                    { bulletin_date: 2024-06-01, date: 2022-11-22},
                    { bulletin_date: 2024-05-01, date: 2022-11-22},
                    { bulletin_date: 2024-04-01, date: 2022-11-22},
                    { bulletin_date: 2024-03-01, date: 2022-09-08},
                ]
            }
        ]
    }
]
```

```python
from collections import defaultdict

# Assuming employment_based_data is your initial collection
transformed_data = []

# Group the data by filing_type and category
grouped_data = defaultdict(lambda: defaultdict(list))
for item in employment_based_data:
    filing_type = item['filing_type']
    category = item['category']
    country = item['country']
    bulletin_date = item['bulletin_date']
    date = item['date']

    grouped_data[filing_type][category].append({
        'country': country,
        'bulletin_date': bulletin_date,
        'date': date
    })

# Transform the grouped data into the desired structure
for filing_type, categories in grouped_data.items():
    for category, country_data in categories.items():
        countries = defaultdict(list)
        for item in country_data:
            country = item['country']
            bulletin_date = item['bulletin_date']
            date = item['date']
            countries[country].append({
                'bulletin_date': bulletin_date,
                'date': date
            })
        transformed_data.append({
            'filing_type': filing_type,
            'category': category,
            'countries': [
                {
                    'country': country_name,
                    'history': history_data
                }
                for country_name, history_data in countries.items()
            ]
        })

print(transformed_data)
```

- We start by importing the `defaultdict` from the `collections` module, which will help us group the data efficiently. [1]
- We create an empty list `transformed_data` to store the transformed data.
- We use a nested `defaultdict` to group the data by `filing_type` and `category`. The outer `defaultdict` groups by `filing_type`, and the inner `defaultdict` groups by `category`. For each item in `employment_based_data`, we append a dictionary containing the `country`, `bulletin_date`, and `date` to the appropriate group.
- Next, we iterate over the grouped data (`grouped_data`). For each `filing_type` and `category` combination, we create another `defaultdict` to group the data by `country`.
- For each `country` group, we create a list of dictionaries containing the `bulletin_date` and `date` for that country.
- Finally, we append a dictionary to `transformed_data` with the `filing_type`, `category`, and a list of dictionaries containing the `country` and its `history` (the list of `bulletin_date` and `date` dictionaries).

`transformed_data` list will contain the data in the desired structure.

`employment_based_data` collection contains unique combinations of `filing_type`, `country`, `category`, and `bulletin_date`. If there are duplicate entries, you may need to modify the code to handle them appropriately (e.g., by keeping only the latest entry or aggregating the data in some way).

`print(transformed_data)` statement is not being executed because of an error or exception occurring earlier in the code.

```python
from collections import defaultdict

# Assuming employment_based_data is your initial collection
transformed_data = []

# Group the data by filing_type and category
grouped_data = defaultdict(lambda: defaultdict(list))

try:
    for item in employment_based_data:
        filing_type = item['filing_type']
        category = item['category']
        country = item['country']
        bulletin_date = item['bulletin_date']
        date = item['date']
        grouped_data[filing_type][category].append({
            'country': country,
            'bulletin_date': bulletin_date,
            'date': date
        })
except Exception as e:
    print(f"Error while grouping data: {e}")

print("Grouped data:")
print(grouped_data)

# Transform the grouped data into the desired structure
try:
    for filing_type, categories in grouped_data.items():
        for category, country_data in categories.items():
            countries = defaultdict(list)
            for item in country_data:
                country = item['country']
                bulletin_date = item['bulletin_date']
                date = item['date']
                countries[country].append({
                    'bulletin_date': bulletin_date,
                    'date': date
                })
            transformed_data.append({
                'filing_type': filing_type,
                'category': category,
                'countries': [
                    {
                        'country': country_name,
                        'history': history_data
                    }
                    for country_name, history_data in countries.items()
                ]
            })
except Exception as e:
    print(f"Error while transforming data: {e}")

print("Transformed data:")
print(transformed_data)
```

`transformed_data` list is empty or `None`, it will print an empty list or `None`.

`employment_based_data` collection, so that I can further assist you in troubleshooting the problem.

Again, codeblock is just for readability. Thanks, that helped me find the issue. I now want to relook how the data will be stored in DynamoDB. I currently have the table defined using Terraform as:

```hcl
resource "aws_dynamodb_table" "visa_bulletin_data" {
  name           = "VisaBulletinData"
  billing_mode   = "PROVISIONED"
  read_capacity  = 5
  write_capacity = 5
  hash_key       = "row_index"
  range_key      = "col_index"

  attribute {
    name = "row_index"
    type = "N"
  }

  attribute {
    name = "col_index"
    type = "N"
  }
}
```

`filing_type`, `category`, and `country`, we need to modify the DynamoDB table schema and use a composite primary key. Here's how you can change the Terraform code for the DynamoDB table:

```hcl
resource "aws_dynamodb_table" "visa_bulletin_data" {
  name           = "VisaBulletinData"
  billing_mode   = "PROVISIONED"
  read_capacity  = 5
  write_capacity = 5
  hash_key       = "pk" # Partition key
  range_key      = "sk" # Sort key

  attribute {
    name = "pk"
    type = "S" # String type
  }

  attribute {
    name = "sk"
    type = "S" # String type
  }

  global_secondary_index {
    name            = "CountryIndex"
    hash_key        = "pk"
    range_key       = "sk"
    projection_type = "ALL"
  }
}
```

- The `hash_key` is set to `"pk"` (partition key), and the `range_key` is set to `"sk"` (sort key). Both keys are of type `"S"` (String).
- A `global_secondary_index` named `"CountryIndex"` is defined, which has the same `hash_key` and `range_key` as the table's primary key. The `projection_type` is set to `"ALL"`, which means that all attributes will be projected into the index. [1]

`filing_type`, `category`, and `country` values. Here's an example of how you can store the data:

```python
import boto3

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('VisaBulletinData')

for item in transformed_data:
    filing_type = item['filing_type']
    category = item['category']
    for country_data in item['countries']:
        country = country_data['country']
        history = country_data['history']
        for entry in history:
            pk = f"{filing_type}#{category}"
            sk = f"{country}#{entry['bulletin_date'].isoformat()}"
            table.put_item(
                Item={
                    'pk': pk,
                    'sk': sk,
                    'filing_type': filing_type,
                    'category': category,
                    'country': country,
                    'bulletin_date': entry['bulletin_date'],
                    'date': entry['date']
                }
            )
```

`pk` (partition key) by combining the `filing_type` and `category` with a delimiter (`#`). The `sk` (sort key) is constructed by combining the `country` and `bulletin_date` (converted to an ISO-formatted string) with a delimiter.

`filing_type`, `category`, and `country`, you can use the following code:

```python
import boto3
from boto3.dynamodb.conditions import Key

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('VisaBulletinData')

filing_type = 'Final Action Date'
category = 'EB-3'
country = 'All Chargeability Areas Except Those Listed'

pk = f"{filing_type}#{category}"
sk_prefix = f"{country}#"

response = table.query(
    KeyConditionExpression=Key('pk').eq(pk) & Key('sk').begins_with(sk_prefix)
)

items = response['Items']
```

- We construct the `pk` (partition key) by combining the `filing_type` and `category` with a delimiter (`#`).
- We construct the prefix for the `sk` (sort key) by combining the `country` with a delimiter (`#`).
- We use the `query` method of the DynamoDB table to retrieve items where the `pk` matches the constructed value, and the `sk` begins with the constructed prefix.
- The retrieved items are stored in the `items` variable.
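To make the key layout easier to reason about, here is a small sketch of a helper that builds one item for the write loop shown earlier. `build_item` is a hypothetical name, not part of Q's answer. It converts both dates to ISO strings, on the assumption (as far as I know) that boto3's table resource does not serialise Python `date` or `datetime` values on its own.

```python
from datetime import date


def build_item(filing_type, category, country, bulletin_date, cutoff_date):
    """Build a DynamoDB item dict whose values are all plain strings."""

    def to_iso(value):
        # datetime is a subclass of date, so this covers both types
        return value.isoformat() if isinstance(value, date) else str(value)

    bulletin_iso = to_iso(bulletin_date)
    return {
        "pk": f"{filing_type}#{category}",   # partition key
        "sk": f"{country}#{bulletin_iso}",   # sort key
        "filing_type": filing_type,
        "category": category,
        "country": country,
        "bulletin_date": bulletin_iso,
        "date": to_iso(cutoff_date),
    }
```

Because ISO-formatted dates sort lexicographically in chronological order, a `begins_with` query on the country prefix should return that country's history ordered by bulletin date.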

`filing_type`, `category`, and `country` values. You can modify the query conditions as needed to retrieve different subsets of the data.

`read_capacity` and `write_capacity` values in the Terraform code with appropriate values based on your application's read and write requirements. Additionally, consider enabling auto-scaling for the DynamoDB table to automatically adjust the provisioned capacity based on your application's traffic.

`Key` function is part of the `boto3.dynamodb.conditions` module, and you need to import it explicitly in your Python script.

`from boto3.dynamodb.conditions import Key`

`Key` function will be available, and you can use it in your code without encountering the `NameError`.

```python
import boto3
from boto3.dynamodb.conditions import Key

dynamodb = boto3.resource('dynamodb')
table = dynamodb.Table('VisaBulletinData')

filing_type = 'Final Action Date'
category = 'EB-3'
country = 'All Chargeability Areas Except Those Listed'

pk = f"{filing_type}#{category}"
sk_prefix = f"{country}#"

response = table.query(
    KeyConditionExpression=Key('pk').eq(pk) & Key('sk').begins_with(sk_prefix)
)

items = response['Items']
```

`NameError: name 'Key' is not defined` error.

`Key` function is part of the `boto3.dynamodb.conditions` module, which provides a way to construct condition expressions for DynamoDB queries and scans. It allows you to create conditions based on the primary key attributes (partition key and sort key) and other attributes of the DynamoDB table.

`Key` function from the `boto3.dynamodb.conditions` module, you can use it to construct the `KeyConditionExpression` for your DynamoDB query, specifying conditions on the `pk` (partition key) and `sk` (sort key) attributes.

2

3

4

5

6

7

8

9

10

11

12

13

14

15
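To make the key layout concrete without touching AWS, here is a minimal offline sketch of what the `pk` equality and `sk` `begins_with` conditions select. The helper names (`build_keys`, `matches`) and the sample rows are hypothetical, and the sketch assumes the sort key is the country followed by `#` and the bulletin date, which may not match the real table exactly.

```python
# Offline illustration only: no DynamoDB calls are made here.
DELIMITER = "#"


def build_keys(filing_type, category, country):
    """Return (pk, sk_prefix) built the same way as in the query code."""
    pk = f"{filing_type}{DELIMITER}{category}"
    sk_prefix = f"{country}{DELIMITER}"
    return pk, sk_prefix


def matches(item, pk, sk_prefix):
    """Mimic Key('pk').eq(pk) & Key('sk').begins_with(sk_prefix) in plain Python."""
    return item["pk"] == pk and item["sk"].startswith(sk_prefix)


# Made-up rows; the sk layout (country#bulletin_date) is an assumption.
sample_items = [
    {"pk": "Final Action Date#EB-3", "sk": "INDIA#2024-06-01", "date": "2012-08-15"},
    {"pk": "Final Action Date#EB-3", "sk": "INDIA#2024-07-01", "date": "2012-09-22"},
    {"pk": "Final Action Date#EB-3", "sk": "MEXICO#2024-07-01", "date": "2021-12-01"},
    {"pk": "Final Action Date#EB-2", "sk": "INDIA#2024-07-01", "date": "2012-06-15"},
]

pk, sk_prefix = build_keys("Final Action Date", "EB-3", "INDIA")
selected = [item for item in sample_items if matches(item, pk, sk_prefix)]
print(selected)
```

Only the two EB-3 rows for `INDIA` are selected. Ending the prefix with the delimiter also keeps a country name from matching another country whose name merely starts with the same characters.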

```python
grouped_data = defaultdict(lambda: defaultdict(list))
try:
    for item in employment_based_data:
        filing_type = item['filing_type']
        category = item['category']
        country = item['country']
        bulletin_date = item['bulletin_date']
        date = item['date']
        grouped_data[filing_type][category].append({
            'country': country,
            'bulletin_date': bulletin_date,
            'date': date
        })
except Exception as e:
    print(f"Unable to group the data, error: {e}")
```

```python
from collections import defaultdict

# Assuming employment_based_data is your initial collection
transformed_data = []

# Group the data by filing_type, category, and country
grouped_data = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))

try:
    for item in employment_based_data:
        filing_type = item['filing_type']
        category = item['category']
        country = item['country']
        bulletin_date = item['bulletin_date']
        date = item['date']
        grouped_data[filing_type][category][country].append({
            'bulletin_date': bulletin_date,
            'date': date
        })
except Exception as e:
    print(f"Unable to group the data, error: {e}")

# Transform the grouped data into the desired structure
for filing_type, categories in grouped_data.items():
    for category, countries in categories.items():
        countries_list = []
        for country, history_data in countries.items():
            countries_list.append({
                'country': country,
                'history': history_data
            })
        transformed_data.append({
            'filing_type': filing_type,
            'category': category,
            'countries': countries_list
        })

print(transformed_data)
```

- We're using a nested `defaultdict` with three levels: `filing_type`, `category`, and `country`. This allows us to group the data by all three keys.
- In the grouping step, we're appending the `bulletin_date` and `date` dictionaries to the innermost `defaultdict` for each unique combination of `filing_type`, `category`, and `country`.
- In the transformation step, we iterate over the `countries` dictionary for each `filing_type` and `category` combination. For each `country`, we create a dictionary with the `country` key and the `history` data (the list of `bulletin_date` and `date` dictionaries).
- We append these dictionaries to a `countries_list`, which is then added to the `transformed_data` list.
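As a quick check of the structure described above, the sketch below wraps the same grouping and transformation in a function and runs it on a few made-up rows. The function name `transform` and the sample values are hypothetical and only meant to show the output shape, not the project's actual code or data.

```python
from collections import defaultdict


def transform(rows):
    """Group rows by filing_type, category, and country, then flatten to a list."""
    grouped = defaultdict(lambda: defaultdict(lambda: defaultdict(list)))
    for row in rows:
        grouped[row["filing_type"]][row["category"]][row["country"]].append(
            {"bulletin_date": row["bulletin_date"], "date": row["date"]}
        )

    result = []
    for filing_type, categories in grouped.items():
        for category, countries in categories.items():
            result.append({
                "filing_type": filing_type,
                "category": category,
                "countries": [
                    {"country": country, "history": history}
                    for country, history in countries.items()
                ],
            })
    return result


# Made-up rows purely to illustrate the shape of the result.
sample_rows = [
    {"filing_type": "Final Action Date", "category": "EB-3", "country": "INDIA",
     "bulletin_date": "2024-06-01", "date": "2012-08-15"},
    {"filing_type": "Final Action Date", "category": "EB-3", "country": "INDIA",
     "bulletin_date": "2024-07-01", "date": "2012-09-22"},
    {"filing_type": "Final Action Date", "category": "EB-3", "country": "MEXICO",
     "bulletin_date": "2024-07-01", "date": "2021-12-01"},
]

transformed = transform(sample_rows)
print(transformed)
```

With these rows the result holds a single entry for the `Final Action Date` / `EB-3` combination, whose `countries` list has one element per country, each carrying its own `history` list.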