Easy Serverless RAG with Knowledge Base for Amazon Bedrock

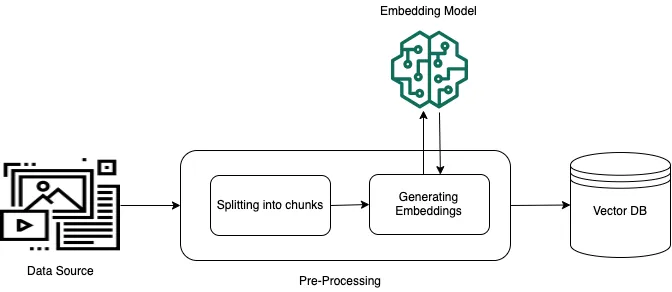

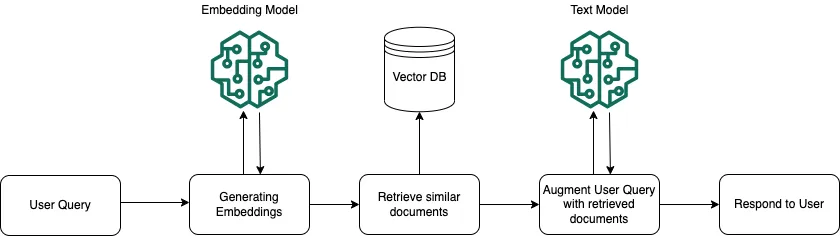

Discover how to enhance foundation models (FMs) with contextual data using Retrieval Augmented Generation (RAG) to provide more relevant, accurate, and customized responses.

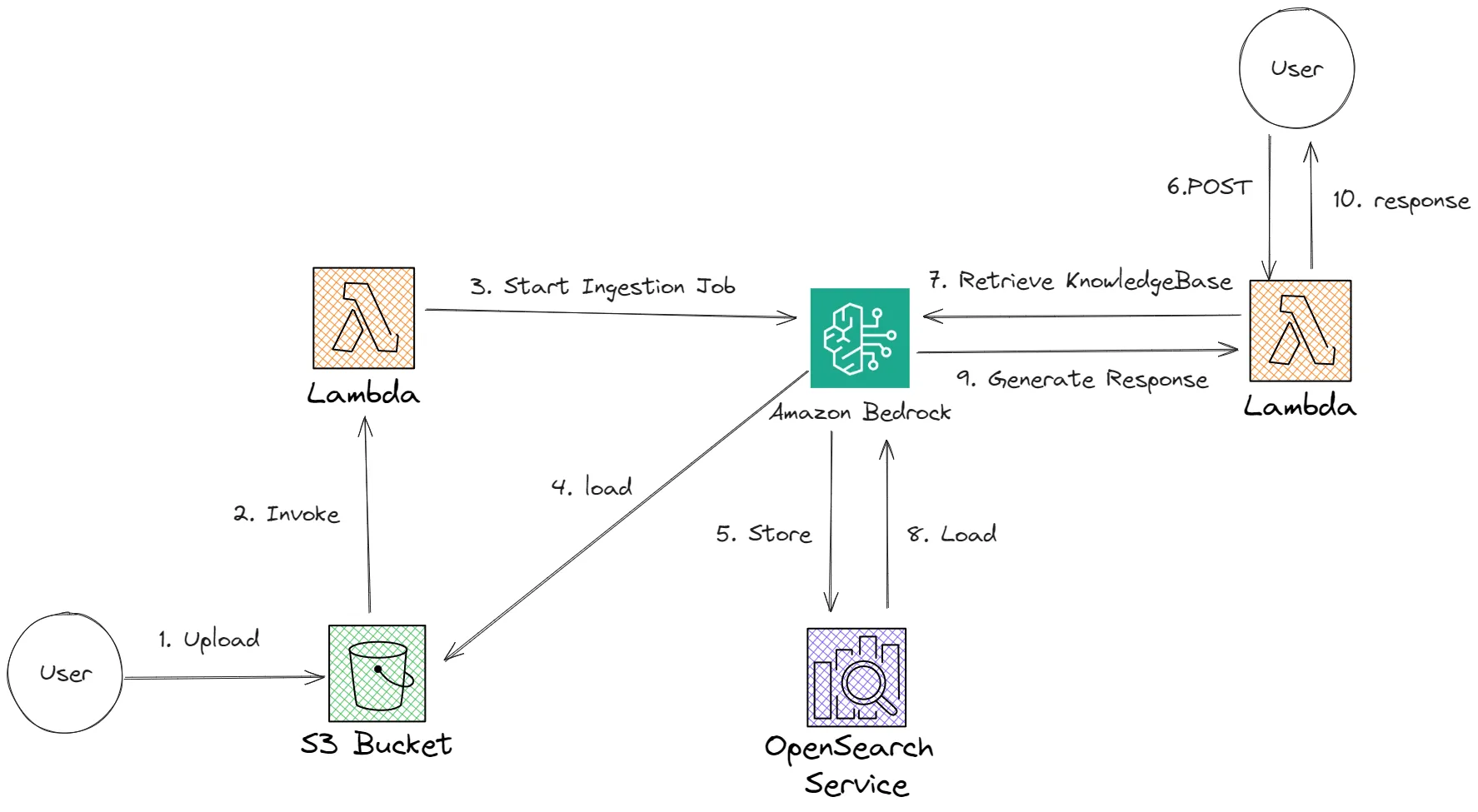

- Knowledge Bases for Amazon Bedrock provides a fully managed RAG experience and the easiest way to get started with RAG in Amazon Bedrock.

- AWS CDK is used to define our cloud infrastructure in AWS.

- AWS Lambda runs the serverless functions that return responses generated by Amazon Bedrock to the user.

- Improve the accuracy and factual consistency of LLM responses. LLMs are trained on massive amounts of text data, but this data may not always be accurate or up to date. By retrieving from external knowledge bases, RAG helps LLMs ground their responses in current and reliable information.

- Make LLMs more relevant to specific domains or tasks. By focusing on retrieving information from specific knowledge bases, RAG can tailor LLMs to specific domains or tasks. This can be useful for applications such as question answering, summarization, and translation.

- Provide transparency and explainability. RAG can provide users with the sources of the information that was used to generate a response. This can help users to understand how the LLM arrived at its answer and to assess its credibility.

- We will start by creating our project folder, running the following command in our terminal.

- Then initialize our CDK project.

- First, prepare our resume data in a text or PDF file and save it as `data/resume.txt`, with example content like this.

- We will create a couple of Lambda functions in the `src` folder.

- Create our first Lambda function in `src/queryKnowledgeBase`.

- This function will handle user queries and return the RAG response from the Bedrock model.

- Type the following function code inside `src/queryKnowledgeBase/index.js`.

- Then create our second Lambda function in `src/IngestJob`.

- This function starts an ingestion job in the Bedrock knowledge base to pre-process our data, and it is triggered whenever we upload data to the S3 bucket.

- Type the following function code inside `src/IngestJob/index.js`.

- Then, to start building our service with CDK, open the `lib/resume-ai-stack.js` file and type the following code.

- Then we can start deploying our services with CDK.

- After deployment is complete, CDK will return several output values, including `KnowledgeBaseId`, `QueryFunctionUrl`, and `ResumeBucketName`.

- Based on the output above, we will sync all files in our `data` folder to the S3 bucket named `knowledgebasecdkstack-resumebucket4dbbd0e5-qcstjyegctqe`.
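As one way to do this from Node.js, here is a minimal sketch that uploads every file in the `data` folder with `PutObjectCommand` from `@aws-sdk/client-s3`. It is a one-way upload rather than a true sync (nothing is deleted from the bucket), and the function names `listUploads` and `uploadDataFolder` are hypothetical.

```javascript
// Sketch: upload the files in a local folder to an S3 bucket.
// One-way upload only; objects already in the bucket are never deleted.
const fs = require("node:fs");
const path = require("node:path");

// Pure-ish helper: map each regular file in dataDir to an S3 key and local path.
function listUploads(dataDir) {
  return fs
    .readdirSync(dataDir, { withFileTypes: true })
    .filter((entry) => entry.isFile())
    .map((entry) => ({ key: entry.name, filePath: path.join(dataDir, entry.name) }))
    .sort((a, b) => a.key.localeCompare(b.key));
}

async function uploadDataFolder(dataDir, bucketName) {
  // Loaded here so listUploads can be used without the SDK installed.
  const { S3Client, PutObjectCommand } = require("@aws-sdk/client-s3");
  const s3 = new S3Client({});

  for (const { key, filePath } of listUploads(dataDir)) {
    await s3.send(
      new PutObjectCommand({
        Bucket: bucketName,
        Key: key,
        Body: fs.readFileSync(filePath),
      })
    );
    console.log(`Uploaded ${filePath} to s3://${bucketName}/${key}`);
  }
}
```

Calling `uploadDataFolder("data", "knowledgebasecdkstack-resumebucket4dbbd0e5-qcstjyegctqe")` would upload `data/resume.txt` under the key `resume.txt`, using the bucket name from the `ResumeBucketName` output.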

- To start sending questions and receiving responses, we can build our front end with the following script.
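A minimal sketch of the client-side call, using the standard `fetch` API against the `QueryFunctionUrl` output. The request and response shapes (`{ question }` in, `{ answer }` out) and the function names are assumptions made for illustration; they need to match whatever the query function actually accepts and returns.

```javascript
// Sketch of the front-end request logic.
// Assumed contract (hypothetical): POST { question } -> { answer }.

// Pure helper: build the fetch options for a question.
function buildRequest(question) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ question }),
  };
}

// Send the question to the Lambda function URL and return the answer text.
// fetchImpl defaults to the global fetch (browsers, Node.js 18+).
async function askKnowledgeBase(functionUrl, question, fetchImpl = fetch) {
  const response = await fetchImpl(functionUrl, buildRequest(question));
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  const { answer } = await response.json();
  return answer;
}
```

In a chat UI, the submit handler of the chat box would call `askKnowledgeBase(queryFunctionUrl, userInput)` and append the returned text to the conversation, where `queryFunctionUrl` holds the deployed function URL.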

- Then we can start testing our RAG responses, for example by asking questions like `Tell me about yourself` or `What certifications do you own?` in the chat box.

- It will return a response like this.