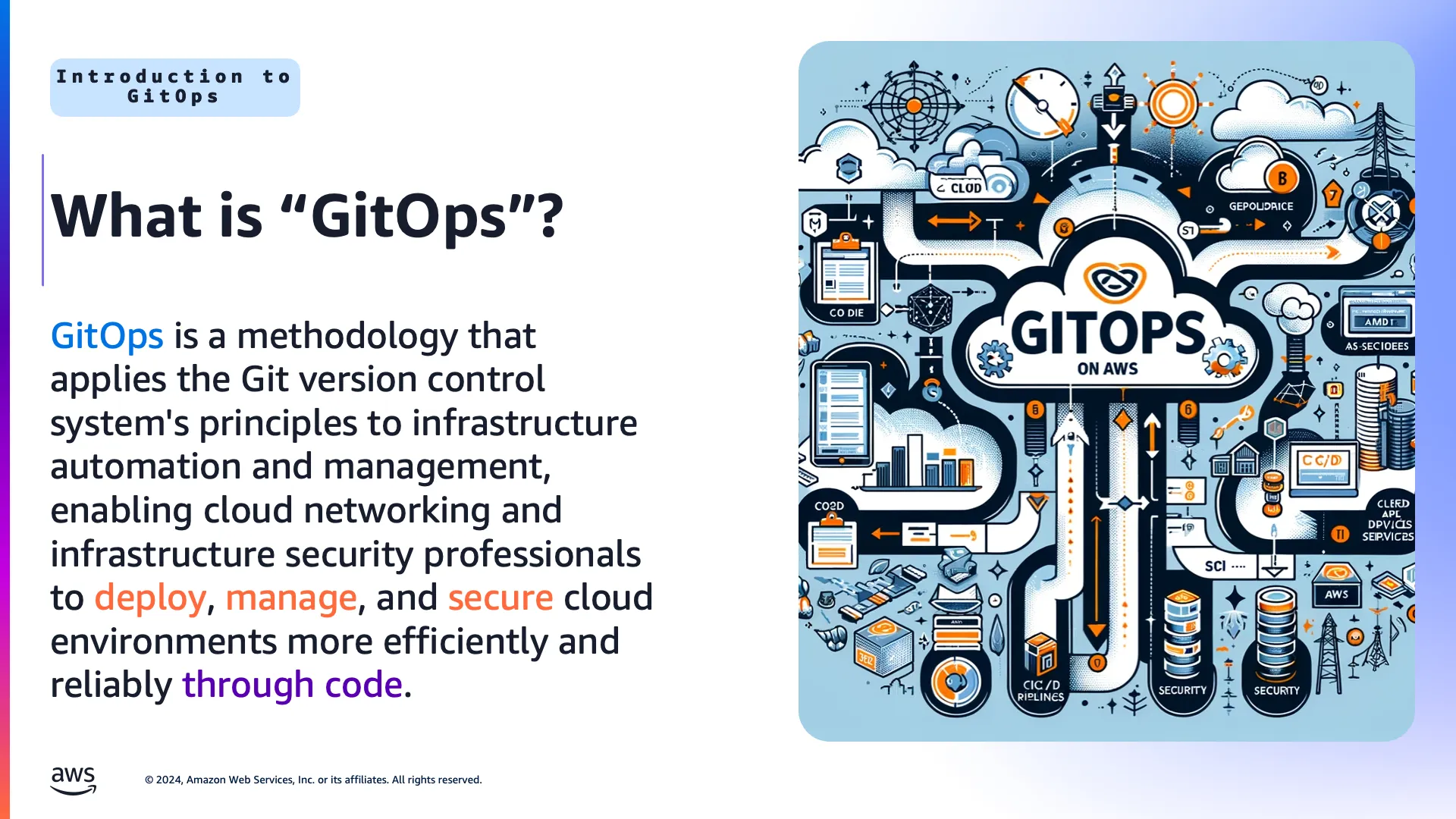

Embracing GitOps for Network Security and Compliance

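Explore GitOps on AWS, which applies DevOps practices to the control of cloud infrastructure to strengthen security and compliance. With Git as the source of truth, every change is tracked precisely and can be reviewed and tested before it reaches production. Key benefits include: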

- Transparency and Collaboration: Changes to infrastructure are as visible and reviewable as changes to application code, enhancing collaboration between teams.

- Security and Compliance: By managing infrastructure as code in Git, GitOps enables better audit trails, versioning, and compliance tracking.

- Stability and Reliability: GitOps promotes immutable infrastructure and declarative configurations, which make deployments more predictable and less error-prone.


The pipeline is defined in JSON as follows:

```json
{
  "pipeline": {
    "name": "CFNDeploymentPipeline",
    "roleArn": "arn:aws:iam::123456789012:role/CodePipelineServiceRoleCFN",
    "artifactStore": {
      "type": "S3",
      "location": "my-codepipeline-artifacts-123456789012"
    },
    "stages": [
      {
        "name": "Source",
        "actions": [
          {
            "name": "Source",
            "actionTypeId": {
              "category": "Source",
              "owner": "AWS",
              "provider": "CodeCommit",
              "version": "1"
            },
            "outputArtifacts": [
              {
                "name": "SourceArtifact"
              }
            ],
            "configuration": {
              "RepositoryName": "CFNIACrepo",
              "BranchName": "main"
            },
            "region": "us-west-1"
          }
        ]
      },
      {
        "name": "BuildAndDeployToDev",
        "actions": [
          {
            "name": "BuildAndDeployToDev",
            "actionTypeId": {
              "category": "Build",
              "owner": "AWS",
              "provider": "CodeBuild",
              "version": "1"
            },
            "inputArtifacts": [
              {
                "name": "SourceArtifact"
              }
            ],
            "outputArtifacts": [],
            "configuration": {
              "ProjectName": "BuildAndDeployToDev"
            },
            "region": "us-west-1"
          }
        ]
      },
      {
        "name": "DevApproval",
        "actions": [
          {
            "name": "ManualApproval",
            "actionTypeId": {
              "category": "Approval",
              "owner": "AWS",
              "provider": "Manual",
              "version": "1"
            },
            "configuration": {
              "CustomData": "Please review the deployment in Dev before approving deployment to Prod."
            }
          }
        ]
      },
      {
        "name": "DestroyOnDev",
        "actions": [
          {
            "name": "DestroyOnDev",
            "actionTypeId": {
              "category": "Build",
              "owner": "AWS",
              "provider": "CodeBuild",
              "version": "1"
            },
            "inputArtifacts": [
              {
                "name": "SourceArtifact"
              }
            ],
            "outputArtifacts": [],
            "configuration": {
              "ProjectName": "DestroyOnDev"
            },
            "region": "us-west-1"
          }
        ]
      },
      {
        "name": "DeployToProd",
        "actions": [
          {
            "name": "DeployToProd",
            "actionTypeId": {
              "category": "Build",
              "owner": "AWS",
              "provider": "CodeBuild",
              "version": "1"
            },
            "inputArtifacts": [
              {
                "name": "SourceArtifact"
              }
            ],
            "outputArtifacts": [],
            "configuration": {
              "ProjectName": "DeployToProd"
            },
            "region": "us-west-1"
          }
        ]
      }
    ]
  }
}
```

The pipeline definition above does the following:

- It defines the artifact store, an S3 bucket where the pipeline keeps the artifacts (such as the source code) that pass between stages while the pipeline runs.

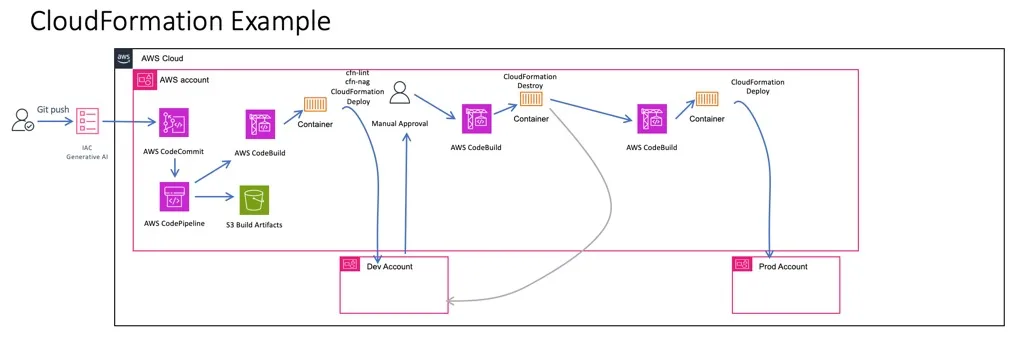

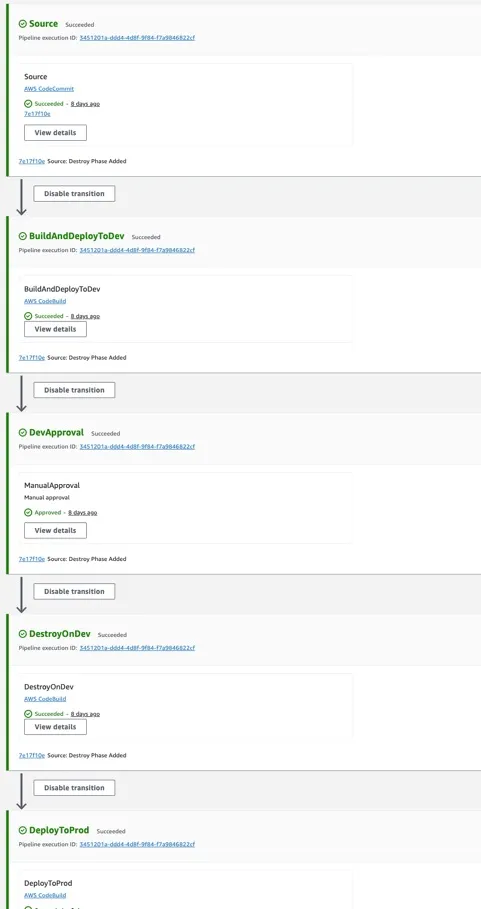

- It defines the stages of the pipeline: Source, BuildAndDeployToDev, DevApproval, DestroyOnDev, and DeployToProd. Figure 4 shows the pipeline as it appears in the AWS Console.

- It defines the artifacts that each stage consumes (inputArtifacts) and produces (outputArtifacts). This matters when troubleshooting: you can inspect the artifact a stage is trying to use by downloading it from the S3 artifact bucket and unzipping it.

- For each stage that runs a CodeBuild action, it names the CodeBuild project to run (ProjectName).
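To make the artifact flow above concrete, here is a minimal Python sketch (standard library only) that reads a pipeline definition in the JSON shape shown earlier and lists, for each action, its provider, input and output artifacts, and CodeBuild project. It also includes a helper that lists the files inside an artifact once you have downloaded it from the artifact bucket (for example with aws s3 cp), since the artifact is a zip file. The function names summarize_pipeline and list_artifact, and the trimmed embedded copy of the definition, are hypothetical illustrations rather than part of the original setup.

```python
import json
import zipfile

# Trimmed copy of the pipeline definition above: only the fields this sketch reads.
PIPELINE_JSON = """
{
  "pipeline": {
    "name": "CFNDeploymentPipeline",
    "artifactStore": {"type": "S3", "location": "my-codepipeline-artifacts-123456789012"},
    "stages": [
      {"name": "Source", "actions": [{"name": "Source",
        "actionTypeId": {"category": "Source", "provider": "CodeCommit"},
        "outputArtifacts": [{"name": "SourceArtifact"}]}]},
      {"name": "BuildAndDeployToDev", "actions": [{"name": "BuildAndDeployToDev",
        "actionTypeId": {"category": "Build", "provider": "CodeBuild"},
        "inputArtifacts": [{"name": "SourceArtifact"}], "outputArtifacts": [],
        "configuration": {"ProjectName": "BuildAndDeployToDev"}}]},
      {"name": "DevApproval", "actions": [{"name": "ManualApproval",
        "actionTypeId": {"category": "Approval", "provider": "Manual"}}]},
      {"name": "DestroyOnDev", "actions": [{"name": "DestroyOnDev",
        "actionTypeId": {"category": "Build", "provider": "CodeBuild"},
        "inputArtifacts": [{"name": "SourceArtifact"}], "outputArtifacts": [],
        "configuration": {"ProjectName": "DestroyOnDev"}}]},
      {"name": "DeployToProd", "actions": [{"name": "DeployToProd",
        "actionTypeId": {"category": "Build", "provider": "CodeBuild"},
        "inputArtifacts": [{"name": "SourceArtifact"}], "outputArtifacts": [],
        "configuration": {"ProjectName": "DeployToProd"}}]}
    ]
  }
}
"""


def summarize_pipeline(definition):
    """Return one row per action: (stage, action, provider, inputs, outputs, project)."""
    rows = []
    for stage in definition["pipeline"]["stages"]:
        for action in stage["actions"]:
            rows.append((
                stage["name"],
                action["name"],
                action["actionTypeId"]["provider"],
                # Missing artifact lists (e.g. on the approval action) are treated as empty.
                [a["name"] for a in action.get("inputArtifacts", [])],
                [a["name"] for a in action.get("outputArtifacts", [])],
                # Only CodeBuild actions carry a ProjectName; others yield None.
                action.get("configuration", {}).get("ProjectName"),
            ))
    return rows


def list_artifact(zip_source):
    """List the files inside a downloaded artifact (a path or a binary file object)."""
    with zipfile.ZipFile(zip_source) as zf:
        return sorted(zf.namelist())


if __name__ == "__main__":
    definition = json.loads(PIPELINE_JSON)
    print("Artifact bucket:", definition["pipeline"]["artifactStore"]["location"])
    for stage, action, provider, inputs, outputs, project in summarize_pipeline(definition):
        print(f"{stage}/{action} [{provider}] in={inputs} out={outputs} project={project}")
```

Run against the full definition (for example, the output of aws codepipeline get-pipeline --name CFNDeploymentPipeline), it shows that every CodeBuild stage consumes SourceArtifact and produces nothing, so the source bundle is the artifact to download when a later stage fails.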


The following buildspec deploys to the Dev environment. It installs the tooling, assumes a cross-account role in the Dev account, and deploys the template with CloudFormation:

```yaml
version: 0.2

phases:
  install:
    runtime-versions:
      ruby: 2.6
    commands:
      - echo Installing dependencies
      - pip3 install awscli --upgrade --quiet
      - pip3 install cfn-lint --quiet
      - apt-get install jq git -y -q
      - gem install cfn-nag
      - pip install --upgrade awscli botocore
      # Assume the cross-account role in the Dev account once jq is available
      - ROLE_ARN_DEV="arn:aws:iam::091777568700:role/CodeBuildCrossAccountRoleDev"
      - CREDS=$(aws sts assume-role --role-arn $ROLE_ARN_DEV --role-session-name dev-deploy)
      - export AWS_ACCESS_KEY_ID=$(echo "$CREDS" | jq -r '.Credentials.AccessKeyId')
      - export AWS_SECRET_ACCESS_KEY=$(echo "$CREDS" | jq -r '.Credentials.SecretAccessKey')
      - export AWS_SESSION_TOKEN=$(echo "$CREDS" | jq -r '.Credentials.SessionToken')
  pre_build:
    commands:
      - echo Running tests on CloudFormation templates
      # Uncomment to lint and security-scan the template before deploying
      # - cfn-lint aws-network-firewall-tls-inspection.yml
      # - cfn_nag_scan --input-path aws-network-firewall-tls-inspection.yml
  build:
    commands:
      - echo Deploying to Dev environment
      - aws cloudformation deploy --template-file aws-network-firewall-tls-inspection.yml --stack-name my-firewall-stack --capabilities CAPABILITY_NAMED_IAM --region us-west-1
```
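The three jq lookups in the install phase pull temporary credentials out of the JSON that aws sts assume-role returns, a Credentials object containing AccessKeyId, SecretAccessKey, SessionToken, and Expiration. The minimal Python sketch below performs the same extraction, which may help when testing the credential handoff locally. The function names credentials_to_env and to_export_lines and the sample values are hypothetical; in the pipeline itself, the buildspec's jq commands do this work.

```python
import json
import shlex


def credentials_to_env(assume_role_output):
    """Map `aws sts assume-role` JSON output to the environment variables the AWS CLI reads."""
    creds = json.loads(assume_role_output)["Credentials"]
    return {
        "AWS_ACCESS_KEY_ID": creds["AccessKeyId"],
        "AWS_SECRET_ACCESS_KEY": creds["SecretAccessKey"],
        "AWS_SESSION_TOKEN": creds["SessionToken"],
    }


def to_export_lines(env):
    """Render shell export lines; shlex.quote adds quotes only when a value needs them."""
    return [f"export {name}={shlex.quote(value)}" for name, value in env.items()]


if __name__ == "__main__":
    # Hypothetical sample shaped like the assume-role response; these are not real credentials.
    sample = json.dumps({
        "Credentials": {
            "AccessKeyId": "ASIAEXAMPLEKEYID",
            "SecretAccessKey": "example/secret+key",
            "SessionToken": "example-session-token==",
            "Expiration": "2024-01-01T00:00:00Z",
        }
    })
    for line in to_export_lines(credentials_to_env(sample)):
        print(line)
```

Because these credentials are temporary (see Expiration), the buildspec stores no long-lived keys; only the role ARN appears in it.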

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.