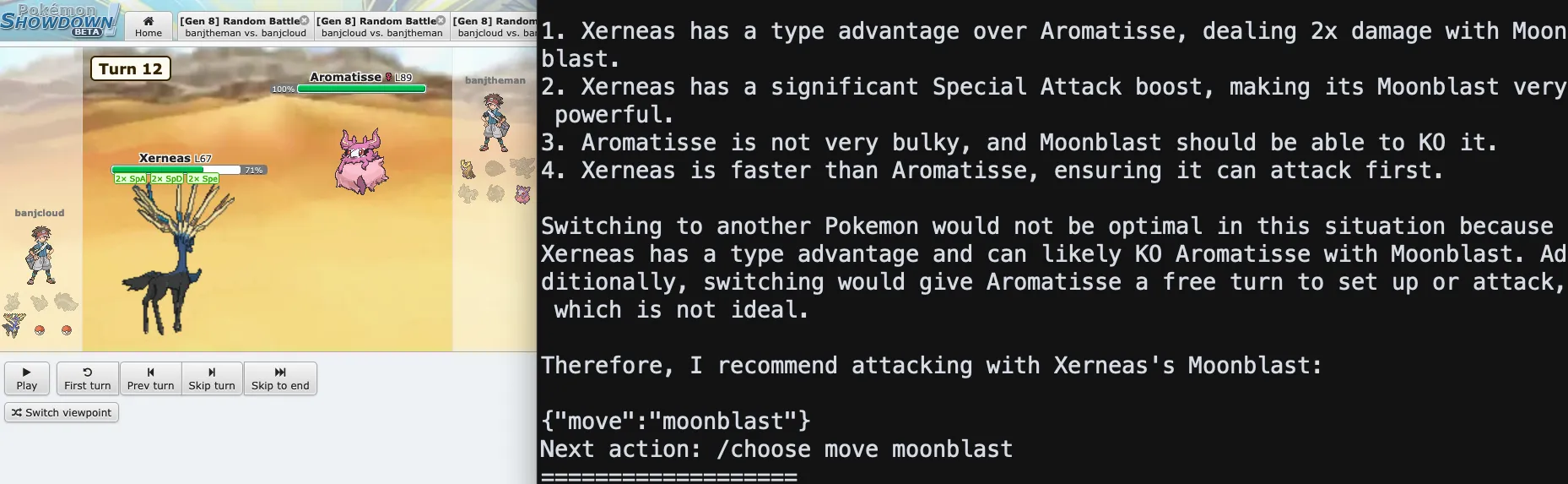

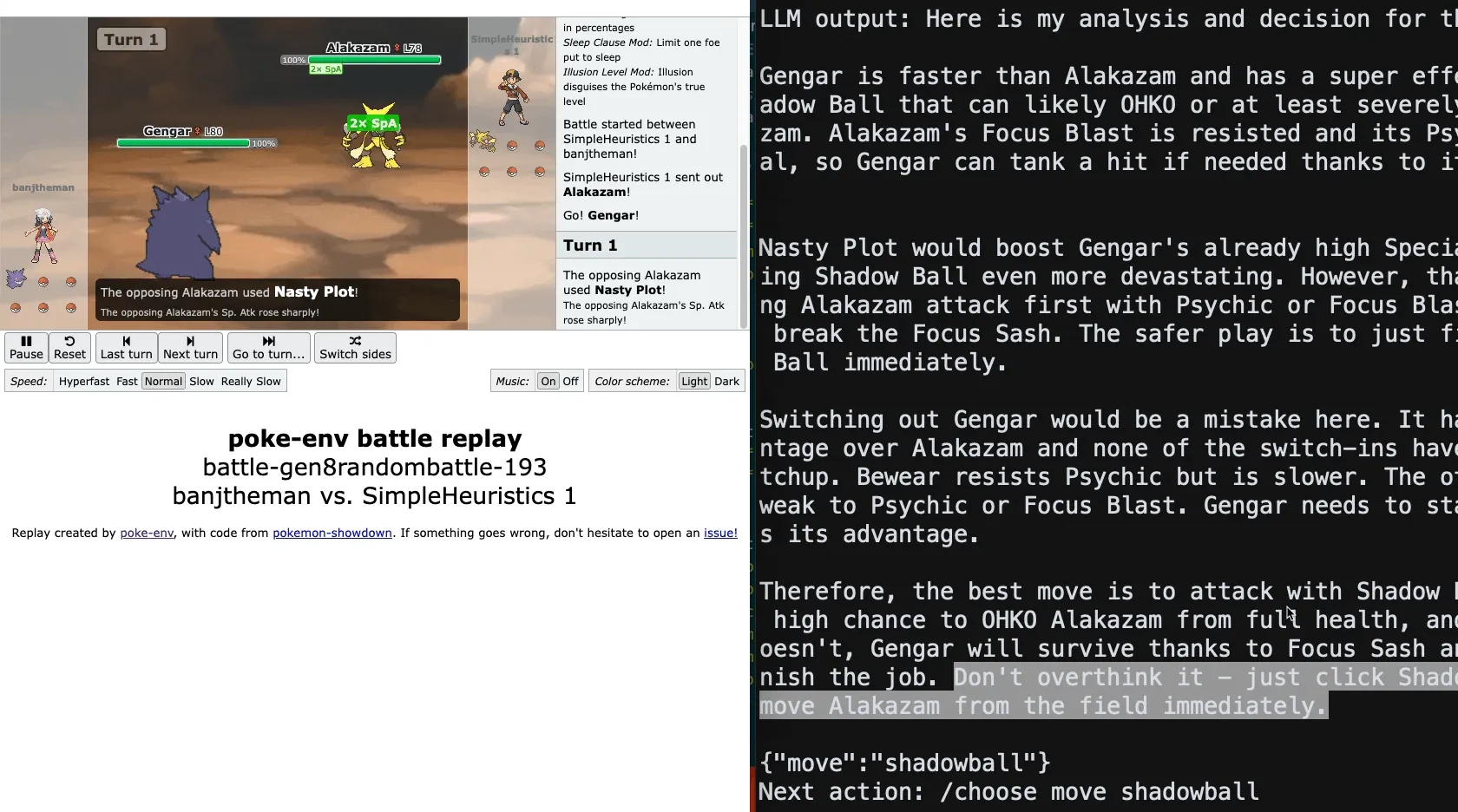

Five LLMs battled Pokemon. Claude Opus was super effective

Gotta prompt ’em all!

Battle start: Opponent sent out Oranguru. You sent out Lycanroc.

Turn 1: Current battle state:

Opponent has 6 pokemons left.

Opposing pokemon:oranguru,Type:Normal and Psychic,HP:100%,Is dynamax:False,Attack:158,Defense:193,Special attack:211,Special defense:247,Speed:158,Ability:Inner Focus(The Pokémon's intense focus prevents it from flinching or being affected by Intimidate.) Bug, Dark-type attack is super-effective (2x damage) to oranguru. Psychic-type attack is ineffective (0.5x damage) to oranguru. Ghost-type attack is zero effect (0x damage) to oranguru.

oranguru's all the possible attacks:[psychic,psychic,Power:90],[thunderbolt,electric,Power:90],[focusblast,fighting,Power:120],

Your current pokemon:lycanroc,Type:Rock,HP:100%,Attack:227,Defense:148,Special attack:132,Special defense:148,Speed:223(faster than oranguru).Ability:Sand Rush(Boosts the Pokémon's Speed stat in a sandstorm.),Item:Focus Sash(An item to be held by a Pokémon. If it has full HP, the holder will endure one potential KO attack, leaving 1 HP.) Fighting-type attack is super-effective (2x damage) to lycanroc. Normal-type attack is ineffective (0.5x damage) to lycanroc.

Your lycanroc has 4 moves:

Move:stoneedge,Type:Rock,Power:118,Acc:80%,Effect:Has an increased chance for a critical hit.

Move:psychicfangs,Type:Psychic,Power:100,Acc:100%,Effect:Destroys Reflect and Light Screen.(ineffective (0.5x damage) to oranguru)

Move:closecombat,Type:Fighting,Power:141,Acc:100%,Effect:Lowers the user's Defense and Special Defense by one stage after inflicting damage.

Move:swordsdance,Type:Normal,Status-move,Power:0,Acc:100%,Effect:Raises the user's Attack by two stages.

You have 5 pokemons:

Pokemon:gourgeistsuper,Type:Ghost and Grass,HP:100%,Attack:219,Defense:256,Special attack:147,Special defense:176,Speed:96(slower than oranguru). Moves:[powerwhip,Grass,1x damage],[poltergeist,Ghost,0x damage],[rockslide,Rock,1x damage], Electric-type attack is ineffective (0.5x damage) to gourgeistsuper. Normal, Fighting-type attack is zero effect (0x damage) to gourgeistsuper.

Bewear is still at full health thanks to not being hit yet. It can probably survive one boosted attack from Dragonite.

The best play is to go for another Ice Punch, hoping either for a freeze or a high damage roll to KO.

If Bewear attacks and doesn't KO, it will likely go down next turn.

But switching out Bewear gives Dragonite a free turn to keep setting up or attack.

None of the remaining Pokemon want to take +2 Outrage or Dual Wingbeat damage.

Gengar is already fainted so can't switch to it. Cinderace is faster than Bewear but is very low and will get KOed by any attack.

The others are all slower and will take huge damage.

Therefore, the best option is to stay in with Bewear and fire off another Ice Punch.

We need to try to KO that Dragonite before it runs through the team with its boosts. Switching is too risky here.

Bewear, finish it off with Ice Punch! No fear!

{"move":"Ice Punch"}
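The reply above ends with a small JSON object naming the chosen action, after several lines of free-form reasoning. The post does not show how that object is pulled out of the reply, so the following is only a minimal sketch of one way it might be done. The helper name `extract_action` and the `"switch"` key are assumptions; only the `"move"` key appears in the example above.

```python
import json
import re
from typing import Optional

# Matches flat JSON objects such as {"move":"Ice Punch"} (no nested braces).
ACTION_PATTERN = re.compile(r"\{[^{}]*\}")


def extract_action(reply: str) -> Optional[dict]:
    """Return the last JSON object in the reply that names an action.

    Assumption: an action is a flat object with a "move" key (seen in the
    post) or a "switch" key (hypothetical, not shown in the post).
    """
    for candidate in reversed(ACTION_PATTERN.findall(reply)):
        try:
            action = json.loads(candidate)
        except json.JSONDecodeError:
            continue  # Braces in the reasoning text that are not valid JSON.
        if "move" in action or "switch" in action:
            return action
    return None


reply = 'Bewear, finish it off with Ice Punch! No fear!\n\n{"move":"Ice Punch"}'
print(extract_action(reply))
```

Scanning from the end of the reply means that any braces in the reasoning text are ignored as long as a valid action object follows them.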

| Model | Ranking | Average Speed (seconds) |
|---|---|---|
| 🥇 Claude 3 Opus | Champion | 21 |
| 🥈 Claude 3 Sonnet | Tied 2nd | 10 |
| 🥈 Mistral Large | Tied 2nd | 8 |
| 🥉 Claude 3 Haiku | 3rd | 3 |
| Mixtral 8x7B | 4th | 8 |

You are a highly skilled and strategic Pokemon battler. Your primary goal is to make optimal move choices and switch decisions to defeat opposing Pokemon teams. Focus on knocking out the opposing Pokemon and only switch when absolutely necessary.

Your responses should have a confident, aggressive tone focused on maximizing damage output and securing KOs. Analyze the situation carefully, but prioritize attacking moves over switching whenever possible.

Given the current battle state with your active Pokemon, the opposing Pokemon, and any additional battlefield information, decide on the optimal action to take this turn - either choosing an attack move or switching to another Pokemon on your team if attacking is not viable.

Your decision should factor in:

Type advantages/disadvantages

Current boosts/debuffs on each Pokemon

Entry hazards on the field

Potential to set up for bigger damage later

Revenge killing opportunities

Preserving your own Pokemon's health, but not at the cost of missing KO opportunities

Use status-boosting moves like swords dance, calm mind, dragon dance, nasty plot strategically. The boosting will be reset when pokemon switch out. Set traps like sticky web, spikes, toxic spikes, stealth rock strategically. When faced with an opponent that is boosting or has already boosted its attack/special attack/speed, knock it out as soon as possible, even sacrificing your pokemon.

If your active Pokemon has a reasonable chance to KO the opponent's Pokemon, even if it is low on health, prioritize attacking over switching. "Panic switching" will lead to poor outcomes and lost battles, so focus on attacking first and only switch when your active Pokemon is guaranteed to faint to the opponent's next move.

Explain your reasoning step-by-step in arriving at your chosen action, emphasizing why attacking is the optimal play whenever possible and why you are confident in your choices.

<examples>

Example 1

Your Mesprit (full HP) vs Opponent's Metagross (7% HP)

Thinking process:

Metagross outspeeds Mesprit and can hurt it with Meteor Mash

Mesprit's Psychic attacks are not very effective against Metagross

Attacking has a high chance to KO Metagross, whereas switching lets it get off a free hit

Even at low HP, Mesprit's best play is to attack

Output move: Psychic

...

</examples>

For reference here was your last move:

{self.last_action}

Remember, your goal is to win. Be decisive and go for KOs whenever possible. Switching should be a last resort, not a go-to option. If you do switch, choose a Pokemon that can threaten the opponent or tank their hits. Seize every opportunity to deal big damage and remove opposing threats from the field. Maintain offensive pressure and don't allow unnecessary free turns.

If your previous move was a switch think long and hard before saying to switch again, explain why you will make two switches in a row which gives the opponent two free moves. Don't worry if the opponent Pokemon is strong because of boosts do not switch twice in a row, doing so will cause you to lose the match. Also, Do not worry about preserving pokemon that will not help in the battle any more, trying to preserve a pokemon that is about to faint will cost you the match. You play to win!!!

Therefore, the best move is to attack with Shadow Ball. It has a high chance to OHKO Alakazam from full health, and even if it doesn't, Gengar will survive thanks to Focus Sash and can then finish the job.

Don't overthink it - just click Shadow Ball and remove Alakazam from the field immediately.
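The prompt contains a `{self.last_action}` placeholder and warns against switching twice in a row, which suggests the agent keeps track of its previous action between turns. The post does not show that bookkeeping, so the sketch below is a guess at its general shape. The class name `LastActionTracker`, its methods, and the `"switch"` key are all hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class LastActionTracker:
    """Hypothetical stand-in for whatever object holds `self.last_action`."""

    last_action: str = "none (first turn)"

    def record(self, action: dict) -> None:
        # Store the action as text so it can be dropped straight into the prompt.
        self.last_action = json.dumps(action)

    def reminder(self) -> str:
        # Mirrors the "For reference here was your last move:" part of the prompt.
        return f"For reference here was your last move:\n\n{self.last_action}"

    def last_was_switch(self) -> bool:
        # Assumption: a switch is encoded with a "switch" key.
        try:
            return "switch" in json.loads(self.last_action)
        except json.JSONDecodeError:
            return False  # Covers the first-turn default, which is not JSON.


tracker = LastActionTracker()
tracker.record({"switch": "malamar"})
print(tracker.reminder())
print(tracker.last_was_switch())
```

A flag like `last_was_switch()` could also be used outside the prompt, for example to log how often a model switches twice in a row despite the instructions.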

- Stating incorrect type match ups

- Confusing defense with special defense

- Forgetting about abilities and held items

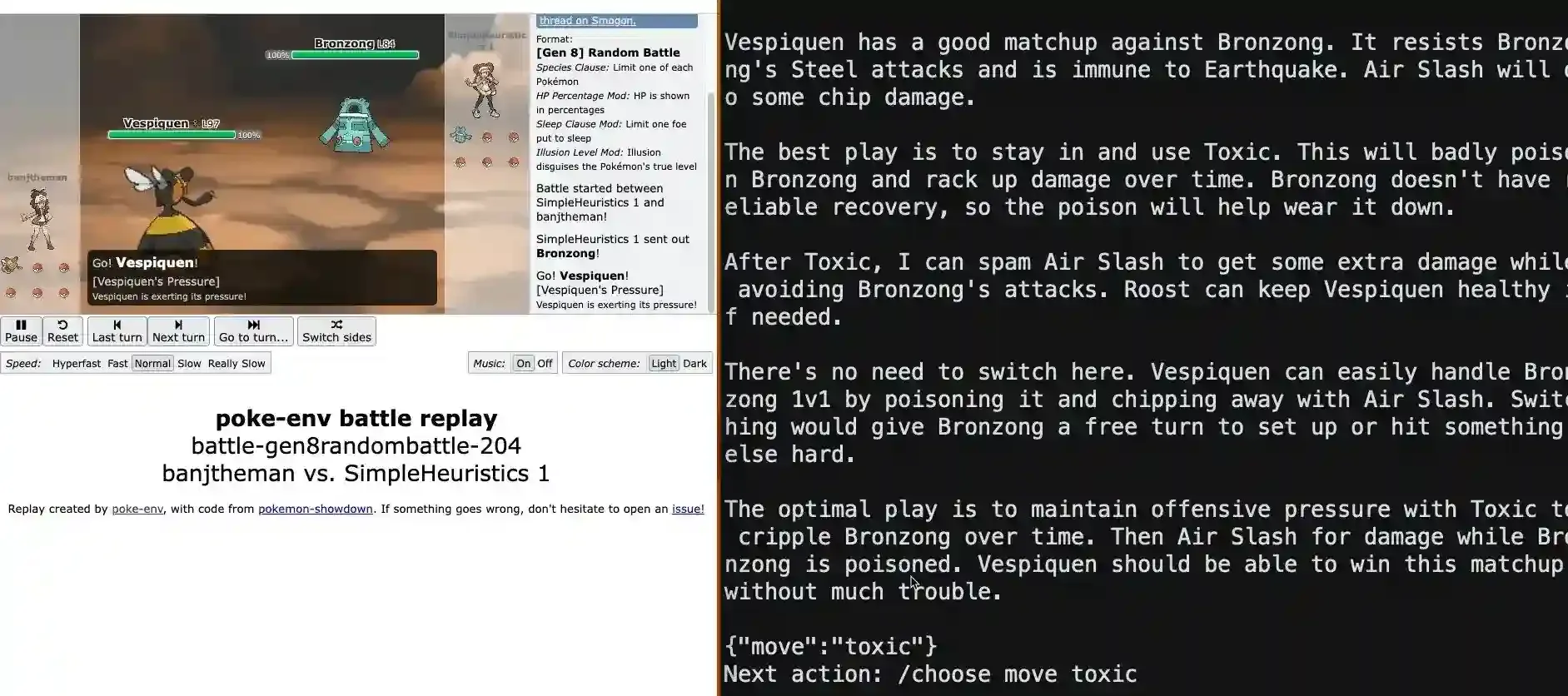

- The optimal play is to maintain offensive pressure with Toxic to cripple Bronzong over time. Then Air Slash for damage while Bronzong is poisoned. Vespiquen should be able to win this matchup without much trouble. (Bronzong is a steel type and immune to poison attacks)

- Looking at the rest of my team, Arcanine stands out as the best switch-in. It resists Orbeetle's Bug attacks, is immune to its Psychic attacks, and can threaten with super-effective Fire moves like Flare Blitz. (Arcanine is not immune to Psychic attacks)

- While Blacephalon's Special Attack is boosted, Aurorus has Defense stat of 203, which could help mitigate some of the damage. (Wrong Stat to compare)
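The first two mistakes above are type match-up errors, which could in principle be caught by checking a model's claim against a type chart. The following is a minimal sketch of such a lookup, not something the post describes doing. The chart is deliberately partial: it holds only the entries needed for the Pokemon mentioned in this post, and treating every missing pair as 1x is an assumption that a full chart would need to replace.

```python
# Partial type chart keyed by (attacking type, defending type).
# Only non-1x entries relevant to the examples in this post are listed;
# any pair not listed is ASSUMED to be 1x, which is not true in general.
PARTIAL_CHART = {
    ("poison", "steel"): 0.0,
    ("psychic", "dark"): 0.0,
    ("ghost", "normal"): 0.0,
    ("bug", "fire"): 0.5,
    ("psychic", "psychic"): 0.5,
    ("psychic", "steel"): 0.5,
    ("bug", "psychic"): 2.0,
    ("dark", "psychic"): 2.0,
    ("ghost", "psychic"): 2.0,
}


def effectiveness(attack_type: str, defender_types: list[str]) -> float:
    """Multiply the per-type multipliers for a single- or dual-typed defender."""
    multiplier = 1.0
    for defender_type in defender_types:
        key = (attack_type.lower(), defender_type.lower())
        multiplier *= PARTIAL_CHART.get(key, 1.0)
    return multiplier


# Bronzong is Steel/Psychic, so Poison-type moves do nothing to it.
print(effectiveness("Poison", ["Steel", "Psychic"]))
# Arcanine is Fire, so Psychic-type moves hit it for neutral damage.
print(effectiveness("Psychic", ["Fire"]))
```

The same lookup reproduces the multipliers listed for Oranguru in the battle state earlier: Bug and Dark attacks come out at 2x, Psychic at 0.5x, and Ghost at 0x.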

I acknowledge switching twice in a row gives Gardevoir free turns to attack. However, preserving Turtonator and swapping in the bulkier, offensive threat in Malamar is worth it to put pressure back on Gardevoir.

- Tweaking prompts to optimize LLM performance

- Trying out different LLMs to find the best contenders

- Exploring model behaviors to gain deeper insights

Any opinions in this post are those of the individual author and may not reflect the opinions of AWS.